Install and Run Microsoft Phi-3 Small Language Model Locally

Quick Summary

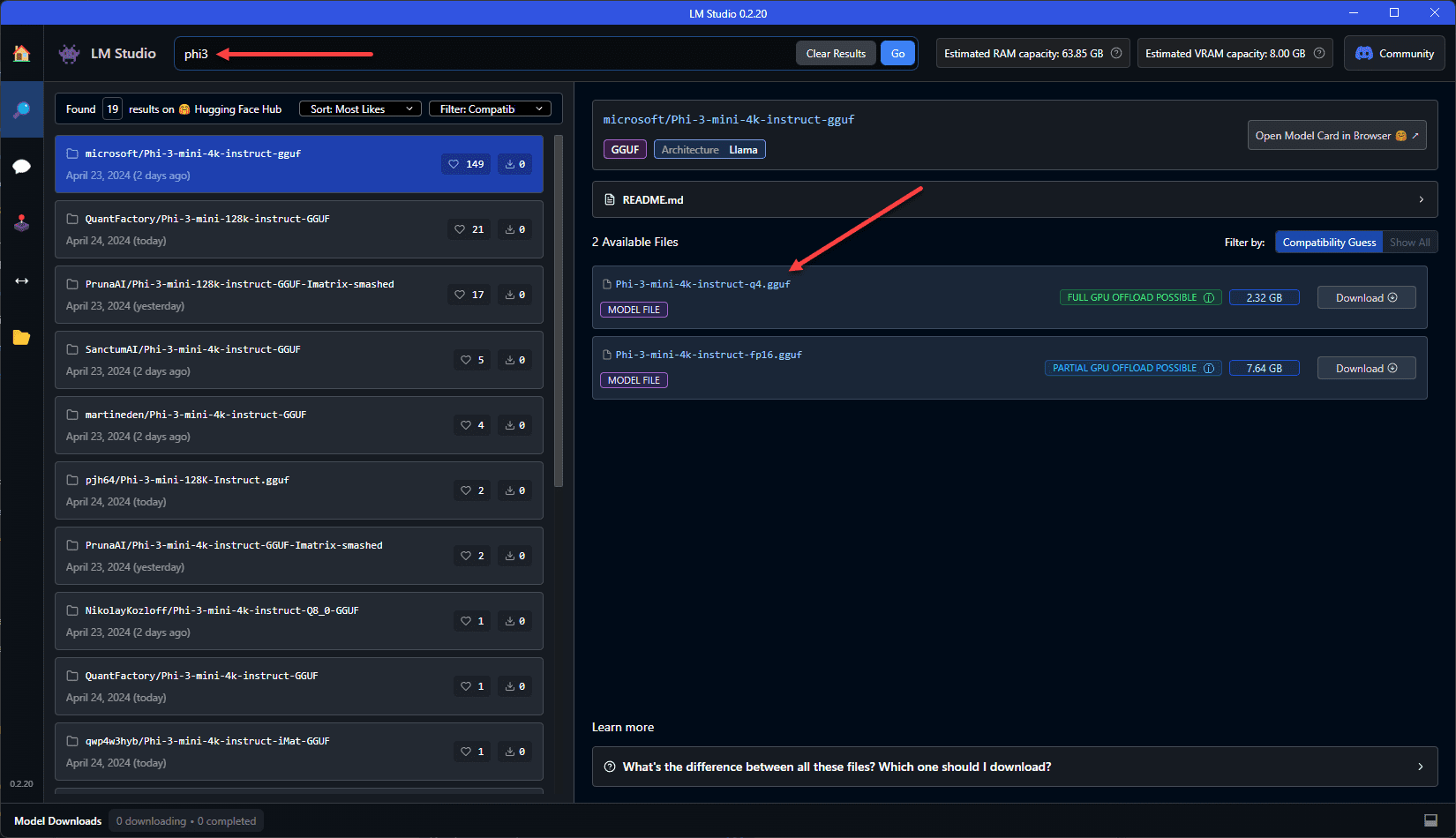

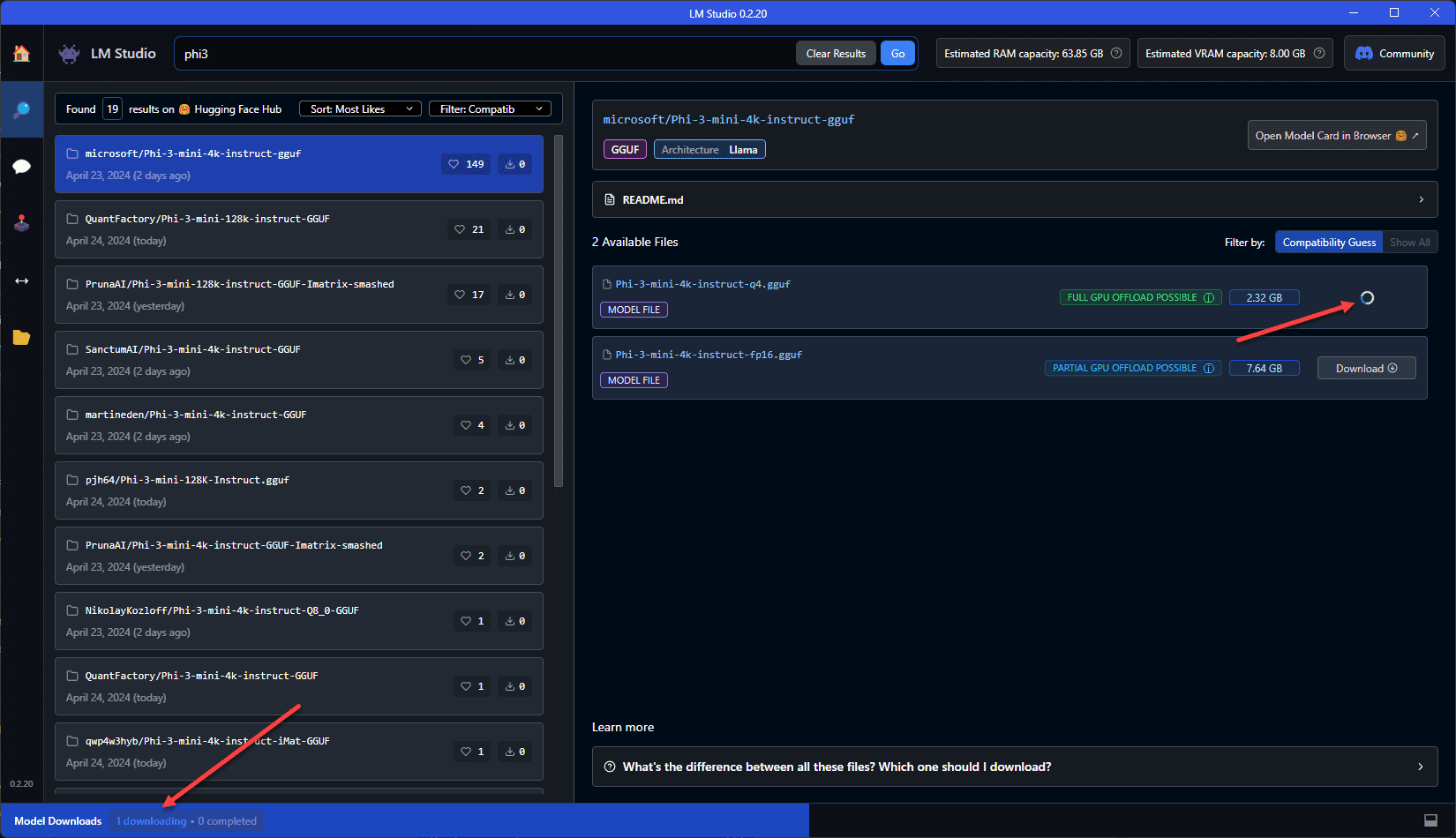

- In the search menu at the top, just type in phi3 and note the results in the right-hand pane.

- The next step we need to accomplish is to search for and download the Microsoft Phi-3 small language model.

- The Microsoft Phi-3 Small Language Model provides a compact yet highly effective language model.

It is remarkable how capable generative AI has become and how quickly language models that can run locally have appeared. Running an AI model locally means you don’t have to rely on ChatGPT, Google, or another cloud service, and your prompts never have to hit a cloud API. Microsoft has just released its Phi-3 family of small language models, and it is impressive how much capability fits into such a small model. Let’s look at how to install and run the Microsoft Phi-3 small language model for AI chat locally.

What is Microsoft Phi-3 small language model?

Microsoft Phi-3 is a family of compact yet highly effective language models. The smallest variant, Phi-3-mini, has 3.8 billion parameters, and Microsoft reports that it outperforms larger language models on a range of benchmarks despite its small size.

The development of the Phi-3 small language model was inspired by the simplicity and high-quality content of children’s books. Microsoft curated high-quality datasets to train these smaller models efficiently, which boosts performance and has the potential to make AI available even in resource-limited environments.

Specs of Microsoft’s Phi-3 AI model

Note the following specs of the Phi-3 AI model:

- Models:
  - Phi-3-mini: 3.8 billion parameters
  - Phi-3-small: 7 billion parameters
  - Phi-3-medium: 14 billion parameters

- Performance:
  - Outperforms models of similar and even larger sizes across various benchmarks.
  - Enhanced capabilities in language, coding, and mathematical tasks.

- Availability:
  - Accessible through Microsoft Azure AI Model Catalog.
  - Also available on Hugging Face and as an NVIDIA NIM microservice.
  - Deployable on local machines via Ollama framework.

- Training Data:
  - Utilizes high-quality, selectively curated datasets.
  - Training approach inspired by the simplification seen in children’s books.

- Applications:
  - Suitable for both cloud-based and edge-based (local) computations.
  - Designed to be highly efficient in environments with limited computational resources.

- Safety and Security:
  - Undergoes extensive testing and red-teaming to ensure model reliability and safety.
  - Additional training and feedback loops incorporated to enhance model response quality.
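To put the parameter counts above in perspective, here is a rough back-of-the-envelope sketch of how much space the weights alone would take at different precisions. It is only an estimate under a simple assumption (size = parameters × bits per weight); the function name `weight_size_gb` is made up for illustration, and real model files tend to be somewhat larger because quantized formats store extra metadata and may keep some tensors at higher precision.

```python
# Parameter counts as listed in the specs above.
PHI3_PARAMS = {
    "Phi-3-mini": 3.8e9,
    "Phi-3-small": 7e9,
    "Phi-3-medium": 14e9,
}


def weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in decimal gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


for name, params in PHI3_PARAMS.items():
    fp16 = weight_size_gb(params, 16)  # 16-bit floating point weights
    q4 = weight_size_gb(params, 4)     # idealized 4-bit quantization
    print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

By this estimate, a 4-bit Phi-3-mini needs only about 2 GB for its weights, which is why it is a realistic candidate for a laptop.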

Installing Microsoft Phi-3 Small Language Model

The steps to run the Microsoft Phi-3 small language model locally include:

- Download LM Studio

- Search for and download the Phi-3-mini 4k model

- Select the Language Model to use in LM Studio

- Start chatting

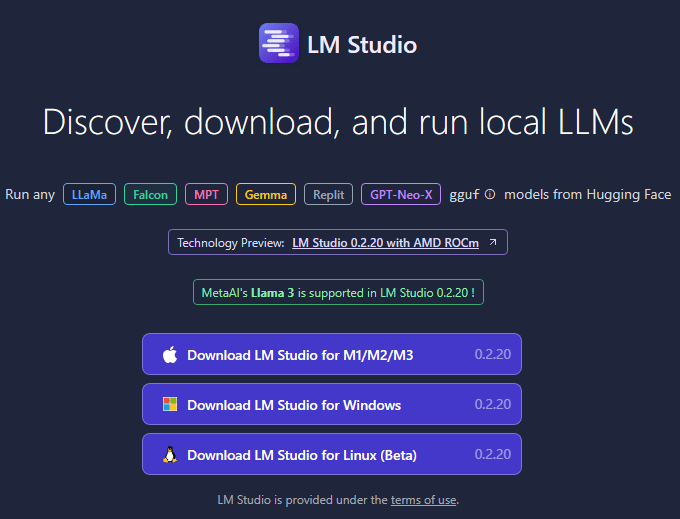

1. Download LM Studio

The first step is to download LM Studio, an application that lets you run LLMs locally. It includes features like the following:

- Run LLMs on your laptop, entirely offline

- Use models through the in-app Chat UI or an OpenAI-compatible local server

- Download any compatible model files from Hugging Face repositories

- Discover new LLMs in the app

You can download LM Studio from here: LMStudio.ai. Select the appropriate installer for your platform.

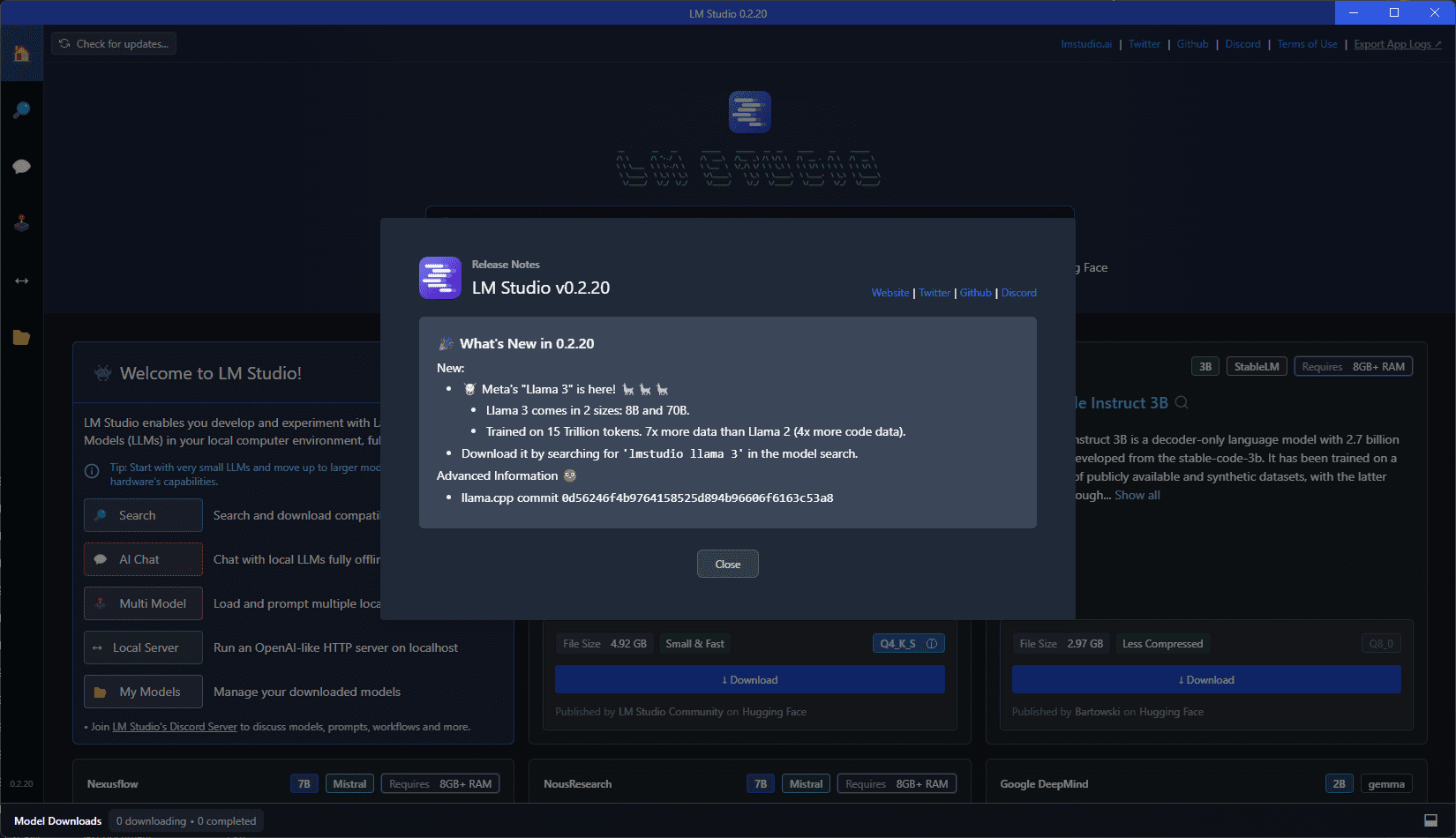

After installing, LM Studio will launch.

2. Search for and download the Phi-3-mini 4k model

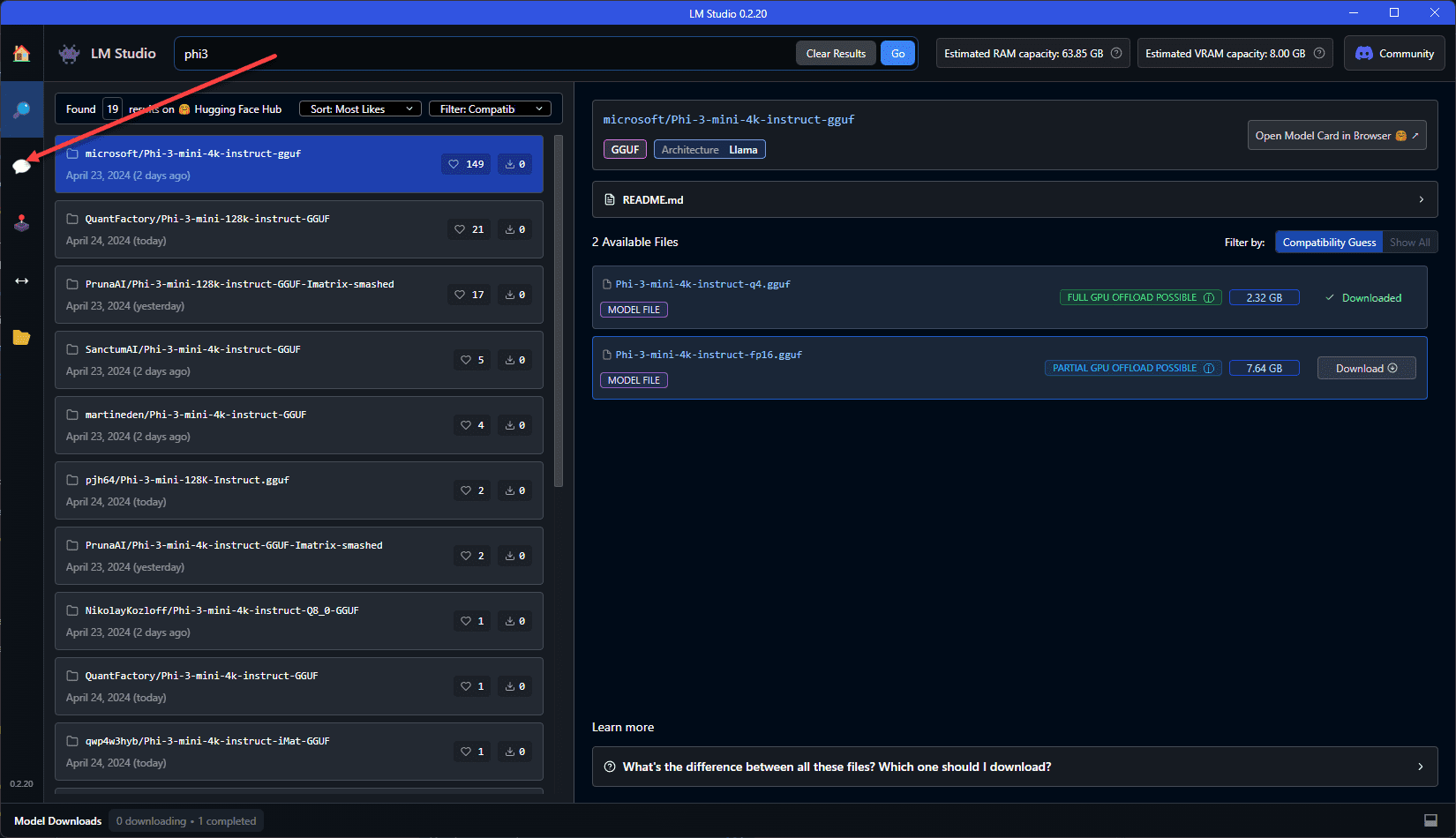

The next step is to search for and download the Microsoft Phi-3 small language model. In the search menu at the top, type phi3 and note the results in the right-hand pane. Here we will download the Phi-3-mini-4k-instruct-q4.gguf model file. As you can see in the screenshot, it is 2.32 GB in size.

Click Download next to the language model.

After clicking the download button, you will see the progress of the language model download.

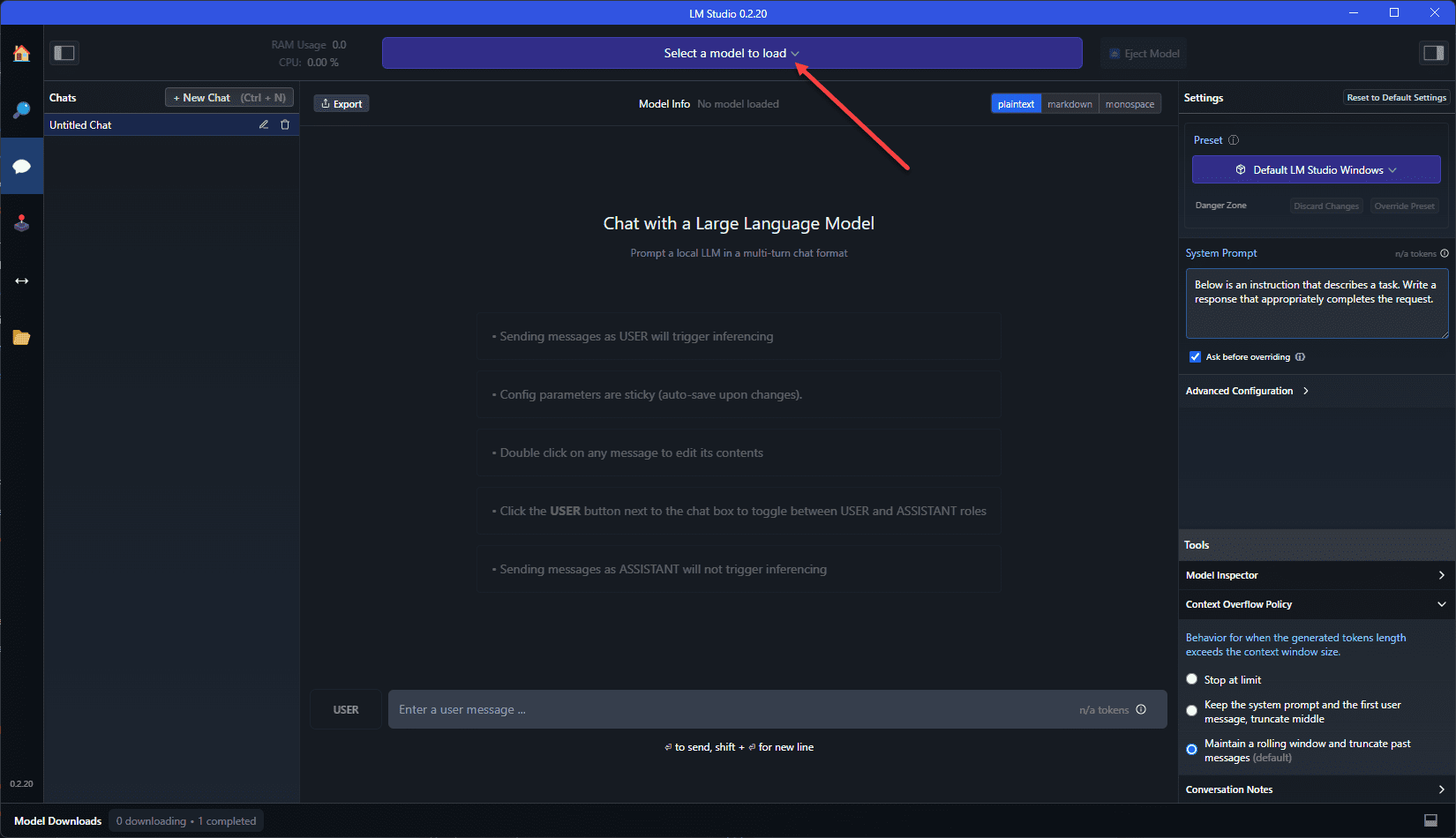
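If you ever want to sanity-check a downloaded model file outside of LM Studio, a minimal sketch like the one below can confirm that it looks like a GGUF file. It relies on the GGUF convention that a file begins with the four ASCII bytes `GGUF` followed by a little-endian 32-bit version number. The function name `read_gguf_version` is hypothetical, and where LM Studio stores models on your machine is not covered here, so the path is left as a parameter.

```python
import struct

GGUF_MAGIC = b"GGUF"  # the first four bytes of a GGUF file


def read_gguf_version(path):
    """Return the GGUF format version, or None if the file is not GGUF."""
    with open(path, "rb") as f:
        header = f.read(8)
    # A truncated file or a wrong magic value means this is not a GGUF model.
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

Calling `read_gguf_version` with the path to the downloaded Phi-3-mini-4k-instruct-q4.gguf file should return an integer version. A result of `None` suggests the download is incomplete or is not a GGUF file.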

3. Select the Language Model to use in LM Studio

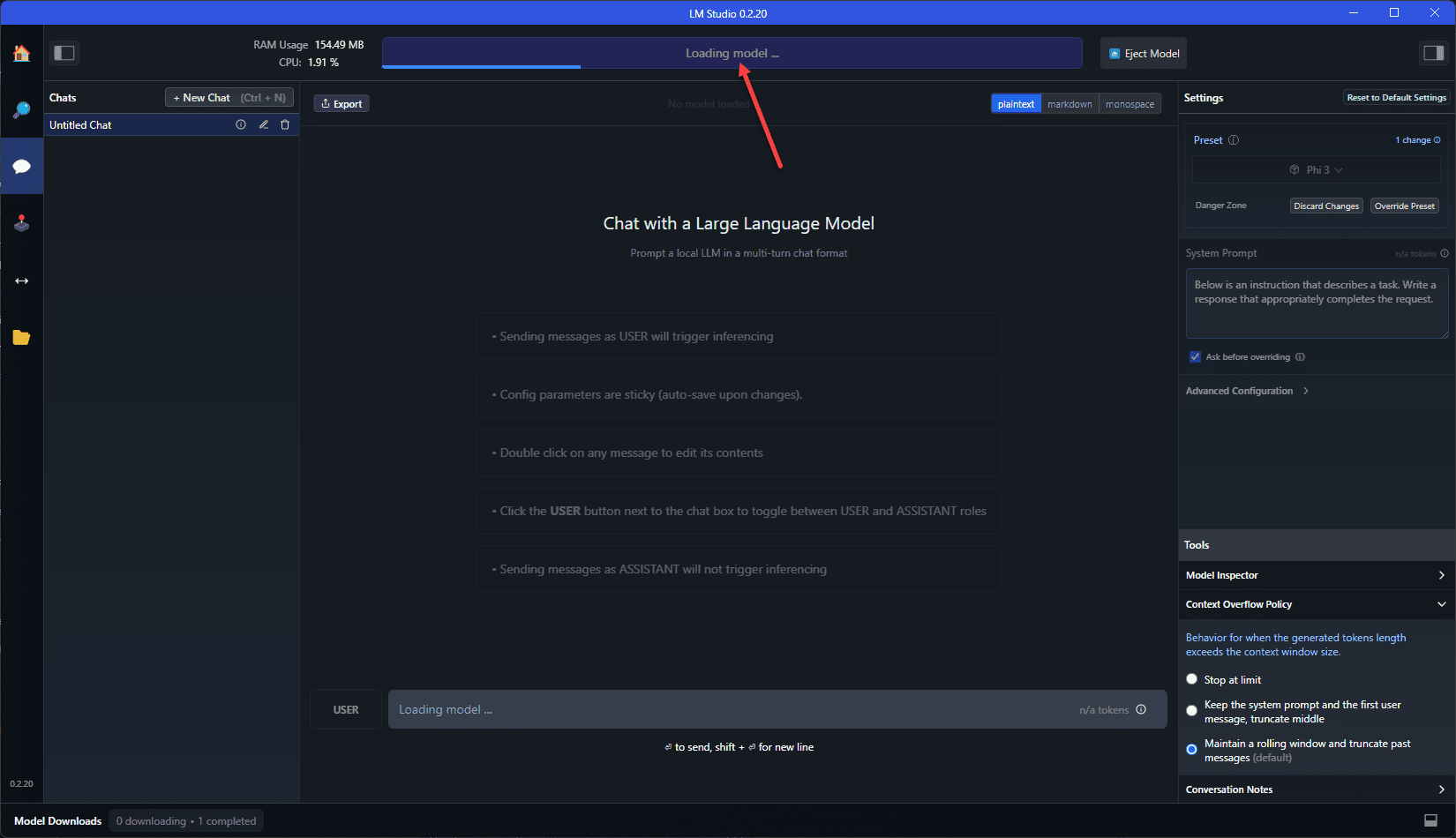

Now that we have the model downloaded, we can select it in LM Studio to begin chatting. Click the Chat icon on the left-hand side of the interface.

Open the Select a model to load drop-down and choose the Phi-3 model we downloaded.

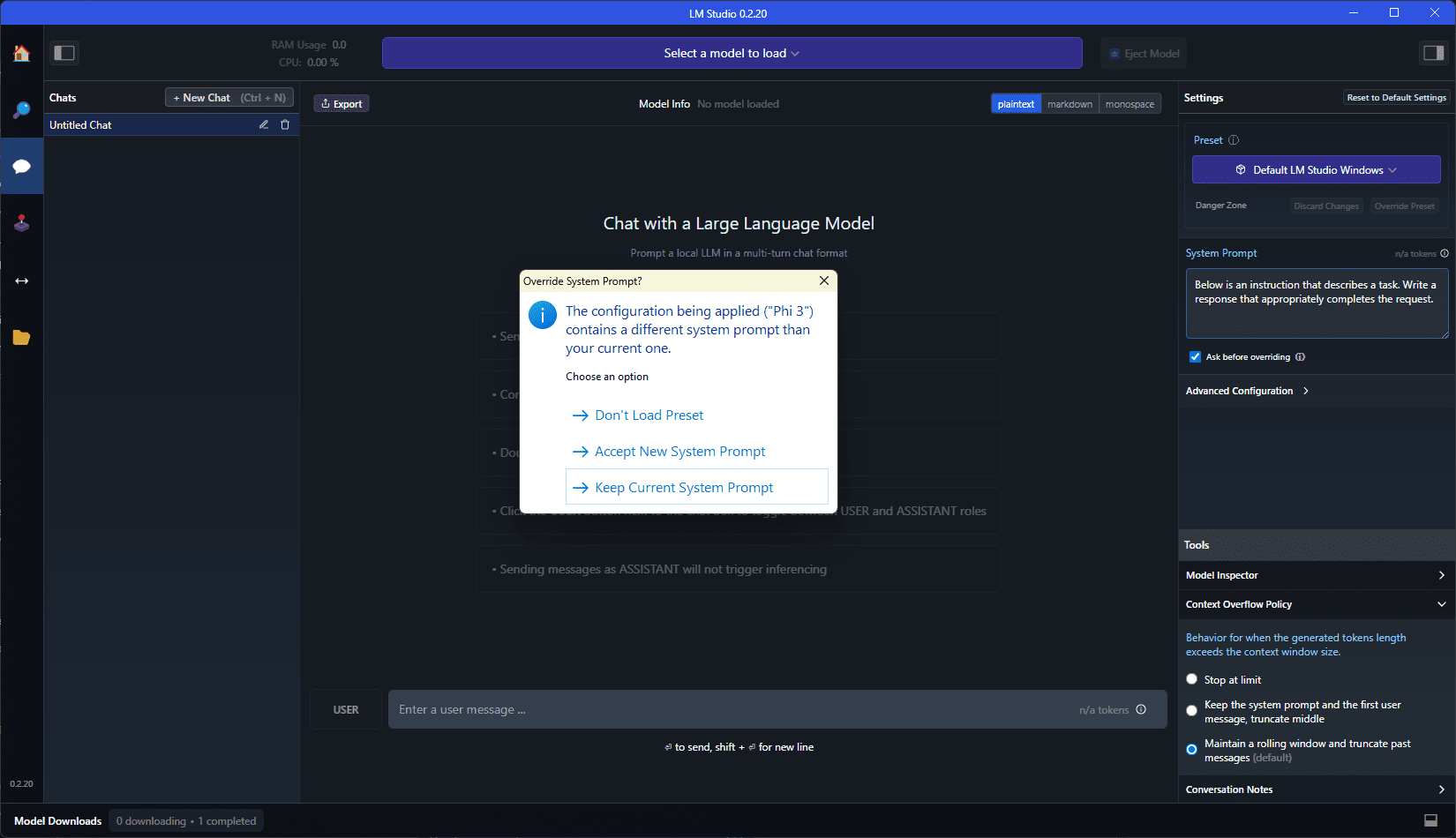

On the Override System Prompt dialog, I chose Keep Current System Prompt.

After you make the system prompt choice, the language model starts loading. For me, this entire process was very fast.

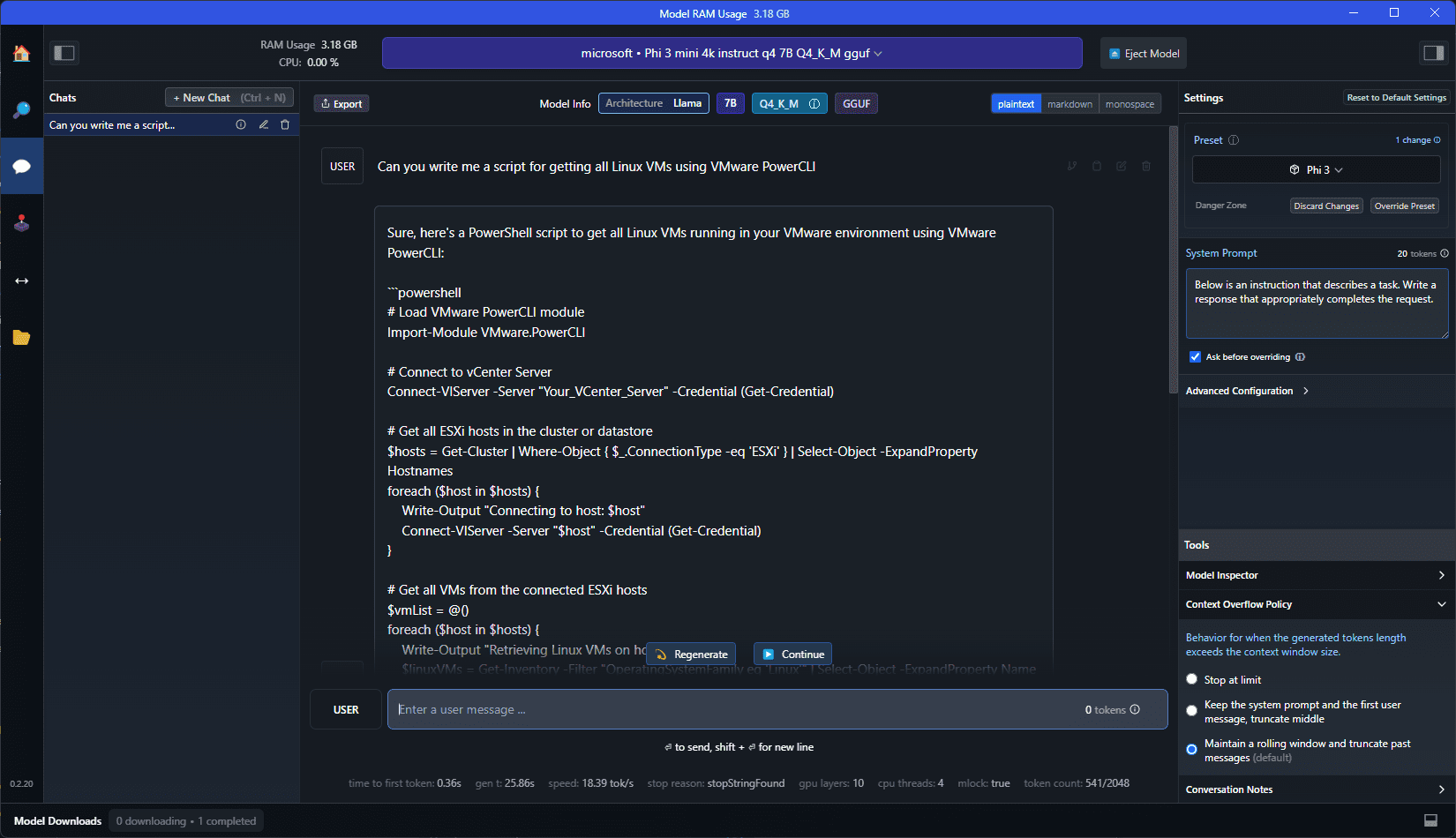

4. Start chatting

Now that we have the Microsoft Phi-3 language model loaded, we can start chatting with the model. Here I am just giving it a simple query to write a PowerCLI script that gets all Linux VMs.
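As noted in the LM Studio feature list, models can also be used through an OpenAI-compatible local server instead of the chat UI. The sketch below shows roughly what sending the same query to that server might look like using only the Python standard library. Several things here are assumptions and not taken from this walkthrough: that the local server has been started in LM Studio, that it listens on `http://localhost:1234/v1`, and that the model identifier is `phi-3-mini-4k-instruct`. Check the server tab in your LM Studio install for the actual address and model name.

```python
import json
import urllib.request

# Assumption: the address LM Studio's local server is listening on.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="phi-3-mini-4k-instruct", temperature=0.7):
    """Build an OpenAI-style chat completion request (model name is hypothetical)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt to the local server and return the model's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask("Write a PowerCLI script that gets all Linux VMs.")` would return the reply as a string, and nothing leaves your machine in the process.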

Key takeaways

All I can say is that I am blown away by the power of this small language model. The clarity and accuracy you get from something small enough to run on a laptop, without hundreds or thousands of servers behind it, is amazing. Even just a few months back, running this kind of AI chat technology locally would have been unheard of. It will be interesting to see where these small language models take us and what use cases emerge. The possibilities seem endless, and they allow AI to reach every nook and cranny of technology: websites, edge locations, devices, and more.

Let me know in the comments what you think about these types of AI chat technologies and whether you are using them in your home lab environment or elsewhere.