@brandon-lee this is all really great stuff. I also ran into a video that appears to show that CephFS can be accessed directly from Windows using the Ceph Dokan driver.

@brandon-lee it would be cool for you to do a similar video.

They say there is no such thing as a stupid question but let me try ... 🙂

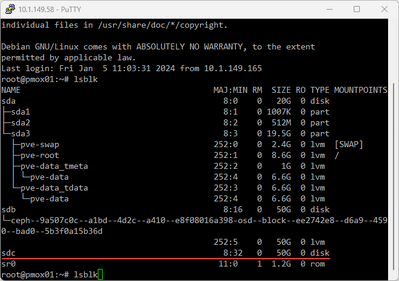

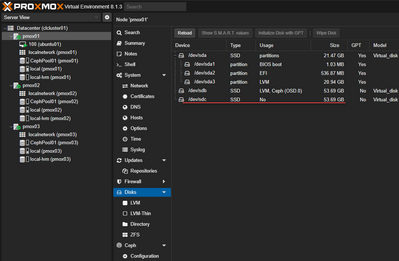

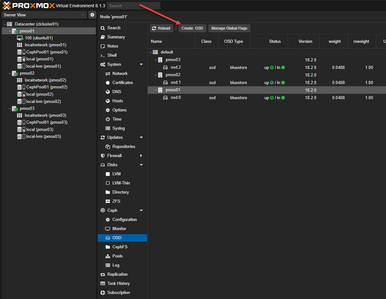

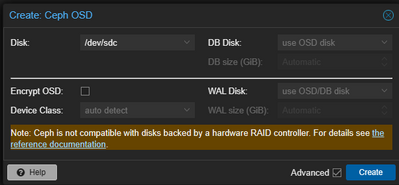

In your video "Proxmox 8 Cluster with Ceph Storage configuration" you create a 3-node Proxmox cluster with a 53.69GB disk on each node. I suspect the total usable pool size is only 53.69GB (a 3-way mirror, if you will). What happens if you add another node: a 4-way mirror, or something else? What happens if you add an additional disk to one node but not to all nodes (does the pool grow)? All these questions are leading up to understanding how Ceph grows not only in performance (additional nodes) but also in usable pool space.

@brandon-lee not all heroes wear capes! Thank you for doing this. My continued research points to the storage efficiency settings: 3x replication (33% efficiency) vs. erasure coding (about 66% for a 3-node cluster with 1 failure tolerance), or roughly 71% efficiency in larger 14-node clusters with 4 failure tolerance. In summary, Ceph's default 3x replication at 33% efficiency does not excite me for my home lab storage pool solution, but 66%+ with erasure coding might be the cat's meow.
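For anyone who wants to check those percentages, here is a rough sketch of the arithmetic. It assumes the erasure-coded figures correspond to k=2, m=1 on the 3-node cluster and k=10, m=4 on the 14-node cluster (my guess at the profiles behind those numbers), and the function names are just made up for illustration.

```python
def replica_efficiency(size: int) -> float:
    """Fraction of raw space that holds unique data with `size` full copies."""
    return 1 / size


def ec_efficiency(k: int, m: int) -> float:
    """Fraction of raw space that holds data with k data chunks and m coding chunks."""
    return k / (k + m)


# 3x replication: every object is stored three times.
print(f"3x replica:      {replica_efficiency(3):.1%}")

# Assumed profile for 3 nodes, 1 failure tolerated: k=2, m=1.
print(f"EC k=2,  m=1:    {ec_efficiency(2, 1):.1%}")

# Assumed profile for 14 nodes, 4 failures tolerated: k=10, m=4.
print(f"EC k=10, m=4:    {ec_efficiency(10, 4):.1%}")
```

These are theoretical ratios only. They ignore Ceph's own overhead and the headroom you need to leave free for recovery.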

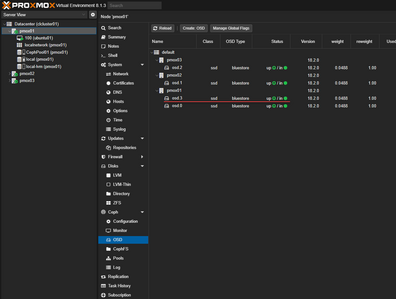

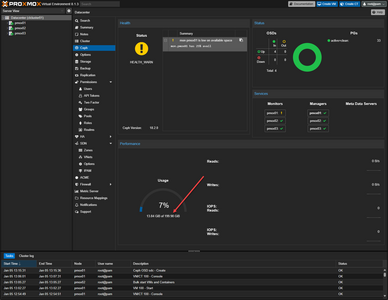

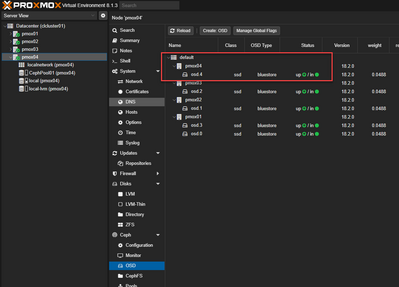

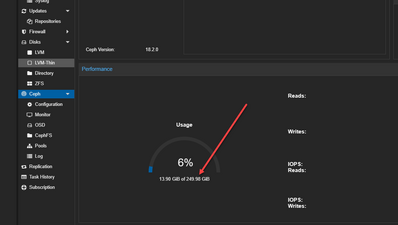

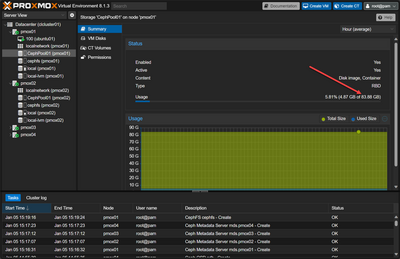

@wikemanuel This is after adding a 4th host to the cluster. You can see the pool size is growing as expected. The change shows up immediately, which is pretty cool.

This is all super useful! If I am reading this correctly, that 249.98GB on the end is 4 nodes, each with a ~50GB disk, with 1 of the nodes using 2 of the 50GB disks. So I am assuming it is showing you the total raw capacity (similar to a stripe), but once you implement some sort of redundancy the total usable space will be lower, no? It would be mind-boggling to think it adds drives up like a stripe and still manages to have redundancy. I would assume 33% or 66% of the 249.98GB is usable, depending on whether or not you use erasure coding.
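A quick back-of-the-envelope sketch of that assumption, treating 249.98GB as raw capacity and applying the two theoretical ratios. The k=2, m=1 erasure-coding profile is an assumption on my part, and this ignores Ceph overhead, full-ratio headroom, and the fact that one node has more disks than the others.

```python
RAW_GB = 249.98  # raw capacity figure from the screenshot above


def usable_gb(raw_gb: float, efficiency: float) -> float:
    """Theoretical usable space for a given storage efficiency ratio."""
    return raw_gb * efficiency


replica3_gb = usable_gb(RAW_GB, 1 / 3)  # 3x replication
ec_2_1_gb = usable_gb(RAW_GB, 2 / 3)    # assumed erasure coding profile k=2, m=1

print(f"3x replica usable: {replica3_gb:.2f} GB")
print(f"EC k=2, m=1 usable: {ec_2_1_gb:.2f} GB")
```

So on paper that is somewhere around 83GB with 3x replication or around 167GB with erasure coding, with the real-world numbers coming in lower.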