[Solved] MicroK8s storage cluster with MicroCeph, rook-ceph, PVC pending, waiting for a volume to be created by external provisioner

@mscrocdile OK, good, it is now showing as default, which is what we want. Let's delete the existing PVCs and then recreate them. I don't think it will retroactively provision the ones that are already pending; I have seen that issue before. You can get your existing PVCs with:

`microk8s kubectl get pvc`, then `microk8s kubectl delete pvc <PVC name>`

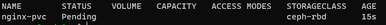

@brandon-lee Unfortunately, it is still pending.

I deleted both the PVC and the pod, and created them again.

This is what I use:

    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: my-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 50Mi
      storageClassName: ceph-rbd
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod
    spec:
      volumes:
        - name: my-storage
          persistentVolumeClaim:
            claimName: my-pvc
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
          volumeMounts:
            - mountPath: "/usr/share/nginx/html"
              name: my-storage

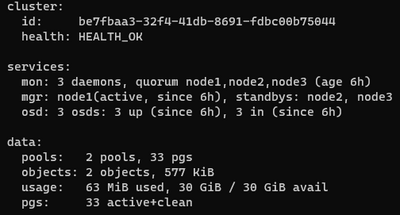

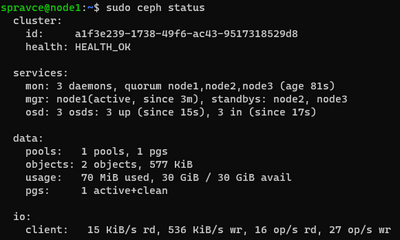

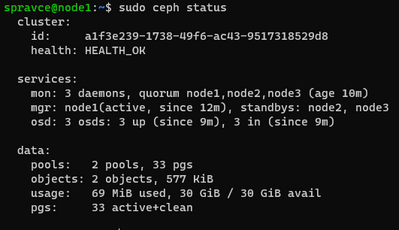

@mscrocdile I think you mentioned that everything was healthy. Can you post the output of:

ceph status

Then also run:

microk8s kubectl describe pvc
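The interesting part of that describe output is usually the Events section at the bottom. A minimal sketch for pulling out just that section, assuming the claim is the `my-pvc` from the manifest earlier in the thread; `events_section` is a helper name made up for this example, not part of kubectl:

```shell
#!/bin/sh
# Print only the Events section of `kubectl describe` output read from stdin.
# events_section is a hypothetical helper name used for this sketch.
events_section() {
  awk '/^Events:/ { show = 1 } show'
}

# Only touch the cluster when microk8s is actually installed.
# "my-pvc" is the claim name from the manifest earlier in the thread.
if command -v microk8s >/dev/null 2>&1; then
  microk8s kubectl describe pvc my-pvc | events_section
fi
```

If the claim is stuck, the events typically repeat the "waiting for a volume to be created" message from the thread title, which says the provisioner itself is not answering rather than that the claim is malformed.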

Also, these are the steps I used to get ceph-rbd working. I'm not sure whether any of them differ from what you did:

Kubernetes Persistent Volume Setup with Microk8s Rook and Ceph - Virtualization Howto

Yes, I saved the text of the result from earlier:

    Looking for MicroCeph on the host
    Detected existing MicroCeph installation
    Attempting to connect to Ceph cluster
    Successfully connected to be7fbaa3-32f4-41db-8691-fdbc00b75044 (192.168.30.51:0/1602486096)
    Creating pool microk8s-rbd0 in Ceph cluster
    Configuring pool microk8s-rbd0 for RBD
    Successfully configured pool microk8s-rbd0 for RBD
    Creating namespace rook-ceph-external
    namespace/rook-ceph-external created
    Configuring Ceph CSI secrets
    Successfully configured Ceph CSI secrets
    Importing Ceph CSI secrets into MicroK8s
    secret/rook-ceph-mon created
    configmap/rook-ceph-mon-endpoints created
    secret/rook-csi-rbd-node created
    secret/rook-csi-rbd-provisioner created
    storageclass.storage.k8s.io/ceph-rbd created
    Importing external Ceph cluster
    NAME: rook-ceph-external
    LAST DEPLOYED: Tue Dec 19 07:26:04 2023
    NAMESPACE: rook-ceph-external
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None
    NOTES:
    The Ceph Cluster has been installed. Check its status by running:
      kubectl --namespace rook-ceph-external get cephcluster

    Visit https://rook.io/docs/rook/latest/CRDs/ceph-cluster-crd/ for more information about the Ceph CRD.

    Important Notes:
    - You can only deploy a single cluster per namespace
    - If you wish to delete this cluster and start fresh, you will also have to wipe the OSD disks using `sfdisk`

    =================================================
    Successfully imported external Ceph cluster. You can now use the following storageclass
    to provision PersistentVolumes using Ceph CSI:

    NAME       PROVISIONER                  RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
    ceph-rbd   rook-ceph.rbd.csi.ceph.com   Delete          Immediate           true                   5s

@mscrocdile OK, that looks good at least. I don't see any major differences in your YAML for the pod you are provisioning. Can you delete your pending PVCs and use this one:

    # pod-with-pvc.yaml
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nginx-pvc
    spec:
      storageClassName: ceph-rbd
      accessModes: [ReadWriteOnce]
      resources: { requests: { storage: 5Gi } }
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx
    spec:
      volumes:
        - name: pvc
          persistentVolumeClaim:
            # Must match the PVC name above; the original post had pod-pvc here.
            claimName: nginx-pvc
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
          volumeMounts:
            - name: pvc
              mountPath: /usr/share/nginx/html

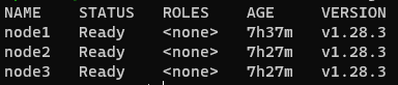

@mscrocdile I'm going to set up a lab really quick to reproduce this by following the same steps, so I should be able to see whether I run into the same issue. Also, is your cluster healthy and are the nodes ready?

microk8s kubectl get nodes

@mscrocdile got it...looks like everything should be good...I'm going to go through setting up a little cluster in the lab and let you know what I find

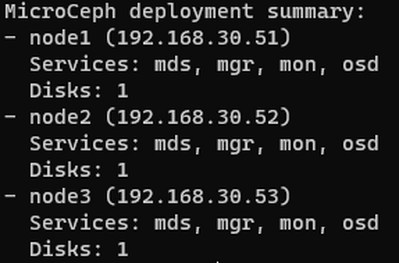

@mscrocdile I just built a quick little cluster in the lab and was able to get it to work. I am using the latest MicroK8s 1.29. I am adding one local disk to each Ubuntu VM as Ceph storage. What type of storage are you using for Ceph?

I use MicroK8s v1.28.3. Could that be the difference?

I was also adding one local drive to each Hyper-V Ubuntu VM.

@mscrocdile Well, I would say that isn't in play, since I was using 1.28 when I wrote my blog post. However, if you have quick and easy snapshots to roll back to, you might try 1.29 and see what you get. Also, I am guessing the disks are showing up correctly in Ubuntu for use with Ceph. I would think so, since your Ceph status shows healthy.

Everything is ok but it doesn't work 🙂

Well, I need to read fairy tales to the children now. I will continue tomorrow morning.

I will upgrade to 1.29 if possible and tell you the result.

Thank you for your help.

@mscrocdile Curious if you have had a chance to try the cluster with v1.29? Let me know what you find on your ceph configuration. 👍

I ran this on each node to update:

sudo snap refresh microk8s --classic --channel=1.29/stable

I'm not sure whether I updated correctly, or how this should be done in production. Maybe the rook-ceph addon had to be disabled first and then enabled again (including microk8s connect-external-ceph); I don't know.

However, this did not help. The PVC is still pending.

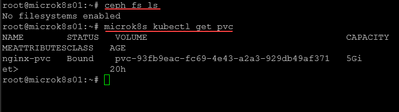

sudo ceph fs ls

No filesystems enabled

Is it correct that there is no filesystem?

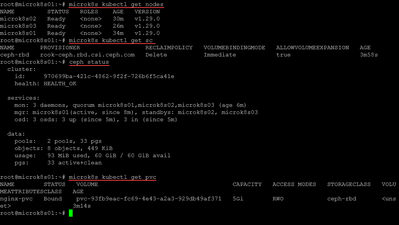

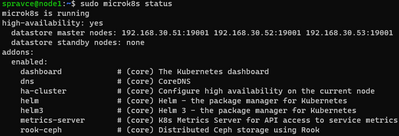

I'm also adding microceph status:

I reinstalled the VMs and started with

sudo snap install microk8s --classic --channel=1.29/stable

but even after waiting more than 20 minutes, microk8s status -w had not finished and the nodes were not ready.

I will return to 1.28 ...

I thought it would help to run sudo microceph init on each node.

I noticed there is also an IO section, which I had never noticed before, so I thought it would be better now.

However, that PVC is still pending. No change.

There are two pools now, and the IO section disappeared after enabling rook-ceph:

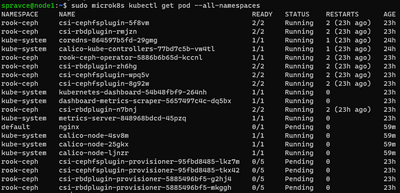

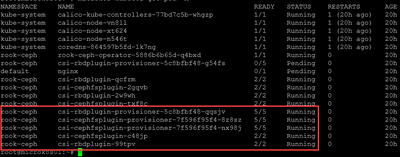

@mscrocdile I think you are onto something when you posted the status of the rook-ceph pods. Here is what I see:

Also, to answer your question about the filesystem, this is what I see when running the command (I also get "No filesystems enabled"):

sudo ceph fs ls

See what you get when you run this command:

microk8s kubectl -n rook-ceph logs -l app=rook-ceph-operator

Also, check out their troubleshooting guide, which steps through the commands to run:

Ceph Common Issues - Rook Ceph Documentation

In the meantime, I created 3 VM instances in Google Cloud and hit exactly the same problem. So I can continue on the LAN.

operator logs:

@mscrocdile hmmm that might be something to try. I know my lab VMs have 4 GB. Also, can you follow my steps here and see what you come up with? I can't remember if I diverged from the steps in the official guide. This is what I used yesterday: Kubernetes Persistent Volume Setup with Microk8s Rook and Ceph - Virtualization Howto

The only difference I can see is in:

snap install microk8s --channel=1.28/stable --classic, but in the screenshot there is "candidate". Could that be important?

And also here:

sudo snap install microceph --channel=latest/edge

I did not use the channel parameter at all.

I'm increasing the memory...

4 GB of memory did not help.

(To be fair, I did not reinstall the whole machine or MicroK8s; I just disabled and re-enabled the rook-ceph addon plus the external connection.)

Another difference I can see is that I use sudo everywhere.

If I add my user to the group:

sudo usermod -a -G microk8s spravce

and reboot, then running microk8s enable rook-ceph (without sudo) results in an error:

PermissionError: [Errno 13] Permission denied: '/var/snap/microk8s/common/plugins/connect-external-ceph'

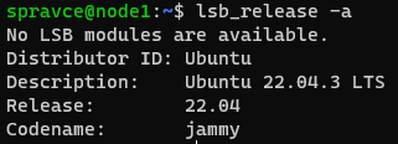

@mscrocdile I think when I first tested rook-ceph with Ubuntu 22.04, I tested with the release candidate. I don't think that matters, since I used the 1.28 and 1.29 stable release without issues after they were released.

Also, what do you see if you describe the rook-ceph pods that are not spun up? I don't think you will see storage provisioned if those pods are not healthy.
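A minimal sketch of that check, assuming the addon's pods live in the `rook-ceph` namespace (the same one used for the operator logs earlier). `pending_pods` is just a helper name invented for this example:

```shell
#!/bin/sh
# Read `kubectl get pods --no-headers` output on stdin and print the names
# of pods whose STATUS column (third field) is Pending.
# pending_pods is a hypothetical helper name used for this sketch.
pending_pods() {
  awk '$3 == "Pending" { print $1 }'
}

# NAMESPACE is an assumption: rook-ceph, as used for the operator logs.
NAMESPACE="rook-ceph"

# Only touch the cluster when microk8s is actually installed.
if command -v microk8s >/dev/null 2>&1; then
  for pod in $(microk8s kubectl -n "$NAMESPACE" get pods --no-headers | pending_pods); do
    # The Events section at the end of the describe output carries the
    # scheduler's reason for leaving the pod unscheduled.
    microk8s kubectl -n "$NAMESPACE" describe pod "$pod"
  done
fi
```

Describing only the Pending pods keeps the output short enough to read the scheduler's message at the bottom of each one.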

Problem fixed!

The pending CSI pod showed the message "Insufficient cpu."

I had been using only 1 CPU for each node the whole time.

Well, not exactly: I increased it while testing, but it was not enough. This time I installed all nodes with 2 CPUs and 4 GB of memory from the beginning. I also think I described those pods before and there was nothing about CPU.
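For anyone who lands here with the same symptom, a rough way to spot undersized nodes is sketched below. The threshold of 2 CPUs is only the value that worked in this thread, not a documented requirement, and `undersized_nodes` is a made-up helper name:

```shell
#!/bin/sh
# Read "NAME CPU" pairs on stdin and print the nodes that have fewer CPUs
# than the minimum passed as the first argument.
# undersized_nodes is a hypothetical helper name used for this sketch.
undersized_nodes() {
  awk -v min="$1" '($2 + 0) < min { print $1 " has " $2 " CPU(s)" }'
}

# Only touch the cluster when microk8s is actually installed.
if command -v microk8s >/dev/null 2>&1; then
  # 2 is an assumption taken from this thread, not an official minimum.
  microk8s kubectl get nodes --no-headers \
    -o custom-columns=NAME:.metadata.name,CPU:.status.capacity.cpu \
    | undersized_nodes 2
fi
```

An empty result only means every node reports at least that many CPUs; the pod events remain the authoritative place to see why something is not being scheduled.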

But I was so tired of that error that I no longer know what is true and what is not.

Thank you very much! I will talk about you everywhere now.

@mscrocdile That is awesome! Great troubleshooting...you were very methodical and tried what I would have tried as well. Good job! Please do talk up the VHT forums and point others here for help. Don't hesitate to post on the forums and help us grow the community here 🙂 Thanks again.

@mscrocdile Also, in my testing, since it is the only storage provisioner on my cluster (and it looks like on yours as well), I didn't have to set it as default, so that was a red herring.