Starting a new forum thread here. I was asked this question:

Hello, I have 3 nodes with MicroK8s. I have installed MicroCeph and its health is OK ( https://canonical-microceph.readthedocs-hosted.com/en/latest/tutorial/multi-node/ ). I have enabled rook-ceph and then run microk8s connect-external-ceph. Everything looks OK, but when I create a pod with a PVC, it stays pending. I don't know what else I should check.

One of the first things to check:

kubectl get sc

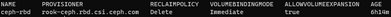

| NAME | PROVISIONER | RECLAIMPOLICY | VOLUMEBINDINGMODE | ALLOWVOLUMEEXPANSION | AGE |
| --- | --- | --- | --- | --- | --- |
| ceph-rbd | rook-ceph.rbd.csi.ceph.com | Delete | Immediate | true | 6h14m |

@mscrocdile would you be able to post a screenshot of that output too? You can copy and paste images directly into a reply here.

@mscrocdile OK, the cluster may not be recognizing ceph-rbd as the default storage class. Let's run this:

kubectl patch storageclass ceph-rbd -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

@mscrocdile, actually, I just remembered that we need to prepend microk8s to that command:

microk8s kubectl patch storageclass ceph-rbd -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
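
To confirm the patch took effect, look for the "(default)" marker that kubectl prints next to the name of a default storage class in the kubectl get sc output. Below is a rough sketch of a small shell helper that pulls out the default class name; default_storage_classes is a name I made up for illustration, and the sample text is canned output modeled on the table above rather than something captured from your cluster.

```shell
# Reads `kubectl get sc` output on stdin and prints the name of every
# StorageClass that kubectl marks with "(default)".
# NOTE: default_storage_classes is a hypothetical helper, not part of kubectl.
default_storage_classes() {
  # Skip the header row; when a class is the default, the second
  # whitespace-separated field is the literal string "(default)".
  awk 'NR > 1 && $2 == "(default)" { print $1 }'
}

# Canned example so the helper can be tried without a cluster.
sample='NAME                 PROVISIONER                  RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
ceph-rbd (default)   rook-ceph.rbd.csi.ceph.com   Delete          Immediate           true                   6h14m'
printf '%s\n' "$sample" | default_storage_classes
```

On the cluster itself you would pipe the real output in, for example microk8s kubectl get sc | default_storage_classes. If that prints nothing, no class is marked as default yet. If the PVC is still pending after the class shows as default, microk8s kubectl describe pvc followed by the PVC name lists its events, which usually say what it is waiting on.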