Hello, I'm just wondering whether I could use MicroK8s in production.

Let's suppose I buy 3 VMs (3 is enough for my current services) somewhere (I'll take Hetzner, but it doesn't matter; it just has to support MetalLB).

I'm asking myself the following:

1) If I set up MetalLB and buy one floating IP address so the cluster keeps working when one node dies, is it OK that, in general, all the traffic goes through that one node?

2) Where should I store pod data? Is Rook Ceph (MicroCeph) the best option, and is it fast and reliable? Do I need it at all? Problems: I'd need to buy additional storage for it, which is more expensive. I don't know yet how to back it up (it's a black box to me so far), or where to: object storage somewhere, or a LAN? Besides pod data, what else should I back up? What if the whole datacenter dies? Should I put MicroCeph (MicroK8s) nodes in another datacenter? And what about performance then?

3) In general, what if some part of the cluster fails, for example the floating IP mechanism, or the whole datacenter? Should I use a cloud load balancer instead (does it work with MetalLB, or without it)? Or should I just buy a fully managed cloud Kubernetes service?

Apart from failover and where to store HA data, I don't think it's that difficult. What do you think? What would you recommend? I'm afraid some part will fail and I'll never be able to restore it as quickly as in the old-school setup without a cluster. Is MicroK8s with MicroCeph really that easy, and would I be able to manage it myself in production?

@mscrocdile, great questions about running MicroK8s in production, and I will take a stab at a few of them. One of the good things about MicroK8s is that it has HA built in for the control plane: every node runs the control plane, backed by Dqlite, a distributed, Raft-replicated version of SQLite.

For the control plane, MicroK8s embeds its datastore, Dqlite, in the API server, and at least 3 nodes are needed for HA. Each API server replicates the datastore. Each node is also a worker in a MicroK8s cluster, so you get both datastore replication and worker redundancy.
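The "at least 3 nodes" requirement comes from Dqlite's Raft consensus: a majority of voters must be reachable to elect a leader and commit writes. A small Python sketch of that majority-quorum arithmetic (generic Raft math, not MicroK8s code):

```python
def raft_quorum(voters: int) -> int:
    """Smallest majority of `voters`; Raft needs it to elect a leader and commit writes."""
    if voters < 1:
        raise ValueError("need at least one voter")
    return voters // 2 + 1


def tolerated_failures(voters: int) -> int:
    """How many voters can be lost while a majority is still reachable."""
    return voters - raft_quorum(voters)


for n in range(1, 6):
    print(f"{n} voter(s): quorum {raft_quorum(n)}, survives {tolerated_failures(n)} failure(s)")
```

With 3 voters the datastore survives one node failure; a 4th voter raises the quorum to 3 without surviving any additional failure, which is why odd numbers are the usual choice.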

You can also use the more traditional external etcd cluster for replication, but it is more complex to configure, and with MicroK8s I don't believe it is really needed.

1) With MetalLB in layer 2 mode, all traffic for a given service IP does enter the cluster through one node; kube-proxy then spreads it to the service's pods on any node. MetalLB's speaker pods run on every node, so if the node currently announcing the IP fails, a speaker on another node takes over announcing it. Keep in mind that MetalLB assigns and announces IP addresses for your Kubernetes services, so your service keeps the IP address MetalLB assigned it, and ingress services keep their associated configurations.
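To make "all traffic goes through one node" concrete: in layer 2 mode the speakers must agree, without a central coordinator, on which single node answers ARP for a service IP. A hypothetical Python sketch of one way to do that, ranking healthy nodes by a hash of node name and IP; MetalLB's real election logic lives in its Go code and may differ in detail, and the node names and IP are made up:

```python
import hashlib


def pick_announcer(service_ip: str, healthy_nodes: list[str]) -> str:
    """Hypothetical: choose the single node that answers ARP for service_ip.

    Every node runs the same deterministic ranking, so all of them agree on
    the winner as long as they agree on which nodes are healthy.
    """
    if not healthy_nodes:
        raise RuntimeError("no healthy node left to announce the IP")

    def rank(node: str) -> str:
        return hashlib.sha256(f"{node}#{service_ip}".encode()).hexdigest()

    return min(healthy_nodes, key=rank)


nodes = ["node1", "node2", "node3"]
leader = pick_announcer("203.0.113.10", nodes)
# If the leader dies, the next-ranked healthy node takes over the IP.
survivor = pick_announcer("203.0.113.10", [n for n in nodes if n != leader])
print(leader, "->", survivor)
```

The ranking does not depend on list order, so every node reaches the same answer; exactly one node attracts the traffic for that IP until it drops out of the healthy set.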

(2 & 3) I think Microceph is a good solution for local replicated storage. You can do shared storage like NFS as well, or something like Rancher Longhorn, which is open-source and also a replicated storage solution with local storage on each Kubernetes node.
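On the cost concern in question 2: replicated storage such as MicroCeph or Longhorn multiplies raw disk usage by the replica count. A rough Python estimate, assuming one equal-sized disk per node, 3 replicas, and keeping about 15% free as headroom (an assumed safety margin, not a value taken from either project):

```python
def usable_capacity_gb(disk_per_node_gb: float, nodes: int,
                       replicas: int = 3, fill_limit: float = 0.85) -> float:
    """Rough usable capacity of a replicated pool with one equal disk per node.

    Each replica must land on a different node, so at least `replicas` nodes
    are required. `fill_limit` is an assumed headroom factor.
    """
    if nodes < replicas:
        raise ValueError("each replica needs its own node")
    raw = disk_per_node_gb * nodes
    return raw / replicas * fill_limit


print(usable_capacity_gb(100, nodes=3))
```

So three extra 100 GB volumes give roughly 85 GB of comfortably usable replicated space, which is worth factoring into the price comparison with managed storage.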

Veeam also makes a free backup solution for Kubernetes, Kasten K10, that lets you back up your pod data and store it in a number of locations, such as local or cloud object storage. Keeping both local and offsite backups helps you follow the 3-2-1 backup best practice. More about K10 in this link:
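The 3-2-1 rule is simple enough to write down as a check: at least 3 copies of the data (the live copy included), on at least 2 different kinds of storage, with at least 1 copy offsite. A Python sketch; the copy descriptions are hypothetical examples, not K10 configuration:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCopy:
    where: str      # hypothetical label, e.g. "cluster", "S3 bucket"
    media: str      # kind of storage, e.g. "ceph", "nas-disk", "object-storage"
    offsite: bool   # stored outside the primary datacenter?


def satisfies_3_2_1(copies: list[DataCopy]) -> bool:
    """3 copies (live data included), 2 kinds of media, at least 1 offsite."""
    return (
        len(copies) >= 3
        and len({c.media for c in copies}) >= 2
        and any(c.offsite for c in copies)
    )


live = DataCopy("cluster", "ceph", offsite=False)
local = DataCopy("local backup target", "nas-disk", offsite=False)
cloud = DataCopy("S3 bucket elsewhere", "object-storage", offsite=True)
print(satisfies_3_2_1([live, local, cloud]))  # True
print(satisfies_3_2_1([live, local]))         # False: only 2 copies, none offsite
```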

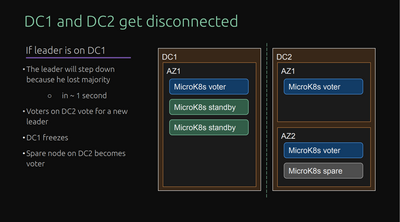

With MicroK8s, you can specify the failure domain each node is in, and MicroK8s, together with Dqlite, will spread the voters and standbys across those domains. More on those roles:

- voters: database replication, participating in leader election
- standby: database replication, not participating in leader election
- spare: no database replication, not participating in leader election

So, by spreading nodes across failure domains, you can protect yourself from an entire site failing, which is always a good idea.
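A hypothetical Python sketch of the idea of spreading database roles across failure domains: interleave nodes by domain, make the first picks voters, the next ones standbys, and the rest spares. The counts (3 voters, 2 standbys) and the node and domain names are assumptions for illustration; this is not Dqlite's actual algorithm:

```python
from collections import defaultdict
from itertools import zip_longest


def assign_roles(node_domains: dict[str, str],
                 voters: int = 3, standbys: int = 2) -> dict[str, str]:
    """Hypothetical: spread voters/standbys across failure domains.

    node_domains maps node name -> failure domain (e.g. a datacenter).
    """
    by_domain: dict[str, list[str]] = defaultdict(list)
    for node, domain in sorted(node_domains.items()):
        by_domain[domain].append(node)
    # Interleave: first node of every domain, then the second of every domain, ...
    rounds = zip_longest(*(by_domain[d] for d in sorted(by_domain)))
    order = [node for rnd in rounds for node in rnd if node is not None]
    roles = {}
    for i, node in enumerate(order):
        if i < voters:
            roles[node] = "voter"
        elif i < voters + standbys:
            roles[node] = "standby"
        else:
            roles[node] = "spare"
    return roles


print(assign_roles({"a1": "dc-a", "a2": "dc-a", "a3": "dc-a",
                    "b1": "dc-b", "c1": "dc-c", "c2": "dc-c"}))
```

The same quorum arithmetic explains why two sites are not enough to survive losing a whole site: with 3 voters, one site always holds at least 2 of them, and losing it leaves 1 of 3, below the majority of 2. A third location, even a small one, is needed.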

Also, I would definitely recommend getting more familiar with MicroK8s and the solutions you want to support in production in a lab, which it sounds like you are already doing. A lot of your comfort level with the solution will come from hands-on lab experience. I would set up some of these failure scenarios and see how the pods behave with real services running.

Also, I want to ping @t3hbeowulf and get his input on the conversation with Kubernetes in production, as I want to make sure I am not missing something with the recommendations and would definitely like to hear his input on disaster recovery and configurations for resilient clusters that he has been involved with.

Thank you for the tips and information.

You are right, I still have a lot to learn in the lab.

As we say here, I can't see the wood for the trees. So I'll appreciate all of the above once I understand it.

Let me just come back to a low-cost, easy-to-set-up MicroK8s in production. I still don't understand whether it's OK to have 3 nodes (3 VMs) and use one floating IP as MetalLB's external IP, so that when one node goes down, the IP switches to another node using keepalived.
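keepalived implements VRRP, where the node with the highest priority holds the floating IP and, on a priority tie, the node with the highest primary IP address wins. A Python sketch of just that election rule, with made-up node names, IPs and priorities; it ignores timers, preemption settings and the advertisement protocol itself:

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VrrpNode:
    name: str
    primary_ip: str
    priority: int       # keepalived "priority"; higher wins
    alive: bool = True


def elect_master(nodes: list[VrrpNode]) -> Optional[VrrpNode]:
    """Return the node that should hold the floating IP, or None if all are down.

    Highest priority wins; ties go to the highest primary IP address.
    """
    candidates = [n for n in nodes if n.alive]
    if not candidates:
        return None
    return max(candidates,
               key=lambda n: (n.priority, ipaddress.ip_address(n.primary_ip)))


nodes = [VrrpNode("node1", "10.0.0.1", 150),
         VrrpNode("node2", "10.0.0.2", 100),
         VrrpNode("node3", "10.0.0.3", 100)]
print(elect_master(nodes).name)  # node1
```

If node1 stops advertising, node2 and node3 tie on priority and node3 takes over because its address is higher. On a cloud provider, moving a floating IP usually also requires reassigning it to the new server through the provider's API (often from a keepalived notify script), not just announcing it on the network.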

If I go another way, maybe a better one, and use an external TCP HAProxy load balancer (2 VMs + keepalived), will I still be able to configure Let's Encrypt correctly in the cluster?

https://example.com will go to HAProxy, which then forwards it to one of the cluster's public IPs. Do I understand correctly that HAProxy just forwards the traffic untouched, and it's the cluster that handles the rest? Or do I somehow have to implement that load balancer directly inside the cluster?

Hello, I'm back with some progress I'd like to share, and a question.

In the end, I had only one option, https://github.com/hetznercloud/hcloud-cloud-controller-manager, but it worked fine. With the help of https://blog.kay.sh/kubernetes-hetzner-cloud-loadbalancer-nginx-ingress-cert-manager/ I was able to access a test nginx site over HTTPS.

However, I wanted to use the Traefik ingress controller. I installed Traefik using Helm and annotated the Traefik service the same way, so it got an external IP.

So far, so good. First I wanted to test plain HTTP on port 80 (no certificates yet). I created a Deployment, a Service and an IngressRoute. Everything seems OK, and the service is accessible from inside the cluster. But I cannot access it from the internet; I get HTTP error 400. The Hetzner LB has the correct ports, 80 and 443, open. There is nothing in the Traefik log. If I use an Ingress instead of an IngressRoute, `k get ingress` shows no address.

I have no idea what to check next. Traefik's log level is set to DEBUG.
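One thing that may be worth ruling out (an assumption, not something confirmed in this thread): if the load balancer service has proxy protocol enabled (the hcloud-cloud-controller-manager annotation `load-balancer.hetzner.cloud/uses-proxyprotocol`), every connection starts with a PROXY header line before the HTTP request. A server that isn't configured to expect it sees a malformed request line and may answer 400; in Traefik, accepting the header is configured per entry point (`proxyProtocol.trustedIPs`). The Python sketch below only illustrates the PROXY v1 header format and why a plain HTTP parser rejects it; the addresses are made up:

```python
def split_proxy_v1(data: bytes):
    """Split a PROXY protocol v1 header off the start of a TCP stream.

    Returns (fields, rest); fields is None when the stream has no PROXY header.
    """
    if not data.startswith(b"PROXY "):
        return None, data
    end = data.find(b"\r\n")
    # A v1 header is at most 107 bytes including the trailing CRLF.
    if end == -1 or end + 2 > 107:
        raise ValueError("malformed PROXY v1 header")
    parts = data[:end].decode("ascii").split(" ")
    rest = data[end + 2:]
    if parts[1] == "UNKNOWN":
        return {"proto": "UNKNOWN"}, rest
    if len(parts) != 6 or parts[1] not in ("TCP4", "TCP6"):
        raise ValueError("malformed PROXY v1 header")
    _, proto, src, dst, sport, dport = parts
    return {"proto": proto, "src": src, "dst": dst,
            "sport": int(sport), "dport": int(dport)}, rest


def looks_like_http_request_line(data: bytes) -> bool:
    """Very rough check of an HTTP/1.x request line: METHOD SP TARGET SP HTTP/x.y."""
    line = data.split(b"\r\n", 1)[0]
    parts = line.split(b" ")
    return len(parts) == 3 and parts[2].startswith(b"HTTP/")


stream = (b"PROXY TCP4 203.0.113.7 10.0.0.5 56324 80\r\n"
          b"GET / HTTP/1.1\r\nHost: example.com\r\n\r\n")
print(looks_like_http_request_line(stream))  # False: first line is the PROXY header
fields, rest = split_proxy_v1(stream)
print(looks_like_http_request_line(rest))    # True once the header is stripped
```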