Learning to run Docker containers is one of the best things you can do for your IT learning and career in 2025. Containerized technologies are everywhere. They are simple, effective, and efficient, and they make self-hosting extremely powerful. However, an environment can quickly grow to dozens or even hundreds of running containers, many with cryptic names that you no longer recognize. That is what makes documenting Docker containers so important.

This is the situation I have found myself in quite a few times, and it has led me to start taking the documentation of my Docker setup seriously. In this post, I’ll walk you through why I began documenting containers and how I approached it using folder structure and version tracking. Then, we can see what I now include to make my Docker environment self-explanatory.

Why documentation matters even for your Docker containers

Docker containers are arguably even more important to document than some other types of infrastructure, because they are ephemeral by nature. The configuration of your container exists only in the configuration you give Docker, so if that is lost, so is the recipe for the container.

Spinning up new services is simple in the grand scheme of things. But a few lines of configuration that seem simple today can be very difficult to recall months later, and you may end up reinventing the wheel when you try to recreate your containers.

In your Docker documentation, it is extremely important to record what each container is connected to, what its dependencies are, and so on.

Here are some things to think about:

- Avoid configuration drift: It is VERY easy to lose track of container settings, especially if you are doing everything from the command line

- Disaster recovery: In a disaster, you will need to know how to recreate your containers

- Collaboration: This is probably more of a production environment item but still something to think about. If you share a project with others, having things documented is golden

- Security audits: When all of your configurations are documented, it is easy to see which ports are exposed, which volumes are mounted, and whether any sensitive environment variables are set

Now let’s dive into how I build the structure in my environment to document my containers.

Structuring the Docker Environment

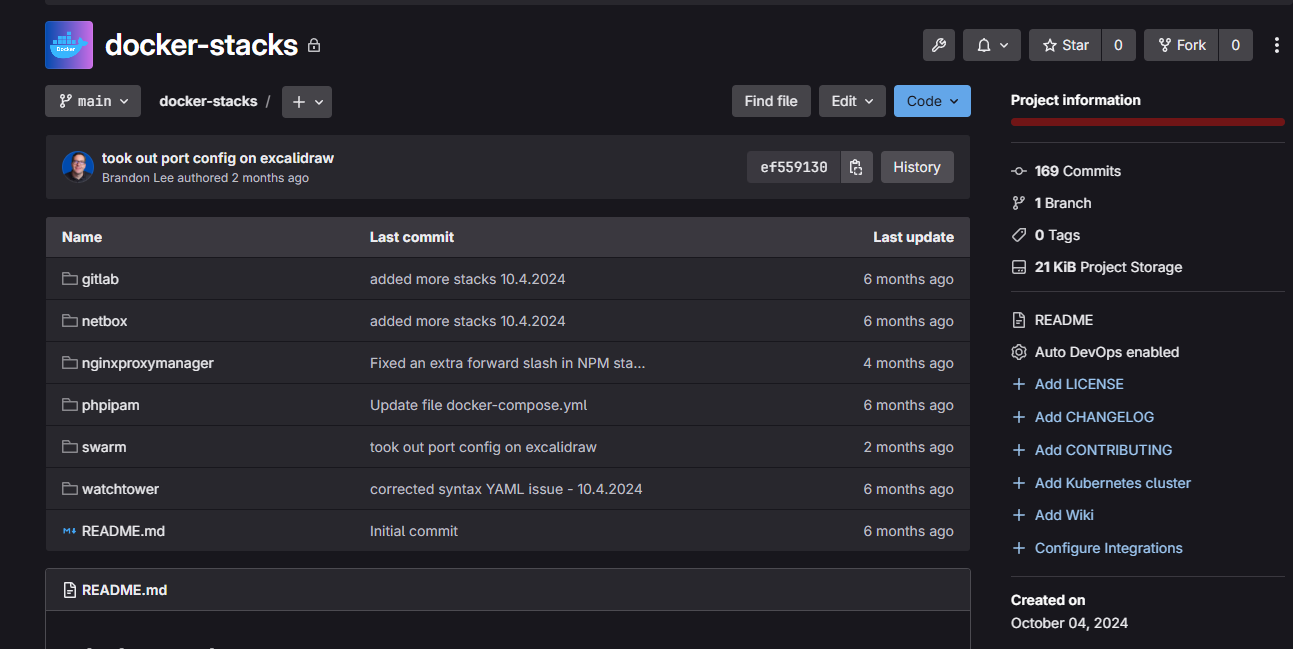

The first step I took was organizing my stacks into folders. At a high level, I have a Git project in GitLab for my Docker-Stacks. I cloned the repo to my local admin workstation and created a subfolder for each stack.

In each stack folder, I have the docker-compose.yml file, a README.md, and any .env files that the stack needs. Not every app or stack needs a .env file, but below is an example of what might be in each folder.

My Folder Structure

/docker-stacks
│
├── freshrss/
│   ├── docker-compose.yml
│   ├── README.md
│   └── .env
│
├── uptime-kuma/
│   ├── docker-compose.yml
│   ├── README.md
│   └── notes.md
│
├── traefik/
│   ├── docker-compose.yml
│   ├── traefik.yml
│   ├── acme.json
│   └── README.md
│
└── portainer/
    ├── docker-compose.yml
    └── README.md

So each app or service has a docker-compose.yml file that is scoped to just that app, and the folder becomes a complete snapshot of the Docker container(s) and the service.

This structure gives me an easy way to version everything and keep things organized. In Portainer, I can also use Git as the source of “templates” that I create for each service.
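A folder-per-stack layout like the one above is easy to check automatically. The following is a minimal sketch, not part of my actual repo: `check_stacks` is a hypothetical helper name, and it assumes every stack folder should contain at least a docker-compose.yml and a README.md.

```shell
# Sketch: report stack folders that are missing their core files.
# check_stacks is a hypothetical helper; the required file names are
# taken from the folder layout described above.
check_stacks() {
    root="${1:-.}"
    missing=0
    for dir in "$root"/*/; do
        # Skip the literal pattern if the glob matched nothing
        [ -d "$dir" ] || continue
        name=$(basename "$dir")
        for required in docker-compose.yml README.md; do
            if [ ! -f "$dir$required" ]; then
                echo "$name: missing $required"
                missing=1
            fi
        done
    done
    # Non-zero exit status when anything is missing
    return "$missing"
}
```

Running `check_stacks /docker-stacks` prints one line per missing file and returns a non-zero status if anything is missing, so it could also serve as a simple pipeline check.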

More details on what I include in each folder

Let’s dive a little bit deeper into the contents of each folder and look at examples of each item and component that I think you should document.

1. docker-compose.yml

The docker-compose.yml file is the most important file in your container documentation. If you don’t include anything else, do include this file. Also, follow best practices in how you describe your containers in it:

- Use descriptive container names (container_name: freshrss_app)

- Define your networks and volumes explicitly.

- You can use .env files for credentials or configs so they can be swapped out easily.

Example:

version: "3.8"
services:
  freshrss:
    image: freshrss/freshrss
    container_name: freshrss_app
    ports:
      - "8080:80"
    environment:
      - TZ=America/New_York
    volumes:
      - freshrss_data:/var/www/FreshRSS/data
    restart: unless-stopped
volumes:
  freshrss_data:
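One payoff of keeping every compose file in one repo is that published ports can be listed in a single pass, which helps with the security audit point mentioned earlier. This is a rough sketch under stated assumptions: `list_ports` is a hypothetical helper, it only understands the short `- "8080:80"` list syntax shown in the example above, and it does simple line matching rather than real YAML parsing.

```shell
# Sketch: print every short-syntax port mapping found in the compose files.
# list_ports is a hypothetical helper. It does NOT parse YAML properly;
# it only looks for "- ..." items that directly follow a "ports:" line.
list_ports() {
    root="${1:-.}"
    find "$root" -name docker-compose.yml | sort | while IFS= read -r file; do
        awk '
            # A "ports:" key starts a block of mappings
            /^[ \t]*ports:[ \t]*$/ { in_ports = 1; next }
            # List items inside the block are the mappings themselves
            in_ports && /^[ \t]*-[ \t]/ {
                line = $0
                sub(/^[ \t]*-[ \t]*/, "", line)
                gsub(/"/, "", line)
                print FILENAME ": " line
                next
            }
            # Blank lines do not end the block
            /^[ \t]*$/ { next }
            # Any other line (the next key) ends the block
            { in_ports = 0 }
        ' "$file"
    done
}
```

For the FreshRSS file above, `list_ports /docker-stacks` would print a line ending in `freshrss/docker-compose.yml: 8080:80`. Inline lists or the long port syntax would need a proper YAML tool instead.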

2. README.md

A README is a very good idea to include. It describes the purpose of the container, how it works, any considerations you need to make, backup and restore operations, and any issues or things to watch out for.

I typically include:

- Description of the app/service

- How to start: docker compose up -d

- Required networks or dependencies

- Reverse proxy considerations (e.g., domain name, headers)

- Backup/restore procedures

- Known issues or things to watch for (e.g., container updates that break config)

Example snippet from my Uptime Kuma README:

## Uptime Kuma - Self-Hosted Monitoring

This monitors public/private endpoints using HTTP, Ping, DNS, etc.

### Deployment

- Run with `docker compose up -d`

- Web interface on port 3001

- Login with [email protected] / changeme (change after first login!)

### Reverse Proxy

Traefik route: uptime.mydomain.com
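To make writing a README less of a chore, the sections listed above can be generated as a skeleton. This is only a sketch: `new_readme` is a hypothetical helper, and the section headings are my assumption of a reasonable template based on the list above, not a fixed standard.

```shell
# Sketch: create a README.md skeleton for a new stack folder.
# new_readme is a hypothetical helper; the headings mirror the items
# listed above and are placeholders to fill in by hand.
new_readme() {
    dir="$1"
    title="$2"
    readme="$dir/README.md"
    # Never clobber documentation that already exists
    if [ -e "$readme" ]; then
        echo "refusing to overwrite $readme" >&2
        return 1
    fi
    mkdir -p "$dir"
    cat > "$readme" <<EOF
## $title

TODO: short description of the app/service.

### Deployment

- Run with \`docker compose up -d\`
- Required networks or dependencies: TODO

### Reverse Proxy

TODO: domain name, headers

### Backup and Restore

TODO: exact commands, including parameters

### Known Issues

TODO
EOF
}
```

For example, `new_readme ./uptime-kuma "Uptime Kuma - Self-Hosted Monitoring"` creates the file only if it does not exist yet.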

3. Change Log or notes.md

Some containers go untouched for months, until something breaks or you want to reconfigure something. This is where a file like notes.md or CHANGELOG.md helps.

You can log things like:

- When you update the compose file or make any other kind of change

- What those changes are (e.g., changing port mappings, volume mounts)

- What happened after the update (e.g., “v1.22 broke reverse proxy headers – fixed by adding middleware”)

It’s not fancy. Just date-stamped bullet points. But this one file can save you hours of re-troubleshooting problems that took a lot of time to solve the first time.
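Date-stamped bullets are simple enough to append with a one-line helper. A minimal sketch, assuming a notes.md file in the stack folder; `log_change` is a hypothetical name and the `YYYY-MM-DD` date format is just one reasonable choice.

```shell
# Sketch: append a date-stamped bullet to a notes file.
# log_change is a hypothetical helper; the first argument is the notes
# file, everything after it becomes the text of the entry.
log_change() {
    notes_file="$1"
    shift
    stamp=$(date +%Y-%m-%d)
    # ">>" creates the file on first use and appends afterwards
    printf '%s\n' "- $stamp: $*" >> "$notes_file"
}
```

Usage could look like `log_change uptime-kuma/notes.md "Changed port mapping from 3001 to 3002"`, which adds one bullet starting with today's date.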

4. Sample .env File

If the stack uses environment variables, I create a .env.sample file with placeholders.

MYSQL_ROOT_PASSWORD=changeme
MYSQL_USER=appuser
MYSQL_PASSWORD=changeme

This helps to make sure I don’t commit secrets but still know what’s expected.
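The sample file is also useful as a checklist. The sketch below compares it with the real .env and reports variables that are expected but not set. `env_keys` and `check_env` are hypothetical helpers, and the sketch assumes plain `KEY=value` lines and that the real .env is kept out of Git (for example through .gitignore).

```shell
# Sketch: list variables that appear in .env.sample but not in .env.
# env_keys and check_env are hypothetical helpers; only simple
# KEY=value lines are recognized, comments and blank lines are ignored.
env_keys() {
    sed -n 's/^\([A-Za-z_][A-Za-z0-9_]*\)=.*/\1/p' "$1" | sort -u
}

check_env() {
    dir="${1:-.}"
    sample_keys=$(env_keys "$dir/.env.sample")
    actual_keys=$(env_keys "$dir/.env")
    rc=0
    for key in $sample_keys; do
        # -x: whole-line match, -F: treat the key as a fixed string
        if ! printf '%s\n' "$actual_keys" | grep -qxF "$key"; then
            echo "missing in .env: $key"
            rc=1
        fi
    done
    return "$rc"
}
```

Running `check_env ./freshrss` before `docker compose up -d` gives an early warning when a new variable was added to the sample but never filled in.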

5. Healthcheck scripts or monitoring information

For apps that support health checks or need uptime monitoring, I sometimes add example scripts or curl commands, which are helpful for testing endpoints. This ties in with monitoring setups such as Prometheus or Netdata.
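As an illustration of the kind of curl command I mean, here is a small sketch. `check_endpoint` is a hypothetical helper, and the 10-second timeout is an arbitrary assumption; adjust both to the service you are testing.

```shell
# Sketch: check whether an HTTP endpoint answers successfully.
# check_endpoint is a hypothetical helper built on standard curl flags:
#   -f           treat HTTP errors (status 400 and above) as failures
#   -sS          no progress output, but still print error messages
#   --max-time   give up after the given number of seconds
check_endpoint() {
    url="$1"
    if curl -fsS --max-time 10 -o /dev/null "$url"; then
        echo "OK   $url"
    else
        echo "FAIL $url"
        return 1
    fi
}
```

For the Uptime Kuma example, this could be called as `check_endpoint http://localhost:3001`, assuming the web interface is published on that port of the local host.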

Version Control with Git

All of this lives inside a Git repository, in my case in GitLab, which is what I use in the home lab. This gives me a history of changes and rollback capability, and I can tag versions of the code in my home lab setup. You can use branches to work on changes. If you have a pipeline enabled, it can even roll out changes when you commit. Something like Portainer can also periodically check repos for changes and redeploy your containers when it finds an update.

Make sure your commit messages are descriptive and make sense. Take a look at the following commit messages and what you might include.

Update FreshRSS to latest image - fixed data volume permissions

Add Prometheus alert rule for CPU threshold

Document Traefik config changes for new wildcard cert

What tools do I use in 2025 for Docker documentation?

There are many great tools out there that I use for my Docker documentation and configuration. These include the following:

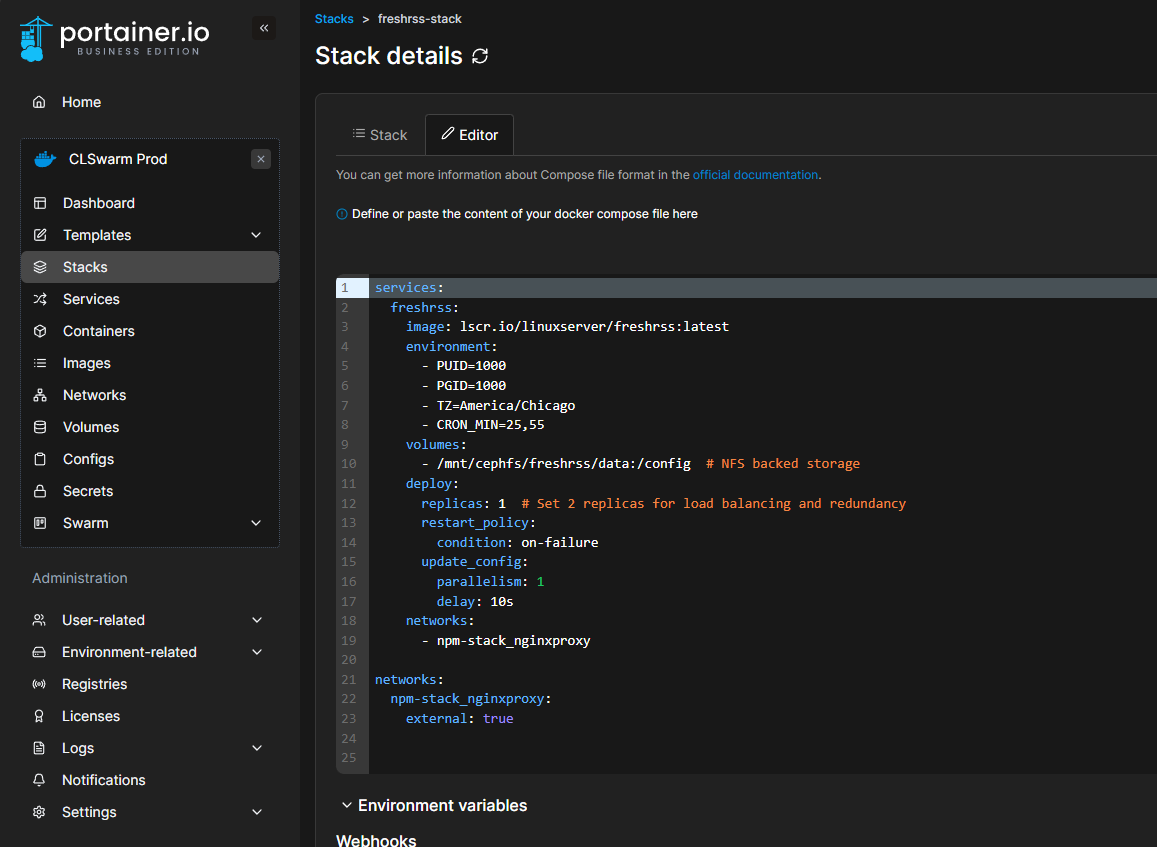

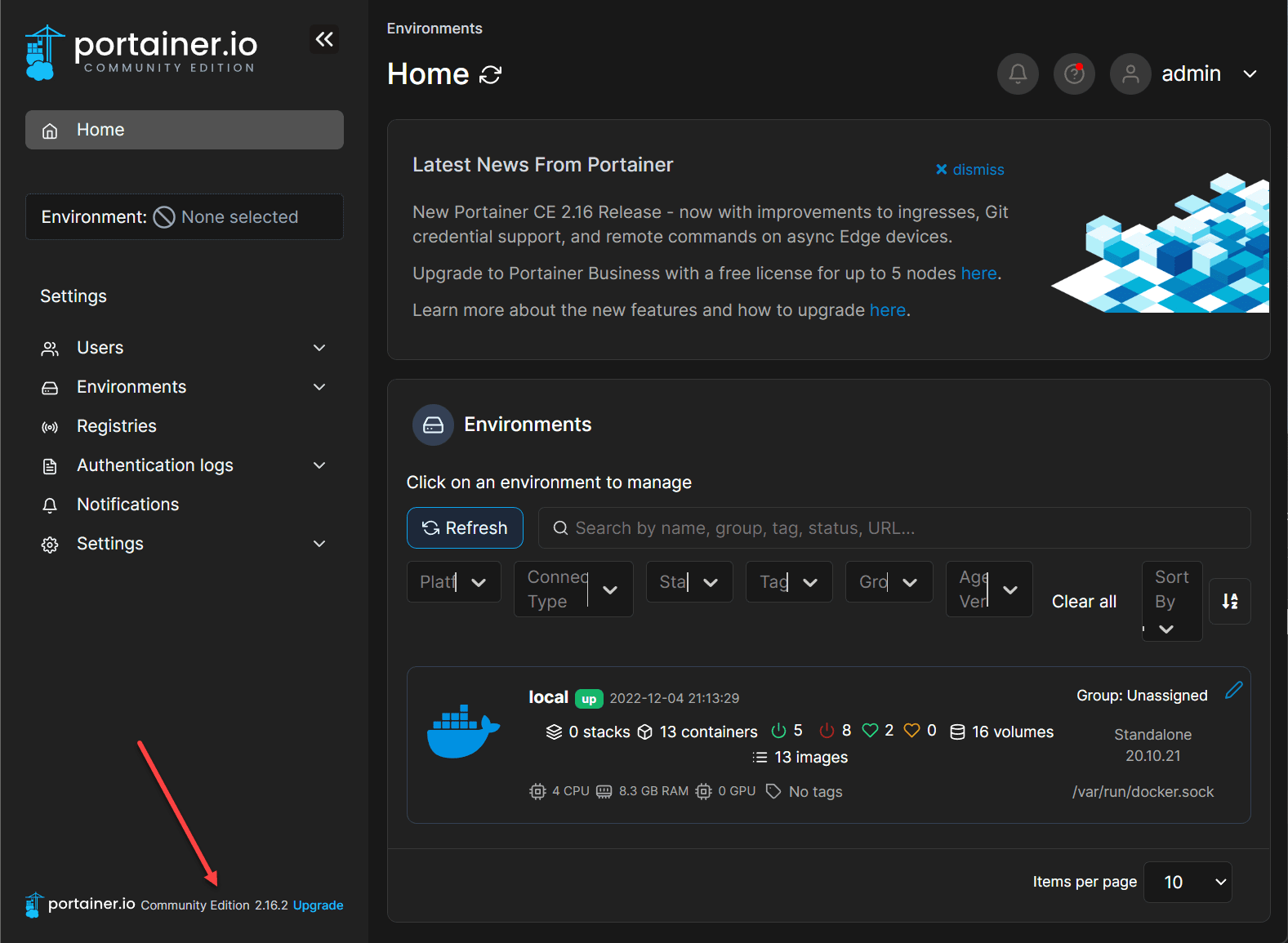

Portainer Business Edition

I do use and pay for Portainer Business Edition (for home labs). The paid home lab edition allows you to have 15 managed hosts, which is great. I am all about open-source and free, but I am also willing to pay for tools that make life easier.

Portainer’s stack view shows you the real-time configurations, logs, and environment variables. I often take screenshots of this for my documentation, since they are easy to include in a folder or even in a hard-copy printout.

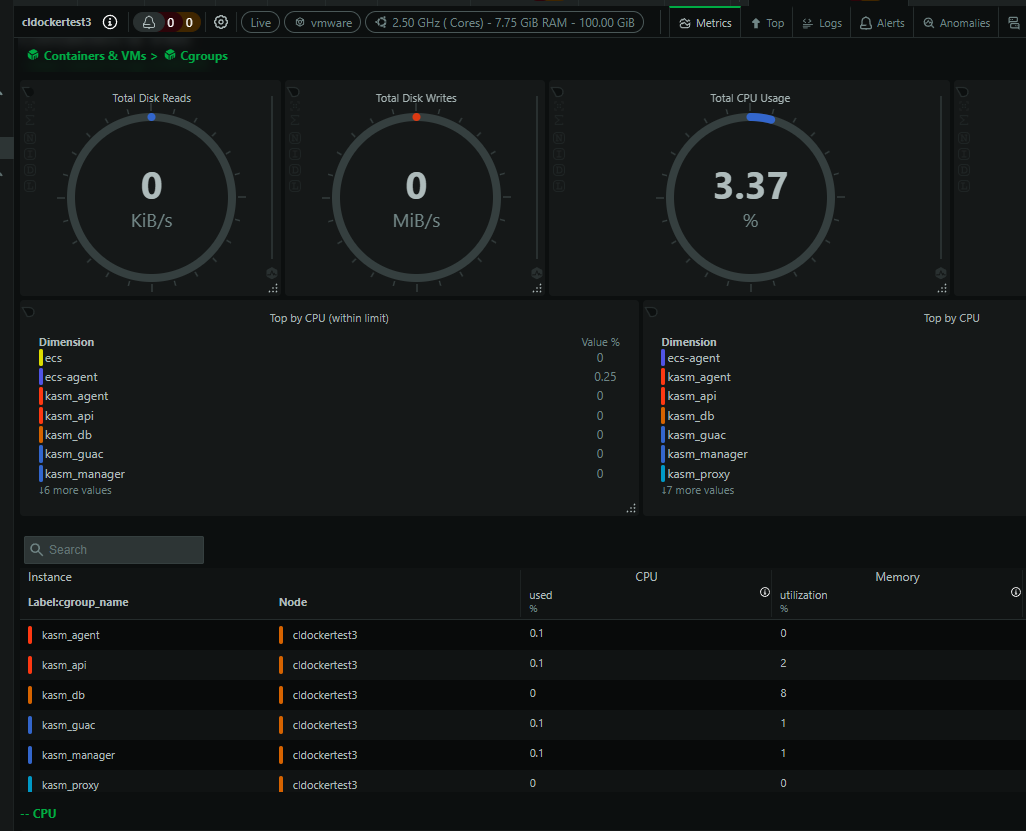

Netdata

I run Netdata on my Docker hosts to visualize performance. Netdata has great monitoring for Docker containers. You can see container CPU and memory usage as well as alerts. It will tell you if containers are unhealthy or if there are issues at the container level, not just the host.

Markdown previewer in VSCode

Since every container folder has a README.md or notes file, I use the Markdown preview in VSCode to format my notes and documentation. As a bonus tidbit: you can use GitLens to track file history.

Watchtower and Shepherd for auto-updates

I also document which containers auto-update and which tool is doing the updating (Watchtower, Shepherd, Portainer, or manual updates if that is how you handle it). This prevents surprises when a container silently breaks due to a background update.

My own Docker dashboard

I also created a simple tool that queries all of my Docker hosts and gathers all the containers along with important metrics for them and the hosts. I cover it in my GitHub repo and in my video on the topic.

Pro tips for documenting your Docker containers

Here are a few habits I have developed when documenting my Docker containers:

- Take screenshots of your app’s web UI after major config changes (e.g., Uptime Kuma dashboards, Portainer stacks).

- Include restore instructions next to backup commands. It might be hard to remember months later what you meant by simply saying run restore.sh. Where is the script? How do you run it, and does it need parameters passed? Include all of this.

- Use labels in Docker Compose to describe the container purpose or group them in Portainer.

Example:

labels:
  com.docker.description: "FreshRSS news aggregator"
  com.docker.maintainer: "Brandon Lee"
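Going back to the tip about keeping restore instructions next to backup commands, one way to do that is to put both in the same script so they can never drift apart. This is a sketch under assumptions: `backup_stack` and `restore_stack` are hypothetical helpers, and they assume the app data lives in a bind-mounted directory on the host rather than in a named Docker volume.

```shell
# Sketch: backup and restore kept side by side in one file.
# backup_stack and restore_stack are hypothetical helpers. They assume
# the data is a plain host directory (bind mount). Stop the stack first
# (docker compose stop) so files are not written mid-backup, and keep
# the archive outside the data directory.

# Usage: backup_stack <data_dir> <archive.tar.gz>
backup_stack() {
    data_dir="$1"
    archive="$2"
    mkdir -p "$(dirname "$archive")"
    # -C stores paths relative to the data directory
    tar -czf "$archive" -C "$data_dir" .
}

# Usage: restore_stack <archive.tar.gz> <data_dir>
restore_stack() {
    archive="$1"
    data_dir="$2"
    mkdir -p "$data_dir"
    tar -xzf "$archive" -C "$data_dir"
}
```

The usage comments above each function answer the questions from the tip: where the command is, how to run it, and which parameters it needs.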

Wrapping up

Documenting your Docker containers might sound like extra work, but it’s the opposite. It saves time, reduces frustration, and brings confidence to your home lab or production deployments.

Once I adopted a folder-per-app structure and began writing just a few markdown lines per service, my environment became far more transparent and resilient. Whether you’re managing 3 containers or 300, documentation scales, and future-you will thank you.

If you’re not already documenting your containers, now’s a great time to start. The tooling is mature, and with just a few habits, you can turn your chaotic Docker mess into a clean, understandable stack.
