The Best Kubernetes Storage CSI Providers for Home Lab Enthusiasts

Over the past few months, I have been experimenting with various Kubernetes storage CSI providers, and I wanted to give you a breakdown of my thoughts on each one: their strengths, their weaknesses, and what each brings to the table. Is there one that shines above them all? Let’s take a look at the best Kubernetes storage CSI providers for home lab enthusiasts.


Why do you need a Kubernetes Storage CSI for your cluster?

Well, the Container Storage Interface (CSI) is a standardized API that allows your Kubernetes cluster to interact with a given type of storage system and to do so dynamically. The last thing you want to have to do is manually configure storage for each and every pod.

With a CSI driver, you can provision and mount persistent storage across different types of storage backends. These can be on-premises storage, cloud providers, or even HCI and software-defined storage.

A CSI driver is usually built for a specific type of storage or a specific storage vendor, and it is what allows your Kubernetes cluster to work with that storage.
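To make the idea concrete, here is a minimal sketch of the two objects involved in dynamic provisioning: a StorageClass that names the CSI driver, and a PersistentVolumeClaim that asks for storage from that class. It is written in Python and prints the manifests as JSON, since kubectl accepts JSON as well as YAML. The driver name `example.csi.vendor.com` and the object names are hypothetical placeholders, not any real provider's values.

```python
import json

# Hypothetical driver name for illustration; use the name your CSI driver registers.
DRIVER = "example.csi.vendor.com"


def storage_class(name: str, provisioner: str, parameters: dict) -> dict:
    """A StorageClass tells Kubernetes which CSI driver creates volumes for it."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        "parameters": parameters,  # driver-specific, string-to-string
        "reclaimPolicy": "Delete",
    }


def pvc(name: str, storage_class_name: str, size: str,
        access_mode: str = "ReadWriteOnce") -> dict:
    """A PersistentVolumeClaim asks for storage; the driver provisions it on demand."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": storage_class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


sc = storage_class("homelab-storage", DRIVER, {})
claim = pvc("app-data", sc["metadata"]["name"], "10Gi")

# kubectl reads JSON as well as YAML, e.g.: python3 manifests.py | kubectl apply -f -
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [sc, claim]}, indent=2))
```

The pod never references the driver directly: it mounts the claim, and the claim's `storageClassName` is what routes the request to the right CSI driver.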

Best Kubernetes Storage CSI providers

Keep in mind “best” here is subjective. I am not trying to bash any storage provider by leaving it off the list or ranking it lower than some might expect. These are my thoughts on storage providers that I have personally worked with and the pros and cons of working with them.

- Microceph and CephFS with Rook

- Synology CSI provider

- vSphere CSI provider

- Longhorn

- NFS Subdir provisioner

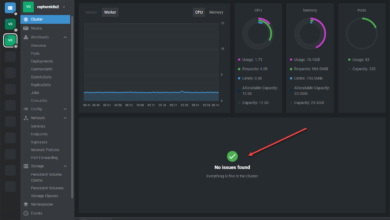

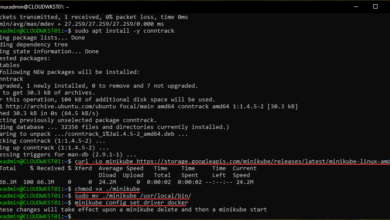

1. Microceph and CephFS with Rook

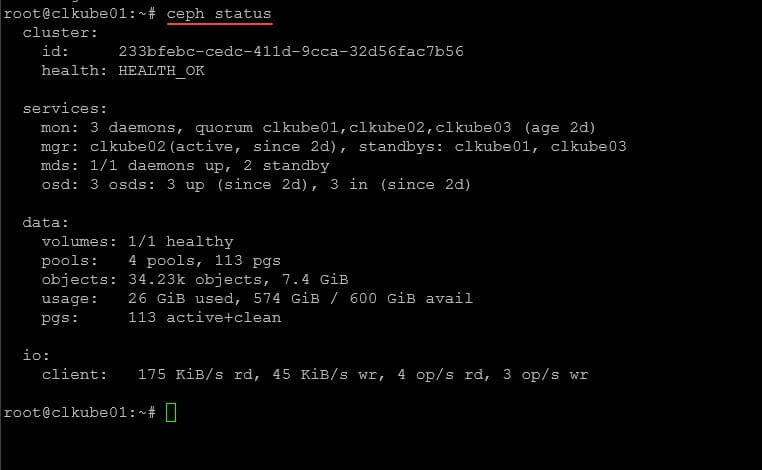

I have tried a lot of different Kubernetes storage CSI provisioners in my home lab, and I have to say that Microceph and CephFS are my favorite. With Microk8s, you can enable Ceph storage with Rook, which lets your cluster consume Ceph software-defined storage.

However, you don’t have to be running Microk8s to take advantage of Microceph. As I have written about before, I use Microceph with Docker Swarm as well. So, it is not just a Microk8s technology. Ceph in general is a great HCI storage solution for a wide range of technologies.

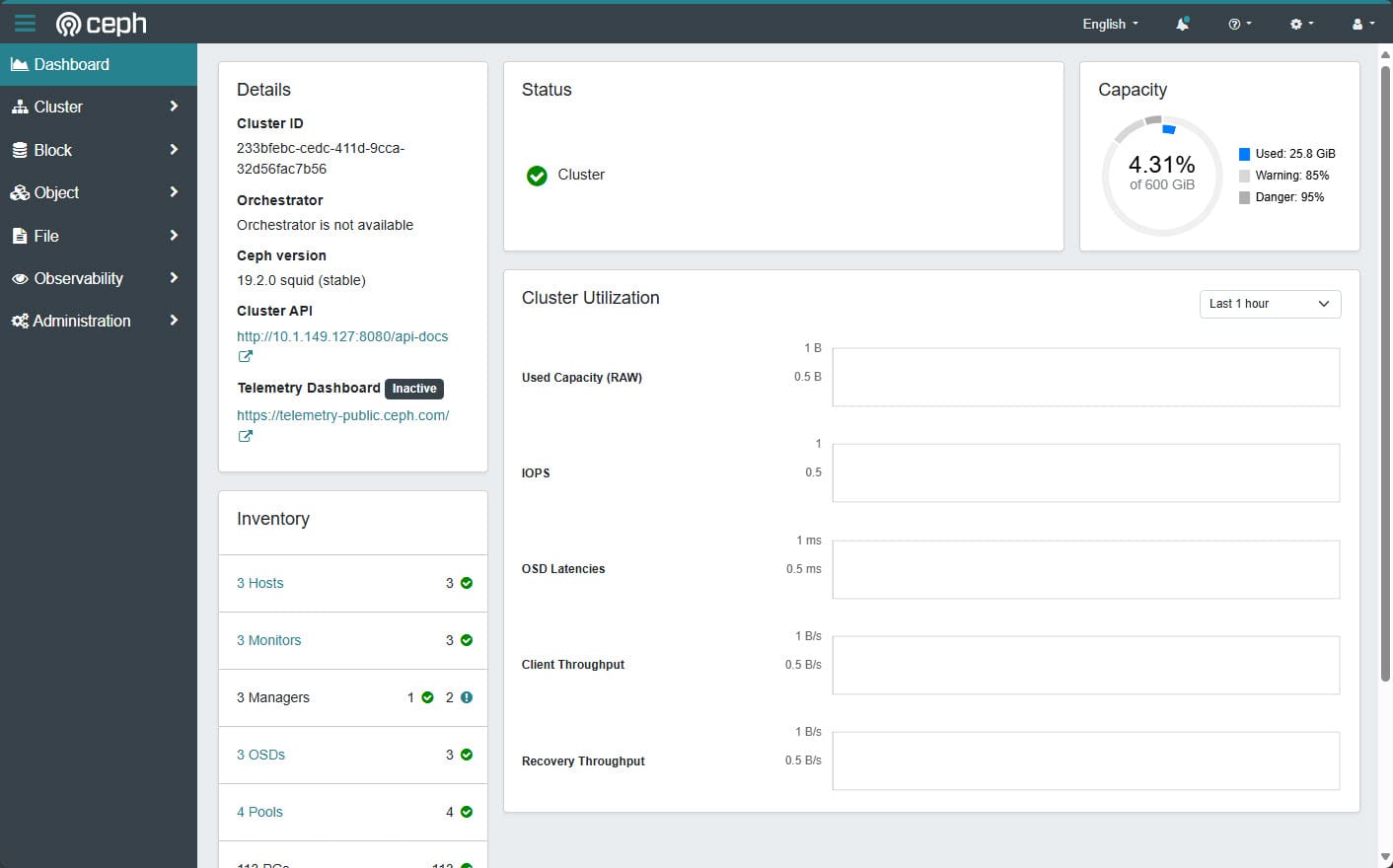

You can also install the Ceph dashboard for GUI monitoring and management.

Pros

- Installed with a simple snap command

- Much lighter weight than full-blown Ceph

- With CephFS, you can directly access the files stored in your Kubernetes volumes

- Makes it easy to migrate from Docker to Kubernetes without needing an intermediary pod to mount storage, etc

- Can do erasure coding with enough nodes

- You can install the Ceph dashboard to have a GUI tool for management

Cons

- Replication takes 3x the space by default, although with enough nodes you can use erasure coding instead

- Ceph itself can be relatively complicated, with OSDs, monitors, etc

- Ceph can be difficult to troubleshoot without experience

- CephFS is another layer on top of Ceph that can add additional complexity
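The replication versus erasure coding trade-off above comes down to simple arithmetic. The sketch below compares usable capacity for a 3x replicated pool and an erasure-coded pool with k data chunks and m coding chunks. The 4-node, 3 TB-per-node cluster is a hypothetical example, and the numbers ignore Ceph's full-ratio headroom and metadata overhead, so treat them as upper bounds.

```python
def usable_replicated(raw_tb: float, replicas: int = 3) -> float:
    """Replicated pool: every object is stored `replicas` times."""
    return raw_tb / replicas


def usable_erasure_coded(raw_tb: float, k: int, m: int) -> float:
    """Erasure-coded pool: each object is split into k data chunks plus m coding chunks."""
    return raw_tb * k / (k + m)


def min_hosts_for_ec(k: int, m: int) -> int:
    """With a host failure domain, each of the k + m chunks must land on a different host."""
    return k + m


# Hypothetical cluster: 4 nodes with 3 TB of OSD disk each.
raw = 4 * 3.0
print(f"3x replication: {usable_replicated(raw):.1f} TB usable")
print(f"EC 2+2: {usable_erasure_coded(raw, 2, 2):.1f} TB usable, "
      f"needs at least {min_hosts_for_ec(2, 2)} hosts")
```

Both layouts in this example survive the loss of two copies or chunks, but 2+2 erasure coding yields 6.0 TB usable instead of 4.0 TB. That is why erasure coding becomes attractive once you have enough nodes.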

2. Synology CSI provider

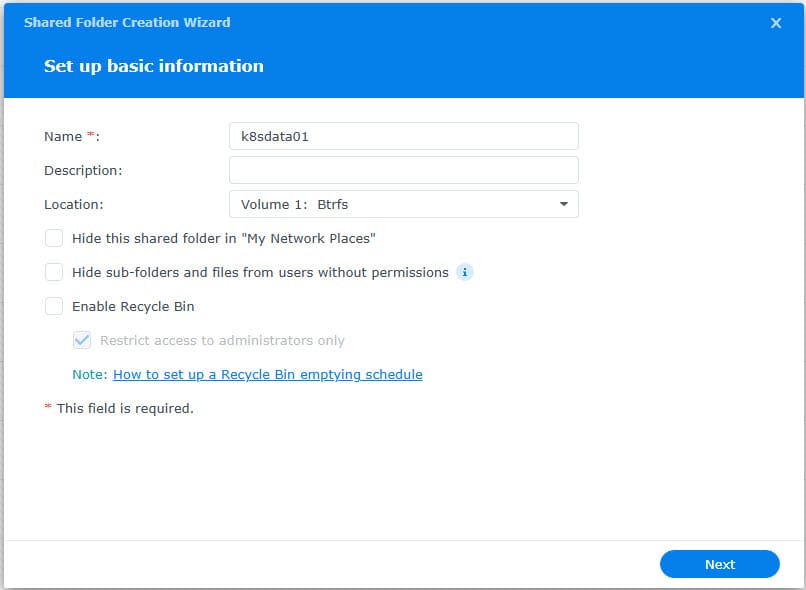

Many home lab enthusiasts are running Synology NAS devices. I have one; it was the first real NAS I purchased for storage in my home lab, and it was a great investment. Synology is well suited for many different storage use cases. But did you know you can use the Synology CSI provider to get a dynamic storage solution for your Kubernetes cluster?

This one makes a lot of sense if you have a Synology NAS. Not so much if you don’t. I wouldn’t just buy a Synology for Kubernetes storage. However, it is one of those things that makes sense if you have already bought into the ecosystem.

Take a look at the project here: SynologyOpenSource/synology-csi.

I found the Synology CSI provider a little bit quirky to install, but that seems to be true of just about every CSI provider for Kubernetes. It always seems harder than it should be. All in all, though, this is one of the easier ones to install.

Pros

- Easier to install than others

- Open source

- Can make use of your existing NAS environment in the home lab

- It supports many different storage protocols, including iSCSI, NFS, and SMB

- You can create ReadWriteMany volumes

Cons

- You have to have a Synology NAS for it to work

- Synology NAS devices are relatively expensive

- I found that it seems to have a bug where you can’t use a subfolder and must create CSI volumes in the root of your volume1 on your Synology NAS
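For reference, here is a sketch of a StorageClass for the Synology CSI driver that places volumes in the root of `/volume1`, in line with the subfolder issue noted in the cons. The provisioner name `csi.san.synology.com` and the `dsm`, `location`, and `protocol` parameters are my reading of the SynologyOpenSource/synology-csi README and should be verified against it. The NAS address is a hypothetical placeholder, and the SMB protocol additionally needs a credentials secret that this sketch leaves out.

```python
import json

VALID_PROTOCOLS = {"iscsi", "smb", "nfs"}


def synology_storage_class(name: str, dsm_ip: str, protocol: str = "iscsi",
                           location: str = "/volume1") -> dict:
    """StorageClass for the Synology CSI driver (parameter names assumed; verify in the README)."""
    if protocol not in VALID_PROTOCOLS:
        raise ValueError(f"unsupported protocol: {protocol}")
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "csi.san.synology.com",
        "parameters": {
            "dsm": dsm_ip,         # DSM address, matching the driver's client-info config
            "location": location,  # volume root, since subfolders did not work (see cons)
            "protocol": protocol,
        },
        "reclaimPolicy": "Retain",
        "allowVolumeExpansion": True,
    }


# Hypothetical NAS address on a home lab network.
sc = synology_storage_class("synology-iscsi", "192.168.1.10")
print(json.dumps(sc, indent=2))
```

`reclaimPolicy: Retain` is a cautious choice for a home lab: deleting a claim leaves the LUN or share on the NAS until you clean it up yourself.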

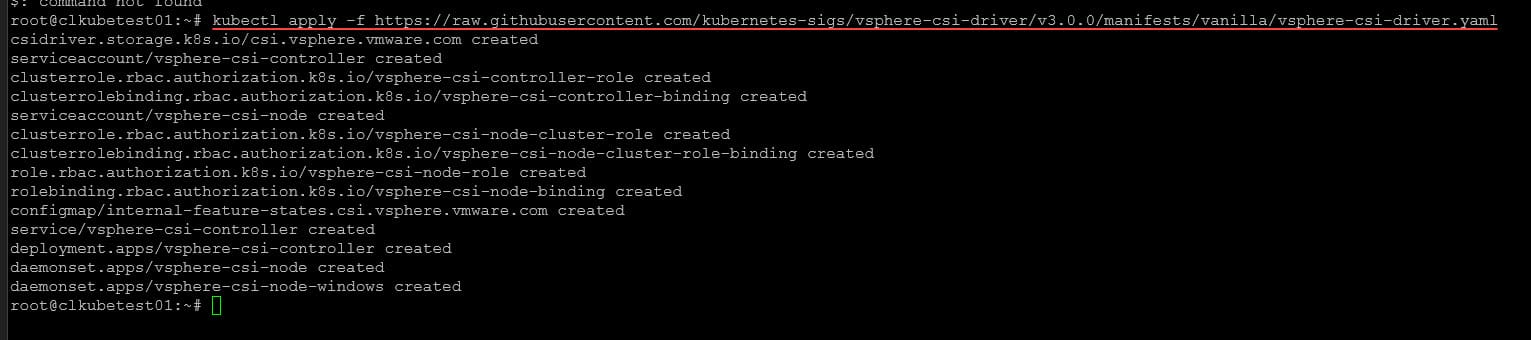

3. vSphere CSI provider

If you are still running VMware vSphere in your home lab and your Kubernetes cluster nodes run as vSphere VMs, the vSphere CSI storage provider is a well-integrated solution. It allows your Kubernetes cluster to dynamically provision pod storage on a VMware vSphere datastore.

Also, you don’t have to run VMware vSAN to use it. A traditional VMFS datastore on iSCSI storage, or an NFS datastore, works as well. One downside that I hate, though, is that you can’t create ReadWriteMany volumes unless you are running vSAN File Services (vSAN NFS file shares).

Take a look at the project here: kubernetes-sigs/vsphere-csi-driver: vSphere storage Container Storage Interface (CSI) plugin.

Pros

- Works well with VMware vSphere

- Doesn’t require vSAN, at least for ReadWriteOnce volumes

- Free to use with VMware vSphere

- You have visibility over your CSI storage in the vSphere Client GUI

Cons

- Documentation is outdated with old references to image repos, etc

- Fairly complicated to install due to the outdated documentation (it sucks)

- Can’t do ReadWriteMany volumes without vSAN, only ReadWriteOnce
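Here is a sketch of a vSphere CSI StorageClass that places volumes using a vCenter storage policy via the `storagepolicyname` parameter, plus a claim builder that encodes the ReadWriteOnce limitation described above. The policy name `homelab-vmfs-policy` is hypothetical (you would create it in vCenter first), and the `vsan_file_services` flag is an illustrative guard of my own, not part of the driver.

```python
import json


def vsphere_storage_class(name: str, policy: str) -> dict:
    """StorageClass for the vSphere CSI driver; volumes are placed by a vCenter storage policy."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "csi.vsphere.vmware.com",
        "parameters": {"storagepolicyname": policy},
    }


def vsphere_pvc(name: str, sc_name: str, size: str,
                access_mode: str = "ReadWriteOnce",
                vsan_file_services: bool = False) -> dict:
    """Build a PVC, refusing ReadWriteMany unless vSAN File Services backs the class."""
    if access_mode == "ReadWriteMany" and not vsan_file_services:
        raise ValueError("ReadWriteMany needs vSAN File Services; use ReadWriteOnce")
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "storageClassName": sc_name,
            "resources": {"requests": {"storage": size}},
        },
    }


# "homelab-vmfs-policy" is a hypothetical policy you would create in vCenter first.
sc = vsphere_storage_class("vsphere-vmfs", "homelab-vmfs-policy")
claim = vsphere_pvc("db-data", sc["metadata"]["name"], "20Gi")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [sc, claim]}, indent=2))
```

Catching the access mode mismatch up front is friendlier than a claim that sits in `Pending` because the driver cannot satisfy it.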

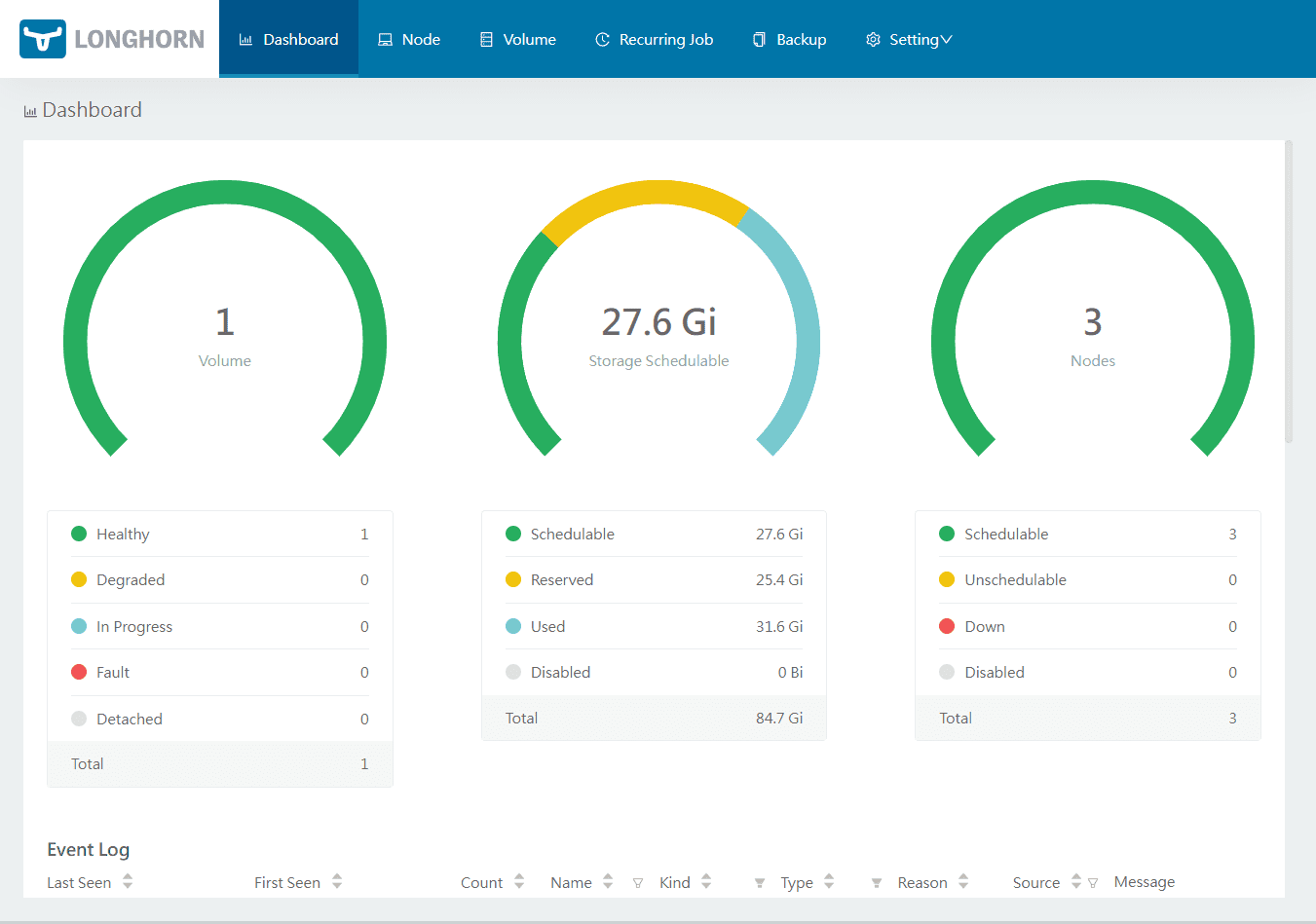

4. Longhorn

Another really cool Kubernetes CSI storage solution is Longhorn. I had my first experience with Longhorn when installing Kubernetes nodes with Rancher orchestration. I really like Rancher, and it makes installing Longhorn super easy as well.

However, you don’t need Rancher to run Longhorn. What is it exactly? It is a distributed block storage system designed for Kubernetes. It gives you a software-defined storage solution with data redundancy, snapshots, backups, and other DR features built in out of the box. It is open source and a Cloud Native Computing Foundation (CNCF) project.

Check out the project here: https://longhorn.io/.

Pros

- Open source

- It has backups and replication built in

- It has snapshots and volume resizing capabilities

- Built-in UI management

Cons

- Storage overhead: with the default of three replicas, it writes 3x the space

- It doesn’t scale easily to large deployments

- Performance directly relates to the quality and speed of the network
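If the 3x overhead is a concern, Longhorn lets you set the replica count per StorageClass. The sketch below builds a StorageClass with the `driver.longhorn.io` provisioner and the `numberOfReplicas` and `staleReplicaTimeout` parameters, and computes the worst-case raw space a full volume consumes. The class name and the 2-replica choice are hypothetical examples; fewer replicas trade redundancy for capacity.

```python
import json


def longhorn_storage_class(name: str, replicas: int = 3) -> dict:
    """Longhorn StorageClass; numberOfReplicas sets how many copies of each volume exist."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "driver.longhorn.io",
        "allowVolumeExpansion": True,
        "reclaimPolicy": "Delete",
        "parameters": {
            # StorageClass parameters are strings, so numbers are quoted.
            "numberOfReplicas": str(replicas),
            "staleReplicaTimeout": "2880",  # minutes before a failed replica is cleaned up
        },
    }


def raw_space_gib(volume_gib: int, replicas: int) -> int:
    """Raw disk consumed across the cluster once the volume is full."""
    return volume_gib * replicas


sc = longhorn_storage_class("longhorn-2-replicas", replicas=2)
print(json.dumps(sc, indent=2))
print(raw_space_gib(50, 3), "GiB raw for a full 50 GiB volume at 3 replicas")
print(raw_space_gib(50, 2), "GiB raw at 2 replicas")
```

Each replica also has to be kept in sync over the network, which is why Longhorn's performance tracks the quality of your network as noted above.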

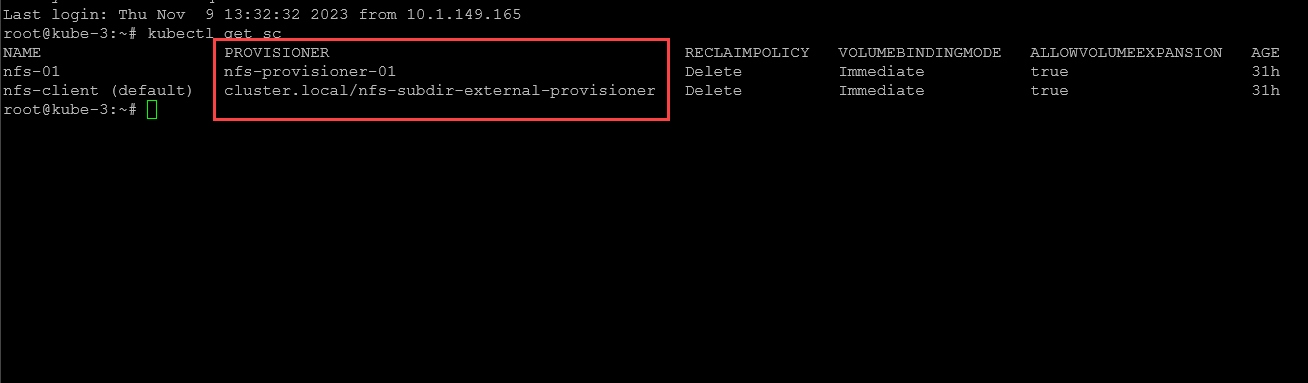

5. NFS Subdir Provisioner

This is another open-source project that I have had good success with in provisioning storage using an NFS connection. Also, with the NFS Subdir provisioner, you don’t have to have a specific type of NAS, unlike the Synology CSI provider.

With the NFS Subdir Provisioner, you can use any NAS or other storage that provides the NFS storage protocol. This provides a free and easy way to provision storage on anything that supports NFS.

Take a look at the project here: kubernetes-sigs/nfs-subdir-external-provisioner: Dynamic sub-dir volume provisioner on a remote NFS server.

Pros

- Open source

- Easy to configure

- Can take advantage of any storage that can serve storage over NFS

Cons

- The project has had minimal contributions over the past several months at the time of this writing

- Limited to NFS

- No GUI or other management tools beyond your NAS or NFS server’s own tools
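Because there is no GUI, it helps to know where your data lands on the NFS share. The project's README documents the default directory naming as `${namespace}-${pvcName}-${pvName}`, and the sketch below reproduces that alongside a StorageClass using the `archiveOnDelete` parameter. The provisioner name must match the `PROVISIONER_NAME` environment variable of your provisioner deployment; the value here is the one in the project's deploy manifests, and a Helm install may register a different name. The namespace, claim, and PV names are hypothetical.

```python
import json

# Must match PROVISIONER_NAME in the provisioner's deployment; this is the value in the
# project's deploy/ manifests, and a Helm install may register a different name.
PROVISIONER = "k8s-sigs.io/nfs-subdir-external-provisioner"


def nfs_storage_class(name: str, archive_on_delete: bool = True) -> dict:
    """StorageClass for nfs-subdir-external-provisioner."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": PROVISIONER,
        # "false" deletes the directory with the PVC; otherwise it is archived, not removed.
        "parameters": {"archiveOnDelete": "true" if archive_on_delete else "false"},
    }


def backing_dir(namespace: str, pvc_name: str, pv_name: str) -> str:
    """Default directory created on the NFS export: ${namespace}-${pvcName}-${pvName}."""
    return f"{namespace}-{pvc_name}-{pv_name}"


print(json.dumps(nfs_storage_class("nfs-client", archive_on_delete=False), indent=2))
# Hypothetical PV name; Kubernetes generates names like pvc-<uuid>.
print(backing_dir("media", "plex-config", "pvc-0a1b2c3d"))
```

Knowing the naming pattern means you can browse, back up, or copy a volume's files straight from your NAS.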

Wrapping up

Hopefully, this list of CSI providers will help you settle on one for your home lab. I encourage you to try them all, along with others that I have not included on this list, since what is right for one person may not be right for someone else. Options are a good thing. Some of you may not have a Synology NAS, and many may not be running vSphere, so those two wouldn’t be good options for you.

The Ceph-based storage is my personal overall pick. You can run it on just about anything, and CephFS on top of Ceph gives you simple file storage that you can navigate, browse, copy, etc. This makes it super easy to move from Docker to Kubernetes if you have persistent volume data in Docker that you want to bring over.