Home Lab Tour 2024: Hardware, Software, Containers and More!

Well, what a year it has been! Wow, I can’t believe how many changes we have seen this year, along with the bumps in the road. But here we are at the end of 2024. Before the video comes out, I want to take you through my end-of-2024 home lab tour in written form and show you what I am running in the lab for my critical home lab services.


Hardware Overview

So, what have I settled on running by the end of the 2024 home lab season? Well, it has been all about mini PCs for me in the home lab this year. I finally decommissioned my Supermicro servers, which served me well for several years, and I am now running my critical workloads on Minisforum MS-01 mini PCs with 10 gig uplinks. Below is an overview of the hardware I am currently running in the lab, including mini PCs, storage, and networking. I will go into detail in the sections below.

Mini PCs

vSphere Cluster

- (2) Minisforum MS-01 (VMware vSphere Cluster)

- i9-13900H procs

- 96 GB

- NVMe memory tiering

- 2 TB of internal storage and a single datastore

- (1) F8 SSD Plus NVMe NAS

- 9 TB of usable NVMe storage

- 32 GB of memory

Standalone hosts:

- Asus NUC 14 Pro – Standalone Proxmox VE Server

- 32 GB of RAM

- 2TB of NVMe storage

- GMKtec NUCbox10 – Standalone VMware ESXi host

- Ryzen 7 5800U CPU

- 64 GB of RAM

- 2 TB of NVMe storage

- Trigkey – Standalone VMware ESXi host

- AMD Ryzen 7 7840HS w/ Radeon 780M Graphics

- 32 GB of RAM

- 2 TB of NVMe storage

Single Board Computers

- Raspberry Pi 3 – monitors the generator

- Raspberry Pi 4 – used for the home lab kiosk dashboard

- Raspberry Pi 5 – used for testing and playing around at this point

Storage

- Synology DS1625xs Plus – 25 TB of usable storage

- Terramaster F4-424 Max – 15 TB of usable storage

- Terramaster F8 Plus NVMe – (mentioned earlier) with 10 TB usable

Networking

- Firewall – Palo Alto PA440

- Top of rack 10 GbE – Ubiquiti EdgeSwitch 16 XG

- Client Edge and PoE – Ubiquiti 48-port 2.5 GbE switch

Mini PC obsession

This year has been all about my obsession with mini PCs and the mini PC hardware coming onto the market. Thanks to the YouTube channel, I have also had the opportunity to receive several mini PCs from various vendors. This has allowed me to try out several different models to see how they work with various hypervisors, including VMware vSphere and Proxmox, and I even toyed around with Nutanix Community Edition this year.

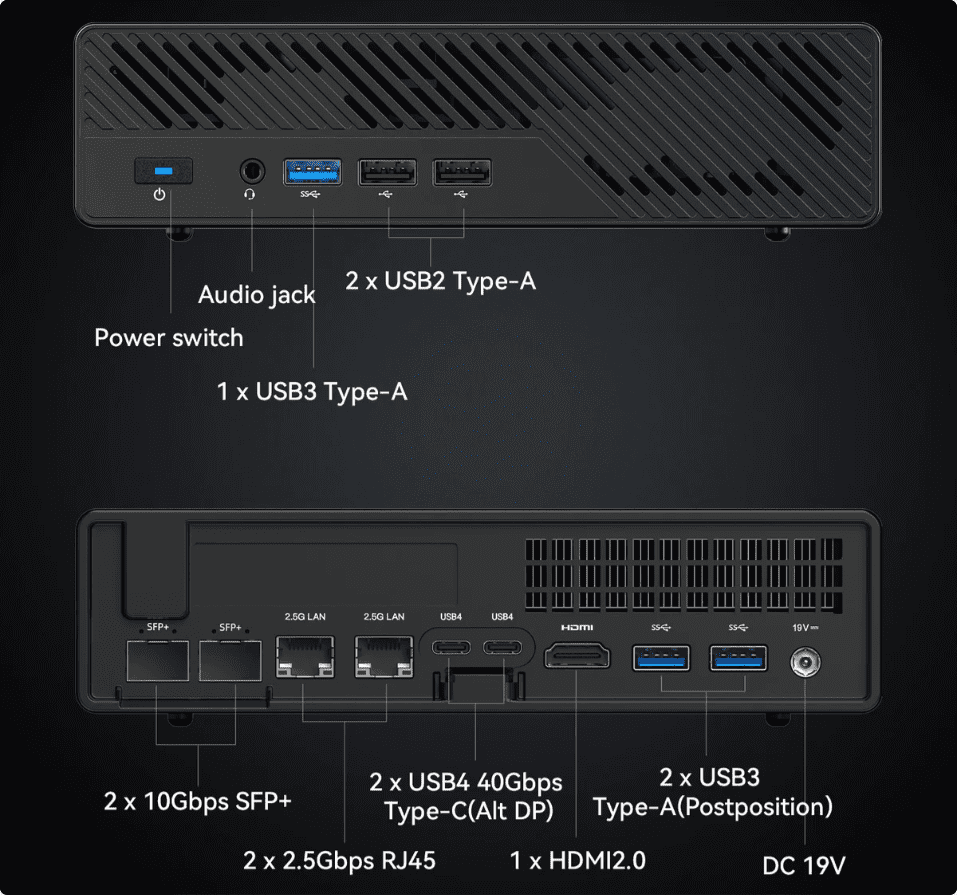

However, the one that was never outshone in 2024 was the Minisforum MS-01. In my honest opinion, there just hasn’t been a real contender when it comes to an all-around great mini PC. It has everything you would want, including 10 GbE connectivity, 2.5 GbE connectivity, Thunderbolt, a PCIe slot, and the choice between a Core i9-12900H and i9-13900H processor.

A look at the MS-01 front view.

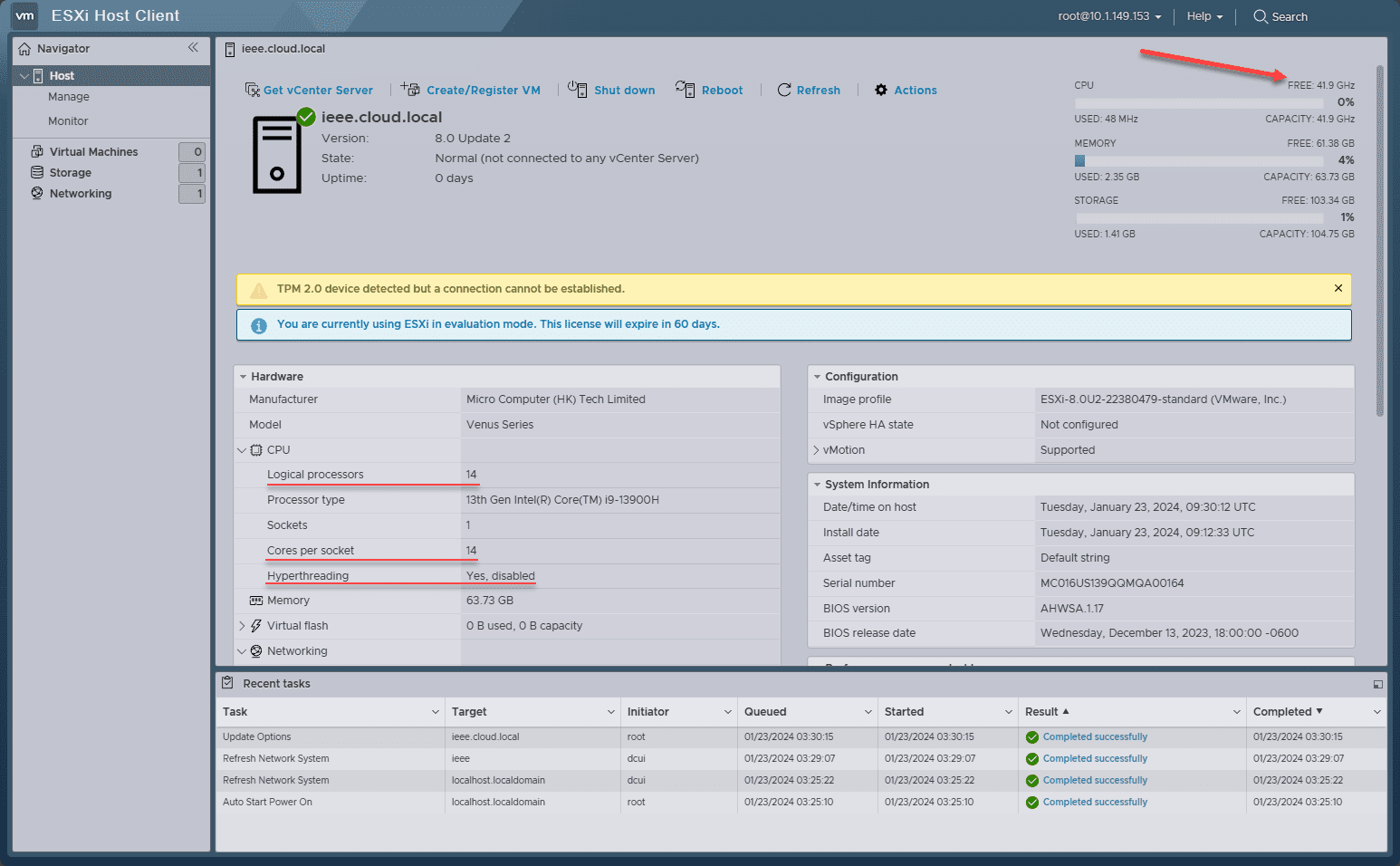

Below is a look at the CPU information in the host client with VMware ESXi.

This has been the go-to platform for my home lab this year, and it has served me very well since I moved off the Supermicro servers. It lacks some of the enterprise features I would like to have, such as a built-in IPMI module, but all in all it gets the job done, and I can live without some of the bells and whistles I had with the Supermicros.

Storage

This year, I have been able to try out some really great products from Terramaster, a brand I hadn’t had my hands on before. One of the models I now have in the home lab is my first all-NVMe NAS. The F8 SSD Plus is a great unit: although it operates at PCIe Gen 3, it still has enough performance to run multiple virtual machines in my lab environment.

I have connected the F8 SSD Plus to my 2-node Minisforum MS-01 cluster and use its NVMe storage as backing storage for the virtual machines running in the environment.

With the Terramaster F4-424 Max, I am starting to play around with ideas for integrating the additional storage I now have in the home lab.

Networking

My networking stack in 2024 hasn’t changed much since 2023. I am still using my Ubiquiti EdgeSwitch 16 XG as the top-of-rack switch for my 10 GbE uplinks. I have 10GBASE-T connections via base-T modules, and I am also using Twinax cables from servers into the top-of-rack switch. Twinax is how my Minisforum MS-01s are uplinked to the network.

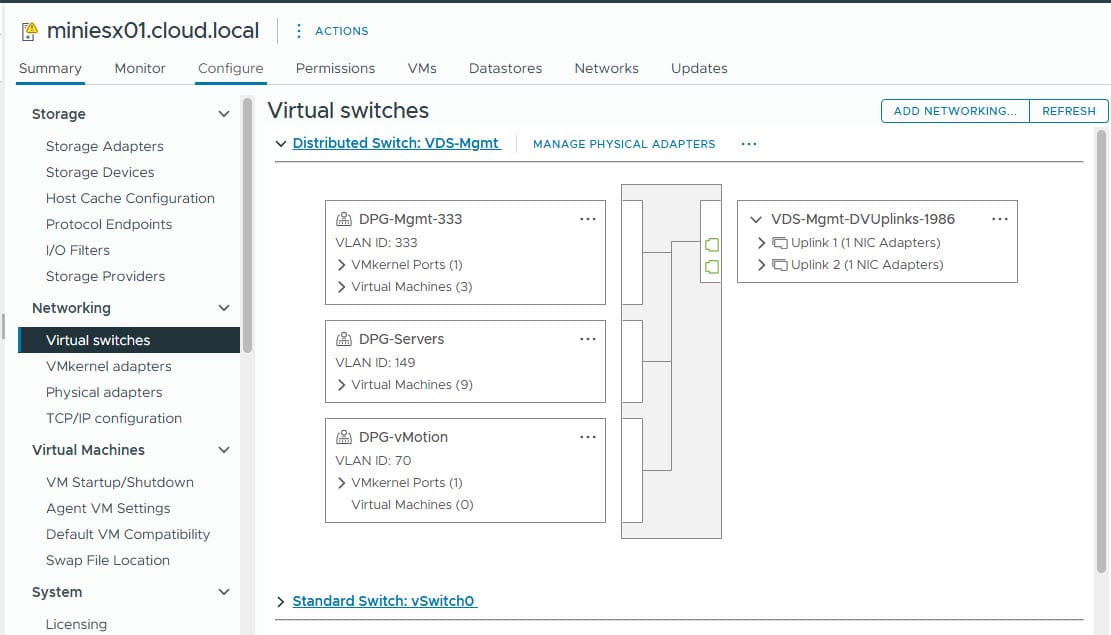

Both uplinks on each MS-01 are plumbed into this switch, which backs my vSphere Distributed Switch, since I am using a converged networking model for my virtual networks and traffic.

For client and 2.5 GbE server connections, I have a Ubiquiti 48-port 2.5 GbE switch uplinking these devices. This switch has been a great addition to the home lab and currently provides plenty of ports for my needs.

Hypervisors

This is where things have changed the most in my home lab in 2024. As we all know, the fallout from the VMware by Broadcom licensing changes and other not-so-good news, like VMUG now requiring certification for licenses, is leading many to consider their options, myself included.

However, for the time being, I am still running my production workloads on my 2-node VMware vSphere cluster. I am definitely getting more familiar with Proxmox and am loving the new features added since the release of Proxmox VE Server 8.x.

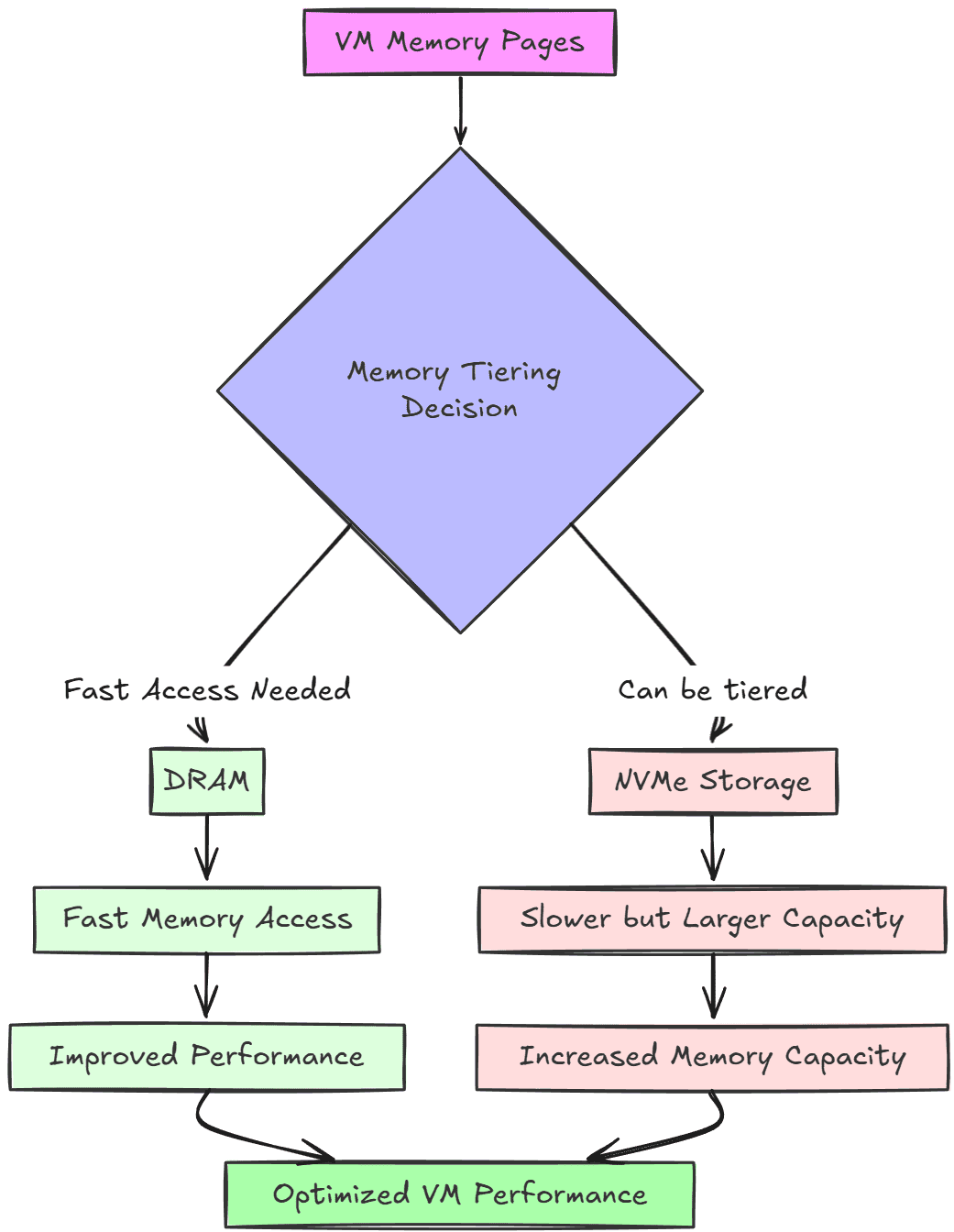

The one feature keeping me with VMware for the time being is NVMe memory tiering. I am using it in my vSphere cluster to go from 96 GB of installed physical RAM to over 470 GB of usable memory, with the extra capacity coming from the tiered space on the NVMe drive.
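To put the tiering numbers in perspective, here is a rough sizing sketch in Python. It assumes the NVMe tier is sized as a percentage of installed DRAM through the ESXi advanced setting Mem.TierNvmePct (default 25 and, as far as I know, capped at 400); check the documentation for your ESXi build before relying on those limits. The function name is just for illustration.

```python
# Rough sizing for ESXi NVMe memory tiering.
# ASSUMPTION: the NVMe tier is a percentage of installed DRAM, set via the
# advanced setting Mem.TierNvmePct (default 25, assumed maximum 400).

def tiered_memory_gb(dram_gb: float, nvme_pct: int) -> float:
    """Return DRAM plus the NVMe tier, in GB, for a given tier percentage."""
    if not 0 < nvme_pct <= 400:
        raise ValueError("nvme_pct must be between 1 and 400")
    nvme_tier_gb = dram_gb * nvme_pct / 100  # tier size relative to DRAM
    return dram_gb + nvme_tier_gb


# Compare a few tier percentages for a host with 96 GB of DRAM.
for pct in (25, 100, 400):
    print(f"{pct:>3}% tier: {tiered_memory_gb(96, pct):.0f} GB addressable")
```

At the 400% setting, 96 GB of DRAM works out to 480 GB addressable, which is in the same ballpark as the 470 GB+ figure above.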

Below is an overview of memory tiering feature in VMware ESXi:

Proxmox is still looking better and better, especially since I am becoming less reliant on the underlying hypervisor as more of my focus shifts to containers and container orchestration.

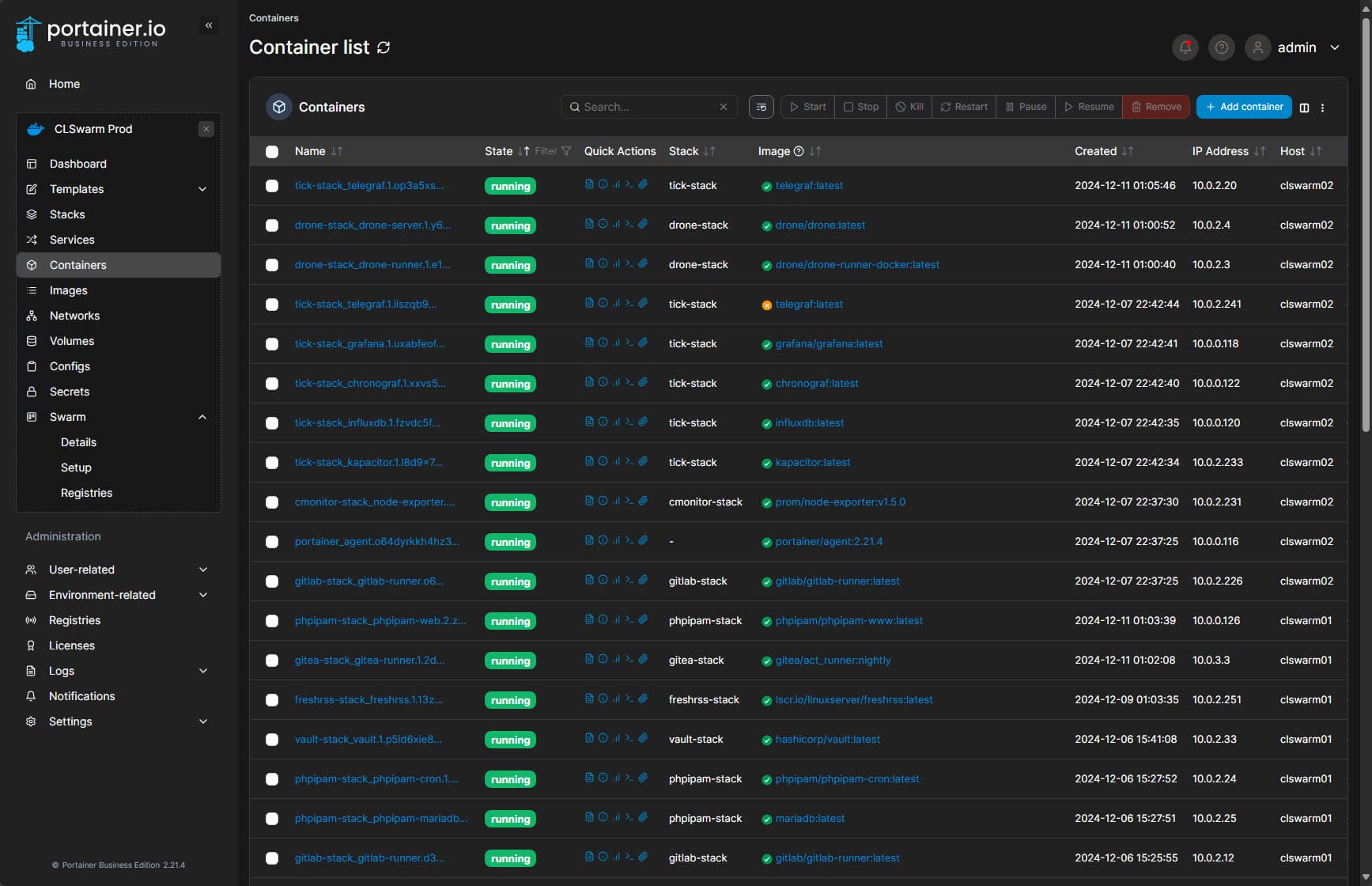

Containers and Container Orchestration

For me, 2024 has been all about container orchestration, and I haven’t looked back. Containers are awesome, and they let you do so many things really well, like spinning up new applications and environments quickly and easily.

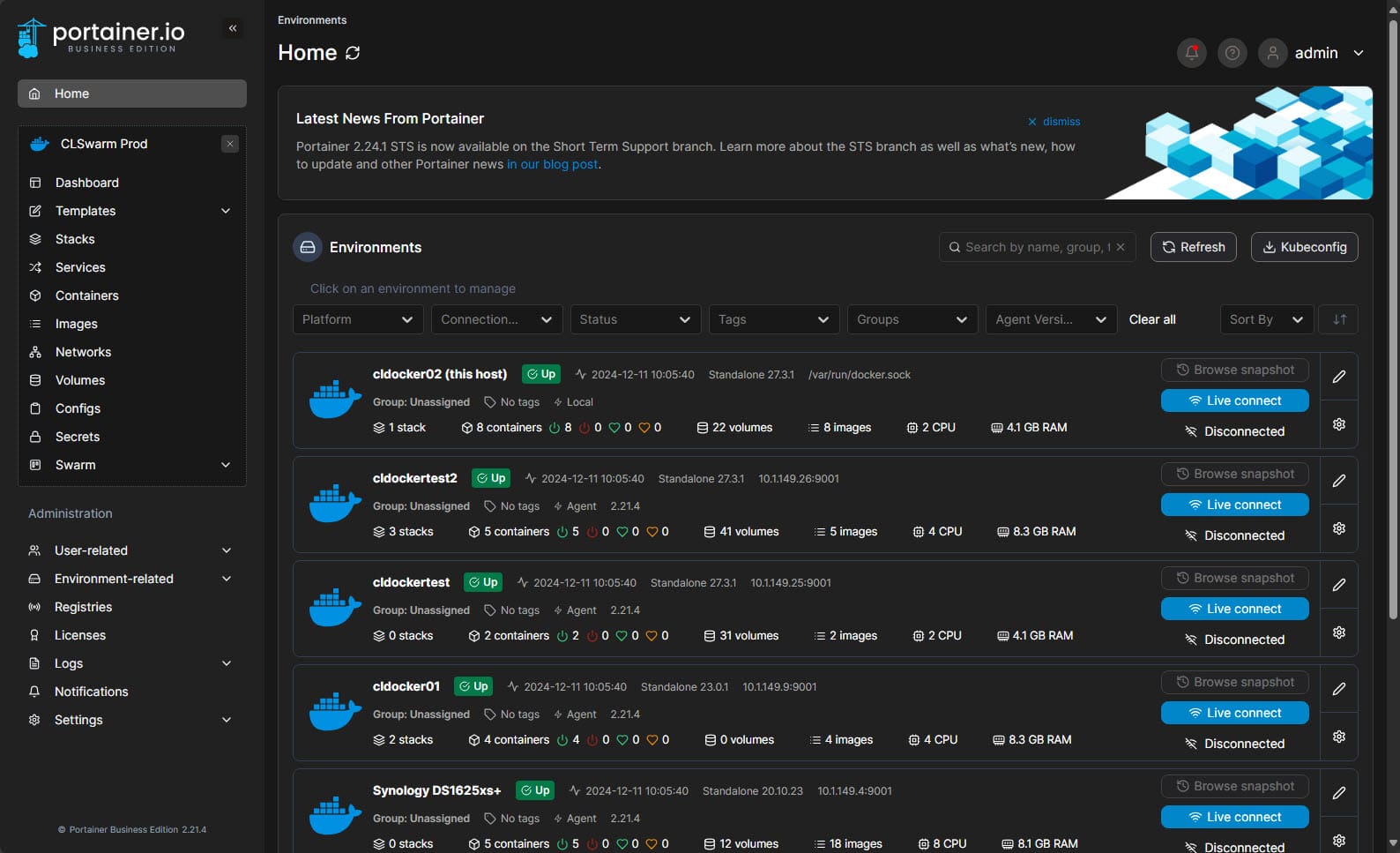

In terms of container orchestration, I made a big move this year away from standalone Docker hosts toward orchestration with Docker Swarm and Kubernetes. However, the big surprise for me when trying both in the home lab was just how awesome Docker Swarm still is.

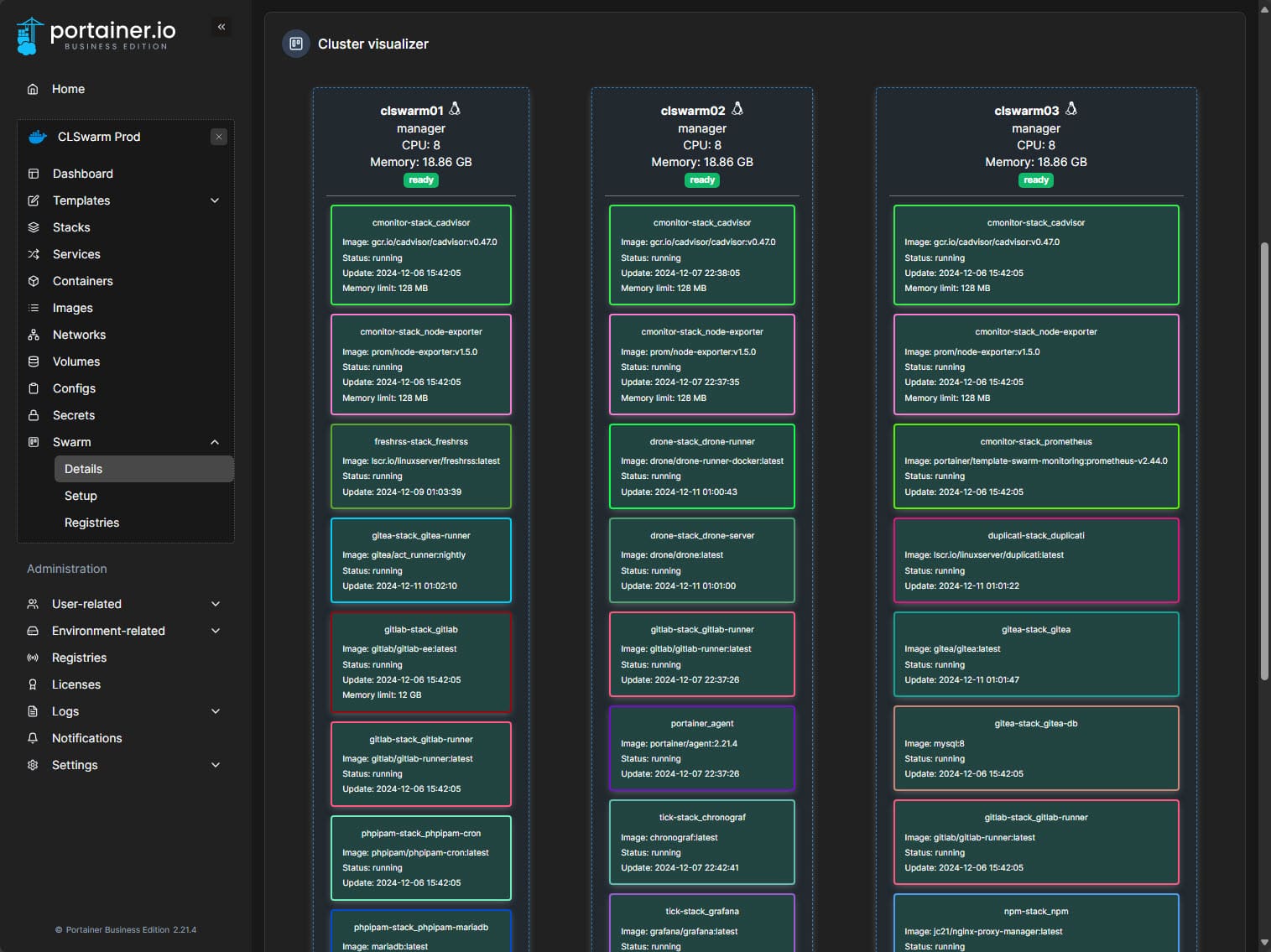

Docker Swarm

When you Google Docker Swarm and read what many people say about it, you will always hear, “it is old, it is deprecated, no one wants to use Docker Swarm.” But that is really not true. Docker Swarm is still a very viable, supported solution for container orchestration.

What I found with Docker Swarm is:

- It is super easy to stand up

- It uses all the same Docker Compose code that we know (with a few minor mods)

- It uses most, if not all, of the same Docker commands, along with the docker service command

- It allows you to have high availability for your Docker containers

- Using Portainer, you have an awesome GUI tool that gives you an overview of your containers, lets you drain or pause hosts, and helps you keep an eye on things
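To give a feel for what the "few minor mods" usually look like, here is a hypothetical Python helper (to_swarm_service is not a Docker tool) that adjusts a parsed Compose service for docker stack deploy. It assumes the behavior described in the Compose file v3 reference: build, container_name, depends_on, and the top-level restart key are ignored in swarm mode, and restart behavior moves under deploy.restart_policy. The Pi-hole service is an illustrative example, not a copy of my actual stack.

```python
import copy
import json

# Compose keys that `docker stack deploy` ignores in swarm mode.
IGNORED_IN_SWARM = ("build", "container_name", "depends_on")

# Compose `restart` values mapped to deploy.restart_policy.condition values.
RESTART_TO_CONDITION = {
    "no": "none",
    "always": "any",
    "unless-stopped": "any",
    "on-failure": "on-failure",
}


def to_swarm_service(service: dict, replicas: int = 1) -> dict:
    """Return a copy of a Compose service adjusted for `docker stack deploy`."""
    svc = copy.deepcopy(service)  # leave the original definition untouched
    for key in IGNORED_IN_SWARM:
        svc.pop(key, None)

    deploy = svc.setdefault("deploy", {})
    # Replica counts only apply to replicated (not global) services.
    if deploy.get("mode", "replicated") == "replicated":
        deploy.setdefault("replicas", replicas)

    # Swarm ignores top-level `restart`; express it as a restart_policy instead.
    restart = svc.pop("restart", None)
    if restart is not None:
        name, _, attempts = restart.partition(":")  # e.g. "on-failure:3"
        policy = {"condition": RESTART_TO_CONDITION[name]}
        if attempts:
            policy["max_attempts"] = int(attempts)
        deploy.setdefault("restart_policy", policy)
    return svc


# Hypothetical example service, not an actual stack file.
pihole = {
    "image": "pihole/pihole:latest",
    "container_name": "pihole",
    "restart": "unless-stopped",
    "ports": ["53:53/udp", "80:80/tcp"],
}
print(json.dumps({"services": {"pihole": to_swarm_service(pihole)}}, indent=2))
```

The result is printed as JSON for brevity; in practice you would write it back out as YAML and deploy it with docker stack deploy -c <file> <stack-name>.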

This is currently the configuration that I have running the bulk of my home lab services.
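On the high-availability point: Swarm managers keep the cluster state in sync using the Raft consensus algorithm, and Docker's documentation notes that N managers tolerate the loss of at most (N-1)/2 of them. The small Python sketch below just does that arithmetic; the manager counts are illustrative.

```python
from typing import Tuple


def manager_fault_tolerance(managers: int) -> Tuple[int, int]:
    """Return (quorum size, managers that can fail) for a Raft-based Swarm."""
    if managers < 1:
        raise ValueError("a swarm needs at least one manager")
    quorum = managers // 2 + 1           # strict majority must stay reachable
    tolerated = (managers - 1) // 2      # failures that still leave a majority
    return quorum, tolerated


for n in (1, 2, 3, 5, 7):
    q, f = manager_fault_tolerance(n)
    print(f"{n} managers: quorum {q}, tolerates {f} failure(s)")
```

This is why an odd number of managers is the usual recommendation: 2 managers tolerate no more failures than 1, while 3 managers tolerate one.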

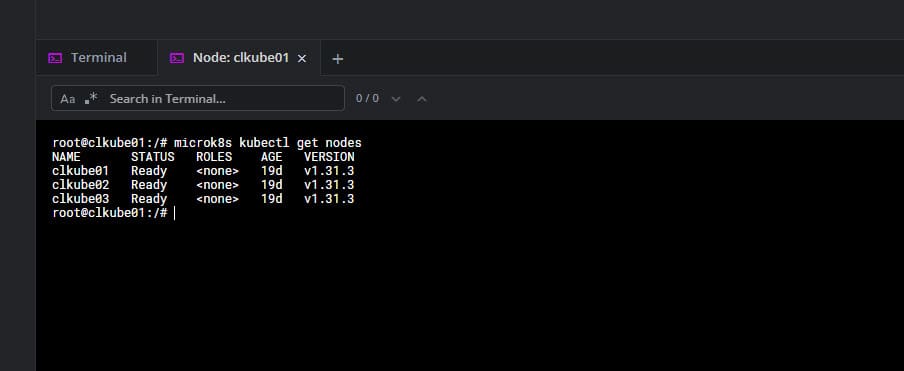

Kubernetes

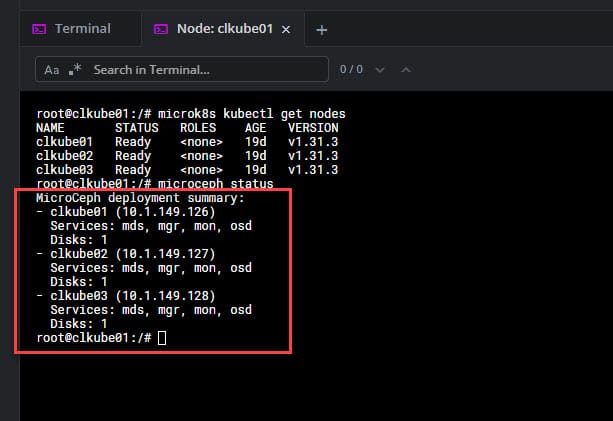

I dove headfirst into Kubernetes this year, more than in previous years, and experimented with many different Kubernetes distributions. The one I have settled on for now is Microk8s, since it integrates tightly with storage solutions like Microceph, and both are packaged by Canonical.

Both are billed as production-ready and lightweight. Microk8s also makes it easy to add add-ons like Traefik, MetalLB, and others; each one is just a simple enable command using the microk8s command line.
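As a sketch of that enable workflow, the Python below only builds and prints microk8s enable commands by default (set dry_run=False to actually run them on a node). The addon:argument form matches how the MetalLB add-on takes its address range, but the range here is hypothetical and add-on names vary between Microk8s releases, so confirm them with microk8s status first. The helper names are illustrative.

```python
import ipaddress
import shlex
import subprocess
from typing import List, Optional


def metallb_range(start: str, end: str) -> str:
    """Validate an IPv4 range and format it as 'start-end' for the MetalLB add-on."""
    first, last = ipaddress.IPv4Address(start), ipaddress.IPv4Address(end)
    if first > last:
        raise ValueError("range start must not come after range end")
    return f"{first}-{last}"


def microk8s_enable_cmd(addon: str, arg: Optional[str] = None) -> List[str]:
    """Build the argv for `microk8s enable <addon>` or `microk8s enable <addon>:<arg>`."""
    return ["microk8s", "enable", f"{addon}:{arg}" if arg else addon]


def enable(addon: str, arg: Optional[str] = None, dry_run: bool = True) -> None:
    """Print the command (dry run) or actually run it on a Microk8s node."""
    cmd = microk8s_enable_cmd(addon, arg)
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)


# Hypothetical address pool reserved for LoadBalancer services.
enable("metallb", metallb_range("192.168.1.240", "192.168.1.250"))
enable("dns")
```

Keeping the dry run as the default means you can review exactly what will be executed before pointing it at a cluster.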

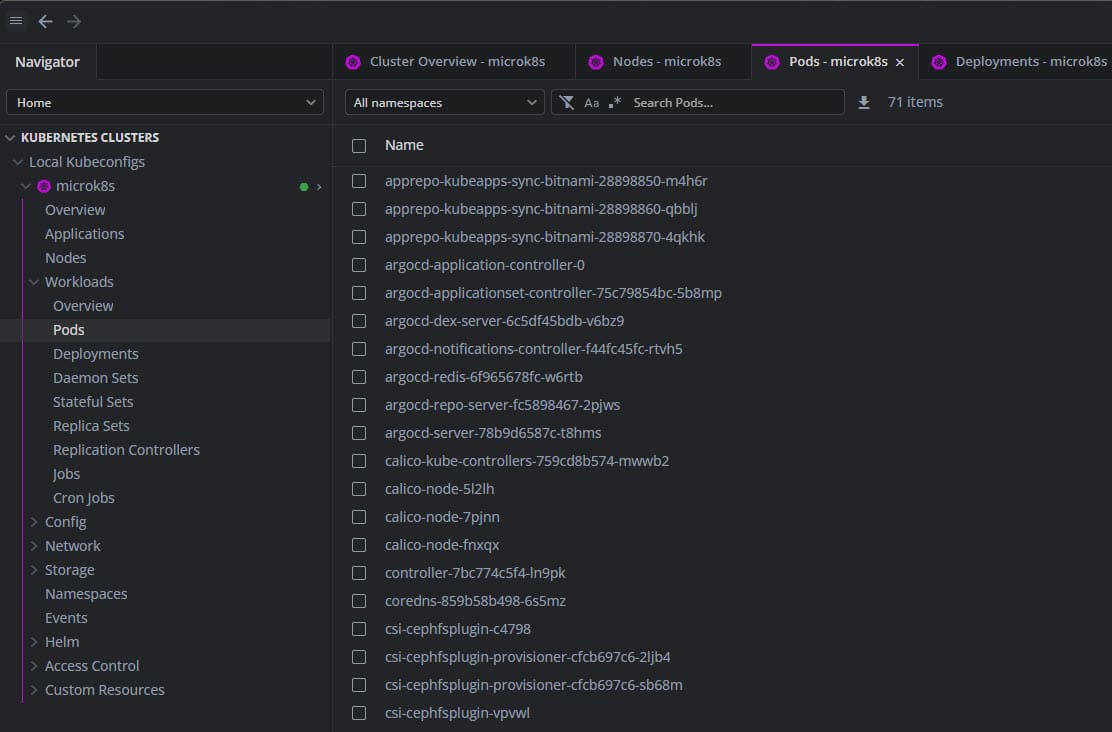

Below is a look at my 3-node Microk8s cluster running v1.31.3 at the time of this writing.

Viewing pods in the Lens utility.

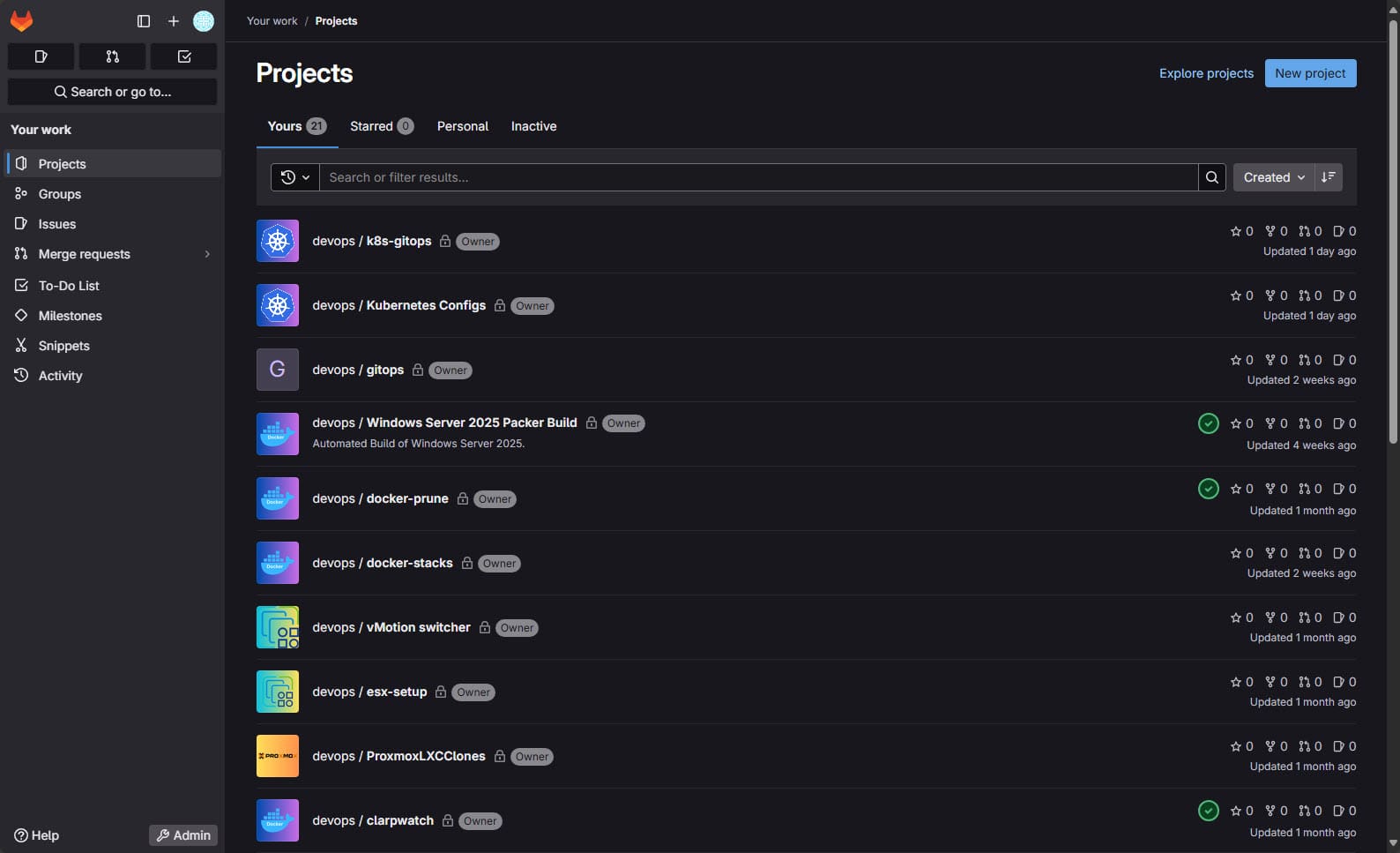

I have been getting into GitOps in a big way this year as well: everything lives in code, and applications are deployed from code in a Git repository. One of the benefits is that updates can be fully automated. See my recent post here: FluxCD How to Modify Kustomize Image Tag.
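The core GitOps move behind an image-tag update is small: change the tag in Git and let the controller reconcile. Here is a minimal Python sketch of that edit on a kustomization that has already been parsed into a dict (for example with PyYAML, which is not included here). The set_image_tag helper and the tags are hypothetical; Flux can also automate this step with its image automation controllers.

```python
from typing import Any, Dict


def set_image_tag(kustomization: Dict[str, Any], image: str, new_tag: str) -> bool:
    """Pin `image` to `new_tag` in a Kustomize `images:` list.

    Returns True when the document changed, i.e. when a commit is needed.
    """
    images = kustomization.setdefault("images", [])
    for entry in images:
        if entry.get("name") == image:
            if entry.get("newTag") == new_tag:
                return False  # already at the desired tag; nothing to commit
            entry["newTag"] = new_tag
            return True
    # Image not listed yet: add an override entry for it.
    images.append({"name": image, "newTag": new_tag})
    return True


# Hypothetical kustomization.yaml, already parsed into a dict.
kustomization = {
    "apiVersion": "kustomize.config.k8s.io/v1beta1",
    "kind": "Kustomization",
    "resources": ["deployment.yaml"],
    "images": [{"name": "freshrss/freshrss", "newTag": "1.24.0"}],
}
changed = set_image_tag(kustomization, "freshrss/freshrss", "1.24.3")
print(changed, kustomization["images"])
```

Returning False when nothing changed makes it easy to skip empty commits in an automation job.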

Software-defined storage

For several years, I have been invested in VMware vSAN as my software-defined storage solution of choice. VMware vSAN has been rock solid for me running virtual machine workloads and Docker hosts, and I even had a Tanzu Kubernetes deployment running for quite a while. However, I wanted to step further outside my comfort zone with software-defined storage solutions.

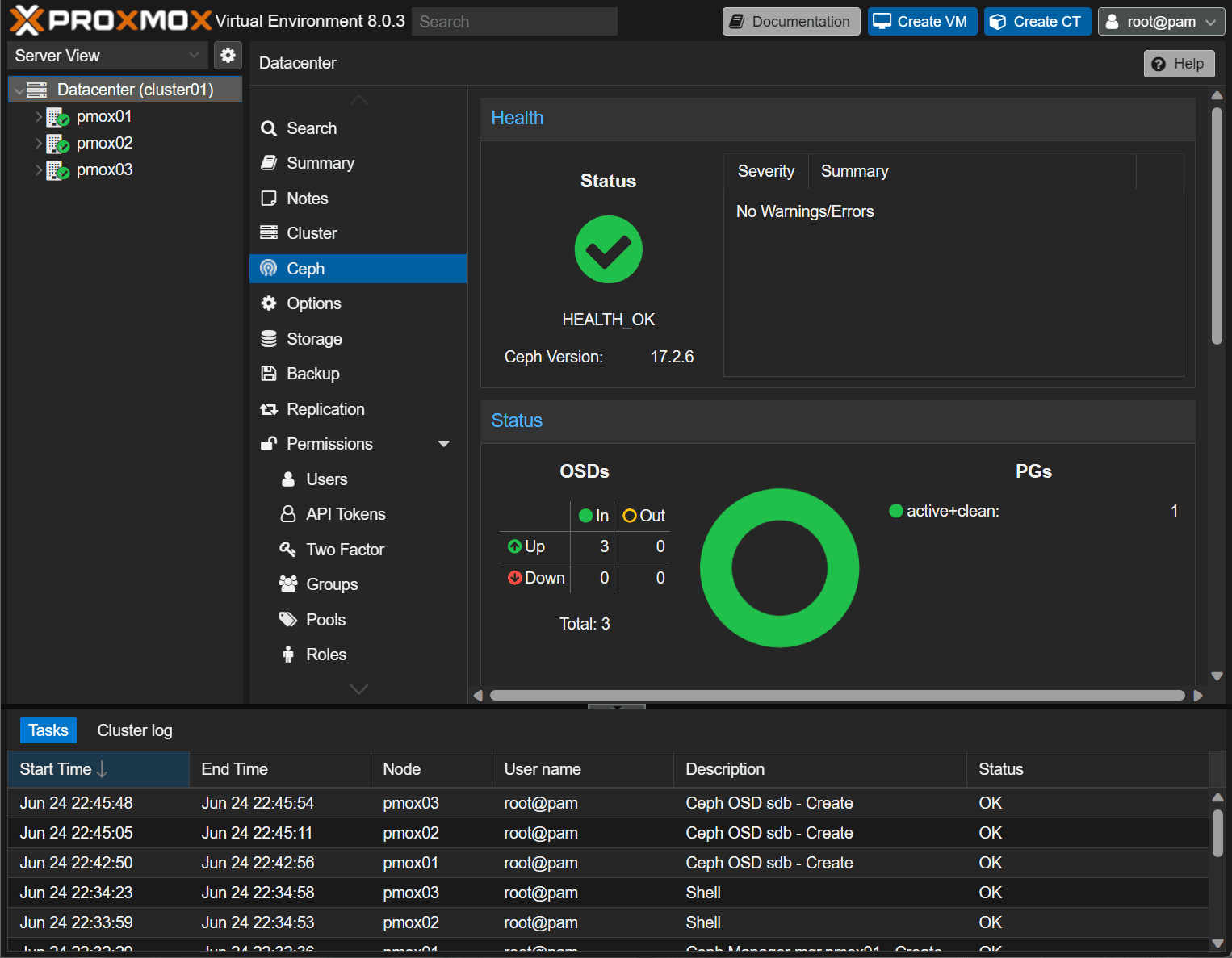

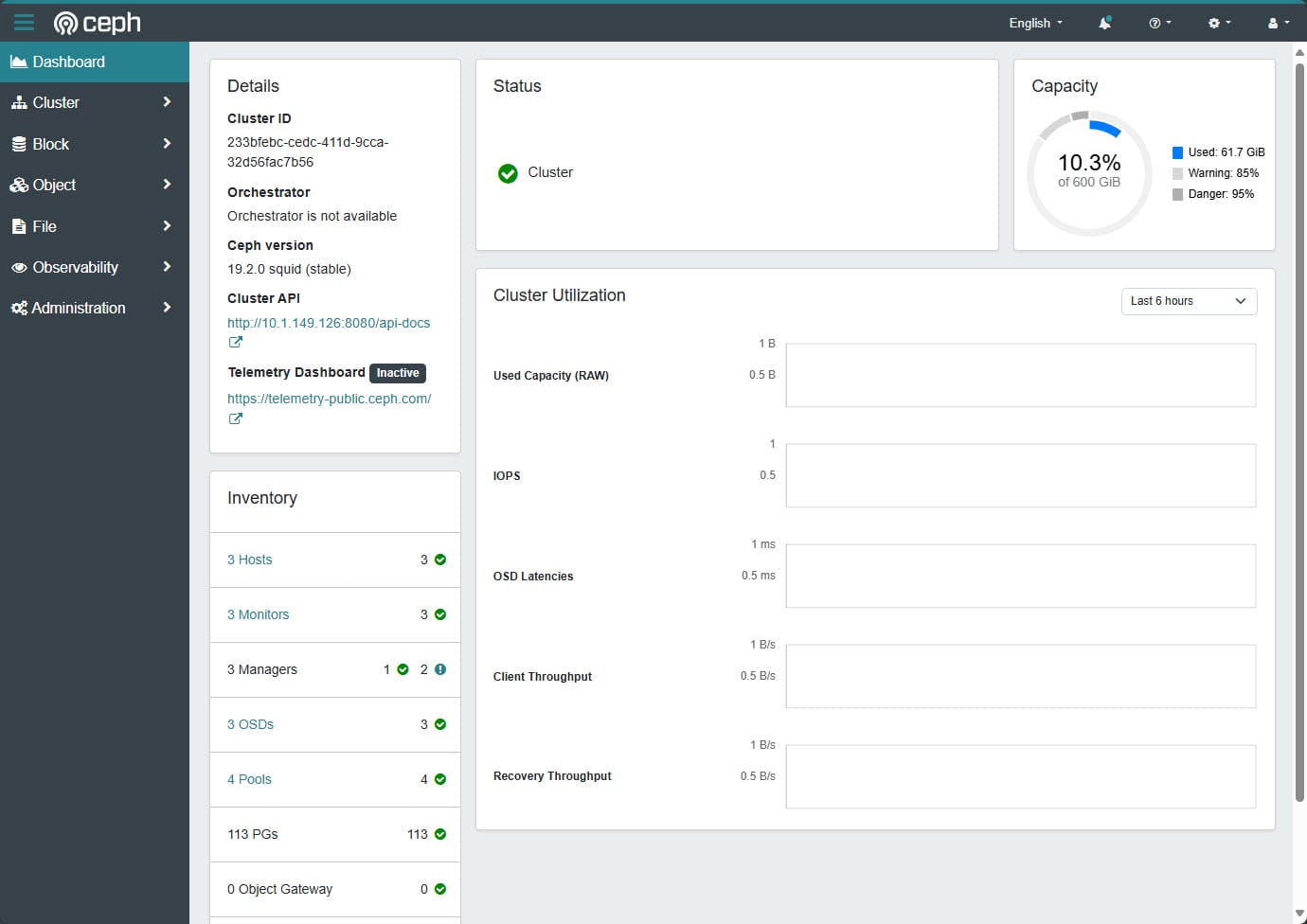

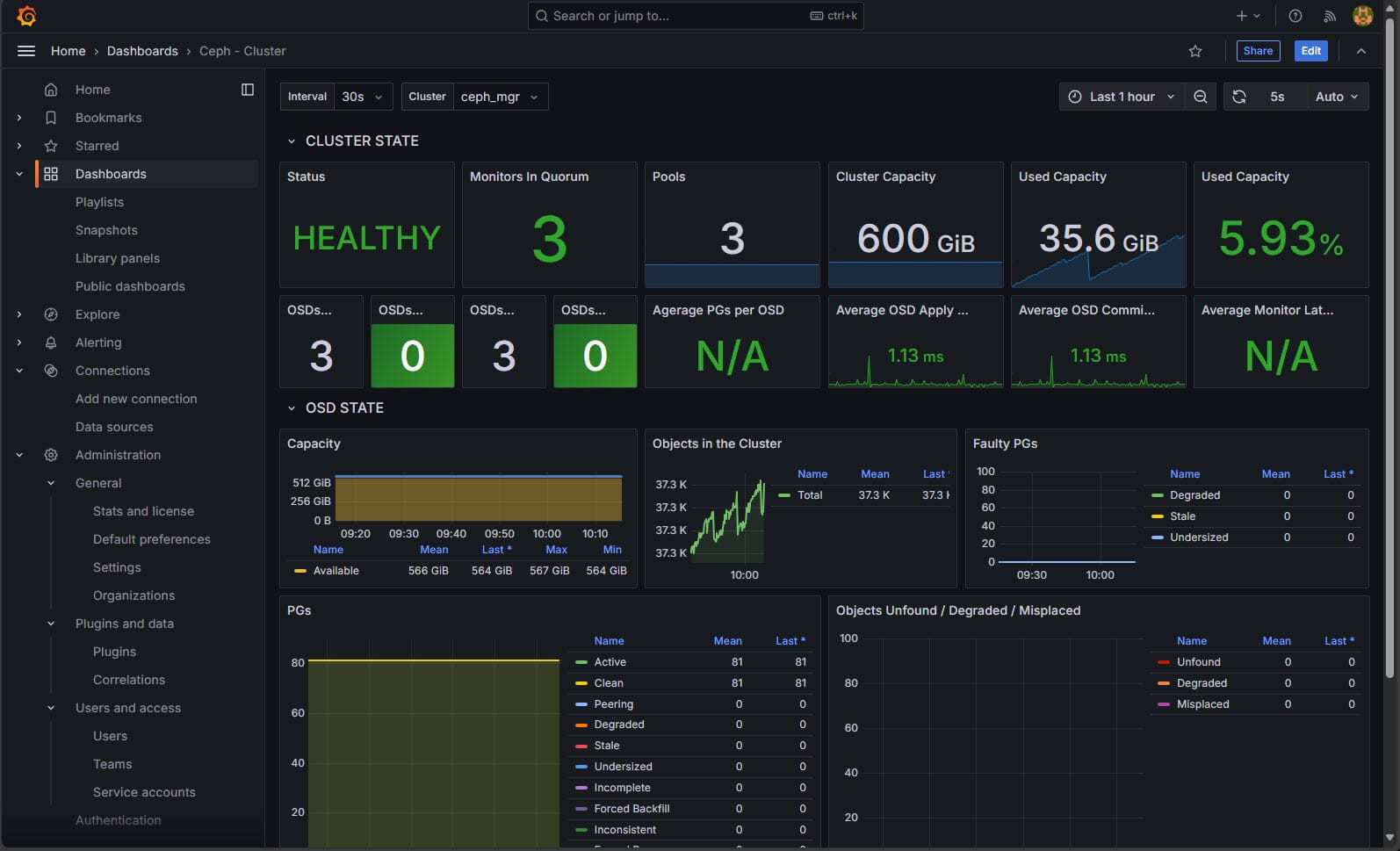

I settled on Ceph across the board. Ceph is an open-source software-defined storage solution that aggregates the local disks on your Ceph storage nodes and pools them together to form logical storage.
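Pooling disks does not mean all of the raw capacity is usable. With a replicated pool, each object is stored once per replica (Ceph's default pool size is 3), and Ceph raises a nearfull warning at 85% utilization by default. The Python sketch below estimates usable space under those assumptions; it ignores erasure-coded pools and metadata overhead, and the three 2 TB OSDs are hypothetical.

```python
from typing import Iterable


def ceph_usable_tb(osd_sizes_tb: Iterable[float], replicas: int = 3,
                   nearfull_ratio: float = 0.85) -> float:
    """Estimate usable capacity of a replicated Ceph pool before the nearfull warning.

    Ignores erasure coding, metadata overhead, and uneven OSD sizes.
    """
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    raw_tb = sum(osd_sizes_tb)
    # Every object is written `replicas` times, then keep headroom below nearfull.
    return raw_tb / replicas * nearfull_ratio


# Hypothetical cluster: three nodes, each contributing one 2 TB NVMe OSD.
print(f"{ceph_usable_tb([2.0, 2.0, 2.0]):.2f} TB usable")
```

For that hypothetical cluster, 6 TB of raw NVMe comes out to roughly 1.7 TB you can comfortably fill.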

This is available natively in Proxmox VE Server if you want to deploy HCI storage across multiple Proxmox nodes, and the wizards built into the Proxmox VE Server hosts make deploying it super easy.

Also, the great thing about Ceph is that you can use it for block storage (RBD) or for file storage with CephFS. I am using Ceph in Docker Swarm to back the shared container storage between my hosts.

I am also using it in my Kubernetes cluster. Microceph is a great way to easily install Ceph and get dynamically provisioned storage for your Kubernetes clusters.

You can also enable the Ceph dashboard, which gives you a GUI view of your Ceph environment, its health, and performance statistics, and lets you perform some operational tasks.

After logging into the Ceph dashboard and viewing statistics.

Software

Now the fun part. What do I actually run on all this? Quite a few apps, actually, which I list below:

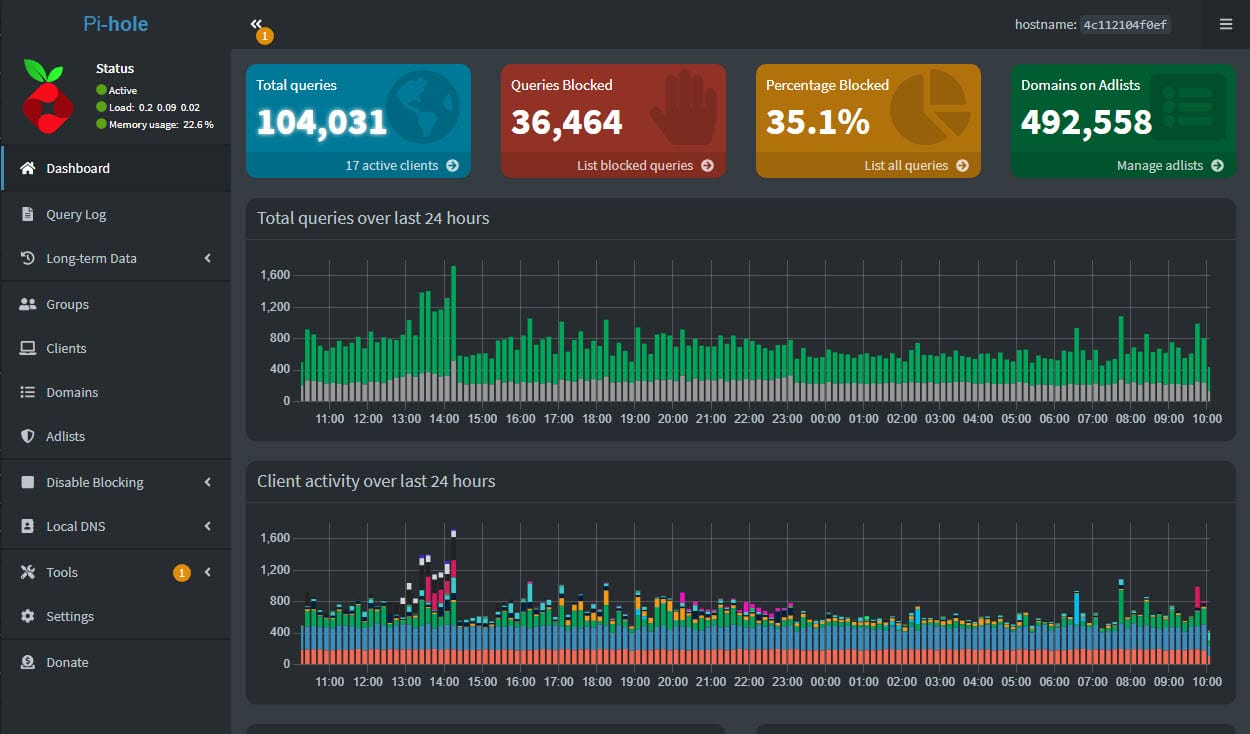

Pi-hole for network-wide DNS ad blocking.

FreshRSS for self-hosted RSS feed reader aggregation.

HashiCorp Vault for storing secrets that get pulled into CI/CD pipelines.

GitLab for CI/CD and as my self-hosted code repo.

Gitea for another self-hosted repo (not my main one, but I am testing it).

Portainer for all things container management, Kubernetes, etc.

Drone CI for use with Gitea.

phpIPAM for keeping up with addresses, VLANs, hosts, etc.

Grafana for monitoring and creating dashboards of metrics throughout the lab.

UniFi Network application for managing UniFi devices and switches.

ArgoCD for GitOps.

NAKIVO Backup & Replication for lab backups.

MinIO for self-hosted S3-compatible object storage.

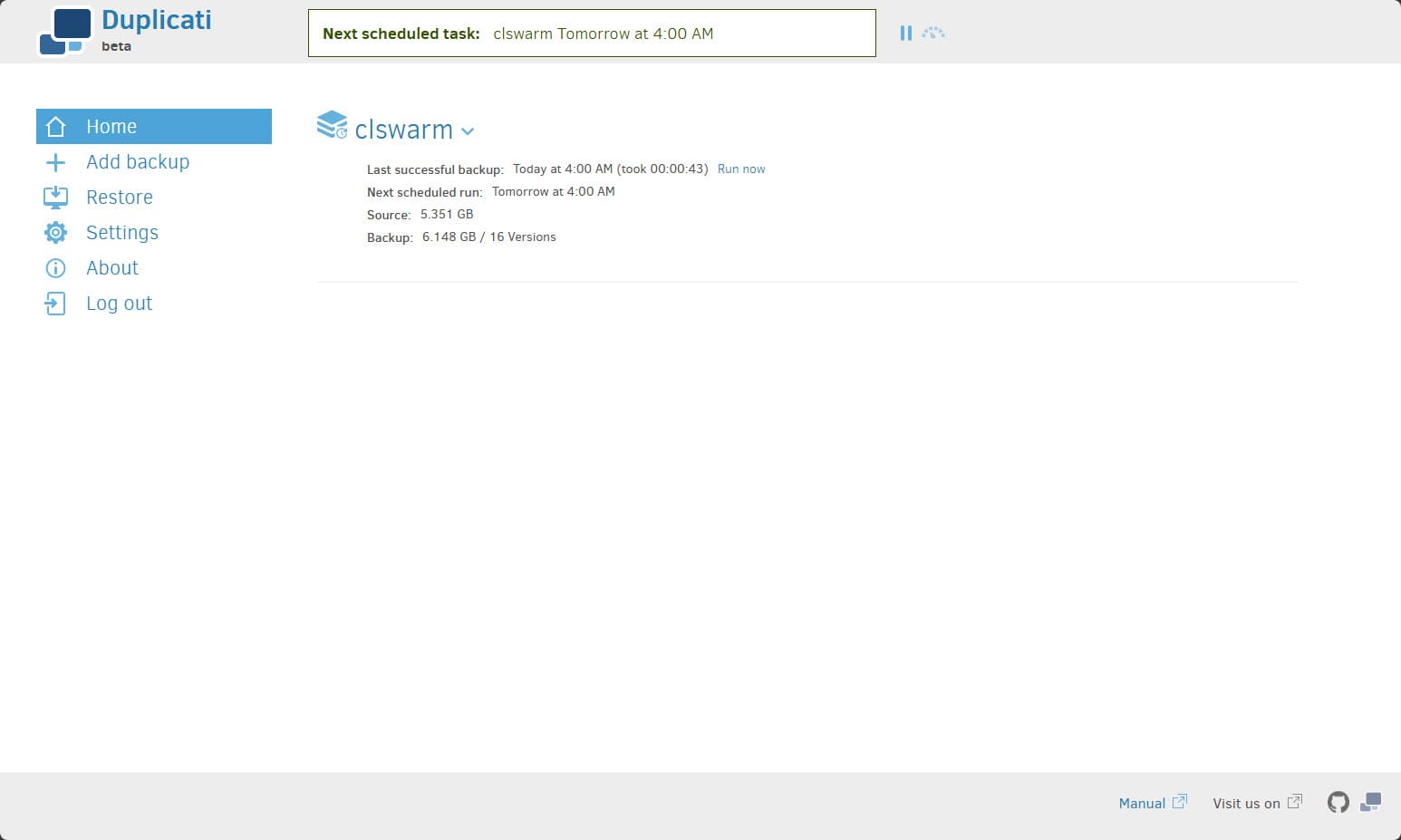

Duplicati for container backups.

Plex Media Server.

Other applications

I run so many other applications that it is hard to mention them all. However, these are some of the other applications I have running and installed in the home lab:

- VMware Aria Operations

- arpwatch for network ARP monitoring

- Faddom Application Mapping

- PRTG for monitoring

- Twingate for remote access

- Veeam Backup & Replication

- Minecraft server

- Azure DevOps server

- Grafana Loki for syslog

What is in store for 2025?

More containers, more DevOps, and more GitOps orchestration. I am going to double down on cloud-native and DevOps skills and see how far I can take GitOps in the home lab. As always, stay tuned to the VirtualizationHowto blog and my YouTube channel 🙂


Wrapping up

This year has been one of flux for me in the home lab, but the changes have been good overall. I have significantly reduced my power footprint by moving to mini PCs and power-efficient storage. Also, the hypervisor itself has become less important to me since I have been more focused on containers and container orchestration. This has proven to be a great move for me in general; it has allowed a much more DevOps way of doing things, and now I am getting into GitOps.
