Looking back on 2024, it has been quite a fruitful year for my learning and labbing adventures. I have really enjoyed the growing pains and decision making in the home lab, some of which came out of necessity and some from technologies I wanted to learn. I want to share with you what I feel have been my top 5 home lab projects in 2024, how they are coming along, and what changes or plans, if any, I have moving forward.


How my goals have changed with home lab projects

In 2024, I set out to move to a more modern containerized infrastructure for my services. I don’t think VMs are going away in the foreseeable future, since containers need something to run on, and a virtualized container host is the way to go for many reasons.

However, VMs matter less to me now, since containers let you focus more sharply on the applications. I have also focused on DevOps in 2024, implementing automation, CI/CD, and automated processes. The home lab projects below reflect that focus.

1. Exploring and learning new hypervisor options

I have been a VMware vSphere fan for years now. It just works and has been rock solid in every environment I have worked in where it was installed. However, the major change with VMware this year came not from a technology change, but from ownership and licensing.

Since the Broadcom buyout, licensing costs have multiplied for most customers, leaving many looking for much less expensive alternatives. It is really hard to be optimistic about VMware’s future with so many now weighing their options. I am extremely disappointed in the direction Broadcom has recently taken with the change to VMUG Advantage: you can no longer get licensing unless you are certified in VMware Cloud Foundation.

While it is great that there are major discounts on the solution, I think this change cuts off a large majority of users who were paying for and learning VMware solutions, since my guess is that many will not pursue the certification. So, long story short, learning new hypervisors is #1 on my list of home lab projects.

What are the top picks for home lab?

- Proxmox – I think this is a definite #1 on the list

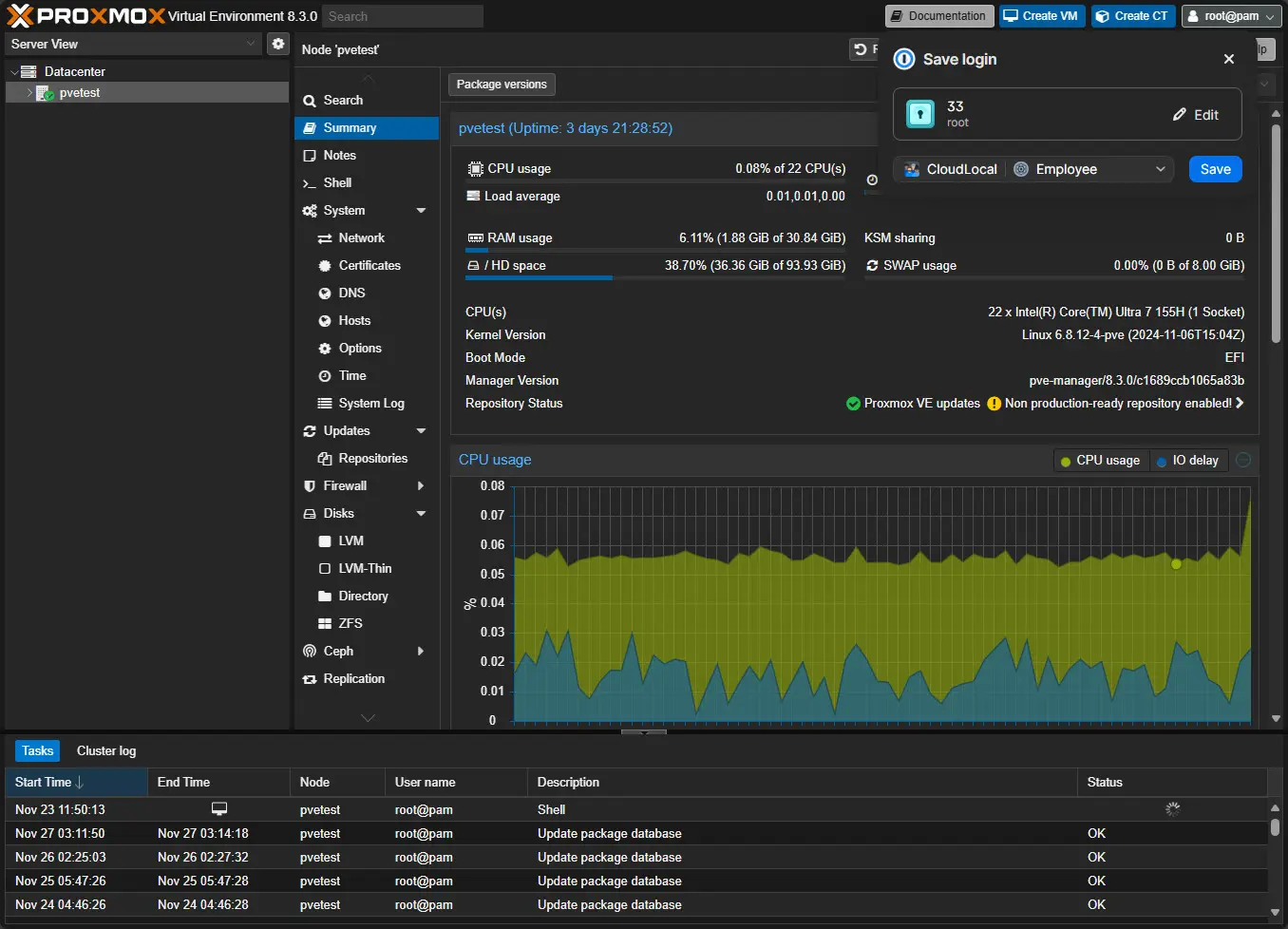

- I have spun up several Proxmox nodes, both physical and nested in VMware, for learning and playing around, and it is great. Proxmox doesn’t have as many bells and whistles, but I think it probably does 95% of what most people want a hypervisor to do.

- XCP-ng – Definitely a good option, though not quite as popular as Proxmox

- Hyper-V is actually coming back into focus for some – If you own a Windows Pro license, you can run Hyper-V at no extra cost.

- Now that VMware Workstation Pro is free, many may pivot back to this type of setup – Broadcom recently made VMware Workstation Pro free for everyone, including commercial use, instead of just personal use

What about for the enterprise?

- Nutanix will win big here I think

- Azure Local (new successor to Azure Stack HCI)

- HPE’s new option (HPE VM Essentials)?

- KubeVirt for those running Kubernetes

Anyway, this has been a fun and exciting project for learning new virtualization platforms, and one I think you would enjoy as well.

2. Doubling down on containers

The uncertainty around my virtualization platform helped me decide that it really isn’t about the VMs anymore; it is about the apps and being able to consume them easily. I have doubled down on containers in my home lab environment, and all of my “production” home lab services now run in containers.

This includes the following:

- Pi-Hole

- phpIPAM

- Unbound

- GitLab

- Gitea

- TICK stack monitoring

- HashiCorp Vault

- FreshRSS

- ArgoCD

- UniFi Network Application

- …more

Containers solve many of the complexity problems you might face running applications inside VMs. Once you move to containers, you no longer have to worry about prerequisites or other dependencies for your application, as these are packaged with the container image. Updates are also super easy with containers: just pull the latest container image and respin the container. There are also tools that automate this, such as Watchtower for standalone Docker hosts or Shepherd for Swarm.
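To make “pull the latest image and respin the container” concrete, here is a minimal Python sketch that wraps the standard docker compose pull and docker compose up -d commands for one Compose project; up -d recreates any container whose image changed. The update_stack and update_commands helpers and the /opt/pihole path are hypothetical, and the sketch defaults to a dry run that only prints the commands. Watchtower and Shepherd automate a similar cycle for you.

```python
import subprocess
from typing import List


def update_commands(project_dir: str) -> List[List[str]]:
    """Commands that pull newer images, then recreate containers whose image changed."""
    base = ["docker", "compose", "--project-directory", project_dir]
    return [base + ["pull"], base + ["up", "-d"]]


def update_stack(project_dir: str, dry_run: bool = True) -> List[List[str]]:
    """Run (or, by default, only print) the pull-and-respin cycle for one project."""
    cmds = update_commands(project_dir)
    for cmd in cmds:
        if dry_run:
            print("would run:", " ".join(cmd))
        else:
            # check=True raises on the first failing command instead of continuing
            subprocess.run(cmd, check=True)
    return cmds


if __name__ == "__main__":
    # Hypothetical directory containing a docker-compose.yml
    update_stack("/opt/pihole")
```

Keeping dry_run as the default makes it safe to try before pointing it at a real host.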

3. Docker Swarm and Kubernetes container orchestration

This year I have really focused on learning container orchestration platforms and getting these implemented in the home lab environment.

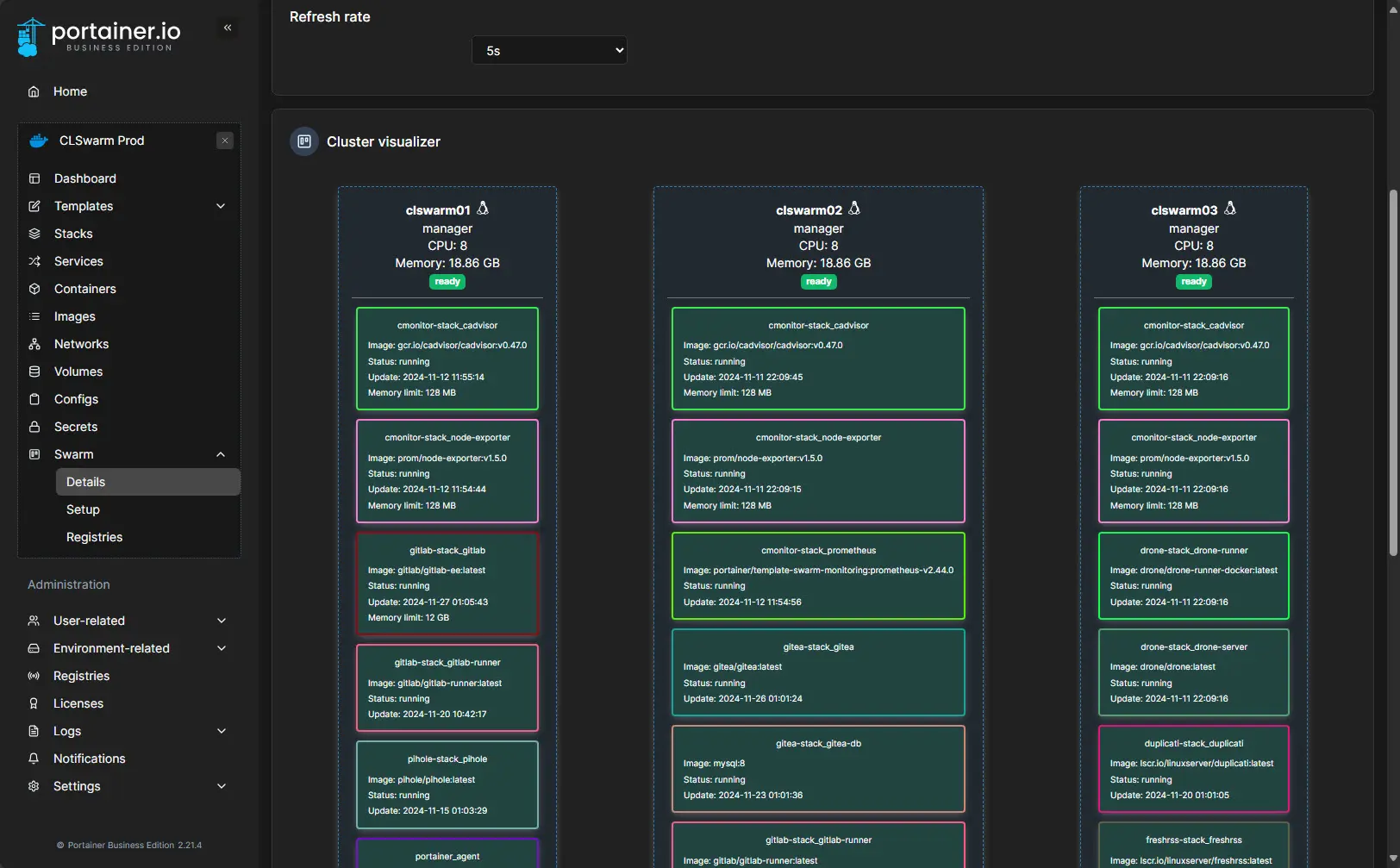

Docker Swarm

The first of these is Docker Swarm. This project was eye-opening. It taught me that Docker Swarm is a very realistic alternative to Kubernetes and may be just what organizations are looking for in between standalone Docker hosts and Kubernetes clusters.

Docker Swarm is still based on Docker, so the learning curve is not much steeper than with a standalone Docker container host. With Swarm, you just learn the few small tweaks you need to make to your Docker Compose code, such as dropping the restart policy and container_name stanzas, to name a couple, since Swarm does not use them.

You also use the deploy section of your YAML code to configure replicas and any placement constraints you want for your Docker Swarm nodes.
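As a rough illustration of those tweaks, here is a minimal Python sketch that takes a standalone Compose service definition (as a plain dictionary) and returns a Swarm-friendly copy: it drops container_name, translates the restart key into deploy.restart_policy, and adds replicas and placement constraints under deploy. The to_swarm_service helper, the Pi-hole service, and the node.role constraint are hypothetical examples, and mapping unless-stopped to the Swarm condition any is an approximation, since Swarm has no exact equivalent.

```python
import copy
import json
from typing import Any, Dict, List, Optional

# Approximate mapping from Compose restart values to Swarm restart_policy conditions
RESTART_TO_CONDITION = {
    "always": "any",
    "unless-stopped": "any",
    "on-failure": "on-failure",
    "no": "none",
}


def to_swarm_service(service: Dict[str, Any], replicas: int = 1,
                     constraints: Optional[List[str]] = None) -> Dict[str, Any]:
    """Return a Swarm-friendly copy of a Compose service; the input is not modified."""
    svc = copy.deepcopy(service)
    # Swarm names tasks itself, so container_name is not used
    svc.pop("container_name", None)
    # The top-level restart key is not used in Swarm; deploy.restart_policy replaces it
    restart = svc.pop("restart", None)
    deploy = svc.setdefault("deploy", {})
    deploy["replicas"] = replicas
    if restart in RESTART_TO_CONDITION:
        deploy.setdefault("restart_policy", {"condition": RESTART_TO_CONDITION[restart]})
    if constraints:
        deploy.setdefault("placement", {})["constraints"] = list(constraints)
    return svc


# Hypothetical standalone service definition
pihole = {
    "image": "pihole/pihole:latest",
    "container_name": "pihole",
    "restart": "unless-stopped",
    "ports": ["53:53/udp"],
}
swarm = to_swarm_service(pihole, replicas=1, constraints=["node.role == worker"])
print(json.dumps(swarm, indent=2))
```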

Kubernetes

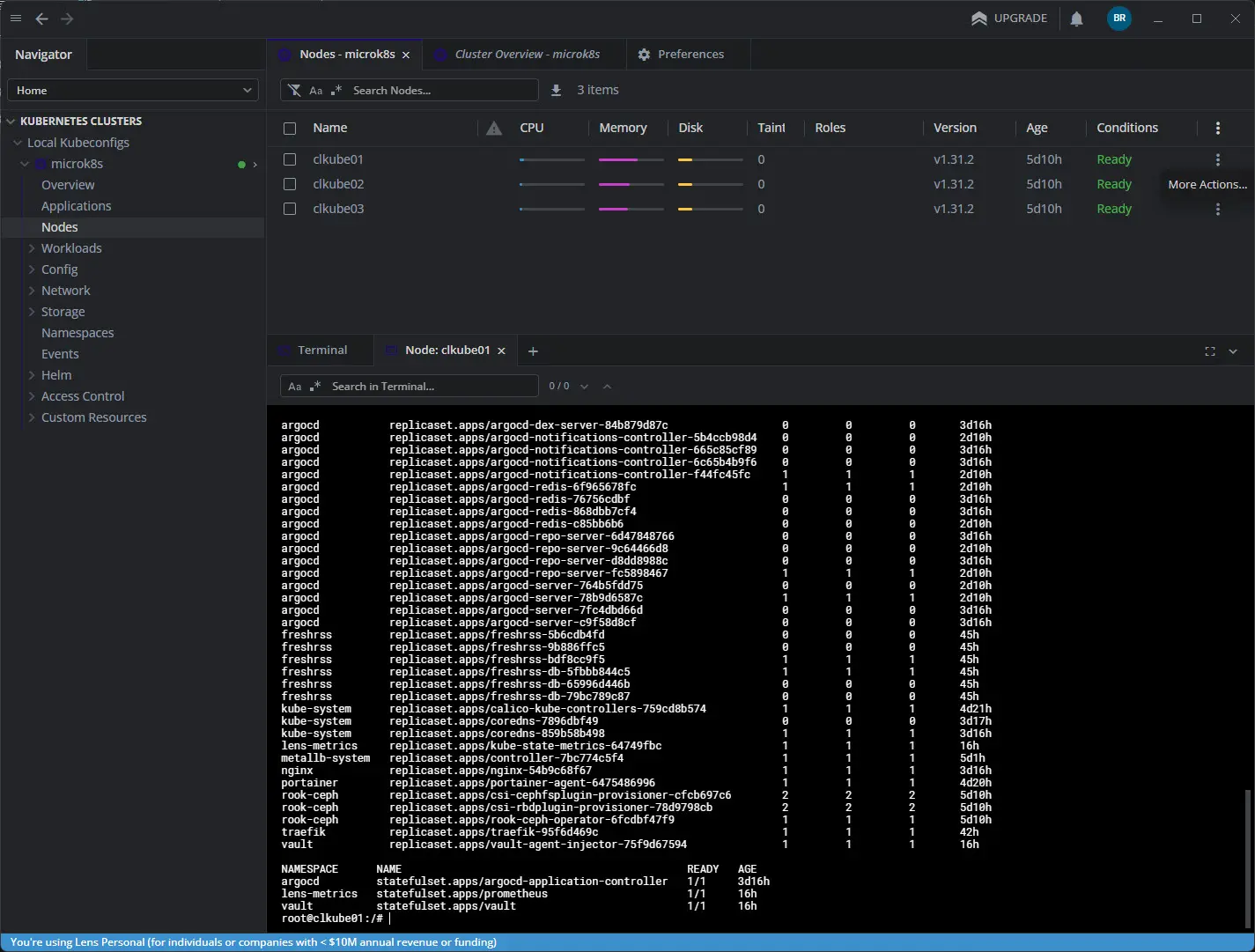

Kubernetes is still the de facto standard for running modern applications. It provides the tools and technologies that organizations need to run modern applications and implement GitOps in their environments.

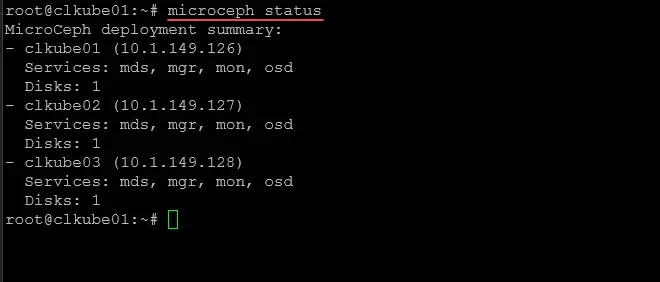

For now, I have settled on running MicroK8s on 3 nodes, with MicroCeph and Rook-Ceph providing the storage underneath my pods. This setup has been very easy to implement and is great for learning and running your services.

4. Open source Software Defined Storage

I have been a huge fan of software-defined storage for quite some time now and ran a VMware vSAN cluster for a long time. However, with all the uncertainty around VMware’s future, I decided to look at other options. Ceph commonly comes up as the standard for open-source software-defined storage, especially for virtualization and for modern container and Kubernetes storage.

I have used Ceph with Proxmox for HCI storage, and as a home lab project I am now also using Ceph, in the form of MicroCeph and CephFS, as the underlying storage for my Docker Swarm cluster. For Kubernetes, I am using MicroCeph with my MicroK8s cluster and the rook-ceph addon for MicroK8s as the storage provisioner for my persistent volume claims.
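For reference, a persistent volume claim against a Ceph-backed storage class is a small manifest. This minimal Python sketch builds one as a dictionary and prints it as JSON, which kubectl accepts just like YAML. The pvc_manifest helper, the ceph-rbd storage class name, the freshrss-data claim, and the 5Gi size are all assumptions for illustration; check which class name your cluster actually exposes with microk8s kubectl get storageclass.

```python
import json
from typing import Any, Dict


def pvc_manifest(name: str, size: str, storage_class: str = "ceph-rbd",
                 namespace: str = "default") -> Dict[str, Any]:
    """Build a PersistentVolumeClaim; storage_class is an assumed name, not a given."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Block (RBD) volumes are typically mounted by a single node at a time
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    print(json.dumps(pvc_manifest("freshrss-data", "5Gi"), indent=2))
```

Saved as a hypothetical pvc.py, it could be applied with python pvc.py | microk8s kubectl apply -f -.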

Software-defined storage is a great way to have shared storage without a storage array or some other type of traditional shared storage between your nodes.
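One trade-off to keep in mind: Ceph protects data by keeping multiple replicas across nodes, so raw disk capacity is not usable capacity. This minimal Python sketch estimates usable space for a replicated pool, assuming Ceph’s default pool size of 3 replicas and leaving headroom below the default 0.85 nearfull ratio. It ignores erasure coding and uneven data distribution across OSDs, and the 3-node, 2 TB-per-node cluster in the example is hypothetical.

```python
def usable_capacity_tb(raw_tb: float, replicas: int = 3, max_fill: float = 0.85) -> float:
    """Rough usable capacity of a Ceph replicated pool, in the same units as raw_tb.

    replicas=3 matches Ceph's default pool size; max_fill=0.85 mirrors the default
    nearfull warning ratio, so the result approximates how much you can store
    before Ceph starts warning.
    """
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    if not 0 < max_fill <= 1:
        raise ValueError("max_fill must be in (0, 1]")
    return raw_tb / replicas * max_fill


if __name__ == "__main__":
    # Hypothetical cluster: 3 nodes with one 2 TB NVMe OSD each
    raw = 3 * 2.0
    print(f"Raw: {raw} TB, usable before nearfull: {usable_capacity_tb(raw):.2f} TB")
```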

5. Moving to all mini PCs

Prior to 2024, I had been running enterprise-grade Supermicro servers in the home lab. However, energy costs and the need to upgrade to more modern and efficient CPUs pushed me to look elsewhere. With mini PCs getting so powerful and so many great options hitting the market, this is the direction I decided to go.

The Minisforum MS-01 was the mini PC I settled on, and I have been running two of them connected to an NVMe NAS device from Terramaster. This configuration has allowed me to reduce both power consumption and heat in the home lab. This summer, since I had already migrated to mini PCs, the lab produced much less heat and I ran the AC less. The performance of the MS-01 is fantastic, and it has 10 gig connectivity, multiple M.2 slots, and a PCIe slot.
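To put rough numbers on power savings like these, annual energy is simply average draw multiplied by 8,760 hours. This minimal Python sketch does that arithmetic; the annual_kwh and annual_cost helpers are hypothetical, and the wattages and electricity price in the example are placeholders, not measurements from my lab.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_kwh(avg_watts: float) -> float:
    """Energy used in a year at a constant average draw, in kWh."""
    return avg_watts * HOURS_PER_YEAR / 1000


def annual_cost(avg_watts: float, price_per_kwh: float) -> float:
    """Yearly electricity cost at a flat price per kWh."""
    return annual_kwh(avg_watts) * price_per_kwh


if __name__ == "__main__":
    # Hypothetical inputs: substitute readings from your own power meter and utility bill
    old_servers_watts = 400.0
    mini_pcs_watts = 120.0
    price = 0.15
    saved = annual_cost(old_servers_watts, price) - annual_cost(mini_pcs_watts, price)
    print(f"Estimated yearly savings: ${saved:.2f}")
```

Heat output tracks the same numbers, since nearly all of the power a server draws ends up as heat in the room.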


Wrapping up

The home lab has definitely been in flux in 2024, with so many changes in the industry and my own needs and goals shifting, as you can see from the home lab projects I tackled this year. I wanted to move to a more containerized infrastructure in 2024, and I feel I have succeeded, with the infrastructure changes and the addition of a Docker Swarm cluster and a “production” Kubernetes cluster for running my services. This has given me a much more DevOps-style approach to home lab tasks and operations, which is very rewarding.

Google is updating how articles are shown. Don’t miss our leading home lab and tech content, written by humans, by setting Virtualization Howto as a preferred source.