I have been trying out various storage solutions in my home lab environment over the past couple of months. Two that I have tested extensively are GlusterFS and Ceph, or, to be exact, GlusterFS and CephFS, which is Ceph's file system running on top of Ceph's underlying storage. Below is a list of the pros and cons of GlusterFS vs Ceph that I have seen while working with both file systems in the lab, running containers and other workloads.


What is GlusterFS?

GlusterFS is a distributed file system that lets multiple servers with locally attached disks pool and share their storage. It is a free and open-source solution released under the GNU General Public License (GPL), so there is no cost to use GlusterFS.

It can be used for large-scale server deployments or for very simple configurations like the ones you would use in a home lab. I have tested and used it as the underlying container storage for Docker Swarm hosts.
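Here is a minimal Python sketch that makes the "multiple servers with locally attached disks" idea concrete. It builds the gluster commands for a three-way replicated volume like the one I use under Docker Swarm. The helper name, the host names (node1 to node3), the brick path, and the volume name are hypothetical placeholders. The gluster subcommands themselves (`peer probe`, `volume create` with `replica`, `volume start`) are the standard CLI.

```python
# Hypothetical helper: build the gluster CLI calls for an N-way replicated volume.
# Host names, the brick directory and the volume name are placeholders.
def gluster_replica_commands(volume: str, hosts: list[str], brick_dir: str) -> list[list[str]]:
    """Return argv lists to run, in order, from the first host."""
    if len(hosts) < 2:
        raise ValueError("a replicated volume needs at least two hosts")
    first, *peers = hosts
    # Join the other servers to the trusted storage pool.
    commands = [["gluster", "peer", "probe", peer] for peer in peers]
    # One brick per host; Gluster groups bricks into replica sets in the order given.
    bricks = [f"{host}:{brick_dir}/{volume}" for host in hosts]
    commands.append(["gluster", "volume", "create", volume,
                     "replica", str(len(hosts)), *bricks])
    commands.append(["gluster", "volume", "start", volume])
    # Mount on a client (for example each Swarm node) with the native FUSE client.
    commands.append(["mount", "-t", "glusterfs", f"{first}:/{volume}", f"/mnt/{volume}"])
    return commands


if __name__ == "__main__":
    for argv in gluster_replica_commands("swarmvol", ["node1", "node2", "node3"], "/data/brick1"):
        print(" ".join(argv))
```

Printing the list gives you the commands to paste on the first node. If the brick directory lives on the root file system, gluster refuses to create the volume unless you append `force` to the create command.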

Take a look at the official project here: Gluster.

What is Ceph and CephFS?

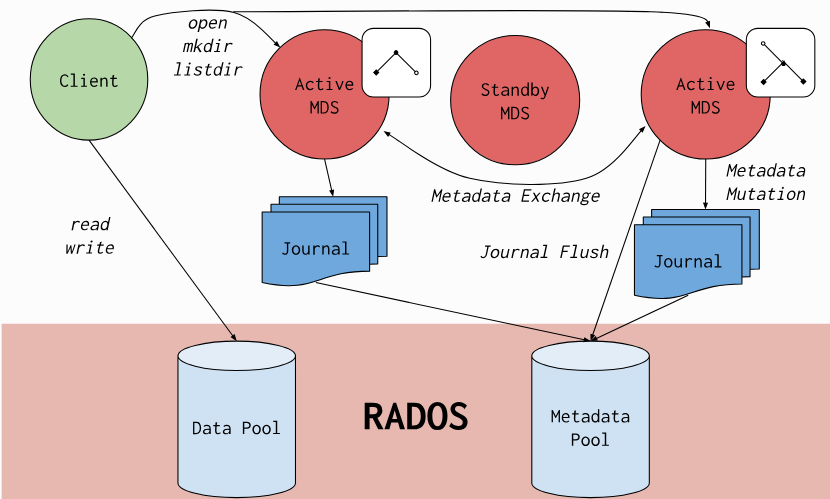

Ceph is a distributed storage solution for hyperconverged infrastructure (HCI) that many people know from Proxmox and other virtualization platforms. CephFS (Ceph file system) is a POSIX-compliant distributed file system built on top of Ceph.

CephFS gives you highly scalable file storage on top of RADOS, Ceph's object storage system. Multiple clients can access and share files across multiple nodes, and the data underneath is replicated between the hosts. This gives you both high throughput and fault tolerance for your data.
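Replication is where the fault tolerance comes from, and it is also where the capacity goes. Below is a rough sketch of the arithmetic. It assumes one equal-sized disk per host, Ceph's default replicated pool settings (size 3, min_size 2), and the default 85% nearfull warning ratio. The function names and the 1 TB disk size are hypothetical.

```python
def usable_capacity_tb(disk_tb: float, hosts: int, replicas: int, nearfull_ratio: float = 0.85) -> float:
    """Rough usable space (TB) before nearfull warnings, one equal-sized disk per host."""
    if not 1 <= replicas <= hosts:
        raise ValueError("need 1 <= replicas <= hosts when each copy lives on its own host")
    raw = disk_tb * hosts
    # Every byte is stored `replicas` times, so usable space is raw / replicas.
    return raw / replicas * nearfull_ratio


def host_failures_tolerated(replicas: int, min_size: int) -> int:
    """Hosts that can go down while placement groups remain writable."""
    return replicas - min_size


if __name__ == "__main__":
    # Hypothetical lab: three nodes, one 1 TB disk each, Ceph defaults size=3, min_size=2.
    print(round(usable_capacity_tb(1.0, hosts=3, replicas=3), 2))  # 0.85
    print(host_failures_tolerated(replicas=3, min_size=2))  # 1
```

The same math roughly applies to a GlusterFS `replica 3` volume. With three copies, about a third of the raw disk space is usable.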

Take a look at the official Ceph site here: Ceph.io.

Detailed review of installing and using both GlusterFS vs Ceph

First, before we dive into the topics below, here are links to my detailed reviews and installation walkthroughs for GlusterFS and for Ceph with CephFS, so you can see the process for both:

- GlusterFS: GlusterFS configuration in Ubuntu Server 22.04 and 24.04

- CephFS: CephFS for Docker Container Storage

Comparing GlusterFS vs Ceph

Let’s look at the following:

- Installation

- Performance

- Management

- Support

- Monitoring

1. Installation

When comparing the installation of GlusterFS vs Ceph, I would have to say that GlusterFS wins because it is easier to install. It feels simpler, and it really is simpler under the hood. Note the following:

- It takes fewer commands to get GlusterFS up and running

- There are way fewer components with GlusterFS compared to CephFS

- Management and troubleshooting will be easier as a result.

Compared to GlusterFS, CephFS has many more components, including:

- the Monitor (MON)

- the Object Storage Daemon (OSD)

- the Metadata Server (MDS)

- the Ceph Manager (MGR)

The initial installation of CephFS also requires more commands and configuration than GlusterFS.
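Here is a Python sketch that lists the commands for a minimal cephadm-based CephFS deployment, to give a feel for the extra moving parts. It assumes cephadm is already installed on the first node, the `ceph` CLI is available there (for example through the ceph-common package), and root SSH access to the other nodes is allowed. The helper name, IP addresses, host names, and file system name are hypothetical. `cephadm bootstrap` deploys the first MON and MGR, the orchestrator creates the OSDs, and `ceph fs volume create` creates the pools and MDS daemons.

```python
def cephfs_commands(mon_ip: str, hosts: dict[str, str], fs_name: str) -> list[list[str]]:
    """Return argv lists to run, in order, on the first (bootstrap) node.

    `hosts` maps each additional host name to its IP address.
    """
    # Deploys the first MON and MGR and writes the cluster's SSH key to /etc/ceph/ceph.pub.
    commands = [["cephadm", "bootstrap", "--mon-ip", mon_ip]]
    for name, ip in hosts.items():
        # cephadm manages the other nodes over SSH using the cluster's public key.
        commands.append(["ssh-copy-id", "-f", "-i", "/etc/ceph/ceph.pub", f"root@{name}"])
        commands.append(["ceph", "orch", "host", "add", name, ip])
    # Ask the orchestrator to turn every unused, eligible disk into an OSD.
    commands.append(["ceph", "orch", "apply", "osd", "--all-available-devices"])
    # Creates the data and metadata pools and schedules MDS daemons.
    commands.append(["ceph", "fs", "volume", "create", fs_name])
    return commands


if __name__ == "__main__":
    lab = {"node2": "192.168.1.12", "node3": "192.168.1.13"}  # hypothetical lab hosts
    for argv in cephfs_commands("192.168.1.11", lab, "cephfs"):
        print(" ".join(argv))
```

Each step touches a different component (MON and MGR, SSH orchestration, OSDs, MDS). That is a large part of why Ceph feels heavier to stand up than GlusterFS.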

2. Performance

Comparing the two solutions in the home lab, GlusterFS "feels" a little snappier than CephFS, and CephFS seems to carry more overhead. However, I only have a single disk in each of 3 nodes, which I know is not best practice for Ceph and CephFS performance. With that in mind, I think most smaller home lab setups will get better performance out of GlusterFS than CephFS.
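To put a number on "feels snappier", you can start with a crude sequential-write test run against a directory on each mount. This sketch writes a handful of files, fsyncs each one, and reports MiB/s. The function name and the mount paths /mnt/glustervol and /mnt/cephfs are hypothetical. A dedicated tool such as fio will give much more meaningful IOPS and latency numbers.

```python
import os
import tempfile
import time


def write_throughput_mb_s(directory: str, files: int = 20, size_mb: int = 4) -> float:
    """Write `files` files of `size_mb` MiB each, fsync each one, and return MiB/s."""
    block = os.urandom(1024 * 1024)  # 1 MiB of random data, reused for every write
    with tempfile.TemporaryDirectory(dir=directory) as scratch:
        start = time.perf_counter()
        for i in range(files):
            path = os.path.join(scratch, f"bench_{i}.bin")
            with open(path, "wb") as fh:
                for _ in range(size_mb):
                    fh.write(block)
                fh.flush()
                # Ask the file system to persist the data instead of leaving it in the page cache.
                os.fsync(fh.fileno())
        elapsed = time.perf_counter() - start  # measured before the scratch files are deleted
    return files * size_mb / elapsed


if __name__ == "__main__":
    for mount in ("/mnt/glustervol", "/mnt/cephfs"):
        if os.path.isdir(mount):
            print(mount, round(write_throughput_mb_s(mount), 1), "MiB/s")
```

Run it a few times per mount and compare medians. A single run on a home lab network can vary quite a bit.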

Just a few things to note when comparing the two:

- GlusterFS is better suited to file-based I/O and to workloads that don't demand high IOPS

- GlusterFS is not as good once your cluster grows beyond a few nodes, which is where Ceph and CephFS start to shine

- GlusterFS also lacks Ceph's more advanced caching features and can be slower when workloads get very heavy

- Ceph is object-based under the hood: CephFS stripes file data into RADOS objects spread across many OSDs, which helps with performance

- With CRUSH (Controlled Replication Under Scalable Hashing) rules and CRUSH maps, clients calculate where data lives instead of asking a central lookup service, so Ceph can spread I/O across OSDs for high IOPS and better throughput

- Ceph also supports caching and tiering for faster random I/O operations, although cache tiering has been deprecated as of the Reef release
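The key idea behind CRUSH is that placement is calculated rather than looked up. Below is a simplified Python illustration of the weighted "straw2" selection used by Ceph's straw2 bucket type. Every OSD draws ln(u)/weight from a hash of the placement group and the OSD name, and the highest draw wins. This is not Ceph's implementation. Real CRUSH uses the rjenkins hash, a hierarchy of buckets, and failure-domain rules, while this sketch swaps in SHA-256 over a flat list of OSDs. The OSD names and function names are hypothetical.

```python
import hashlib
import math


def _unit_hash(*parts: object) -> float:
    """Deterministic pseudo-random value in (0, 1] derived from the inputs."""
    digest = hashlib.sha256("/".join(map(str, parts)).encode()).digest()
    return (int.from_bytes(digest[:8], "big") + 1) / 2**64


def _straw(pg_id: int, osd: str, replica: int, weight: float) -> float:
    # straw2 idea: ln(u) <= 0, so dividing by a larger weight pulls the draw toward 0.
    return math.log(_unit_hash(pg_id, osd, replica)) / weight


def place(pg_id: int, osd_weights: dict[str, float], replicas: int) -> list[str]:
    """Choose `replicas` distinct OSDs for a placement group, weighted by capacity."""
    candidates = [osd for osd, w in osd_weights.items() if w > 0]  # weight 0 = never chosen
    if replicas > len(candidates):
        raise ValueError("not enough OSDs for the requested replica count")
    chosen: list[str] = []
    for r in range(replicas):
        # Skipping already-chosen OSDs stands in for CRUSH's retry-on-collision logic.
        remaining = [osd for osd in candidates if osd not in chosen]
        chosen.append(max(remaining, key=lambda osd: _straw(pg_id, osd, r, osd_weights[osd])))
    return chosen
```

The result depends only on the inputs, so every client with the same map computes the same placement. Adding an OSD changes the first choice only for placement groups where the new OSD's draw wins, so only a fraction of the data moves when the cluster grows.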

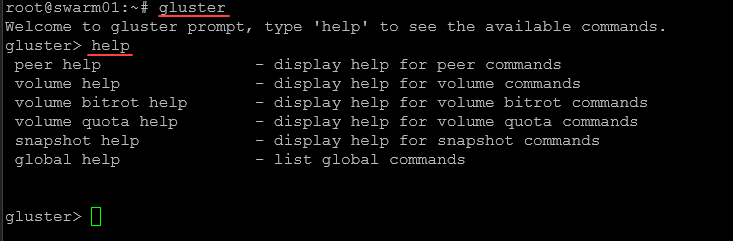

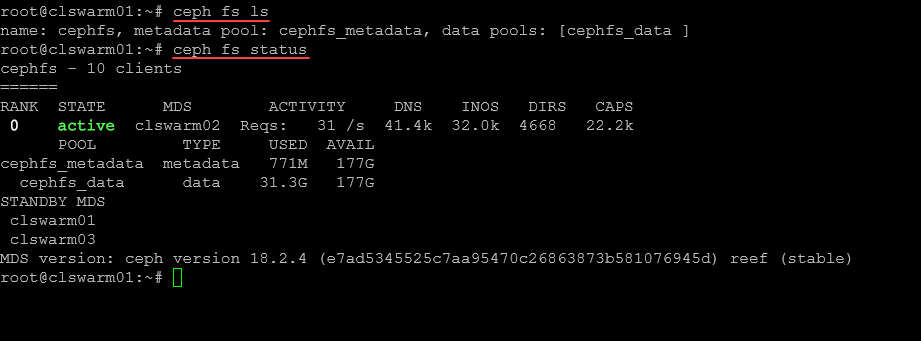

3. Management

When it comes to GlusterFS vs Ceph, I actually think both solutions are easy to manage. Ceph and CephFS have more commands for working with the cluster and inspecting its state. Still, both are "set it and forget it" solutions that just work for the most part. GlusterFS shines in how simply you can manage it, while Ceph and CephFS are definitely more complex when it comes to management and operational tasks.
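Ceph's extra commands are also easy to script, because most of them accept `--format json`. The sketch below is minimal and assumes the `ceph` CLI and an admin keyring are available on the host. The `name` and `data_pools` field names match what `ceph fs ls --format json` prints on recent releases, but verify them against your version. The function name is hypothetical, and the `run` parameter exists only so the function can be tested without a cluster.

```python
import json
import subprocess


def list_filesystems(run=subprocess.run) -> dict[str, list[str]]:
    """Map each CephFS file system name to its list of data pools."""
    result = run(["ceph", "fs", "ls", "--format", "json"],
                 capture_output=True, text=True, check=True)
    # The command prints a JSON array with one object per file system.
    return {fs["name"]: fs["data_pools"] for fs in json.loads(result.stdout)}
```

On a Ceph node, calling `list_filesystems()` returns a dict you can feed into your own checks or dashboards instead of reading the human-formatted output.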

Below is a look at running the gluster command, which opens the interactive gluster console, and then running help to list the available commands.

Below are a couple of commands for Ceph:

ceph fs ls

ceph fs status

4. Support

Here is where things are not so good for GlusterFS. Red Hat Gluster Storage, Red Hat's commercially supported version of GlusterFS, reaches end of life at the end of this year. GlusterFS is a free and open-source project that stands apart from Red Hat. Even so, the end of Red Hat's involvement will likely mean far fewer contributions, bug fixes, and enhancements moving forward.

This is unfortunate for the project. However, I have seen several posts on the official GlusterFS GitHub site from many of the developers saying they will keep contributing. Time will tell on this front. From other projects I have seen in the past, though, when a major company pulls support, it can be a death knell for a product.

You can read Red Hat’s KB on Gluster Storage end of life here: Red Hat Gluster Storage Life Cycle – Red Hat Customer Portal.

This is not the case with Ceph and CephFS. Development of Ceph is alive and well, and it has broad industry backing from Red Hat, SUSE, and Canonical, which offer it as part of their enterprise-grade support options. So support for Ceph is strong.

All in all, Ceph is also a more popular solution than GlusterFS, with many third-party integrations and community projects built on top of it. So when comparing GlusterFS vs Ceph on support, Ceph is the winner hands down.

5. Monitoring

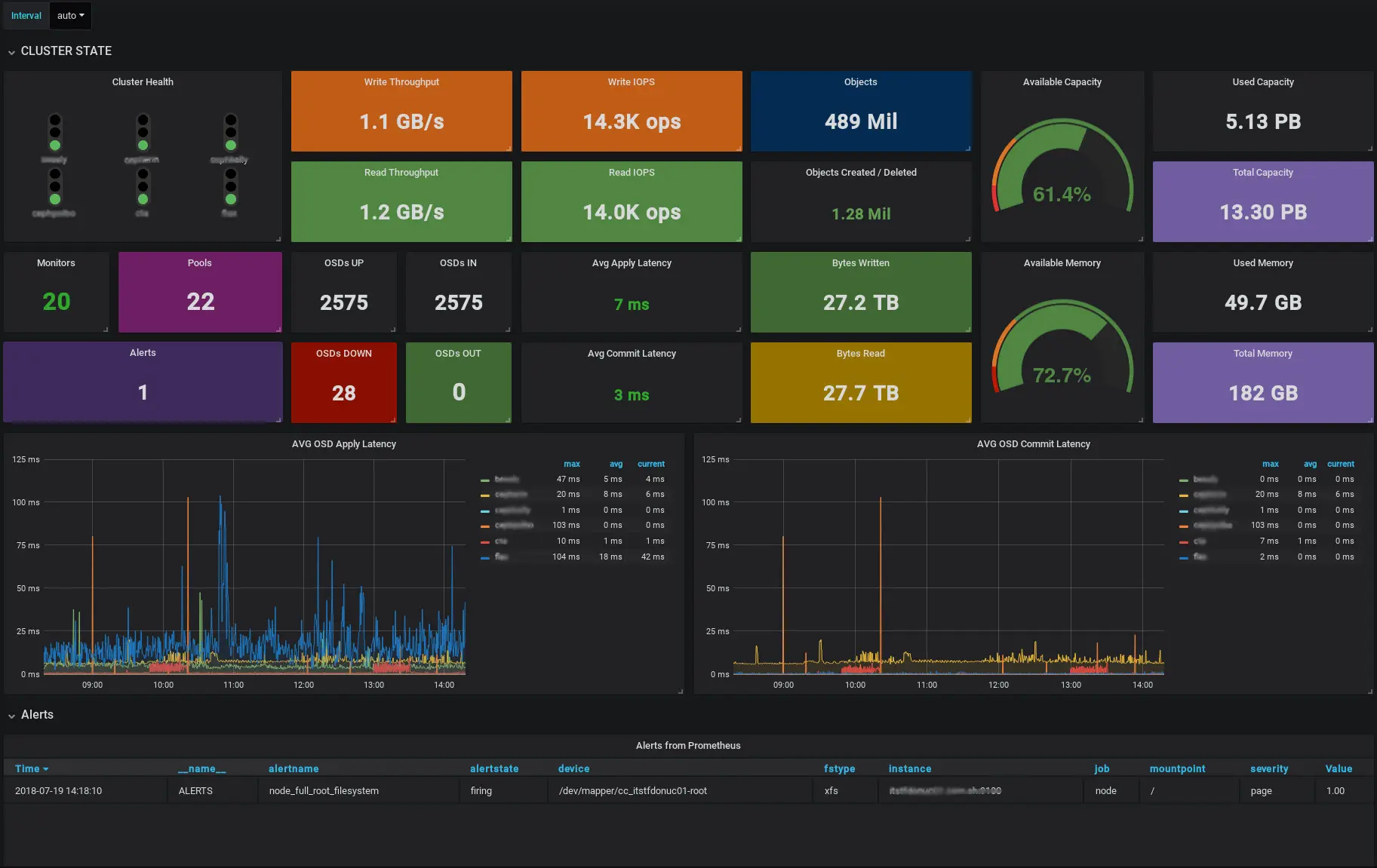

Monitoring is another area where Ceph and CephFS win out over GlusterFS. Many more third-party vendors and open-source projects offer monitoring for Ceph and CephFS than for GlusterFS.

This simply comes down to Ceph's popularity. You can set up a few dashboards for GlusterFS using Prometheus and Grafana, but far more are available for Ceph and CephFS.

Also, third-party freemium and paid products such as Netdata support Ceph but, as far as I can tell from working with it, not GlusterFS.
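The Prometheus and Grafana route for Ceph pulls its data from the mgr prometheus module. You enable it with `ceph mgr module enable prometheus`, and it serves metrics on port 9283 by default. The sketch below reads the `ceph_health_status` gauge, where 0 is HEALTH_OK, 1 is HEALTH_WARN, and 2 is HEALTH_ERR. The helper names are hypothetical. The host you pass should be the node running the active manager.

```python
from urllib.request import urlopen

# Ceph encodes overall cluster health as a number in this gauge.
HEALTH_STATES = {0: "HEALTH_OK", 1: "HEALTH_WARN", 2: "HEALTH_ERR"}


def parse_health(metrics_text: str) -> str:
    """Find the unlabeled ceph_health_status sample in Prometheus text format."""
    for line in metrics_text.splitlines():
        tokens = line.split()
        # '# HELP' and '# TYPE' lines start with '#', so only the sample line matches.
        if len(tokens) >= 2 and tokens[0] == "ceph_health_status":
            return HEALTH_STATES.get(int(float(tokens[1])), "UNKNOWN")
    raise ValueError("ceph_health_status not found in metrics")


def fetch_health(host: str, port: int = 9283) -> str:
    """Scrape the mgr prometheus endpoint and return the health string."""
    with urlopen(f"http://{host}:{port}/metrics", timeout=5) as resp:
        return parse_health(resp.read().decode("utf-8"))
```

In practice you would let Prometheus scrape the endpoint and alert on `ceph_health_status` in Grafana. The parser just shows what that metric looks like.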

Wrapping up

If you are looking for solid storage options, GlusterFS and Ceph with CephFS are both great solutions. However, each has strengths and weaknesses to weigh when choosing one for a project. Ceph is definitely more complex in its architecture and harder to set up. GlusterFS is very simple and works well. However, Red Hat's support for GlusterFS ends at the end of 2024, which may be a major reason to pick Ceph between the two. Let me know your thoughts if you are using or considering either one.

Google is updating how articles are shown. Don’t miss our leading home lab and tech content, written by humans, by setting Virtualization Howto as a preferred source.