If you are running Docker containers that serve critical services in your home lab, and most of you probably are, what is the best way to back up Docker container volumes and your home server? That is a good question, and one we can answer by highlighting a few free solutions that will help you avoid the data disaster that can happen if you are not protecting your data.


Docker container volumes: what are they?

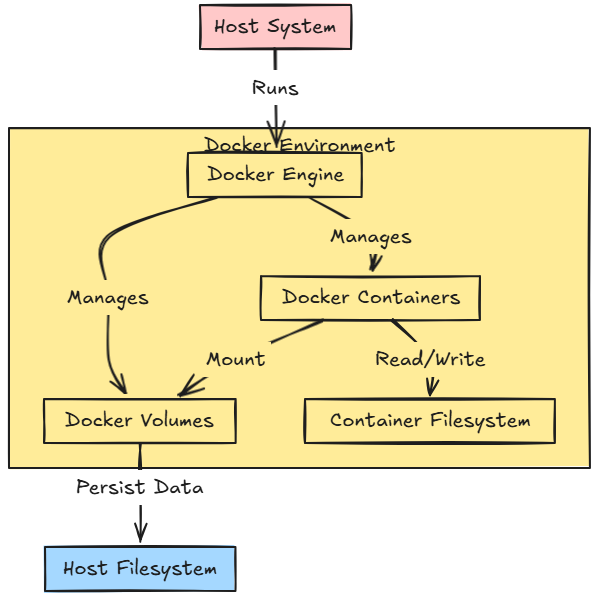

When you think about Docker containers, you likely think about the container image and running containers from that image on your Docker hosts. However, there is another extremely important component of Docker: your Docker volume data. What is this?

Many applications need to store data on disk so they can access it later. Think of databases or other apps that accumulate data over time. That data needs to persist, even when the container is recreated in the future from a newer container image.

Docker volumes give containers this persistence. They let a container store data locally on the container host and read and write that data across container restarts and re-creations. Bind mounts, which map a specific host directory into a container, serve the same purpose.
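To make this concrete, here is a minimal Compose sketch showing the two common ways a container persists data: a named volume managed by Docker and a bind mount to a host directory. The service names, images, and host paths are hypothetical placeholders. In both cases the data lives on the container host, so that host location is what a backup tool needs to read.

```yaml
# Hypothetical example: two ways to persist container data
services:
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: change-me   # placeholder only
    volumes:
      # Named volume: Docker manages where this lives on the host
      # (by default under /var/lib/docker/volumes/ on Linux)
      - db-data:/var/lib/postgresql/data
    restart: unless-stopped

  web:
    image: nginx:latest
    volumes:
      # Bind mount: a specific host directory mapped into the container (read-only)
      - /srv/appdata/web/html:/usr/share/nginx/html:ro
    restart: unless-stopped

# Named volumes are declared at the top level
volumes:
  db-data:
```

If you pull a newer `postgres:16` image and run `docker compose up -d` again, the `db` container is recreated but the existing `db-data` volume is reattached, so the database files survive the upgrade.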

Different strategies and tools for different Docker environments

Note the following types of Docker container environments:

- Standalone host – Many home server environments are made up of standalone Docker hosts. You may run Docker directly on a physical host or, if you have a single server, inside a VM on a hypervisor such as Proxmox or VMware ESXi.

- Docker Swarm cluster – Docker Swarm provides great benefits, including high availability for your services. A Swarm cluster is usually made up of three or more Docker hosts, and for stateful services those hosts typically share storage so a container can run on any node and still reach its data.
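Here is a minimal Swarm stack sketch of the shared-storage pattern, assuming a shared file system such as CephFS is mounted at the same path, /mnt/cephfs, on every node. The stack name, service name, and paths are hypothetical. Because the bind-mount source exists on every node, Swarm can reschedule the task anywhere and it still finds its data, which also means a backup of the one shared path covers every node.

```yaml
# Hypothetical Swarm stack file, deployed with:
#   docker stack deploy -c stack.yml web
# Assumes /mnt/cephfs is mounted at the same path on every Swarm node.
version: "3.8"   # some docker stack deploy releases expect a version key

services:
  web:
    image: nginx:latest
    volumes:
      # Bind mount from shared storage, so any node can run this task
      - type: bind
        source: /mnt/cephfs/web/html
        target: /usr/share/nginx/html
    deploy:
      replicas: 1
      restart_policy:
        condition: on-failure
```

If the source directory does not exist on the node where Swarm schedules the task, the task fails to start, which is why the shared mount has to be present on every node.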

Each of these configurations may call for different tools to protect your data. Let’s look at a couple of tools you can use to back up Docker containers.

Duplicati

I have written about Duplicati before. It is a free and open-source tool that can back up the Docker data on your standalone Docker host or Swarm cluster. It works for a Swarm cluster because the Swarm hosts generally share data storage, so one Duplicati container can reach the persistent data for every node.

Duplicati runs as a Docker container itself. You mount the location that holds your persistent data into the Duplicati container, and Duplicati reads from that location and copies your data to a storage destination such as Amazon S3, Azure, or your own S3-compatible storage like MinIO, to name a few.
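If your persistent data lives in named volumes rather than a host directory like /mnt/cephfs, one approach is to mount those volumes into the Duplicati container as additional sources. This is a sketch, not the setup used later in this post: the volume name `myproject_db-data` is hypothetical (check yours with `docker volume ls`), and the `:ro` flag is an optional choice that keeps Duplicati from modifying the data it backs up, though you would need a writable mount to restore in place.

```yaml
services:
  duplicati:
    image: lscr.io/linuxserver/duplicati:latest
    # Environment settings (PUID, PGID, TZ, etc.) omitted for brevity
    volumes:
      - ./duplicati-config:/config
      # Existing named volume from another Compose project, mounted read-only
      - dbdata:/source/dbdata:ro
    restart: unless-stopped

volumes:
  dbdata:
    # Reuse the existing volume instead of creating a new, empty one
    external: true
    name: myproject_db-data   # hypothetical name, see `docker volume ls`
```

Keep in mind that copying the files of a running database may not produce a consistent backup; stopping the container during the backup or using the database's own dump tool avoids that.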

You can check out the LinuxServer.io Duplicati container image here: https://github.com/linuxserver/docker-duplicati

Install Duplicati with Docker Compose

Below is my Docker Compose code for Duplicati. The /mnt/cephfs directory on my Docker host is mounted to the /source directory inside the container. This will make more sense when we look at the wizard to create a backup job in Duplicati.

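```yaml
services:
  duplicati:
    container_name: duplicati
    image: lscr.io/linuxserver/duplicati:latest
    environment:
      PUID: 1000
      PGID: 1000
      TZ: America/Chicago
      CLI_ARGS: "" # optional
    volumes:
      # Duplicati configuration and local backup storage
      - /mnt/cephfs/duplicati/appdata/config:/config
      - /mnt/cephfs/duplicati/backups:/backups
      # Root of the CephFS share, exposed to Duplicati as the backup source
      - /mnt/cephfs:/source
    networks:
      - npm-stack_nginxproxy
    restart: always

networks:
  # Pre-existing network created outside this file; the key must match
  # the network name the service references above
  npm-stack_nginxproxy:
    external: true
```

Create a Duplicati backup job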

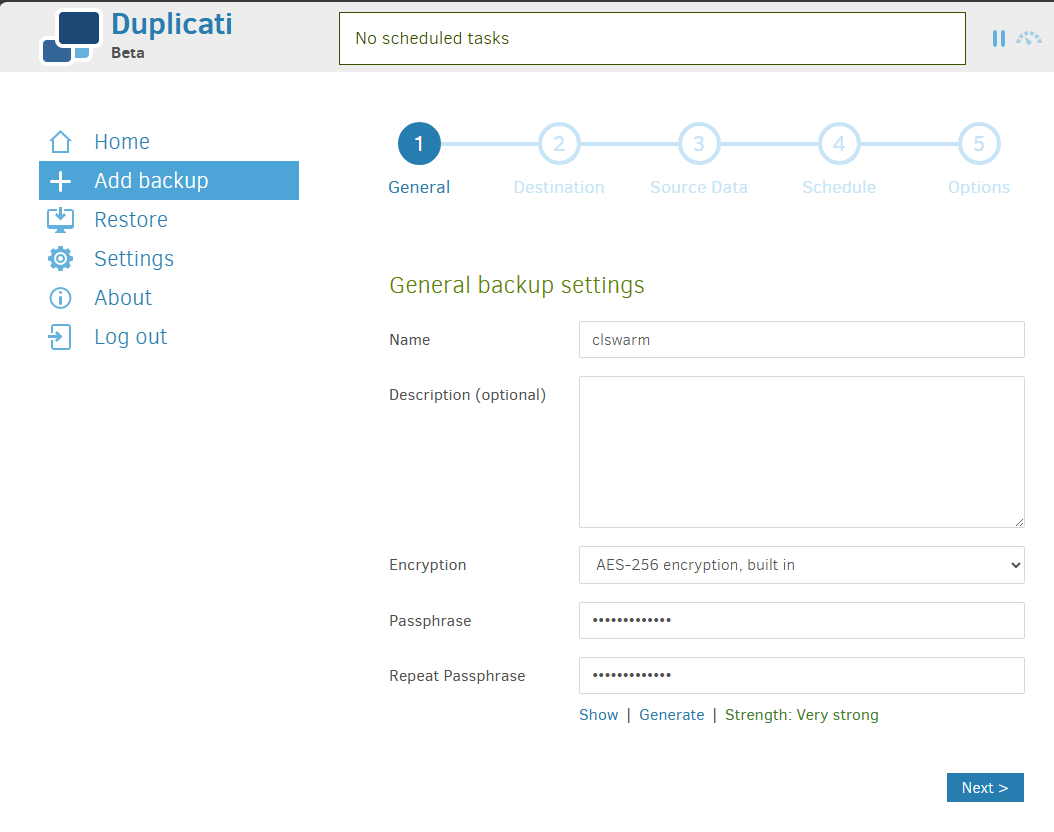

Let’s look at the Duplicati backup job wizard and see how you can create a new backup job for protecting your Docker container data.

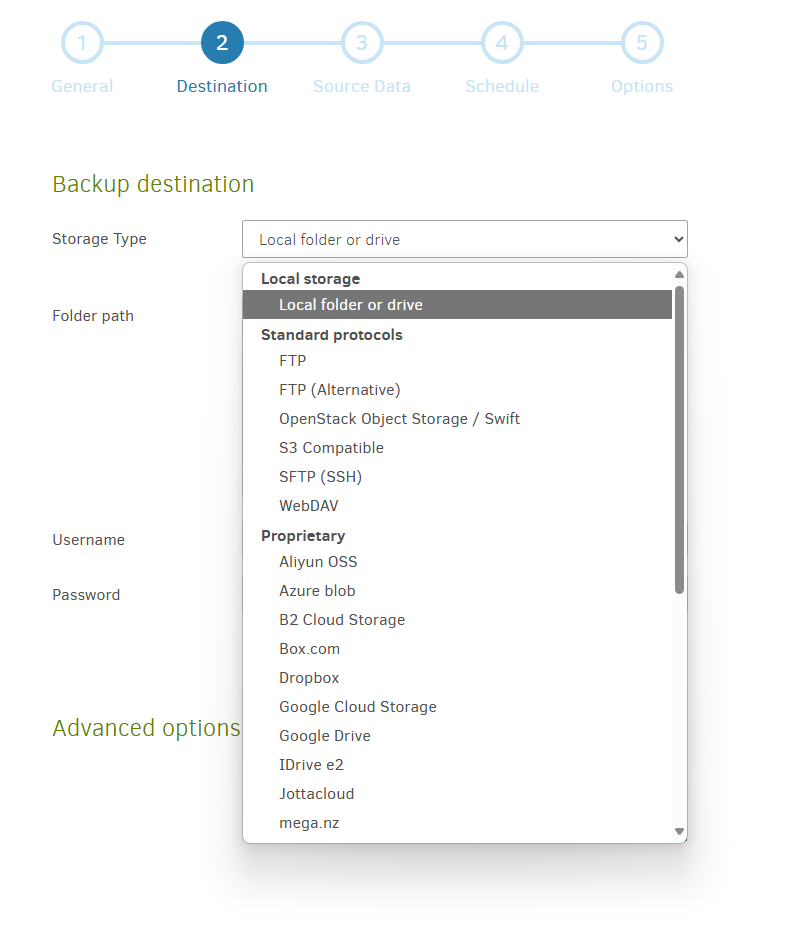

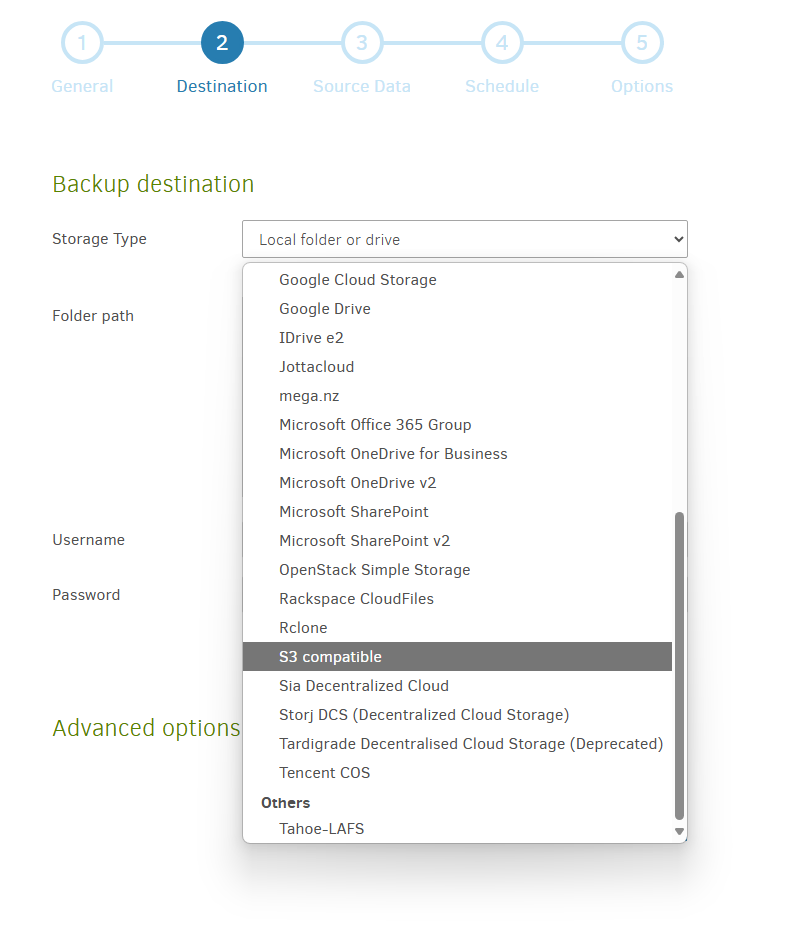

Next, configure the destination for the Duplicati backups. Duplicati offers a wide range of destination options, so I had to capture two screenshots to show them all. The second screenshot picks up where the first one leaves off.

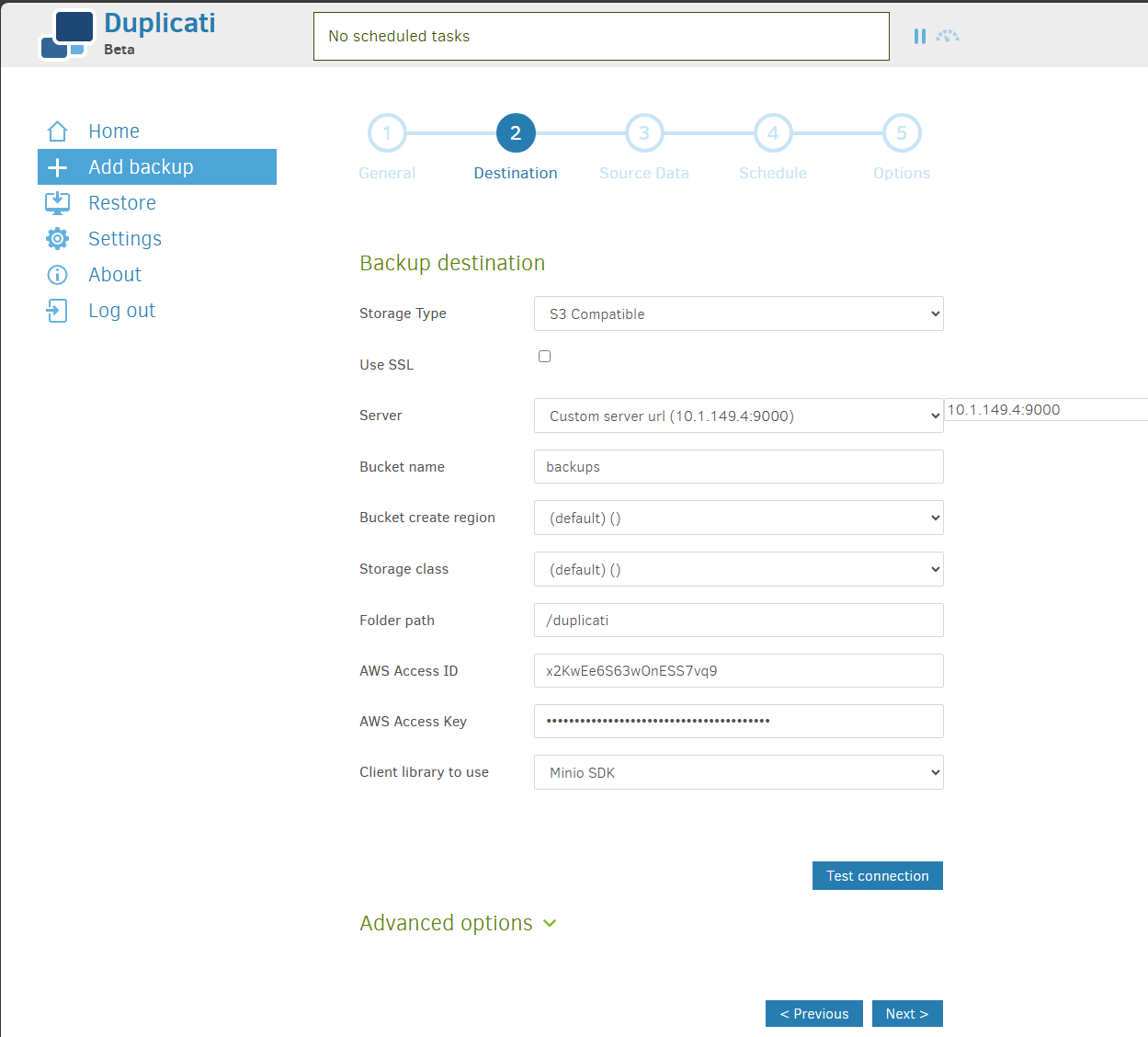

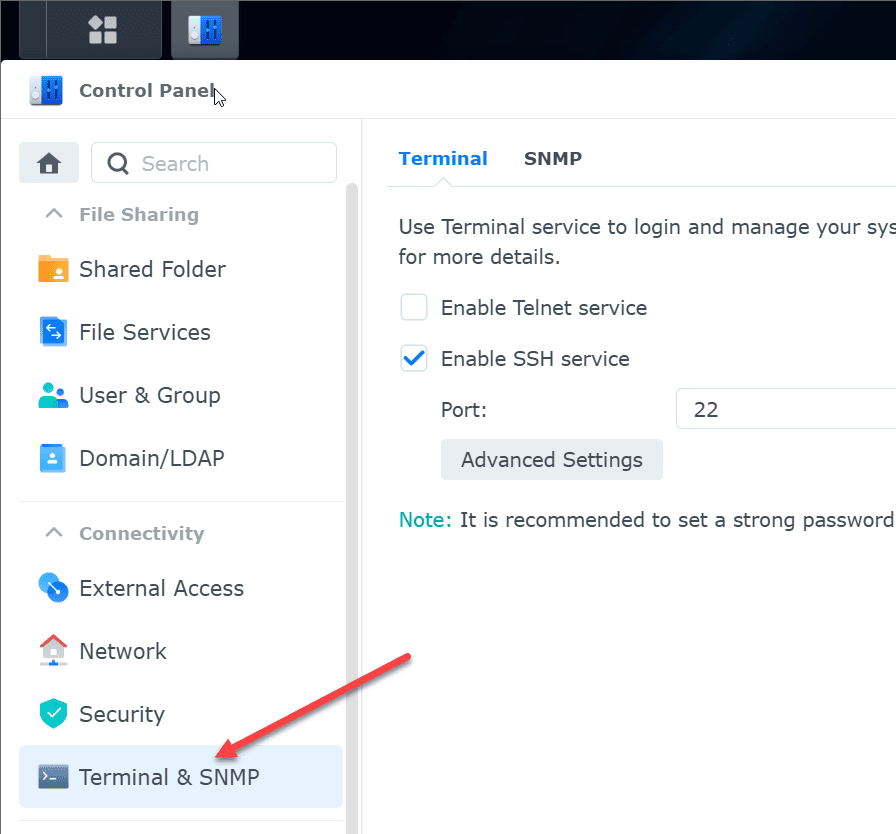

Here I am pointing Duplicati at a self-hosted, S3-compatible MinIO storage server running on my Synology NAS.

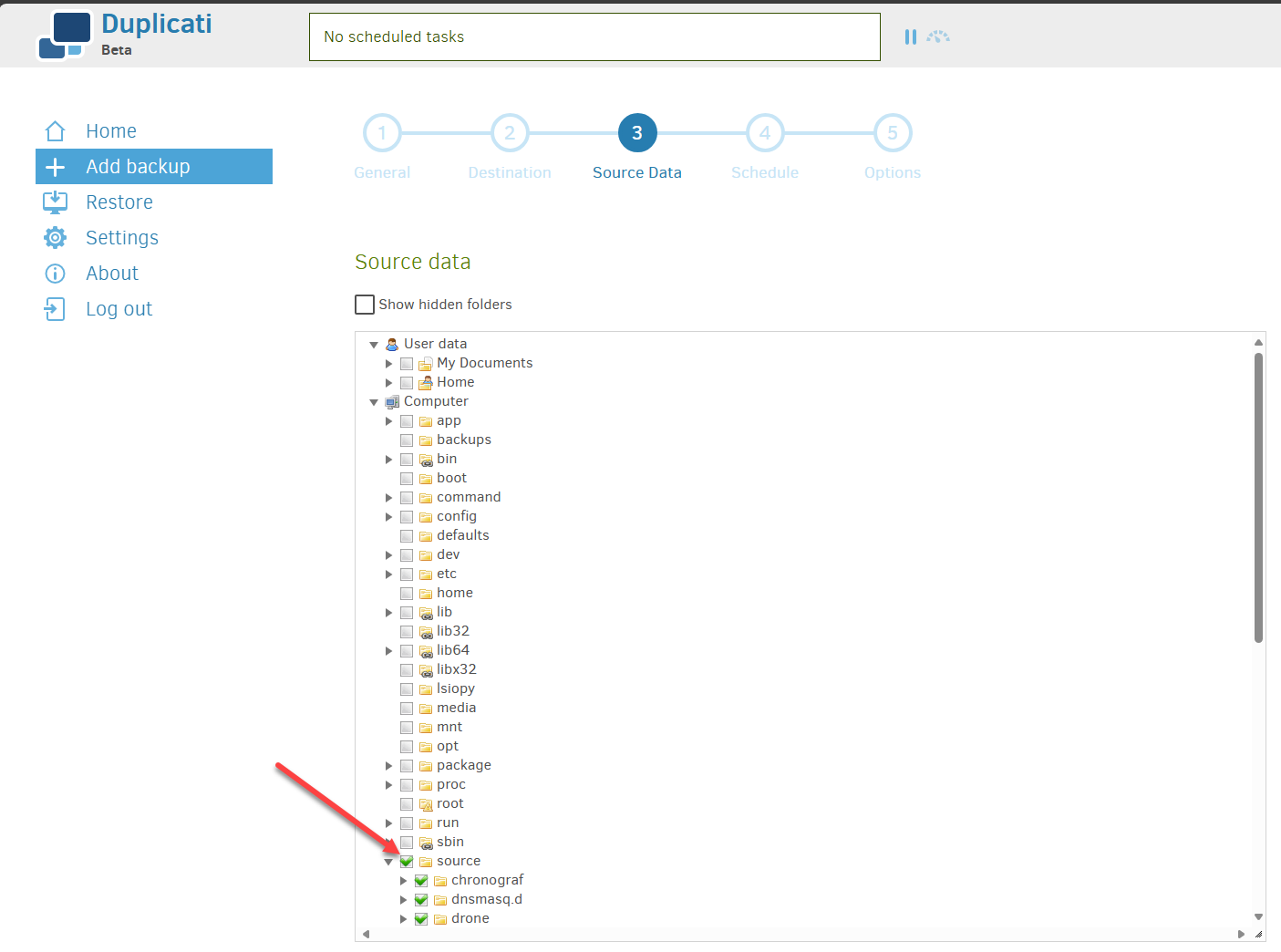

In step 3 of the wizard, you select the source of the backups. This is where the /source mount in the Docker Compose code comes in: because I mounted the root of my CephFS file system to /source, the job can see the persistent data for all of my Docker containers.

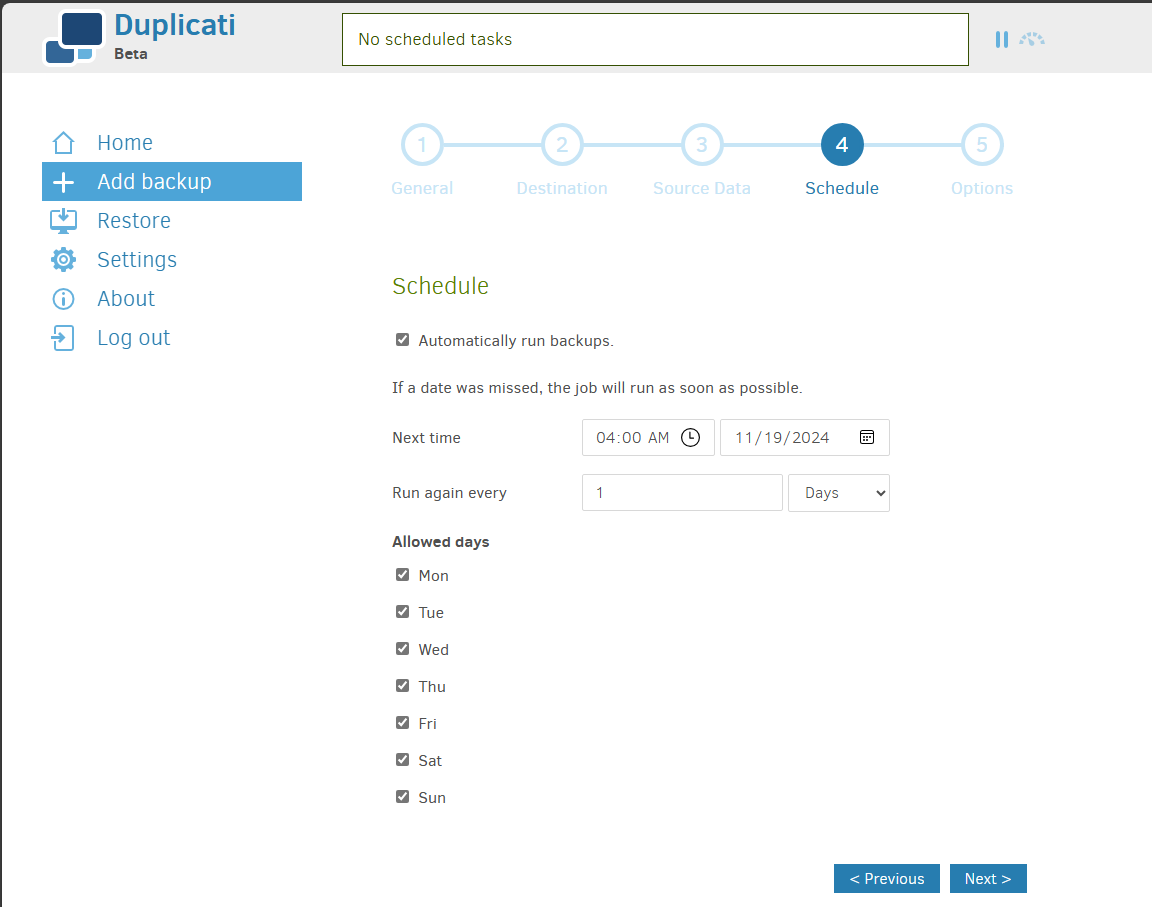

Next, configure the schedule for the Duplicati backups.

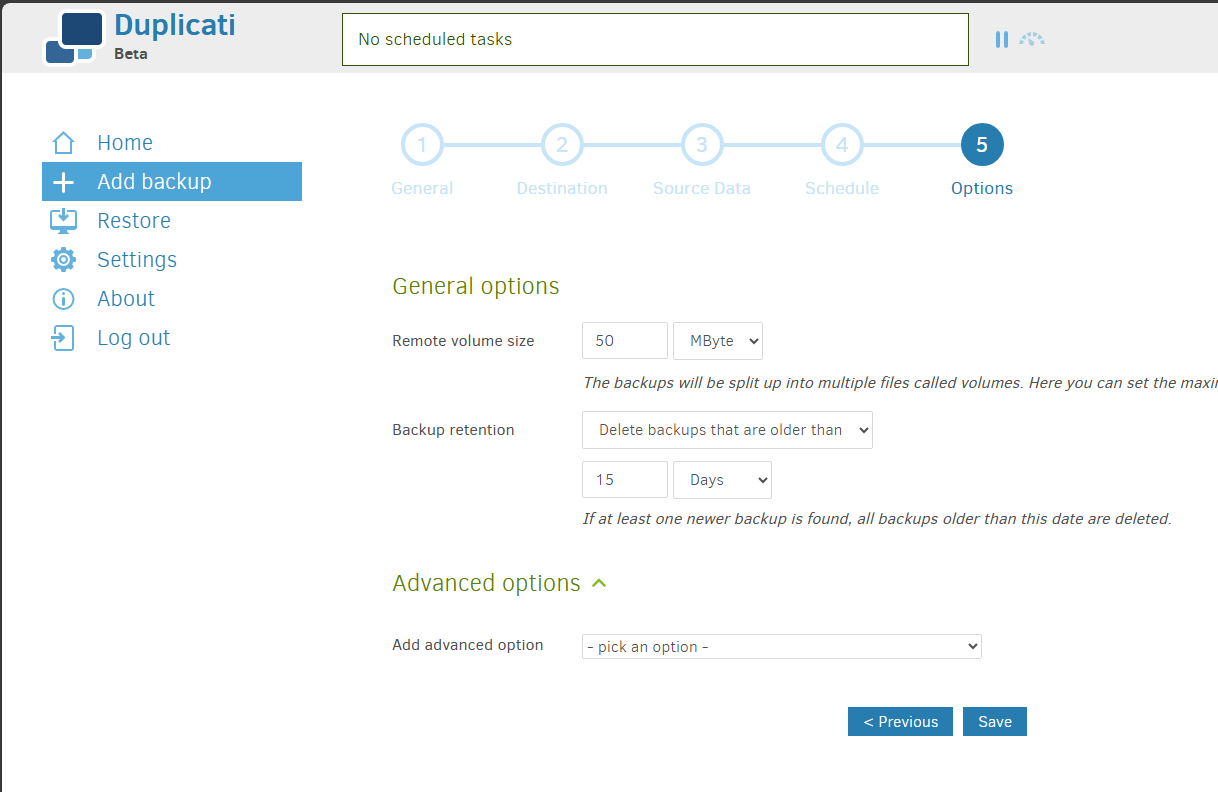

Finally, in step 5 you can set the backup retention and other advanced options.

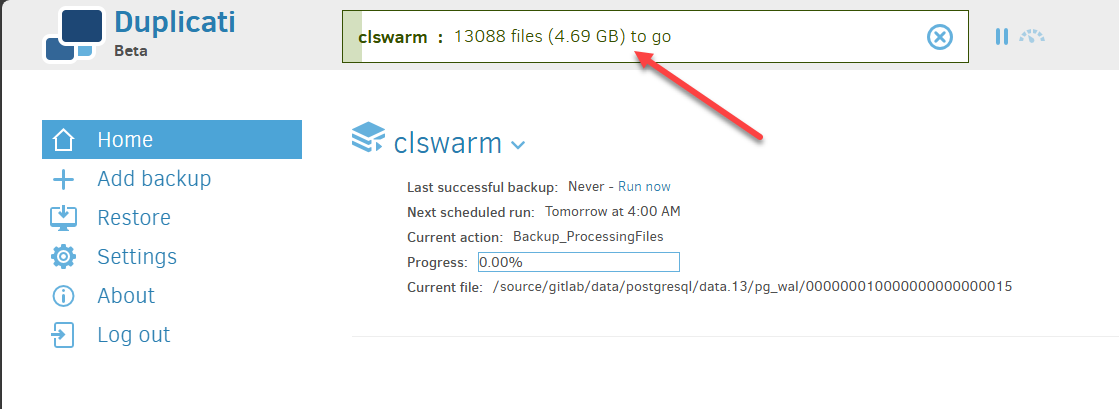

After you click Save, the backup of the Docker container volumes kicks off.

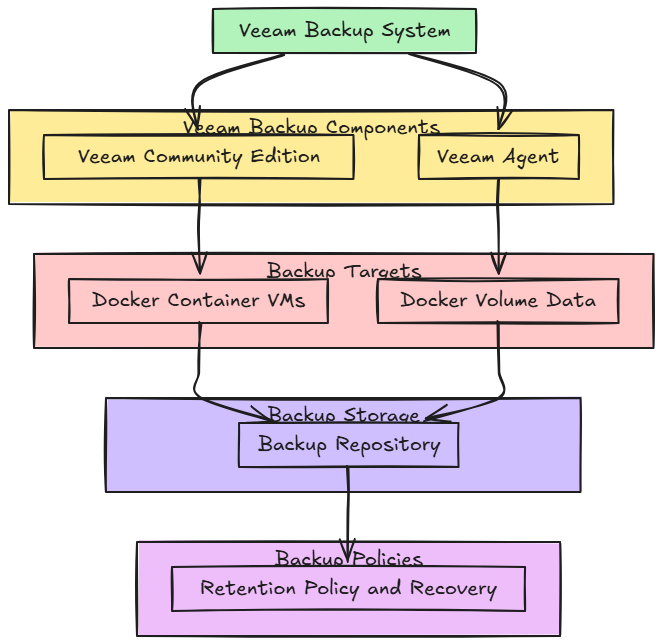

Veeam Community Edition

Another free option is Veeam Community Edition. Veeam is well known in the backup space and is a fantastic solution. The Community Edition gives you licensing for up to 10 workloads, which can be any combination of VMs and physical machines protected with agents. This means you can back up the Docker host itself, whether it is a physical or virtual machine, and also use the agent when you need it.

Learn more about Veeam Community Edition here: Free Backup Software For Windows, VMware, & More – Veeam.

Options for software-defined storage

You need file-level options like these for software-defined storage solutions such as CephFS. I recently wrote a blog post about the danger of assuming you are getting good backups of your Docker container volumes: if you only back up the virtual machines in your virtualization environment, the data stored on CephFS may not actually be protected.

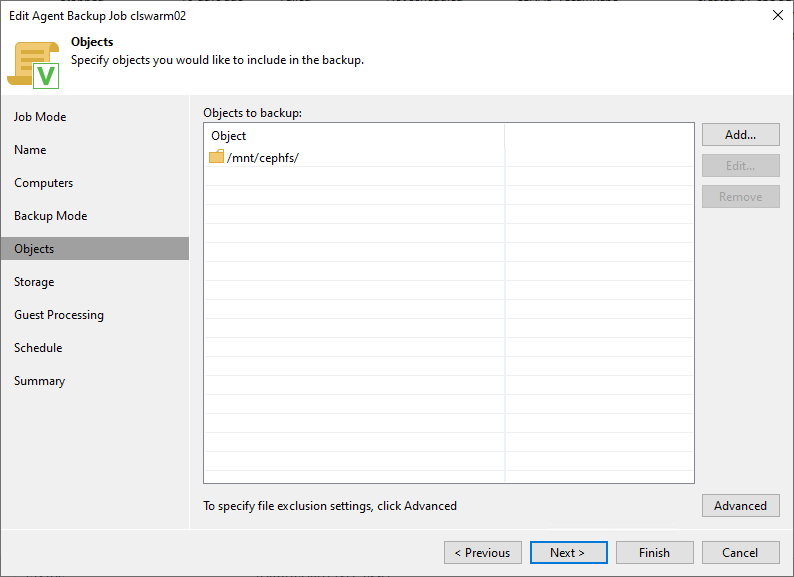

You can read that post here: Ceph Backup: Don’t Lose Your HCI Data. Below, you can see the properties of an agent-based backup job on the free Community Edition server I run, pointed at the /mnt/cephfs folder.

Below is a quick architecture overview diagram of using Veeam to back up virtual machines and to take file-level backups with the Veeam agent.

Wrapping up

When it comes to Docker containers, backing up your data is key. The container image is just the application code that runs the service. Your persistent data, however, is what your apps rely on, and it needs to be protected at all costs. Hopefully this post gives you a good overview of what is involved in protecting Docker containers and the data they use.

Google is updating how articles are shown. Don’t miss our leading home lab and tech content, written by humans, by setting Virtualization Howto as a preferred source.