Well, I am back at it again, running some crazy labs at home on a mini PC. I have been continually impressed with just how powerful these little computers are these days. With modern hardware like a 16-thread AMD Ryzen 9 processor and 96 GB of RAM, there are a lot of possibilities. Since I was recently able to push up to 250 virtual machines on the same hardware, I wanted to see what was possible with LXC containers.

You can see my recent experiment with running VMs on a mini PC here:

Table of contents

- Why LXC containers

- Going up to 400 LXC containers

- Starting off with 100 LXC containers

- 1500 LXC containers (or the quest for it anyway)

- Interesting problem

- Proxmox SDN to the rescue?

- What about Open vSwitch in Proxmox?

- No network connection, still the same issue

- Not giving up – more than one way to skin a cat

- Total LXC containers spun

Why LXC containers

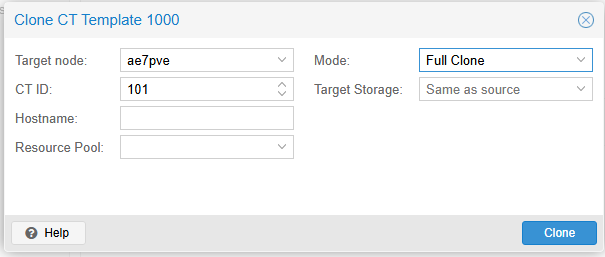

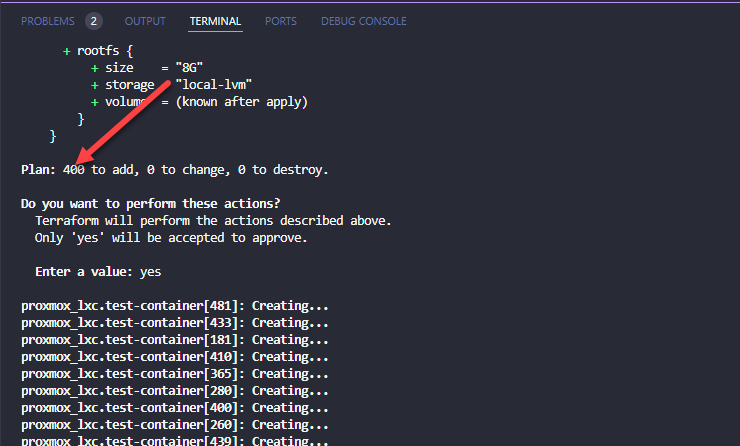

LXC containers are a great way to get an almost VM-like experience with the footprint of a lightweight OS container. They are very small, and you can spin them up in no time. Even better, Proxmox lets you create a template, just as you can with virtual machines, and customize it for your needs to use in production.

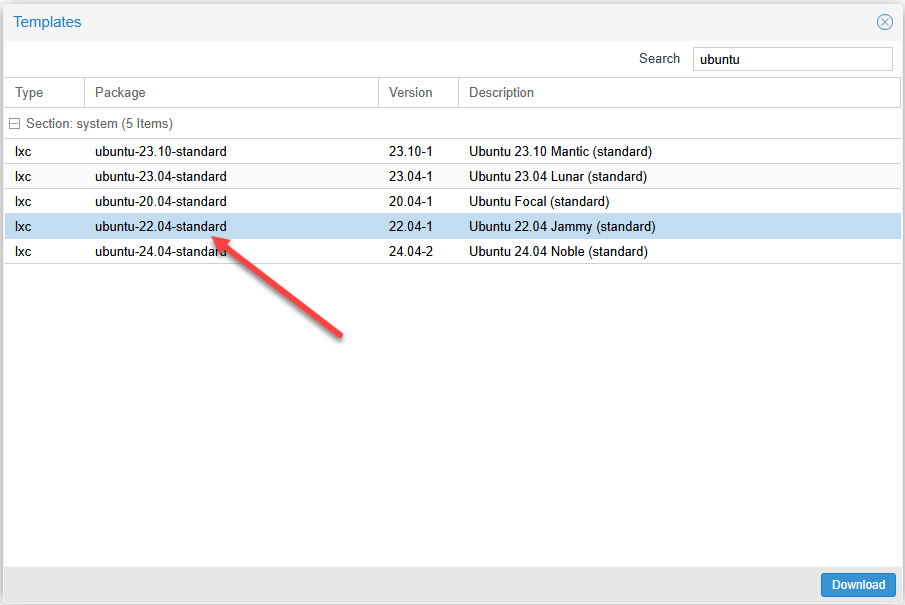

Ready to download templates

In Proxmox, you can go to your CT Templates menu in the Proxmox storage and click the Templates button.

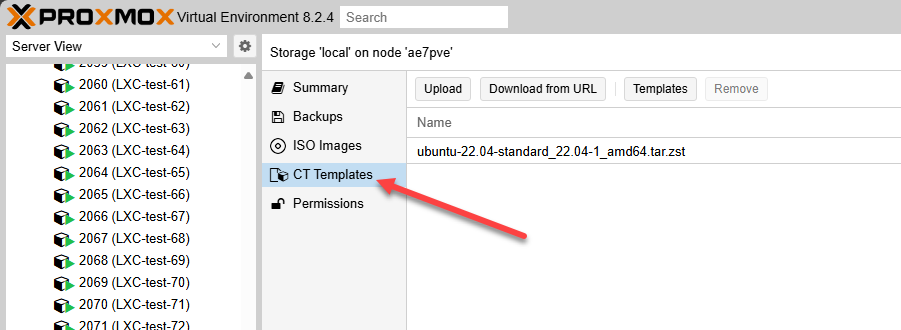

Going up to 400 LXC containers

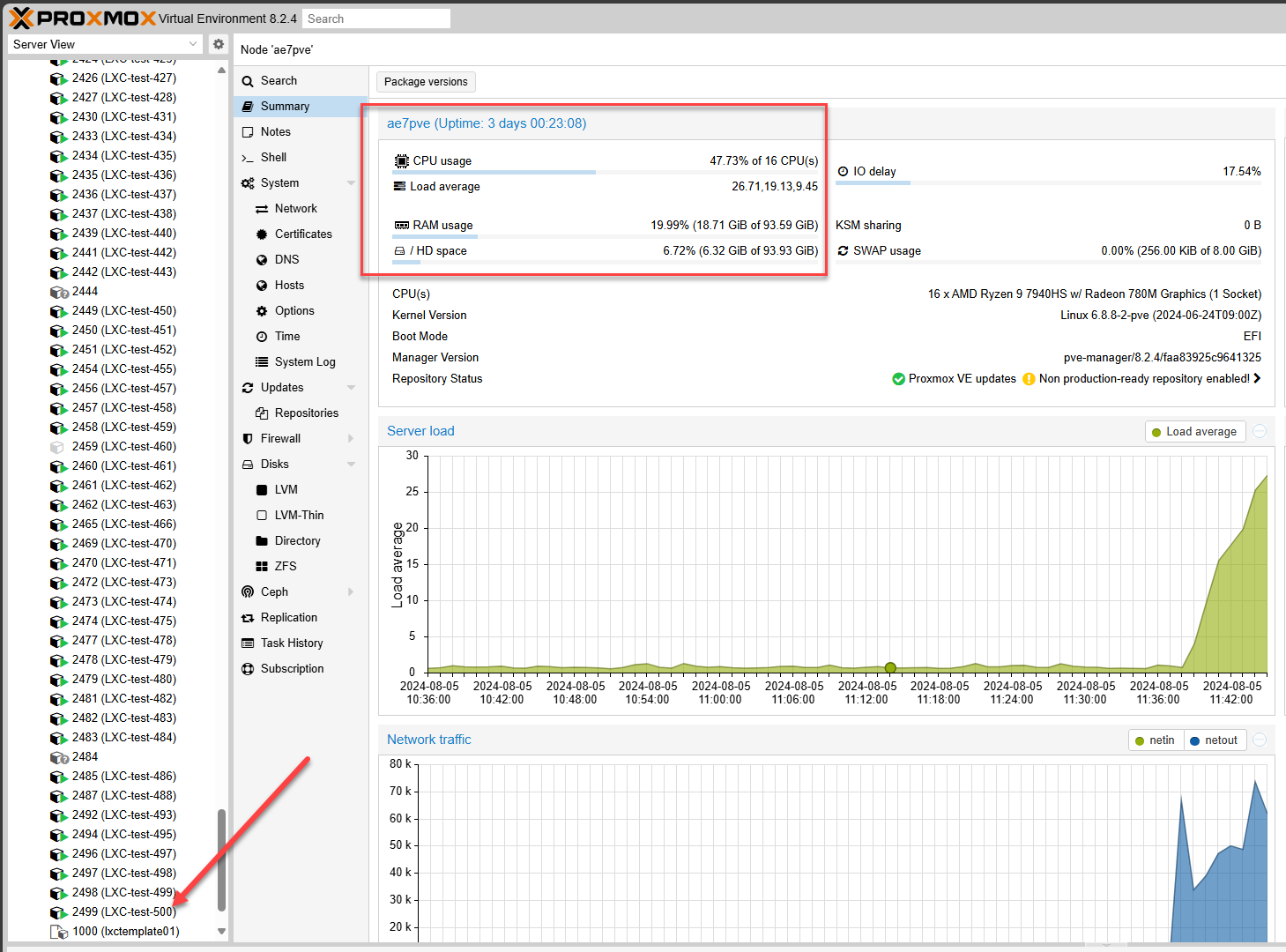

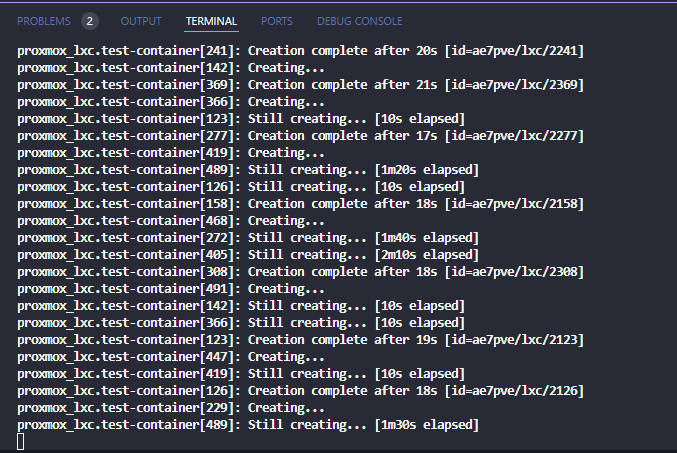

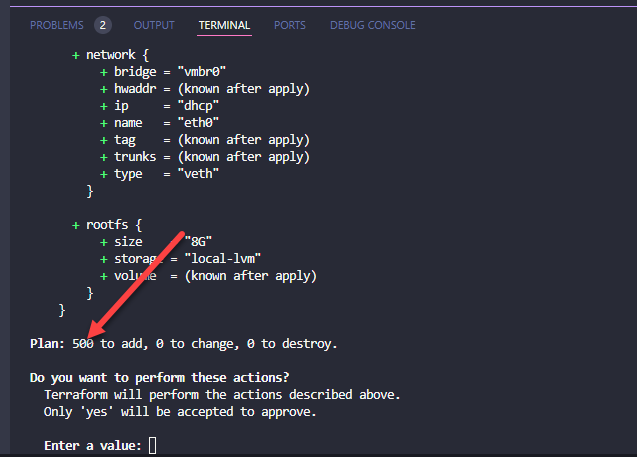

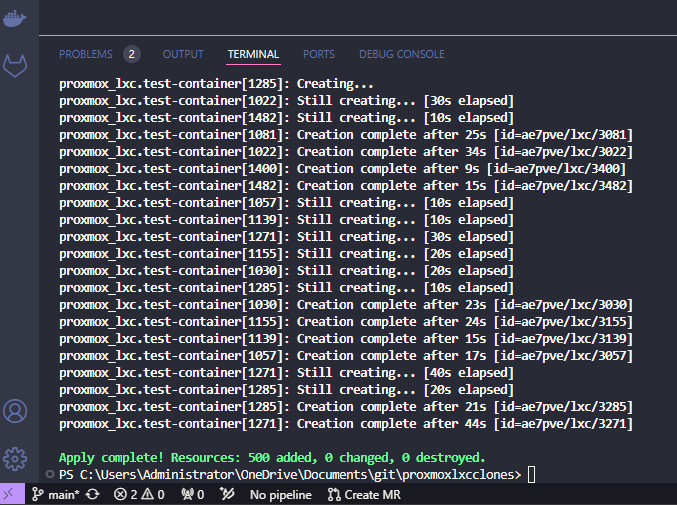

I had already started with 100 LXC containers, and the mini PC was not breathing hard at all. I decided to take it up several notches to a total of 500 containers. This meant Terraform would add 400 more to the environment.

Starting off with 100 LXC containers

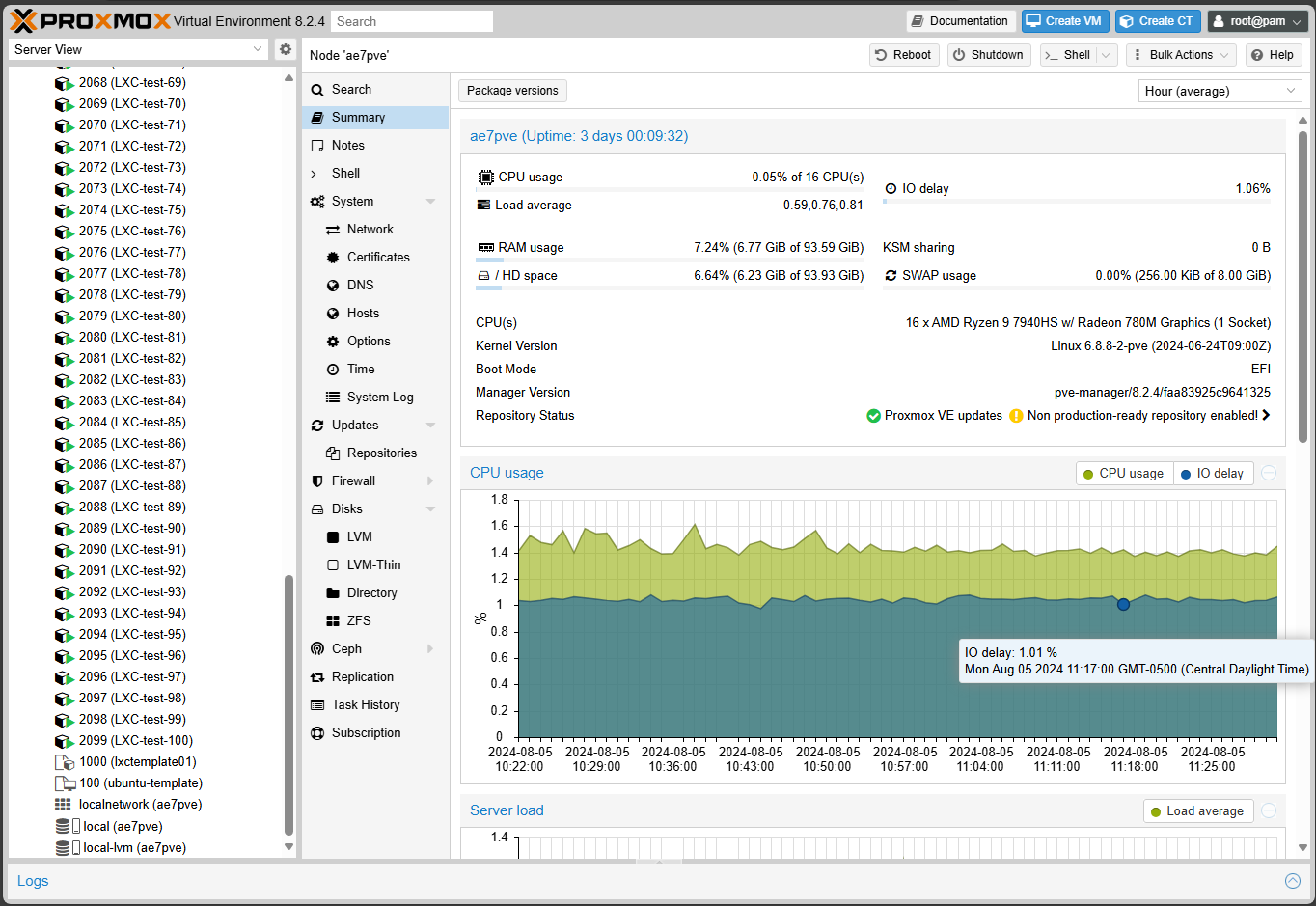

I knew this wouldn’t make the little box break a sweat, but this first round was just to get Terraform broken in and make sure the provisioning process worked as expected. It did, with no issues in the clone process. As you can see below, with 100 LXC containers running, we are sitting at around 7% memory usage, so there is lots of headroom. I am also hoping that Kernel Samepage Merging (KSM) will kick in, as it did with the VM experiment.

Kicking off the terraform apply job.

With around 500 containers running, you can see the RAM usage at around 18 GB or so.

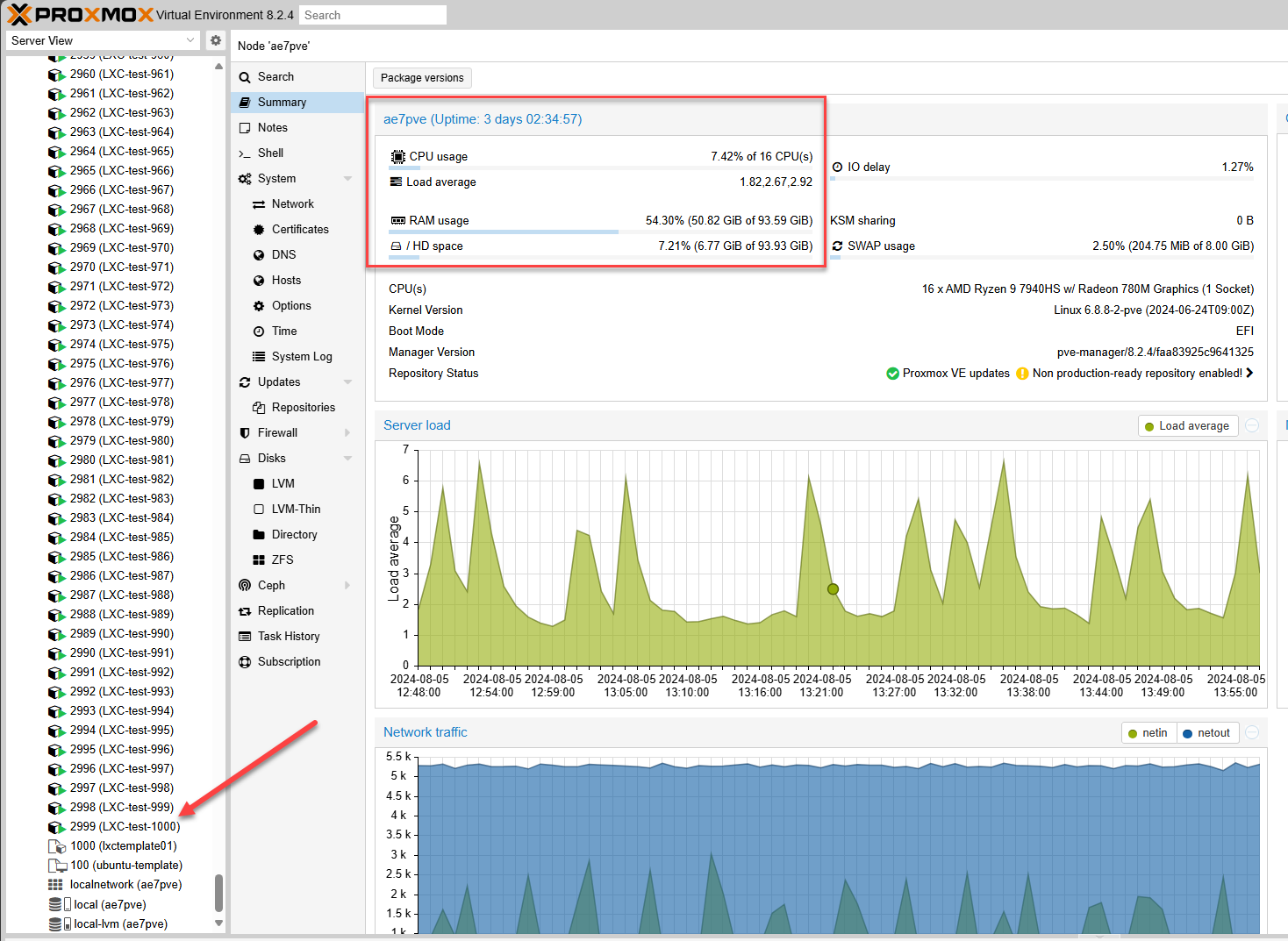

With 1000 containers, RAM usage climbed to around 50 GB.
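To put those readings in perspective, here is a minimal sketch that turns them into a rough per-container average. It uses only the approximate figures quoted above; the helper name `avg_mb_per_container` is made up for illustration, and the averages include whatever the Proxmox host itself is using, so treat them as ballpark numbers rather than measurements of a single container.

```python
# Approximate readings quoted in this post (the host has 96 GB of RAM).
TOTAL_RAM_GB = 96

observations = {
    100: 0.07 * TOTAL_RAM_GB,  # "around 7% memory usage"
    500: 18.0,                 # "around 18 GB or so"
    1000: 50.0,                # "around 50 GB"
}


def avg_mb_per_container(count, used_gb):
    """Average host RAM per container in MB (host overhead included)."""
    return used_gb * 1024 / count


for count, used_gb in observations.items():
    per_ct = avg_mb_per_container(count, used_gb)
    print(f"{count:>5} containers: ~{used_gb:.1f} GB used, ~{per_ct:.0f} MB each")
```

The per-container average does not stay constant between the three readings, so extrapolating linearly from the 100-container run would not predict the later ones well.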

1500 LXC containers (or the quest for it anyway)

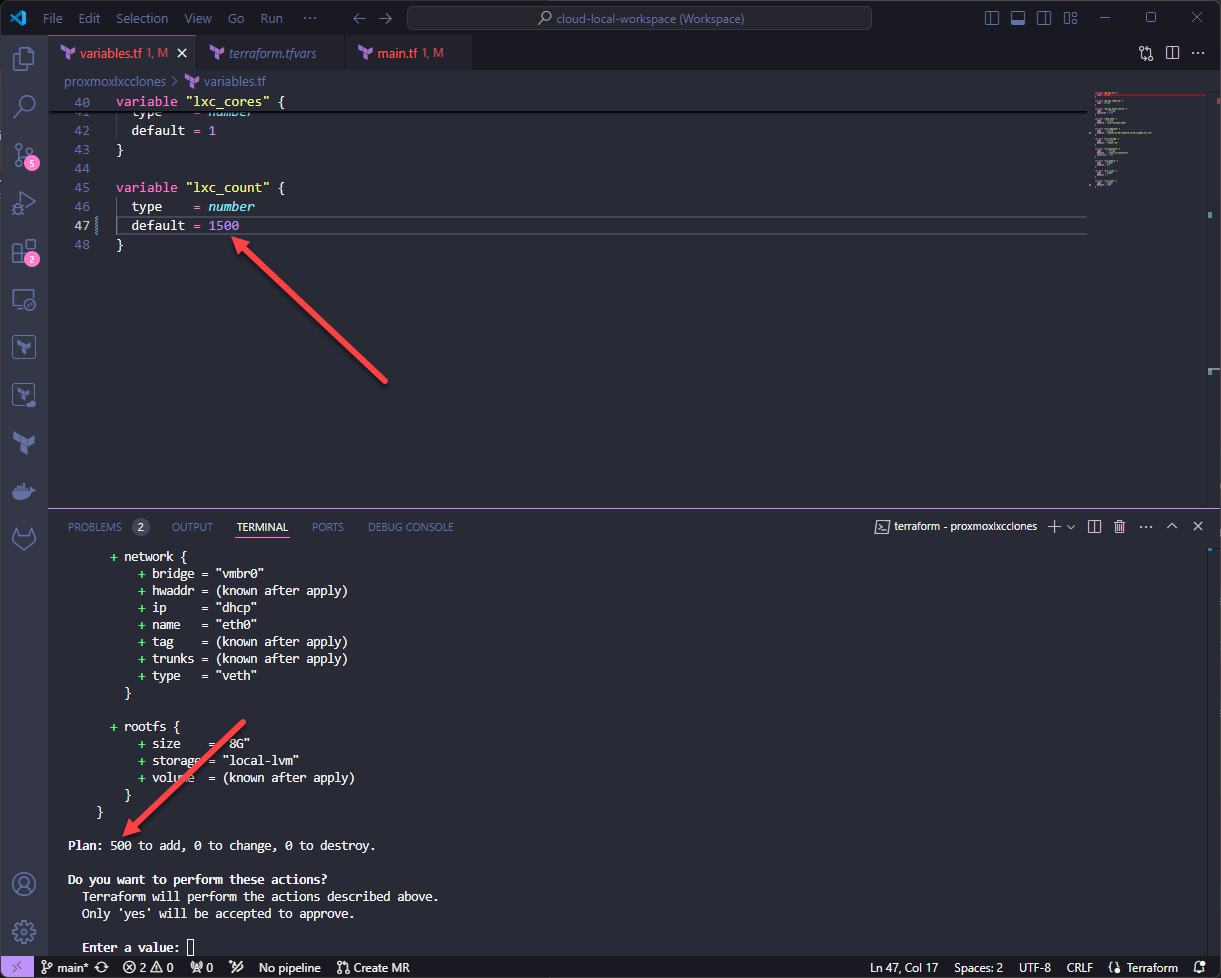

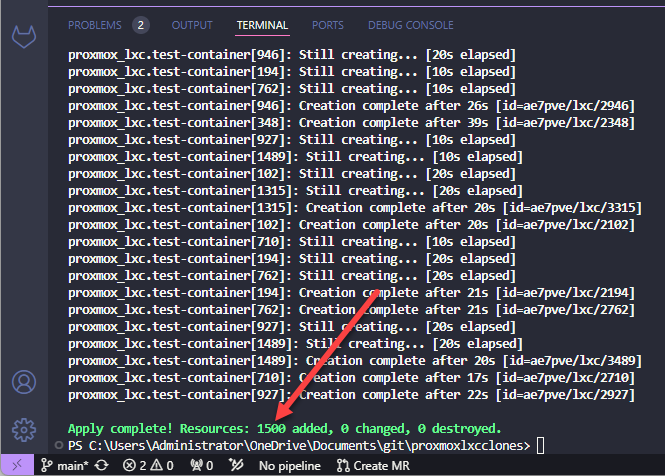

Now, let’s bump things up to 1500 LXC containers. With the 1000 containers deployed, I simply bumped up the number of containers in the Terraform configuration and ran another terraform apply.

Going after 1500 LXC containers.

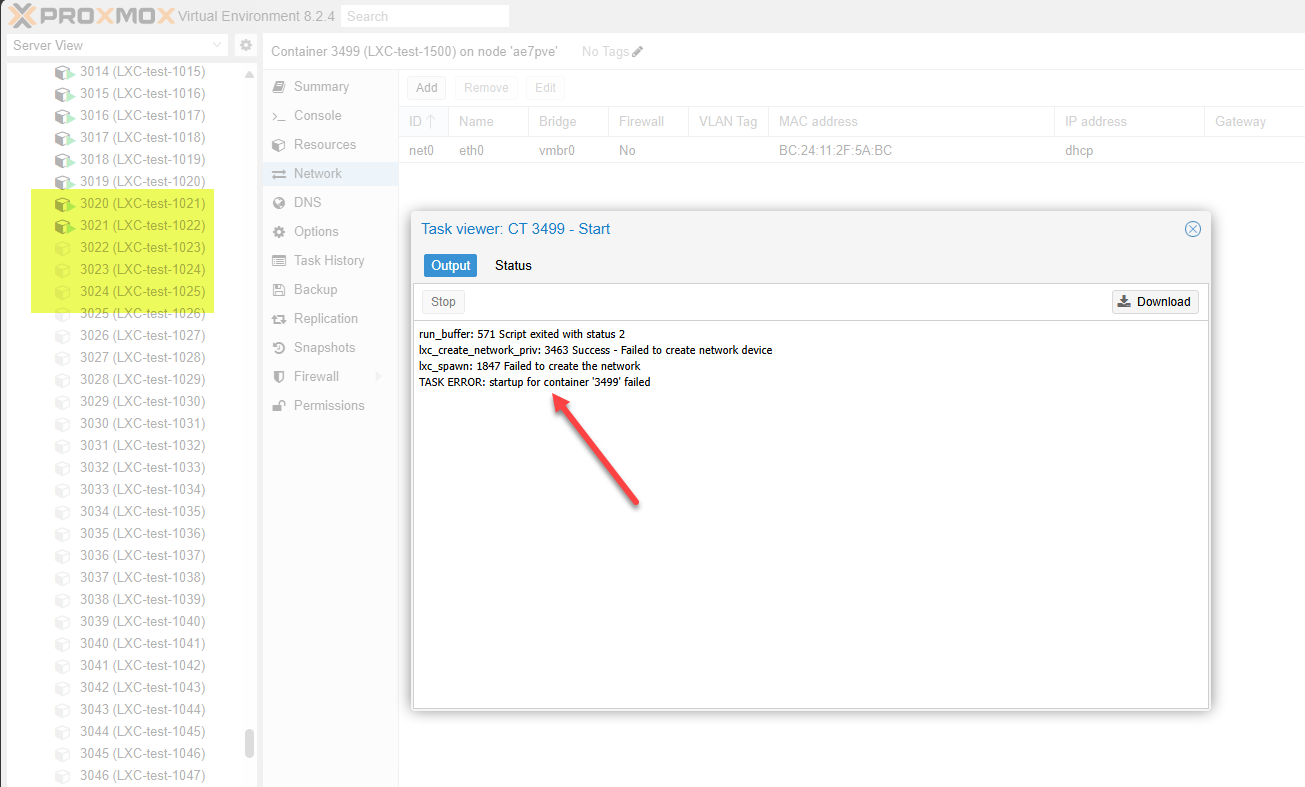

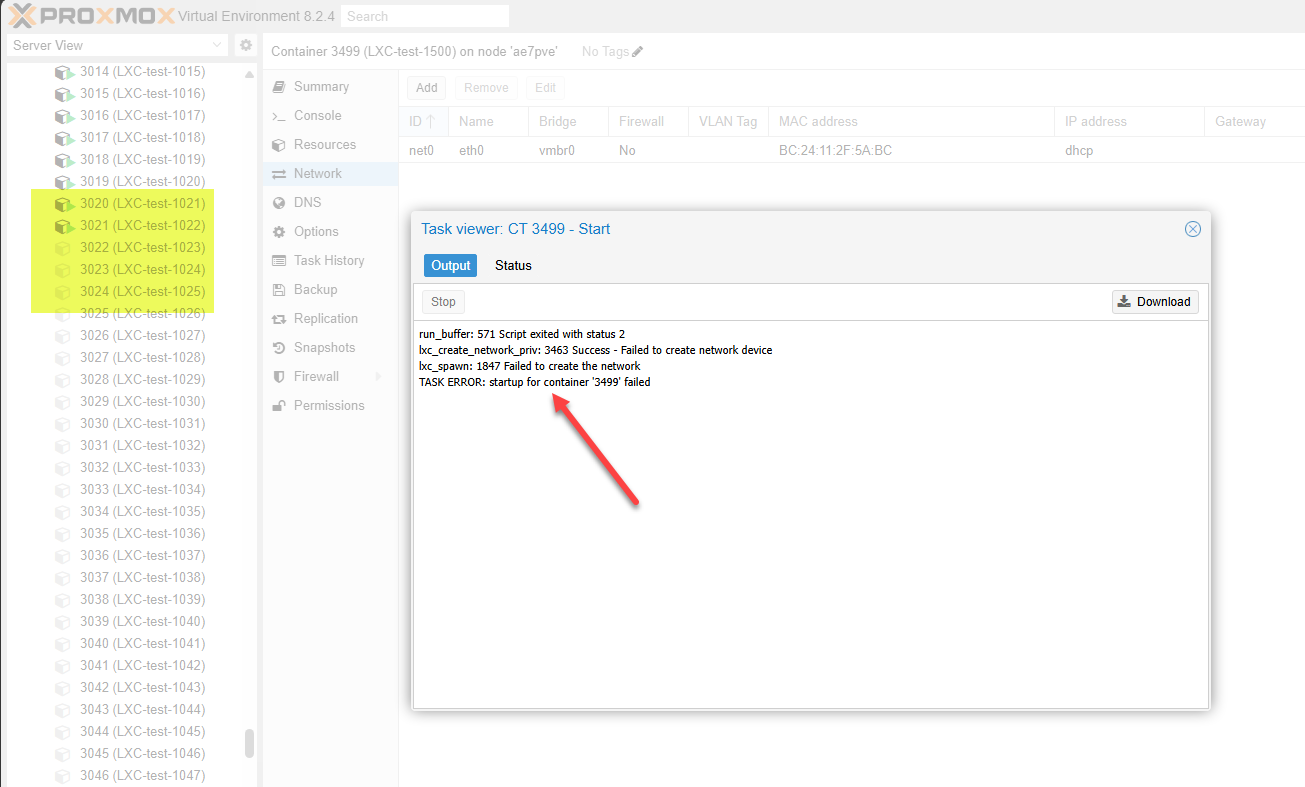

Interesting problem

I ran into an interesting problem that seems to be a limitation of the Linux bridge. The number of containers that spun up and powered on topped out at around 1024 and went no higher. The remaining LXC containers were created by Terraform, but they were not powered on.
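One way to confirm a running-versus-created gap like this is to tally container states from the text that `pct list` prints on the Proxmox host. The sketch below assumes the usual column layout (VMID, Status, Lock, Name) and runs against a small made-up sample rather than real output; `count_by_status` is a hypothetical helper, not part of any Proxmox tooling.

```python
from collections import Counter

# Illustrative sample only, laid out like `pct list` output (assumed columns:
# VMID, Status, Lock, Name). The IDs and names here are made up.
SAMPLE_PCT_LIST = """\
VMID       Status     Lock         Name
100        running                 lxc-100
101        running                 lxc-101
102        stopped                 lxc-102
"""


def count_by_status(pct_list_output):
    """Tally containers per status from `pct list`-style text."""
    counts = Counter()
    for line in pct_list_output.splitlines():
        fields = line.split()
        # Skip blank lines and the header row.
        if len(fields) < 2 or fields[0] == "VMID":
            continue
        counts[fields[1]] += 1
    return counts


counts = count_by_status(SAMPLE_PCT_LIST)
print(f"running: {counts['running']}, stopped: {counts['stopped']}")
```

On a real host, you would pass in the captured output of `pct list` instead of the sample string and compare the running count against the number of containers Terraform reports as created.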

I found several Proxmox forum posts regarding limitations with Linux bridge at 1024, etc. Take a look at this interesting post here:

Proxmox SDN to the rescue?

After trying several different things with the mini PC, including editing all kinds of files to try to find the limitation, I gave up on this front. From what I am reading, the limit of 1024 bridge ports comes from the Linux kernel itself, and outside of recompiling the kernel, there isn’t really a way around it from what I can tell.

Please do correct me if I am wrong on that front or if you have found a workaround to this interesting issue.
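For reference, the 1024 figure lines up with how the kernel's bridge code sizes its port numbers: `net/bridge/br_private.h` defines `BR_PORT_BITS` as 10 and `BR_MAX_PORTS` as `1 << BR_PORT_BITS`. Below is a minimal sketch for checking how close a bridge is to that ceiling by counting the entries under its `brif` directory in sysfs. The bridge name `vmbr0` is an assumption (it is the usual Proxmox default), and `count_bridge_ports` is a hypothetical helper.

```python
import os

# Compile-time constants from the Linux bridge code (net/bridge/br_private.h).
BR_PORT_BITS = 10
BR_MAX_PORTS = 1 << BR_PORT_BITS  # 1024 ports per bridge


def count_bridge_ports(bridge="vmbr0", sysfs_root="/sys/class/net"):
    """Count interfaces attached to a bridge; None if the bridge is absent."""
    brif = os.path.join(sysfs_root, bridge, "brif")
    if not os.path.isdir(brif):
        return None
    # Each attached port shows up as one entry under <bridge>/brif.
    return len(os.listdir(brif))


ports = count_bridge_ports()  # "vmbr0" is an assumed bridge name
if ports is None:
    print("Bridge not found on this machine")
else:
    print(f"{ports} of {BR_MAX_PORTS} bridge ports in use")
```

If the port count on the bridge is well under 1024 when containers stop starting, that would point to a different limit than the bridge itself.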

I had an idea of using Proxmox SDN instead to see if just using the simple SDN network configuration might allow me to connect the LXC containers there and SNAT the traffic out through the host.

However, I got the same result with the Simple SDN vnet and zone. That made me think about VXLAN, since the simple SDN configuration looks to just use Linux bridging.

Even with the VXLAN configuration, I still got the same error.

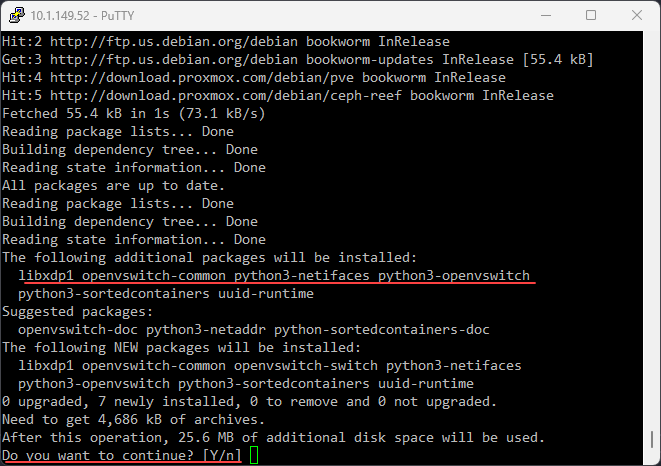

What about Open vSwitch in Proxmox?

As a last-ditch effort, I thought about using Open vSwitch, even though I don’t have much experience with it in Proxmox. I decided to install it and give it a go.

No network connection, still the same issue

I started thinking this may not be directly related to the network to which the containers are connected. Rather, I think it is related to the network of the Proxmox host itself. The reason is that I switched my Terraform code from using a network connection to no network connection for all the LXC containers and still got the error.

Not giving up – more than one way to skin a cat

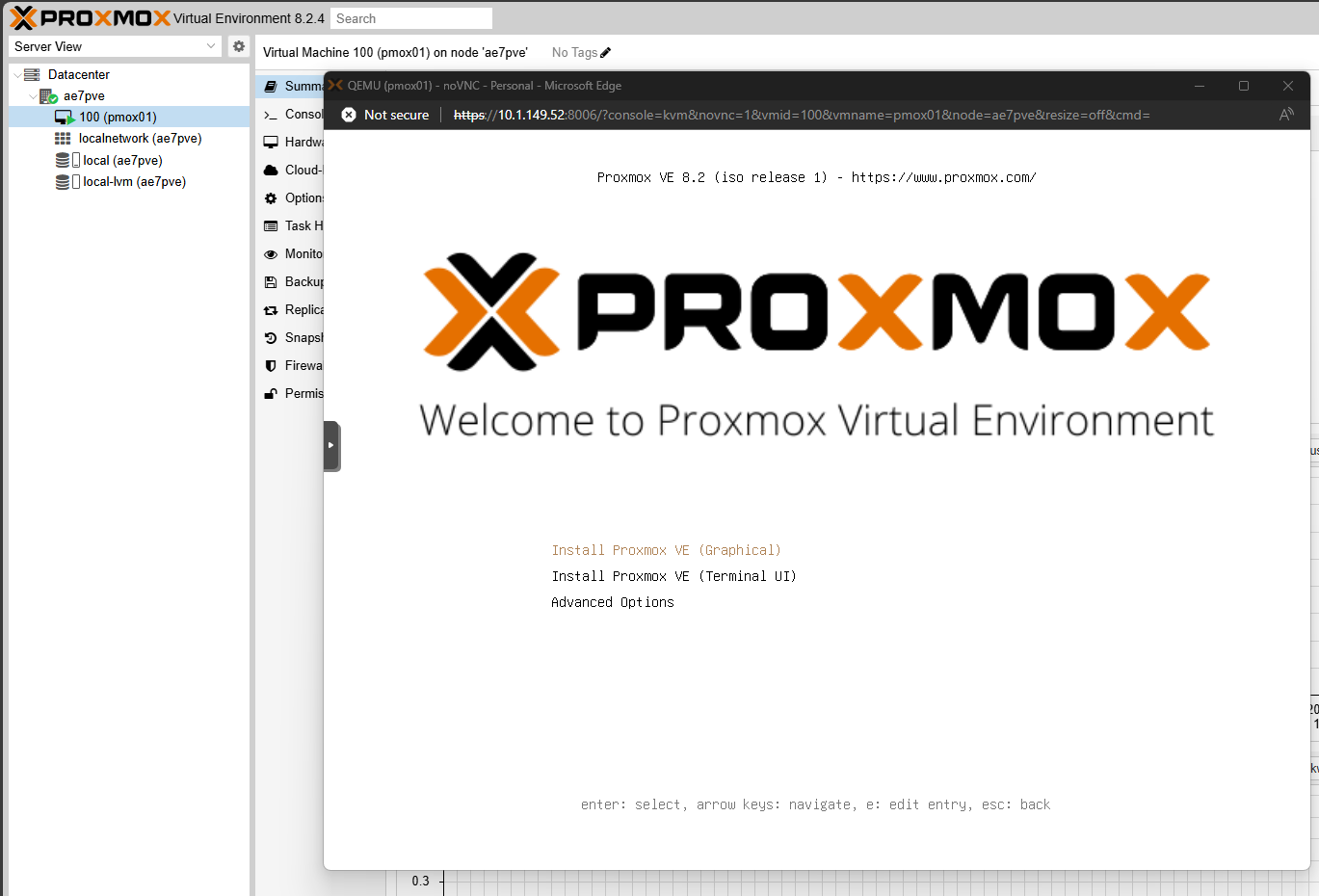

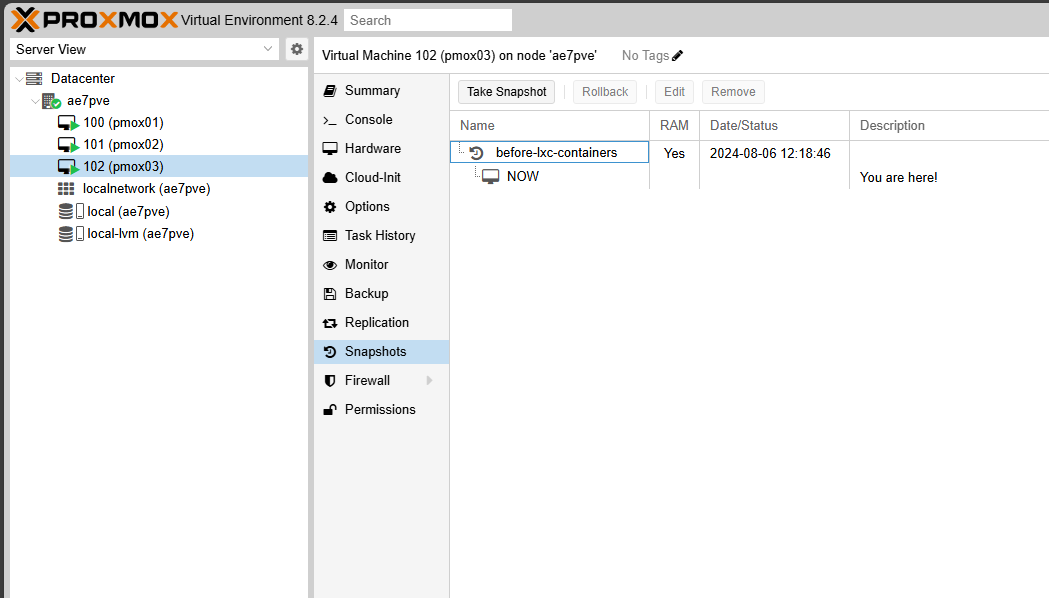

Well, I am not known to give up easily, and there is more than one way to skin a cat 🙂 To push the envelope, I decided to use nested Proxmox instances to answer the question I set out with: how many LXC containers could I host?

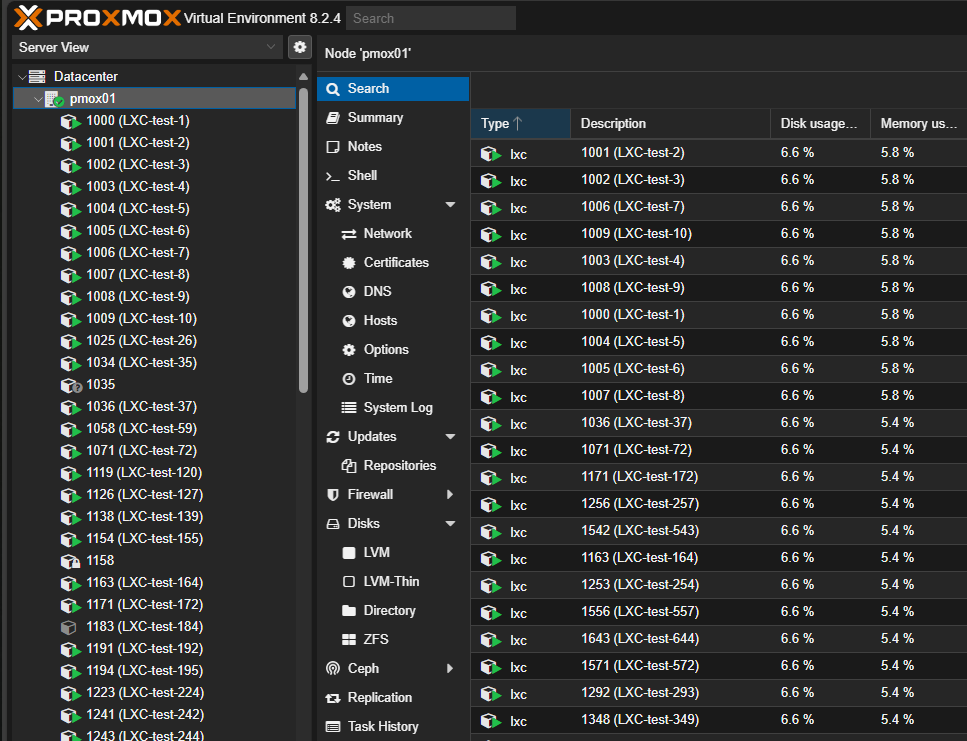

Below, I am logged into the nested Proxmox instance, watching the LXC containers spin up from the Terraform apply.

Total LXC containers spun

So, all said and done, I was able to fit 1950 LXC containers inside 3 nested Proxmox installations on a physical Proxmox server running on my mini PC with 96 GB of RAM. There is probably more tweaking and experimenting I can do to see how far I can extend this. I would really like to get to the bottom of the 1024-container limit on a physical host and whether there is a possible workaround.
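The arithmetic behind the nested approach can be sketched as follows. The even split across instances is an assumption made for illustration (the exact distribution may have differed), and `min_hosts` and `even_split` are hypothetical helper names.

```python
import math

PER_HOST_CEILING = 1024  # approximate per-host ceiling observed in this post
TARGET = 1950            # total containers reached in this post
NESTED_HOSTS = 3         # nested Proxmox installations used


def min_hosts(target, ceiling):
    """Smallest number of hosts that keeps each one at or under the ceiling."""
    return math.ceil(target / ceiling)


def even_split(target, hosts):
    """Spread containers as evenly as possible across hosts (an assumption)."""
    base, extra = divmod(target, hosts)
    return [base + 1 if i < extra else base for i in range(hosts)]


print("Minimum hosts needed:", min_hosts(TARGET, PER_HOST_CEILING))
print("Even split across", NESTED_HOSTS, "hosts:", even_split(TARGET, NESTED_HOSTS))
```

Under that even-split assumption, each nested instance carries far fewer containers than the point where the single host stopped starting them.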

Do let me know in the comments if you have found a way around this limitation!


How can I get this mini server?

Hello Camara,

This is the Geekom AE7 mini PC with the Ryzen 9 7940HS processor and 96 GB of memory. You can find it from most online retailers.

Thanks again,

Brandon