Shut Down Server Due to Heat: Consolidating My Home Lab

Here in the northern hemisphere, summer is approaching and temperatures are rising. After replying to a comment on my Minisforum MS-01 blog post about summer temps and moving workloads to mini PCs, I wanted to write a more in-depth post on the topic. For those of you running home lab environments, do you have a set plan you execute in the summer months? Do you shut your lab down entirely? Do you shut down whole server racks, a single server, most of your servers, or something else?

My lab plans for the summer

This year, I plan to migrate my production home lab workloads to mini PCs to reduce power draw, noise, and heat. My aging Supermicro servers have served me very well over the past several years, and they probably still have some life left in them. However, I feel the need to change things up a bit in the lab, especially with all the great mini PC hardware available today.

I have a mini split in my server area here in the Southeastern US, and it is definitely needed during the hottest summer days and months to keep things in the high 70s or so. On the hottest days, it struggles to keep the room out of the 80s.

New cluster

Right now, I have a whole slew of mini PCs from testing and reviews that I can use for housing workloads. However, I would like to build a uniform cluster of identical mini PCs, ideally three MS-01 units.

Is there a model of mini PC you guys are looking at for collapsing down your home lab for better efficiency?

Standalone hosts

There is also the possibility of running standalone hosts with good disaster recovery plans. Clusters are nice to have for automatic HA and failover capabilities. However, for most home labs, they aren't absolutely necessary.

As I have transitioned to more containerized workloads on fewer VMs, collapsing down to fewer hosts is starting to make sense.

Current power draw

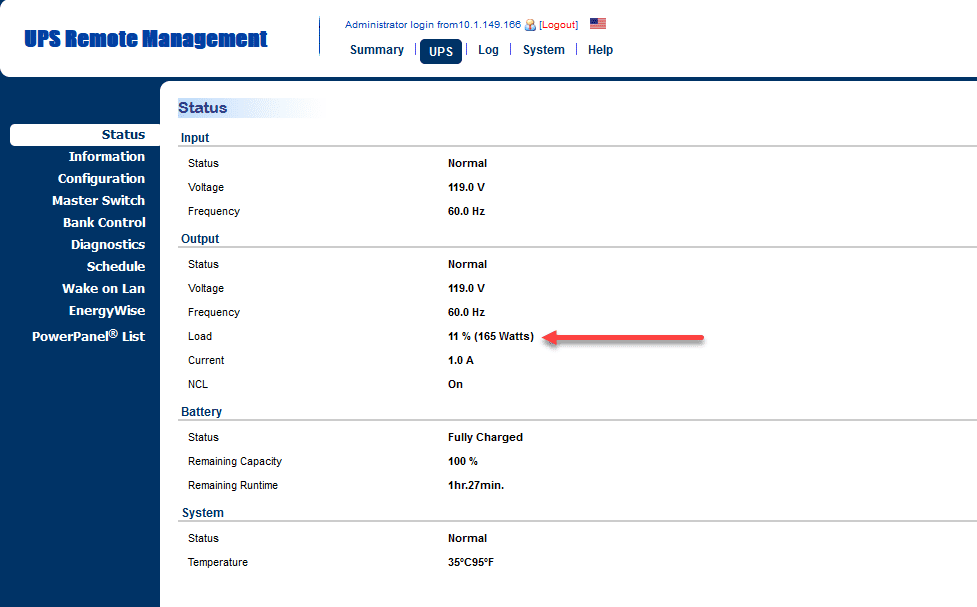

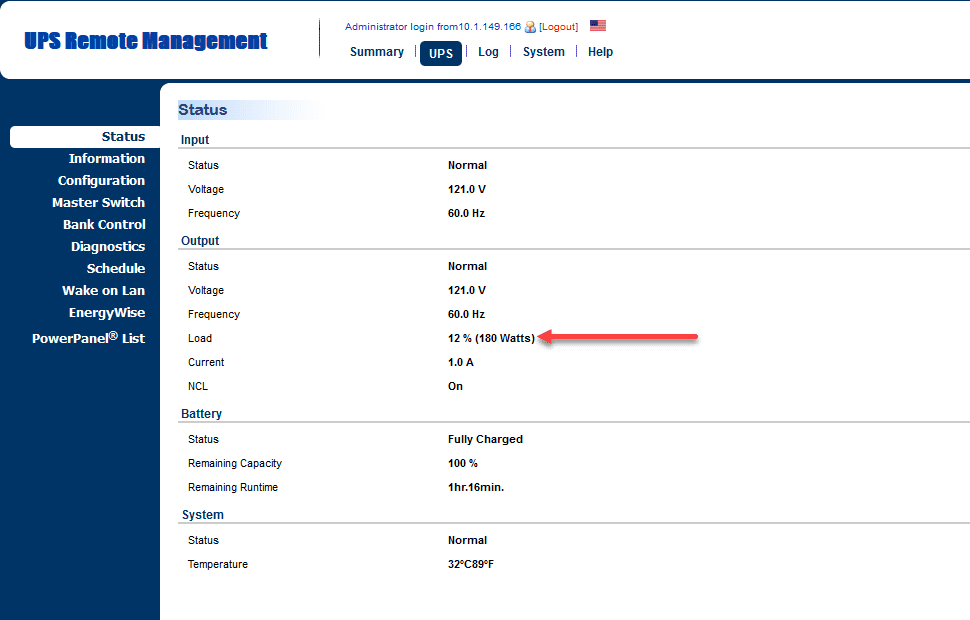

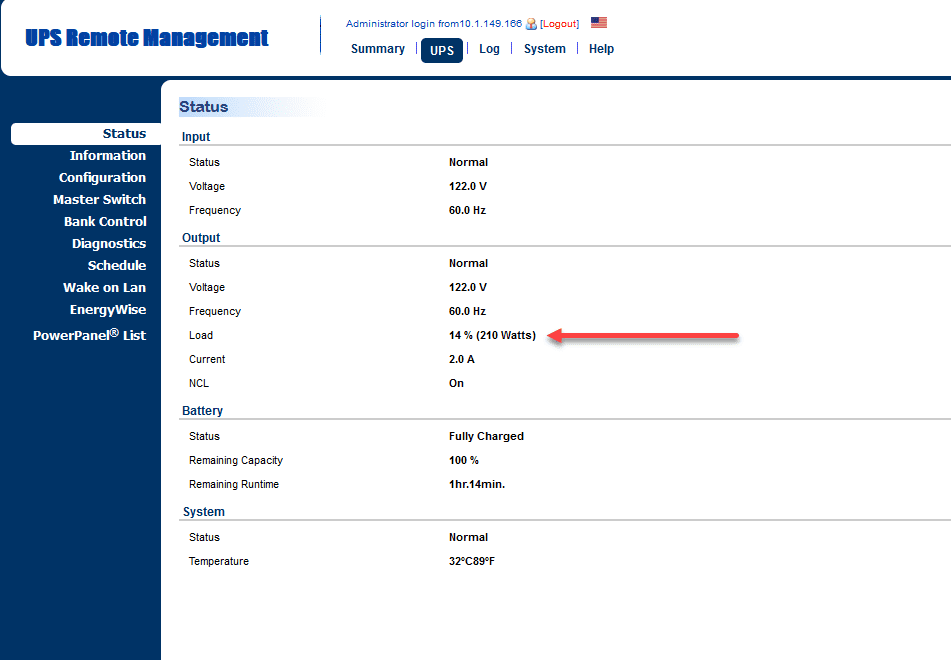

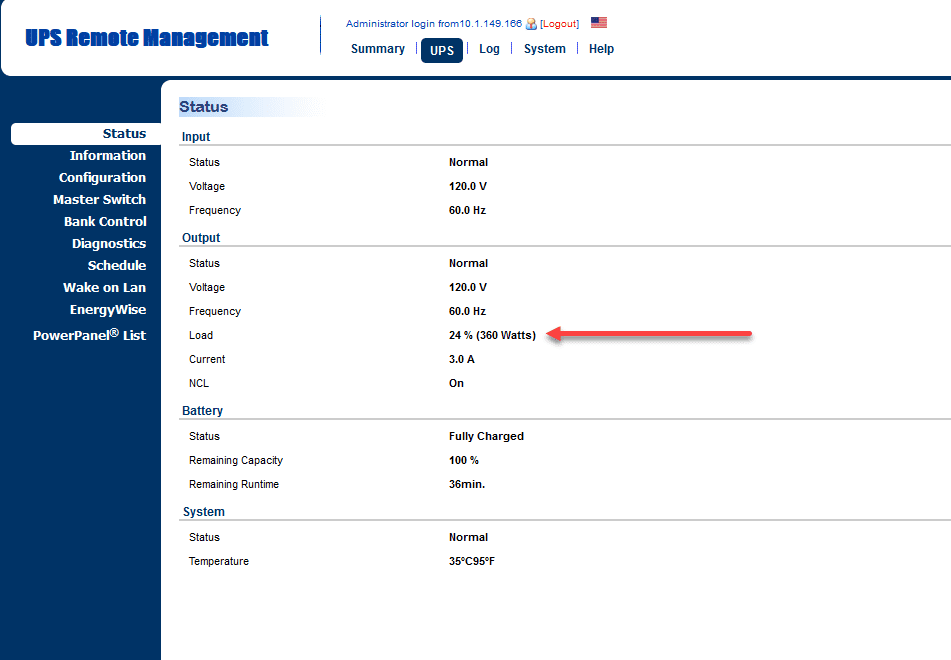

With the older Supermicro servers running and the network gear that I have plugged into my two UPSs, the power draw looks like the following:

On the second UPS

Shutting down my vSAN Cluster

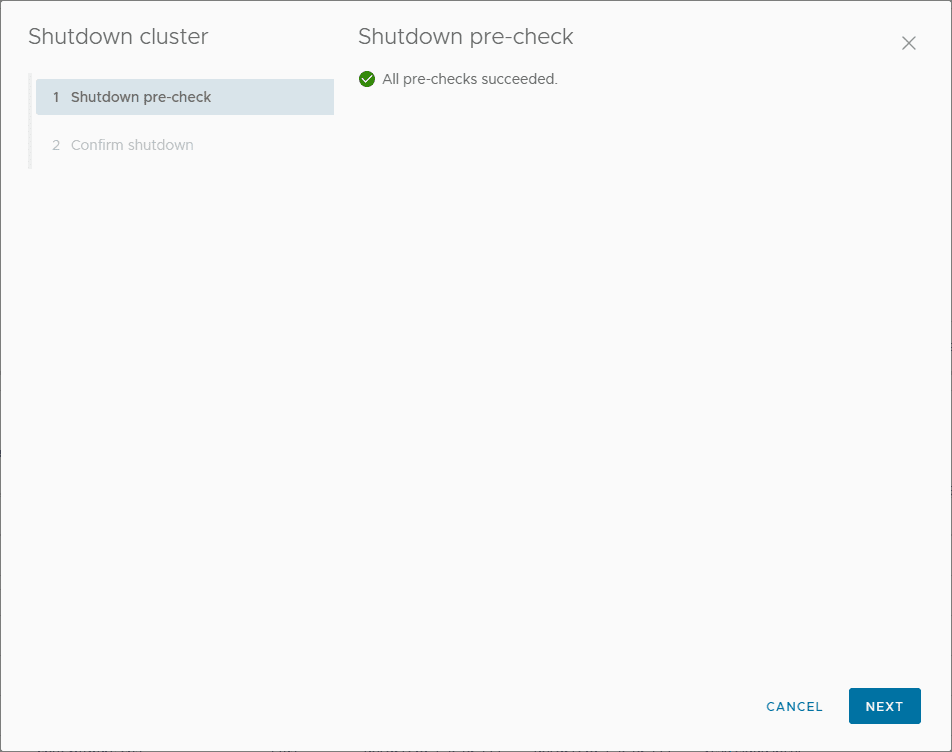

VMware vSAN clusters have a built-in shutdown cluster option that shuts the cluster down properly and in the right order. It checks prerequisites and steps through the process using orchestration.

After moving my critical workloads to my MS-01 and another mini PC, and making sure they were included in backups, I initiated the shutdown of the vSAN cluster.

I entered the shutdown reason and then clicked Shutdown.

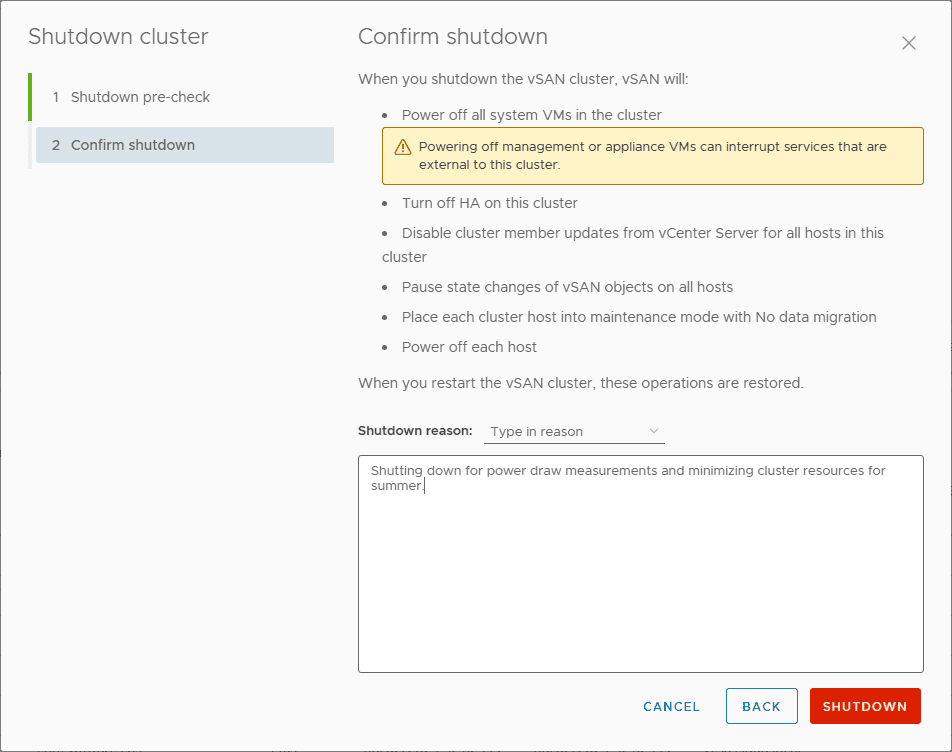

The shutdown process begins.

Power savings after shutting down the vSAN cluster

After powering down the vSAN cluster, this is the power draw on the first UPS. It went from 210 watts down to 165 watts.

This is the power draw on the second UPS, which carried the majority of the hosts. It went from 360 watts down to 180 watts.

If my calculations are correct, I am down 225 watts from where I started with the Supermicro cluster running. I can already tell a tremendous difference in the heat of the room as well, which is the driving reason I started this consolidation as a test.
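For anyone who wants to check the math, here is a quick Python sketch that adds up the savings from the two UPS readings above. The energy estimate at the end assumes the load runs 24/7 over a 30-day month, which is my assumption for illustration and not a measured figure. The function names are just ones I made up for this example.

```python
# Before/after readings in watts, taken from the two UPS figures in this post.
readings = {
    "UPS 1": (210, 165),
    "UPS 2": (360, 180),
}


def watts_saved(readings):
    """Sum the drop in power draw across all UPS units."""
    return sum(before - after for before, after in readings.values())


def kwh_per_month(watts, hours_per_day=24, days=30):
    """Convert a constant load in watts to kWh over a month.

    Assumes the load is constant, running 24 hours a day for 30 days.
    """
    return watts * hours_per_day * days / 1000


saved = watts_saved(readings)
print(f"Total reduction: {saved} W")
print(f"Estimated energy saved: {kwh_per_month(saved)} kWh per month")
```

Multiply the kWh figure by your own electricity rate to get a rough monthly dollar amount. This doesn't account for the reduced cooling load, which I can't measure yet.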

Shut down server cluster: Is this permanent for me?

It could be. Honestly, I would like to have better HA configured for my current setup now that the server cluster is shut down, but I am pleased with the results so far. This should make a noticeable difference in the power bill, and the savings are compounded by the fact that I won't have to cool the room as much, although the cooling cost is still an unknown to me at this point.

What are your plans for the summer? Are you struggling with heat in your home lab? Are you consolidating, powering off servers, or moving to mini PCs? Let me know in the comments, as I would love to get some discussion going on this topic.

Please specify F or C when mentioning temperatures. America isn’t the only country on the internet.

S B,

Thank you for the comment! Yes, definitely. I need to remember that not everyone in the world uses the old system. I wish we would standardize on metric here as well!

Brandon