Running a Dissimilar VMware Cluster on Mini PCs

As I have written about quite a bit lately, I have been paring down the home lab from my older Supermicro servers to mini PCs that I have been testing over the past few months. One of the main driving factors for downsizing is heat and power consumption. Both are becoming increasingly important for home labbers who want to get the most out of their labs without breaking the bank on cooling and electricity. I wanted to give an update on how things are going and on the VMware cluster I configured with mini PCs.


VMware cluster and why you want one

If you are running VMware vSphere at work or at home (many still are, although that number is dropping in the wake of Broadcom’s acquisition of VMware), ESXi clustering is a great way to enhance the experience of running self-hosted services like virtual machines and containers.

With a VMware cluster, or vSphere cluster as it is commonly called, you configure multiple ESXi hosts as a single logical unit. The best results typically come with shared storage. A cluster gives you access to features like vSphere Distributed Resource Scheduler (DRS) and vSphere High Availability (HA).

Clusters remain the way to get the best features and capabilities out of VMware ESXi for your VMs and services.

Requirements for a VMware cluster

To build a VMware cluster, you need to meet a few requirements. First, you need a vCenter Server to create and manage clusters in the vSphere Client. With the right licensing, vCenter Server also enables other features, such as distributed virtual switches.

Once you deploy vCenter Server on a single ESXi host, you will be able to proceed with cluster creation by creating a new data center in vSphere and a new cluster object.

Once you have a single ESXi host running, you can actually enable vSphere cluster services with just that one host, then continue by adding more ESXi hosts to the cluster. A cluster can manage a single host or many.

Also, to take advantage of all the features of a VMware cluster, you will need shared storage. This can be traditional shared storage, like iSCSI or NFS on a SAN/NAS, or a hyperconverged infrastructure (HCI) configuration with VMware vSAN.

Starting with a vSphere 7.0 update, VMware introduced a new cluster wizard that makes it easier to create clusters and ensure they are configured correctly, even automating vSAN storage configuration. However, you can still perform every step manually, including vSAN configuration, if you prefer.

Create a VMware cluster

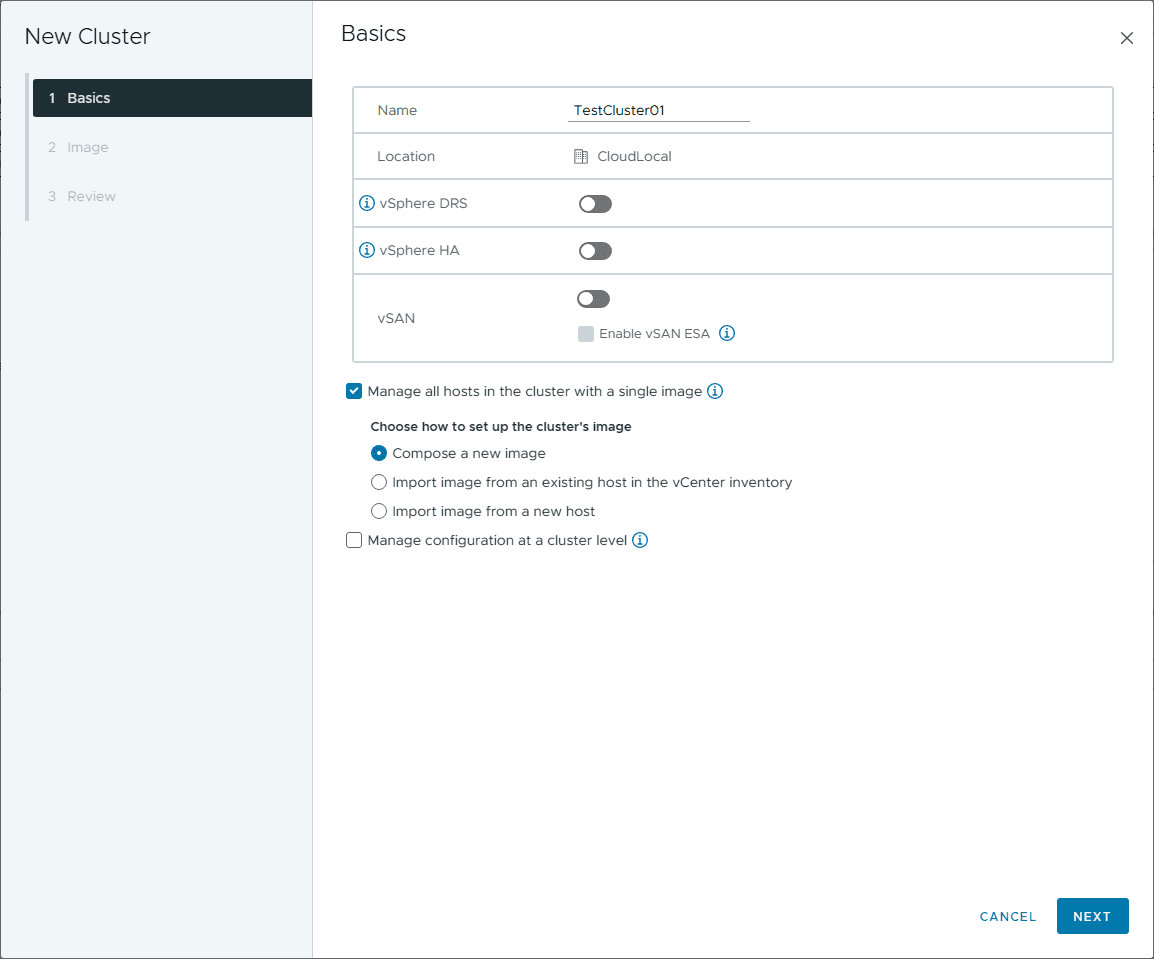

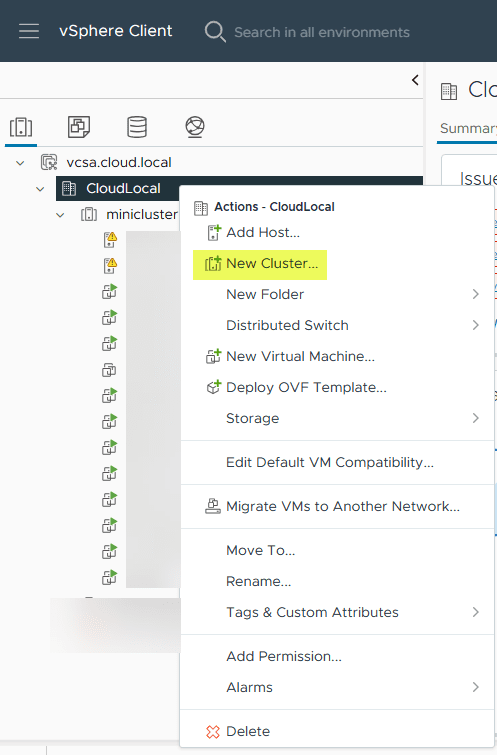

Let’s take a quick look at creating a new cluster in VMware vSphere using the New Cluster wizard in the vSphere Client. First, we right-click the datacenter and click New Cluster in the context menu.

This launches the New Cluster wizard. Here you give the cluster a name and decide whether to enable vSphere DRS, vSphere HA (with or without admission control), and vSAN.

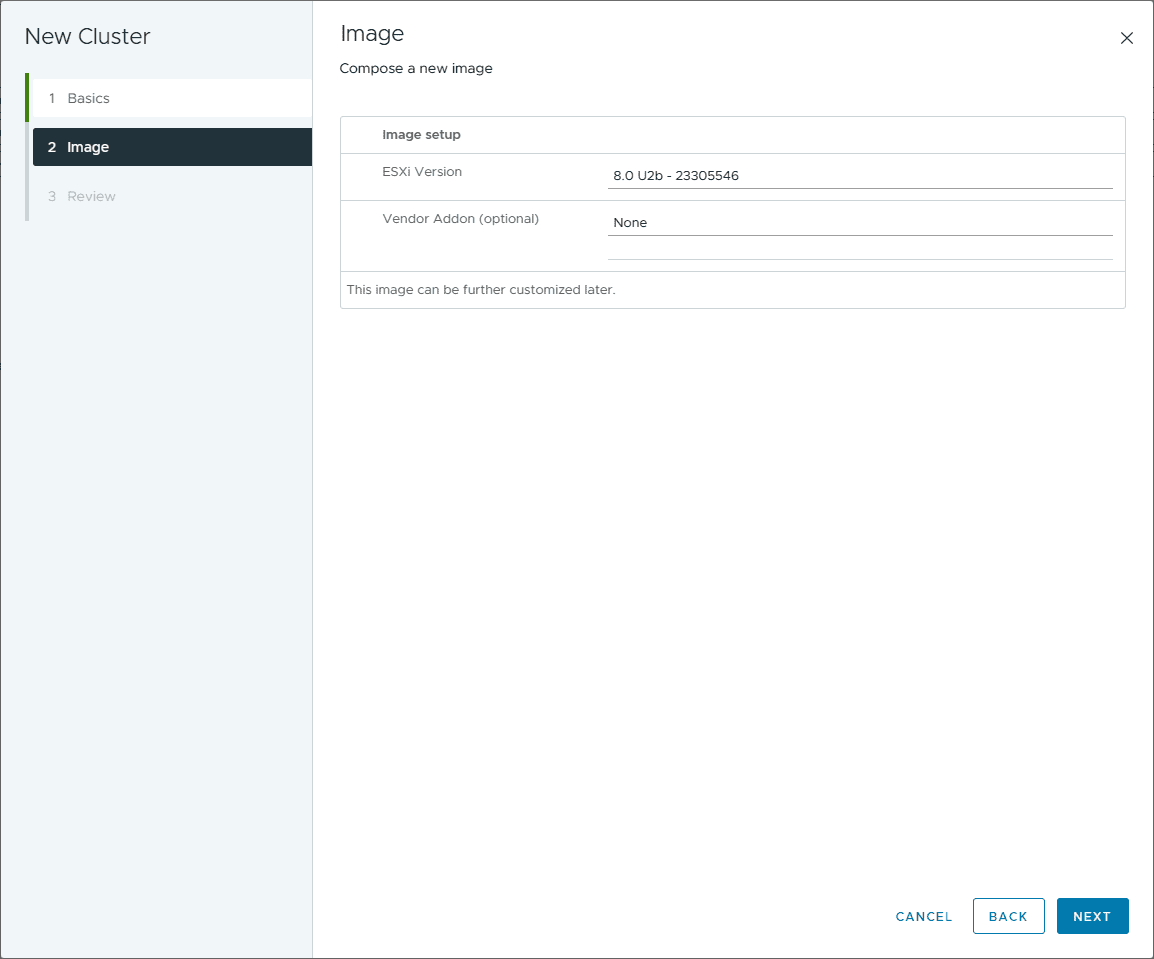

Once you have made your choices in the basic configuration, you configure the image that vSphere Lifecycle Manager will use to update and upgrade the cluster hosts. You can also use a reference host to define the cluster image.

Finally, we review the configuration and click Finish.

Once the cluster is created, you simply add ESXi hosts to it. You can add a new host or move a standalone host that you have already added to your VMware datacenter. The hosts can be physical servers, or nested ESXi hosts if you are working in a lab environment. Keep in mind that a host must be in maintenance mode before you can remove it from a cluster.

There is a workaround, though, if you don’t want to migrate your virtual machines off the host first: disconnect the ESXi host in vCenter Server and then add it to the cluster you want to use.
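To make the two paths for moving a clustered host concrete, here is a minimal Python sketch that encodes them as a small decision function. The `Host` class, `ConnState` enum, and `plan_cluster_move` function are hypothetical illustrations, not part of any VMware API or SDK; the sketch only models the rules stated in this section (maintenance mode, or the disconnect workaround).

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class ConnState(Enum):
    """Hypothetical host connection states, as shown in vCenter."""
    CONNECTED = "connected"
    DISCONNECTED = "disconnected"


@dataclass
class Host:
    """Hypothetical, simplified view of an ESXi host that is in a cluster."""
    name: str
    state: ConnState
    in_maintenance: bool
    running_vms: int


def plan_cluster_move(host: Host) -> List[str]:
    """Return the steps (as plain strings) to move a clustered host elsewhere.

    A connected host must be in maintenance mode to leave its cluster;
    disconnecting it first is the workaround when you don't want to
    migrate its running VMs off.
    """
    if host.state is ConnState.DISCONNECTED or host.in_maintenance:
        # vCenter already allows the host to be moved in this state.
        return ["move host to target cluster"]
    if host.running_vms == 0:
        # Nothing to evacuate, so entering maintenance mode is quick.
        return ["enter maintenance mode", "move host to target cluster"]
    # VMs are still running: use the disconnect workaround instead.
    return ["disconnect host in vCenter Server", "move host to target cluster"]
```

For example, `plan_cluster_move(Host("ms-01", ConnState.CONNECTED, False, 5))` returns the disconnect path, because that host still has running VMs and is not in maintenance mode.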

Dissimilar VMware cluster

Since I have a hodgepodge of mini PCs lying around from the many review units sent to me, I have a lot of dissimilar hardware, including mini PCs with Intel processors and mini PCs with AMD processors.

So, to get a quick and dirty VMware cluster configured, I went ahead and placed two mini PC hosts in the same cluster, even with the dissimilar processors.

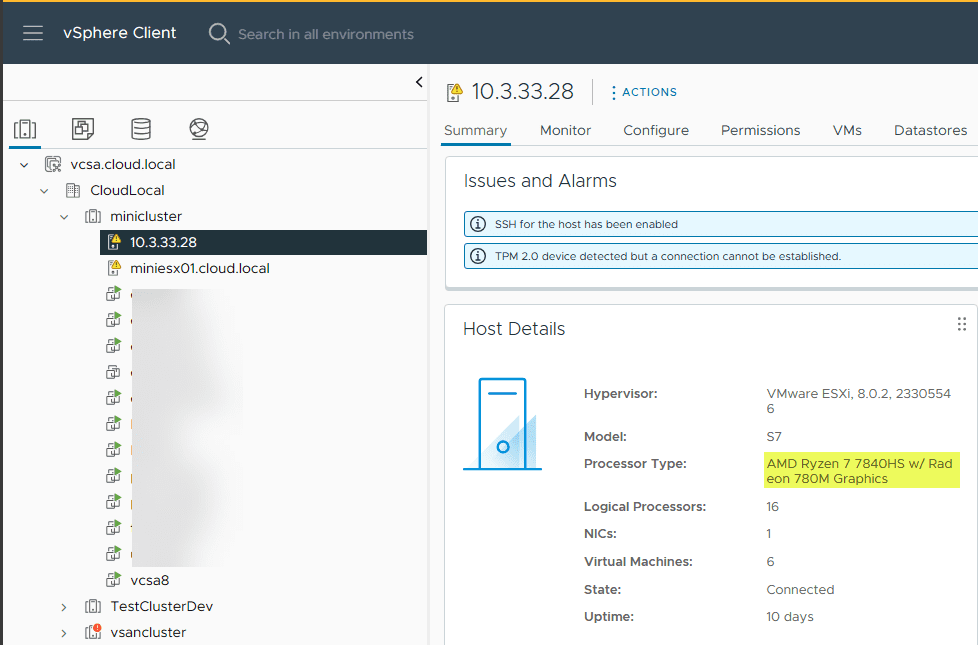

Below, you can see the mini PC with the AMD Ryzen 7 7840HS processor in the host summary, along with the host’s resources.

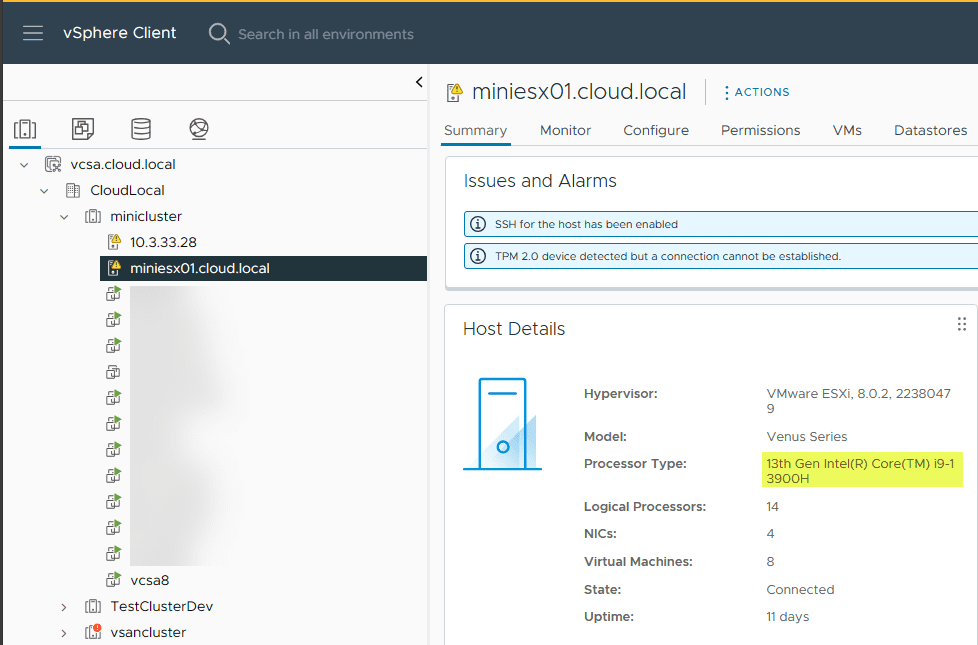

Next, you can see the Minisforum MS-01, which is configured with the 13th Gen Intel Core i9-13900H.

Challenges with dissimilar processor hosts in the cluster

Besides not being a best practice, mixing processor vendors in a cluster creates quite a few headaches when it comes to taking advantage of many of the cluster benefits.

Note the following:

- Because the processors come from different vendors, you can’t live-migrate (vMotion) running VMs between the hosts, even if you have a vMotion network provisioned and configured. Cold migration of powered-off VMs still works.

- You can turn on EVC (Enhanced vMotion Compatibility), but it still doesn’t bridge the gap between Intel and AMD. EVC is meant to mask differences between processor generations from the same vendor (AMD or Intel).

- This all means you can’t gracefully move running virtual machines between your cluster hosts, which is one of the huge benefits of having a cluster in the first place.

- DRS can’t do what it normally does, which is proactively migrating VMs away from hosts experiencing resource contention. It can still place new VMs on less-loaded hosts, though, since initial placement happens when the VM is powered on.
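Why EVC can’t help here can be shown with a minimal Python sketch: EVC picks a common CPU feature baseline that every host in the cluster can expose, and those baselines only exist within one vendor’s family. The `EVC_BASELINES` table below is an assumption for illustration; the names are modeled on vSphere EVC mode keys, but it is an abbreviated subset, not VMware’s authoritative list. `HostCpu` and `common_evc_baseline` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional

# Illustrative, abbreviated EVC baselines per vendor, ordered oldest -> newest.
# Assumption for this sketch only; check VMware's EVC documentation for real modes.
EVC_BASELINES = {
    "intel": ["intel-haswell", "intel-broadwell", "intel-skylake",
              "intel-cascadelake", "intel-icelake"],
    "amd": ["amd-zen", "amd-zen2", "amd-zen3", "amd-zen4"],
}


@dataclass(frozen=True)
class HostCpu:
    """Hypothetical host record: CPU vendor and the newest baseline it supports."""
    name: str
    vendor: str        # "intel" or "amd"
    max_baseline: str  # must appear in EVC_BASELINES[vendor]


def common_evc_baseline(hosts: List[HostCpu]) -> Optional[str]:
    """Return the newest baseline every host can expose, or None if none exists.

    EVC masks CPU features down to a shared baseline within one vendor's
    family, so an empty or mixed Intel/AMD host list has no valid baseline.
    """
    vendors = {h.vendor for h in hosts}
    if len(vendors) != 1:
        return None  # no hosts, or Intel and AMD mixed: EVC cannot bridge them
    order = EVC_BASELINES[vendors.pop()]
    # The cluster is limited by the oldest "newest supported baseline" among hosts.
    return order[min(order.index(h.max_baseline) for h in hosts)]
```

With two Intel hosts, the function settles on the older host’s baseline; as soon as an AMD host joins the list, it returns `None`, which mirrors the Intel/AMD limitation described above.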

Will I keep it this way?

Probably not. This was, again, a quick and dirty experiment to see how much power I could save, whether I could collapse what I needed down to two mini PCs, and what the heat would be like once I turned off the three Supermicro servers.

All in all, this has been a great experiment in collapsing the home lab down to mini PCs, and it has proven to me that I can live with the more limited resources of mini PCs in the lab, since I have containerized many of my self-hosted services.

Next steps

What are the next steps? Dig in and try out a VMware cluster. You have likely had your hands on one in production, but running a VMware cluster at home opens up a lot of possibilities. Dissimilar clusters can be configured, but the mismatched processor architectures will prevent you from live-migrating virtual machines between hosts. That, in turn, limits DRS load balancing and graceful evacuations for maintenance, since these rely on vMotion under the hood. vSphere HA restarts VMs on another host after a failure rather than live-migrating them, so it is less affected.
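The difference between initial placement and load balancing in a mixed cluster can be sketched in a few lines of Python. This is a toy model under stated assumptions, not DRS’s actual algorithm: `ClusterHost`, `initial_placement`, and `balance_target` are hypothetical names, the load figure is an invented 0.0 to 1.0 value, and vMotion compatibility is reduced to "same CPU vendor".

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ClusterHost:
    """Hypothetical host with a CPU vendor and a 0.0-1.0 load figure."""
    name: str
    vendor: str
    cpu_load: float


def initial_placement(hosts: List[ClusterHost]) -> ClusterHost:
    """Power-on placement: no live migration is involved, so any host qualifies."""
    return min(hosts, key=lambda h: h.cpu_load)


def balance_target(src: ClusterHost, hosts: List[ClusterHost]) -> Optional[ClusterHost]:
    """Live-migration target for a running VM on `src`, or None.

    Compatibility is simplified to "same CPU vendor"; real DRS also weighs
    EVC mode, memory, affinity rules, and more.
    """
    candidates = [h for h in hosts if h is not src and h.vendor == src.vendor]
    if not candidates:
        return None
    best = min(candidates, key=lambda h: h.cpu_load)
    # Only migrate if the target is actually less loaded than the source.
    return best if best.cpu_load < src.cpu_load else None
```

In a two-host Intel/AMD cluster, `initial_placement` can still pick the idle host for a new VM, but `balance_target` finds no candidate for a busy host, because the only other host has a different CPU vendor.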

Wrapping up

I plan to keep documenting my journey of clustering at home with mini PCs and any hurdles I run into along the way. With the great hardware now available in mini PCs and the advantages of VMware cluster services, you can easily build a mini PC cluster at home to provide resiliency for your services.

We use a Dell 5820 as our primary PC, which has KernSafe iSCSI Server installed. VMware Workstation is also installed, and we run vSphere VCSA 8 as a virtual machine. Our four ESXi 8 hosts are installed nested on an ESXi 8 physical host running on a Dell 7820, and these nested ESXi 8 hosts even start up automatically after the physical host starts. Together, the four nested ESXi 8 hosts form a four-node cluster with HA and DRS enabled.

We have created 25 different virtual machines, a mix of Windows 11, Windows Server 2022, Ubuntu, and other Linux distributions, and all are installed on shared iSCSI storage on our primary PC.

Both Dells use NVMe M.2 SSDs exclusively, so performance is very good.

All works extremely well and electricity usage is minimal.

Of course, we don’t leave the PC and the ESXi server running 24 hours a day in our home lab.

When we are done testing or completing other tasks, we shut down the ESXi server, and we shut down the PC at the end of the day.

P.S. Given all the horror stories we have come across about mini PCs, we are happy that we invested a small amount in two Dell workstations that fully serve our home lab needs with reliability and great performance.

Lars,

I really appreciate your insights into your lab environment. Great stuff! I also started my home lab with a Dell Precision workstation, so things are coming back full circle for me as well. Thank you for sharing the models you are using. Nested virtualization is, I think, one of the most underutilized virtualization technologies, especially for labs.

Brandon