Forget Task Scheduler PowerShell Scripts: Use CI/CD Instead

If you are a Windows admin or VI admin, or you otherwise use Windows Task Scheduler to run PowerShell scripts in your environment, you have probably noticed a few headaches with this process. Yes, it is easy enough, and it is a great way to start learning how scripts can be used in an environment. However, managing scheduled tasks configured across many servers can be a pain. In this post, we will show how you can forget Task Scheduler and use CI/CD instead to run your PowerShell scripts, with many advantages.

The benefits of Windows Task Scheduler

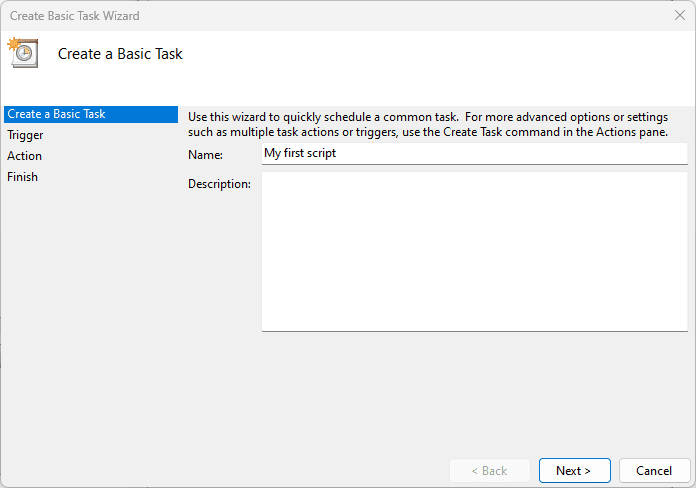

Windows Task Scheduler is built into both Windows client operating systems and Windows Server, it is easy to use, and it is a fairly intuitive way to automate PowerShell scripts and other types of automation, such as batch files. All you have to do is open Task Scheduler, point it to your script, and create the task on your local computer.

Creating a new scheduled task in Task Scheduler is generally how Windows admins get started with automation and with running PowerShell script files in the environment.

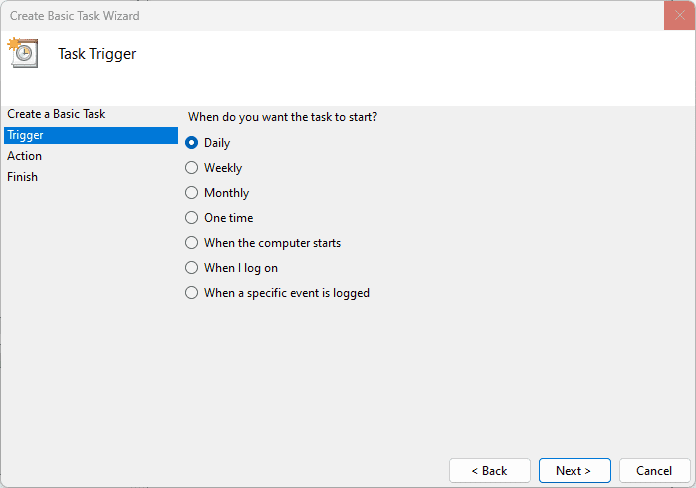

Note the scheduling options in the Triggers tab:

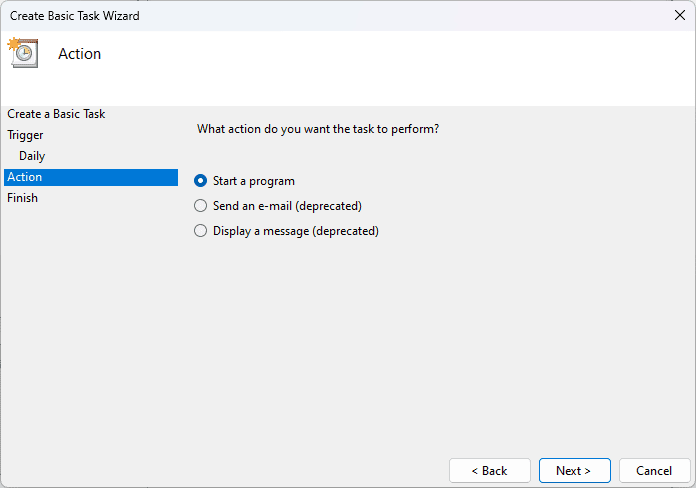

You can also choose what action you want the task to run:

The Drawbacks of Windows Task Scheduler

Windows Task Scheduler lets you create scheduled tasks that run PowerShell scripts, but it has its challenges. Despite being the built-in tool for Windows automation, it is limited in terms of advanced scheduling, error handling, and integration with modern development practices like version control using Git.

When you create a scheduled task, you have to remember where it was created, and you may have a large number of servers with these types of tasks. Each admin who creates a scheduled task may put the scripts in a different directory and may even hard-code credentials, which is extremely bad.

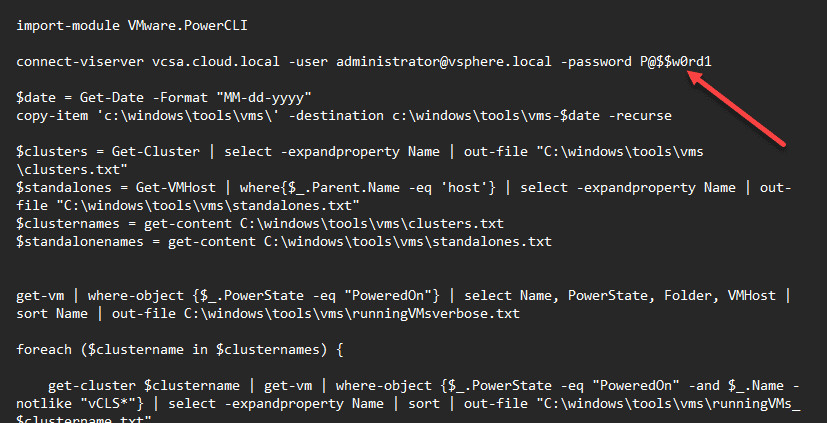

Below, we see the Connect-VIServer cmdlet with the password argument in clear text.

I know I have found scheduled tasks with domain admin credentials and vSphere SSO administrator creds (I have written a few of these in the home lab :-/) in clear text. Oh boy!

But it isn’t surprising. This is the easy way to do it and it allows getting something out there that works and maybe automates a tedious process. However, we have to do things better than this when thinking about production security and the overall longevity and maintainability of automated processes.

Other challenges with Scheduled tasks and PowerShell

Managing scheduled tasks, especially across multiple Windows servers, becomes cumbersome with Task Scheduler. Creating a scheduled task, configuring advanced settings through the General tab, and ensuring the task runs with the correct permissions can be error-prone and very tedious.

Additionally, the task scheduler’s interface and workflow were not built with modern DevOps in mind. It doesn’t lend itself well to tasks like linting PowerShell scripts or integrating with Git for version control. These tasks are very important in modern software development and DevOps workflows.

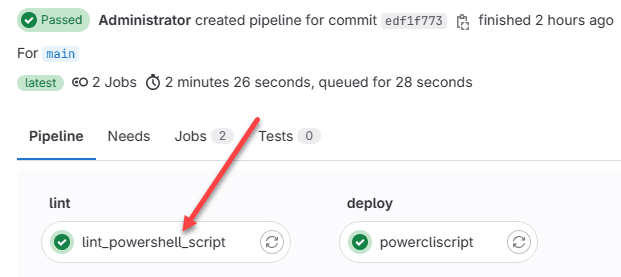

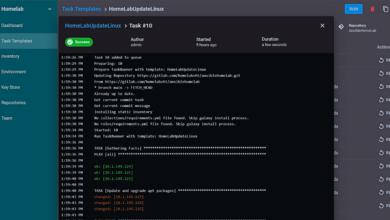

Below, we see in the GitLab pipeline that the lint script was executed as its own stage, and then the main script was run.

Limitations in Script Execution and Scheduling

When it comes to executing PowerShell scripts, the Task Scheduler offers basic functionality such as the ability to run a PowerShell script at a specific time or in response to an event. However, this often requires manually setting up each scheduled task, including specifying the file path, adding arguments, and configuring execution policy settings in a way that is less intuitive and harder to manage, especially for repetitive tasks or scripts that need to run on multiple machines.

Task Scheduler also has its own cmdlets for configuration, such as Register-ScheduledTask. However, these still have many of the limitations of the GUI. Note the following parameters:

    Register-ScheduledTask
        [-Force]
        [[-Password] <String>]
        [[-User] <String>]
        [-TaskName] <String>
        [[-TaskPath] <String>]
        [-Action] <CimInstance[]>
        [[-Description] <String>]
        [[-Settings] <CimInstance>]
        [[-Trigger] <CimInstance[]>]
        [[-RunLevel] <RunLevelEnum>]
        [-CimSession <CimSession[]>]
        [-ThrottleLimit <Int32>]
        [-AsJob]
        [<CommonParameters>]
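As a rough sketch of what using these cmdlets looks like, the snippet below wraps the registration in a function. The function names, task name, script path, execution policy, and 6:00 AM daily trigger are all hypothetical placeholders, and the ScheduledTasks cmdlets are only available on Windows. Keep in mind that something like this still has to be run on every server that hosts the task.

```powershell
# Hypothetical helper: builds the argument string handed to powershell.exe.
function Get-TaskArgument {
    param([Parameter(Mandatory)][string]$ScriptPath)
    "-NoProfile -ExecutionPolicy RemoteSigned -File `"$ScriptPath`""
}

# Hypothetical helper: registers a daily task on the local machine (Windows only).
function Register-DailyScriptTask {
    param(
        [Parameter(Mandatory)][string]$TaskName,
        [Parameter(Mandatory)][string]$ScriptPath,
        [datetime]$At = '06:00'   # assumed schedule, adjust as needed
    )
    $action  = New-ScheduledTaskAction -Execute 'powershell.exe' -Argument (Get-TaskArgument -ScriptPath $ScriptPath)
    $trigger = New-ScheduledTaskTrigger -Daily -At $At
    Register-ScheduledTask -TaskName $TaskName -Action $action -Trigger $trigger -Description 'Runs a PowerShell script once a day'
}

# Example call (placeholder values, run from an elevated session on each server):
# Register-DailyScriptTask -TaskName 'PoweredOnVMs' -ScriptPath 'C:\Scripts\poweredonvms.ps1'
```

Even scripted this way, the task definition lives on the individual server rather than in a shared repository.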

Use CI/CD instead for PowerShell script execution – 5 reasons

If you have been involved with modern DevOps processes, you will no doubt be familiar with CI/CD pipelines. If not, that is OK too. A CI/CD pipeline is a mechanism built into solutions like Jenkins, GitLab, Bitbucket, and others that runs scripts and code as they are changed by the developer. All of this is driven from Git source control.

However, CI/CD pipelines are not just for complex development projects. They can be used as a powerful, much more secure, and flexible alternative to the Windows Task Scheduler. Using a CI/CD pipeline for running scripts provides advanced features that improve scheduling, execution, and management of scripts.

1. Enhanced Version Control and Collaboration

One of the primary benefits of using CI/CD for PowerShell scripting is the integration with version control systems like Git. This integration allows for better collaboration among team members, as scripts can be versioned, reviewed, and updated in a centralized repository. The cool thing is there are no limitations on scripts. You can use the full PowerShell scripting language as you would imagine, including scripts that contain functions, parameters, and other constructs.

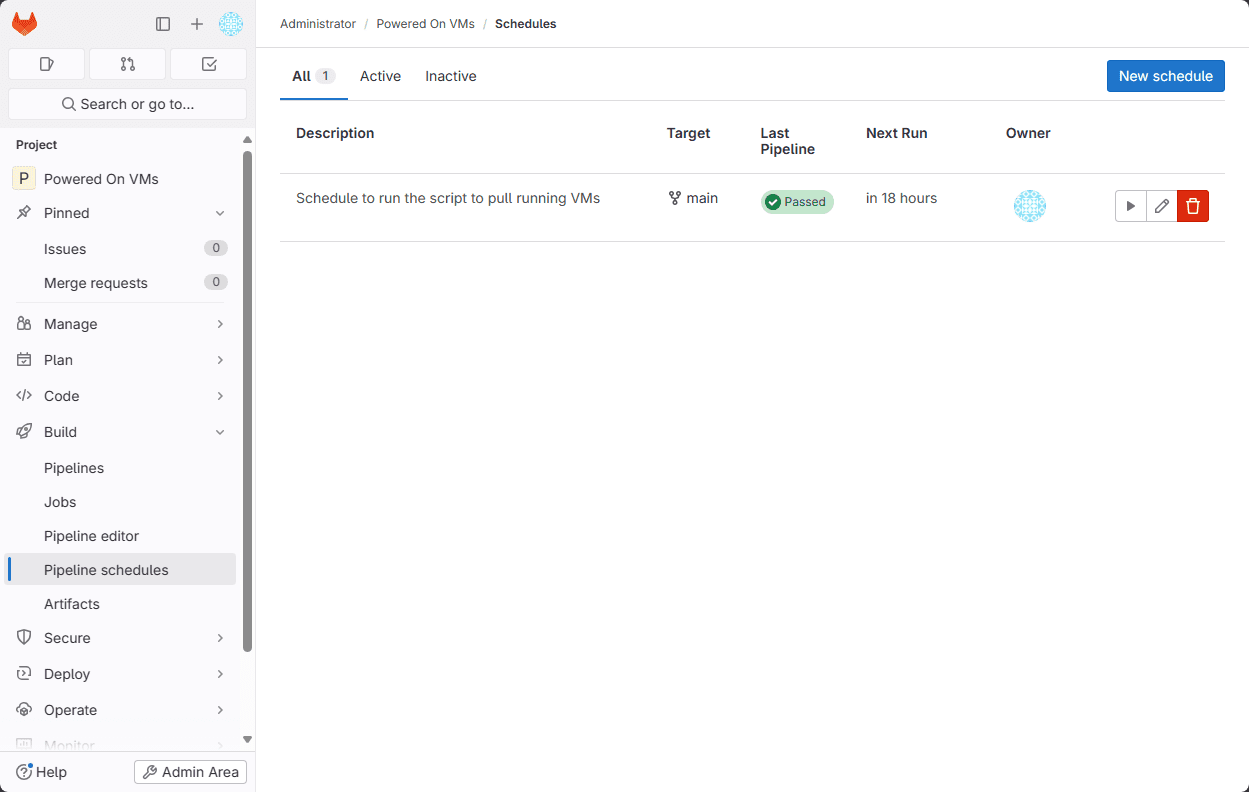

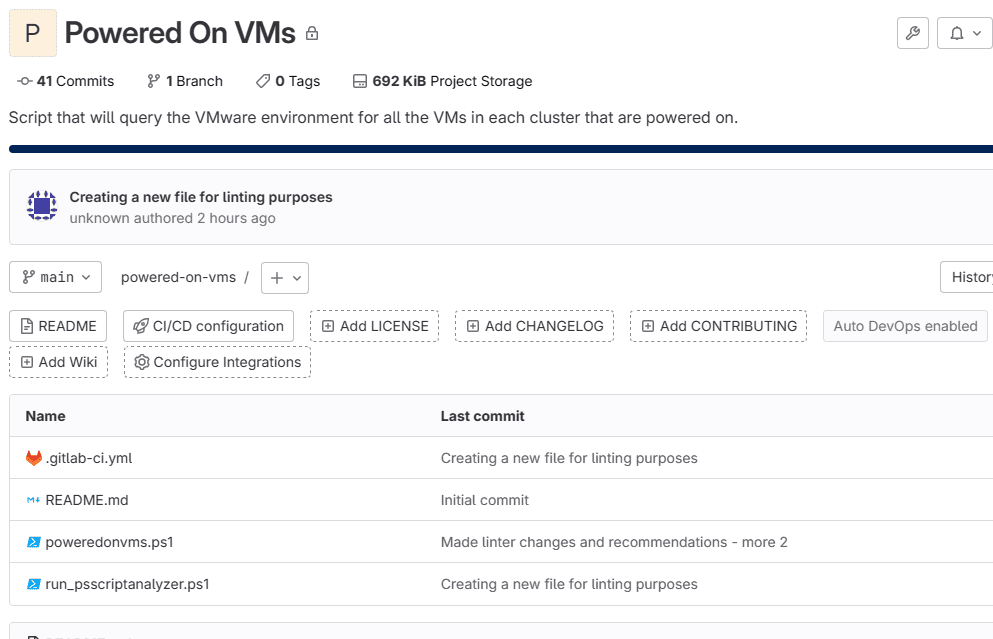

Below, you see a screen grab of one of my projects in the home lab that I converted recently from a scheduled task. It queries all the VMs that are powered on in the environment for the various VMware vSphere clusters in my environment.

Git keeps scripts consistent and records who made each change, which helps with script management and versioning, along with all the other benefits that Git version control provides.

2. Improved Scheduling and Execution

CI/CD pipelines offer advanced scheduling options that go beyond the capabilities of the basic task features in Task Scheduler. With CI/CD, it’s possible to schedule PowerShell script execution in response to specific triggers. You can also manage tasks with more control over when and how scripts are executed, and make sure scripts run under the appropriate context and with the necessary privileges.

You can also have multiple schedules for the same pipeline.
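One way a script can take advantage of this: GitLab exposes how a pipeline was started through its predefined CI_PIPELINE_SOURCE variable, which is set to schedule for scheduled pipelines. The sketch below branches on that value. The function names and the 'local' fallback are assumptions made for the example, not part of GitLab.

```powershell
# Returns how the pipeline was started; 'local' is a made-up fallback
# for runs outside of GitLab where the variable is not set.
function Get-PipelineSource {
    if ([string]::IsNullOrEmpty($env:CI_PIPELINE_SOURCE)) { 'local' } else { $env:CI_PIPELINE_SOURCE }
}

# True only when GitLab started the pipeline from a schedule.
function Test-ScheduledRun {
    (Get-PipelineSource) -eq 'schedule'
}

if (Test-ScheduledRun) {
    Write-Output 'Scheduled pipeline: running the daily report.'
} else {
    Write-Output "Started by '$(Get-PipelineSource)': skipping the daily report."
}
```

This keeps a push to the repository from kicking off the same work that the schedule is meant to run.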

3. Testing and linting

CI/CD platforms also allow for the automation of PowerShell scripts in a way that is both scalable and efficient. Administrators can automate the deployment of scripts across multiple servers, manage dependencies, and incorporate testing and linting processes to ensure script quality and reliability. These are things that are outside the scope of Windows Task Scheduler.

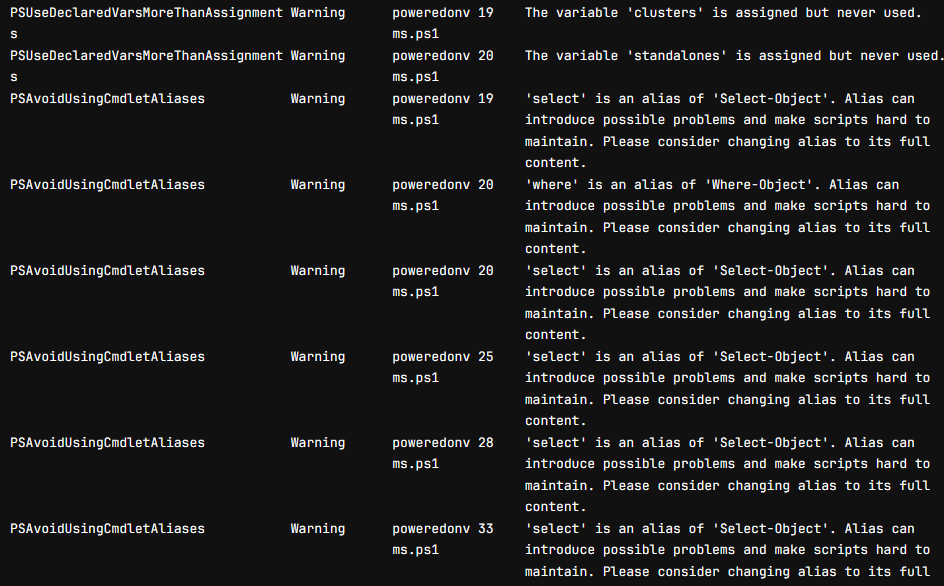

For example, I am using a PowerShell script to lint my code each time the pipeline runs. Linting gives visibility into errors and warnings, as well as things like extra spaces and the use of aliases instead of full command names. In other words, it helps make sure your code is aligned with best practices.
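As a small illustration of the alias finding, the two pipelines below return the same result, but PSScriptAnalyzer's PSAvoidUsingCmdletAliases rule flags the first form. The sample objects are made up for the example and stand in for real VM objects.

```powershell
# Made-up sample data standing in for real VM objects
$vms = @(
    [pscustomobject]@{ Name = 'web01'; PowerState = 'PoweredOn' }
    [pscustomobject]@{ Name = 'db01';  PowerState = 'PoweredOff' }
    [pscustomobject]@{ Name = 'app01'; PowerState = 'PoweredOn' }
)

# Alias form: works, but the linter warns about '?' and 'select'
$withAliases = $vms | ? { $_.PowerState -eq 'PoweredOn' } | select -ExpandProperty Name

# Full cmdlet names: same result, no alias warnings
$withCmdlets = $vms | Where-Object { $_.PowerState -eq 'PoweredOn' } | Select-Object -ExpandProperty Name
```

Spelling out the cmdlet names costs a few keystrokes but makes the script easier to read for whoever reviews it in the repository.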

4. Much better credentials management

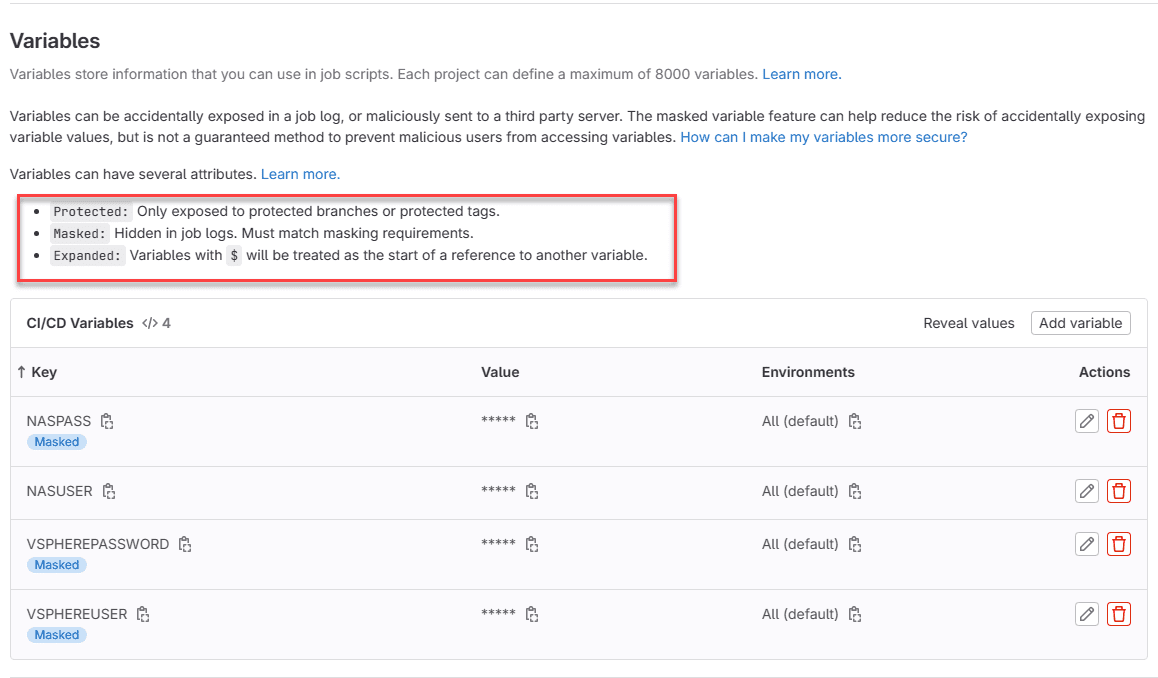

A huge advantage of using a CI/CD tool like GitLab or Jenkins for task scheduling and scripting is the much better credentials management. These modern development lifecycle tools have built-in functionality for securely handling credentials and keeping them out of your code.

You can create CI/CD variables that house your secrets and then call these in your scripts as needed. Unlike Windows system environment variables, which are stored in plain text, CI/CD variables can be hidden, and you have many more options for handling sensitive values when the script executes.

In GitLab, you will see the following options:

- Protected – only exposed to protected branches or protected tags

- Masked – hidden in job logs

- Expanded – determines whether GitLab treats $ in the value as the start of a reference to another variable

You can see these in the details in the screenshot below:

Another nice feature of CI/CD tools is that credentials can be hidden but still used, depending on the permission level of the user. Let’s look at GitLab as an example. If the member is an Owner or Maintainer, they can see the variables.

However, a user with the Developer role or lower cannot see anything under the GitLab settings, so they cannot see the variables. It is a great way to hide restricted information while still giving users and developers what they need to do their work.
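To make this concrete, here is a minimal sketch of a script consuming CI/CD variables instead of hard-coded credentials. It assumes the variables are exposed to the job as environment variables, as in the example later in this post. The helper function names are hypothetical.

```powershell
# Hypothetical helper: fail fast if a required CI/CD variable is missing.
function Get-RequiredEnv {
    param([Parameter(Mandatory)][string]$Name)
    $value = [Environment]::GetEnvironmentVariable($Name)
    if ([string]::IsNullOrEmpty($value)) {
        throw "Required CI/CD variable '$Name' is not set."
    }
    $value
}

# Hypothetical helper: build a PSCredential from two CI/CD variables.
function Get-CredentialFromEnv {
    param(
        [Parameter(Mandatory)][string]$UserVariable,
        [Parameter(Mandatory)][string]$PasswordVariable
    )
    $user     = Get-RequiredEnv -Name $UserVariable
    $password = ConvertTo-SecureString (Get-RequiredEnv -Name $PasswordVariable) -AsPlainText -Force
    [pscredential]::new($user, $password)
}

# Example use with PowerCLI (variable names assumed to exist in the project):
# $cred = Get-CredentialFromEnv -UserVariable 'VSPHEREUSER' -PasswordVariable 'VSPHEREPASSWORD'
# Connect-VIServer vcsa.cloud.local -Credential $cred
```

Nothing sensitive is stored in the repository this way, and a missing variable stops the job with a clear message. One caveat: PSScriptAnalyzer flags ConvertTo-SecureString with -AsPlainText by default, so a lint stage may need that rule excluded or suppressed for a helper like this.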

5. Centralized management

The centralization of PowerShell scripts in a CI/CD pipeline facilitates more efficient management and deployment. Unlike the Task Scheduler, where scripts may be distributed across different servers and managed individually, CI/CD platforms enable a unified approach to script management.

This centralization not only simplifies the process of updating and maintaining scripts but also enhances security and control, providing teams with the ability to quickly adapt scripts in response to changing requirements or environments.

Actual Example

Let’s look at a real example with code. This project is a script that runs in my lab environment each day, queries the VMware vSphere environment for running VMs and creates simple text files with the date in the name to show which VMs are powered on.

Why? This came from a real-world use case in my lab. Have you ever had a host go down and wonder, “which VMs did I actually have powered on?” I know I have many times. So, I created this script initially as a scheduled task to run and help capture which VMs are running.

One of the cool things about the GitLab pipeline file below is that it pulls the vmware/powerclicore image, which already contains the pwsh command, the PowerShell Core equivalent of powershell.exe. This means you don’t have to worry about installing PowerShell Core on the fly during the pipeline.

While this example is specific to a certain script, you can use the below as a template to run any PowerShell script you want using a GitLab pipeline. Just replace the script name, etc.

.gitlab-ci.yml

    image:
      name: vmware/powerclicore

    before_script:
      - apt-get update
      - apt-get install -y smbclient

    stages:
      - lint
      - deploy

    lint_powershell_script:
      stage: lint
      script:
        - pwsh -File run_psscriptanalyzer.ps1
      allow_failure: false
      only:
        - main

    powercliscript:
      stage: deploy
      script:
        - pwsh -File poweredonvms.ps1
      only:
        - main

run_psscriptanalyzer.ps1

    # Install the linter inside the pipeline container
    Install-Module PSScriptAnalyzer -Force -Scope AllUsers -AllowClobber -SkipPublisherCheck

    # Analyze the script that the deploy stage will run
    $analysisResults = Invoke-ScriptAnalyzer -Path poweredonvms.ps1 -Recurse

    if ($analysisResults) {
        # Print all findings to the job log
        $analysisResults | Format-Table | Out-String -Width 120 | Write-Output

        # Fail the lint stage on any error or warning
        $errorsAndWarnings = $analysisResults | Where-Object { $_.Severity -eq 'Error' -or $_.Severity -eq 'Warning' }
        if ($errorsAndWarnings) {
            exit 1
        }
    }

poweredonvms.ps1

In the script below, VSPHEREUSER, VSPHEREPASSWORD, NASUSER, and NASPASS are all GitLab CI/CD variables that the script reads from the environment. The PowerCLI modules needed to run the commands come from the container image.

    # Credentials come from GitLab CI/CD variables exposed as environment variables
    $vsphereuser = $env:VSPHEREUSER
    $vspherepassword = $env:VSPHEREPASSWORD
    $nasuser = $env:NASUSER
    $naspass = $env:NASPASS
    $date = Get-Date -Format "MM-dd-yyyy"

    ##Setting CEIP options
    Set-PowerCLIConfiguration -InvalidCertificateAction Ignore -ParticipateInCeip $false -Confirm:$false

    ##Connect to vCenter
    Connect-VIServer vcsa.cloud.local -User $vsphereuser -Password $vspherepassword

    ##Getting clusters and hosts
    Get-Cluster | Select-Object -ExpandProperty Name | Out-File "clusters.txt"
    Get-VMHost | Where-Object { $_.Parent.Name -eq 'host' } | Select-Object -ExpandProperty Name | Out-File "standalones.txt"
    $clusternames = Get-Content ./clusters.txt
    $standalonenames = Get-Content ./standalones.txt

    ##Getting all running VMs in the VMware estate and copying to a file
    ##(sorted by Name explicitly so the order is predictable)
    Get-VM | Where-Object { $_.PowerState -eq "PoweredOn" } | Select-Object Name, PowerState, Folder, VMHost | Sort-Object Name | Out-File "runningVMsverbose.txt"
    smbclient //10.1.149.4/vmreports -U $nasuser --password $naspass -c "put ./runningVMsverbose.txt runningVMsverbose.txt"

    ##Looping through each cluster and creating files for each cluster with running VMs
    foreach ($clustername in $clusternames) {
        Get-Cluster $clustername | Get-VM | Where-Object { $_.PowerState -eq "PoweredOn" -and $_.Name -notlike "vCLS*" } | Select-Object -ExpandProperty Name | Sort-Object | Out-File "$clustername-$date.txt"
        smbclient //10.1.149.4/vmreports -U $nasuser --password $naspass -c "put ./$clustername-$date.txt $clustername-$date.txt"
    }

    ##Looping through each standalone host and outputting running VMs
    foreach ($standalonename in $standalonenames) {
        Get-VM | Where-Object { $_.PowerState -eq "PoweredOn" -and $_.VMHost -like $standalonename -and $_.Name -notlike "vCLS*" } | Select-Object -ExpandProperty Name | Sort-Object | Out-File "$standalonename-$date.txt"
        smbclient //10.1.149.4/vmreports -U $nasuser --password $naspass -c "put ./$standalonename-$date.txt $standalonename-$date.txt"
    }

    ##Disconnect from vCenter Server
    Disconnect-VIServer -Confirm:$false

Wrapping up CI/CD for PowerShell script

While Windows Task Scheduler PowerShell scripts have historically formed the backbone of Windows automation using PowerShell, the limitations of this approach in the context of modern IT practices are clear. Transitioning to CI/CD for PowerShell scripting offers a range of benefits, from improved collaboration and version control to advanced scheduling and centralized management. For organizations looking to enhance their automation strategies, embracing CI/CD represents a forward-looking move that aligns with the demands of contemporary software development and deployment.

Good explainer, and I am keen to try this to replace some scheduled tasks. The one part I didn’t catch was how the cloud based CICD pipeline manages to trigger something to execute on a local server. Does the local server need to have a webhook, or some sort of connection to the CICD pipeline so it is available to run script? Or does this assume that the pipeline executes on a local (eg jenkins) instance and not a cloud hosted one?

Adam,

Thanks so much for the comment and great question. I didn’t dive as deep into the details on this post. In my case, I have a local on-premises self-hosted instance of GitLab running. However, you could still do this with a cloud instance of GitLab or another tool and use on-premises “runners”. I know GitLab does this, and services like Bitbucket also have on-premises runners. The runners are what actually do the work, and the GitLab CI/CD just triggers these to perform the tasks. So you can really have the best of both worlds if you want to cloud host your instance of GitLab, Bitbucket, etc., and then just add on-premises runners. I like having everything local in the home lab too, so this is how I have this setup configured currently. But I also have a cloud instance (free instance) of GitLab. Let me know if this helps. Also, be sure to join the forums. If you have a question or need help with anything, we can dive much deeper there. https://www.virtualizationhowto.com/community. Thanks Adam.

Great answer – I’ll check out runners, but I also like the idea of self-hosted GitLab – I didn’t know that was possible. You have opened up a new rabbit hole for me to run down! I’ll check out a few more articles first, though. Thanks Brandon.

Awesome Adam, sounds great! It is a great learning experience I think and has been for me personally to self-host and learn the inner workings of it all (and definitely a rabbit hole, lol). Feel free to reach out if you hit any snags. Create a forum thread in the future if you need and we can work through the details: https://www.virtualizationhowto.com/community. Thanks again!