I was excited when I first saw the teaser of the Minisforum MS-01 mini PC. For a long while, I have been waiting for mini PCs to progress to the point where they were very similar to other server hardware that I have been using in my home lab environment. Let’s look at my take and review of the Minisforum MS-01 for home labbers running virtualization, virtual machines, containers, etc. As a note, I paid for the MS-01 out of my own pocket, so my thoughts are my own.

Table of contents

- State of Mini PCs up until now and my current home lab

- Minisforum MS-01 review unit hardware specifications

- Paradigm shift in home labs

- High-Speed Networking and Connectivity

- Excellent Cooling for heat

- Great storage options

- Discrete graphics possibilities

- Intel vPro for Advanced Management

- Unboxing the Minisforum MS-01

- The BIOS

- A note on Secure boot and installing from USB

- VMware hurdles

- Enabling and Disabling Efficient Cores

- 10 gig and 2.5 gig networking in ESXi

- Power consumption

- Video review

- Is this the best home server in early 2024?

- Wrapping up

State of Mini PCs up until now and my current home lab

For quite some time now, mini PCs have been stuck with 2.5 gig networking and very few storage options or expansion slots. Currently, I have Supermicro servers that are getting long in the tooth, configured with 10 gig network adapters, NVMe storage, vSAN, and other features. The Minisforum MS-01 has changed all of that, with a few caveats. I think it shows the promise of where home labs and mini PCs can be in the very near future, with tons of hardware acceleration, making it a great server or workstation PC.

Minisforum MS-01 review unit hardware specifications

| Specification | Detail |
|---|---|
| Processor | Intel Core i9-13900H |
| RAM Options | Up to 96GB (unofficially) |
| Storage Options | (3) M.2 NVMe slots, U.2 |
| Expandable Storage | Supports up to 24TB SSD |
| Network Ports | 4 Ports |
| USB Ports | 2 USB4 Ports |
| Cooling System | High-Speed Cooling Fan |
| Additional Slots | PCIe 4.0 x16, M.2 NVMe SSD |
| Technology | Intel vPro Compatibility |
| Networking Speed | (2) 10Gbps SFP+, (2) 2.5Gbps RJ45 |
| Display Support | 8K, Triple Displays |
| Use Case | Home lab server, workstation |

Paradigm shift in home labs

I, and I think many others, have been waiting for a mini PC like the MS-01. It checks off most of the boxes that have kept many of us from using mini PCs in the home lab. It is a compact mini PC that includes not one, but two 10 GbE adapters, and (2) 2.5 GbE adapters, along with (2) 40 gig USB4 ports.

In addition to that, you have (3) M.2 slots with the ability to run a U.2 drive in addition to (2) of the M.2 slots. Since it runs DDR5, you can max the memory out at 96 GB, which is much better than the 64 GB with DDR4.

The Intel Core i9-13900H has plenty of horsepower, although it is a hybrid processor, and we will get to the hurdles with that in just a bit. So, for virtualization purposes, this thing is spec’ed out the way we would all like to see, for the most part.

Also, it is great that Minisforum offers this in a barebones option without an operating system, as it lets you configure it with the RAM and storage you want without paying the factory premium for those options. Keep in mind that the barebones unit doesn’t come with a preinstalled drive, so you don’t get an OS license. Most mini PCs, by contrast, come with a low-end, no-name NVMe SSD with Windows 11 Pro included.

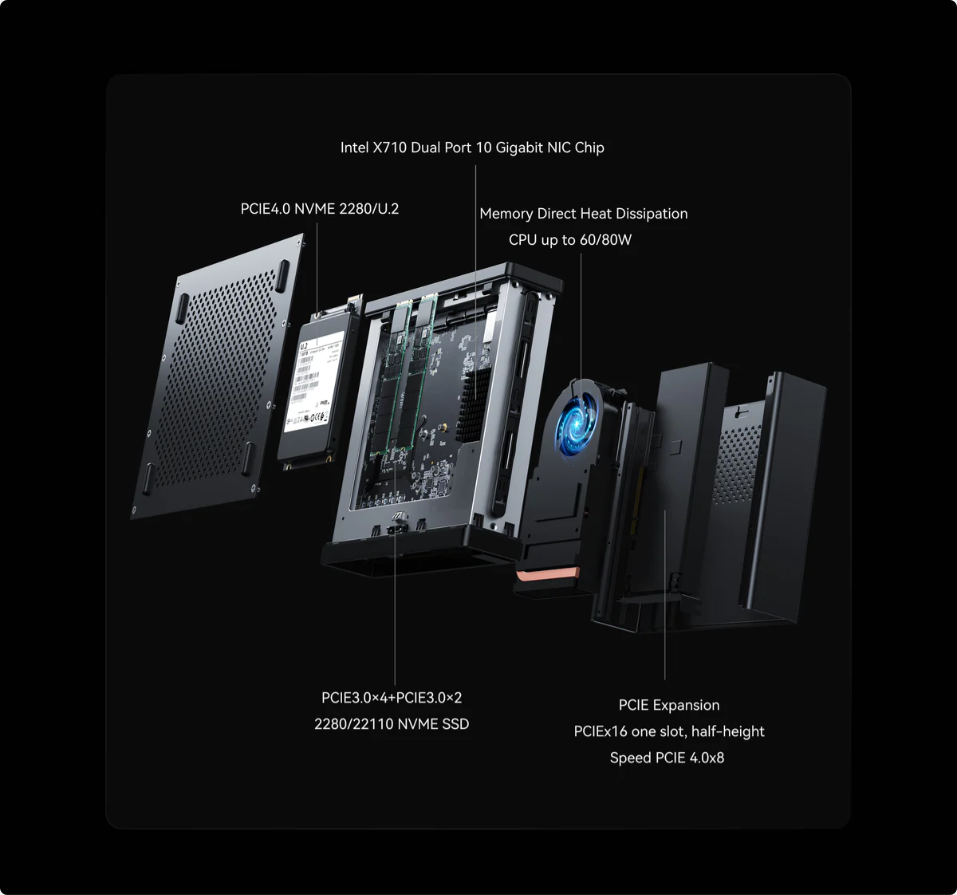

Below is an exploded view of the internals with the MS-01.

High-Speed Networking and Connectivity

With the MS-01, networking and connectivity are not lacking. Seeing a Mini PC come out with this kind of connectivity and networking is phenomenal, especially considering what we have been used to with mini PCs before.

You get multiple Ethernet network adapters, including high-speed connectivity with the (2) 10 gig SFP+ ports and multi-gig connectivity with the (2) 2.5 gig network devices. Keep in mind also that with the expansion slot, you have the capability to add things like 25 gig network cards if you want.

The multitude of USB ports, including the (2) USB 4.0 40 gig ports, provides high compatibility with peripherals and other devices. Not that it matters a lot running a server, but it also includes wireless and Bluetooth connectivity.

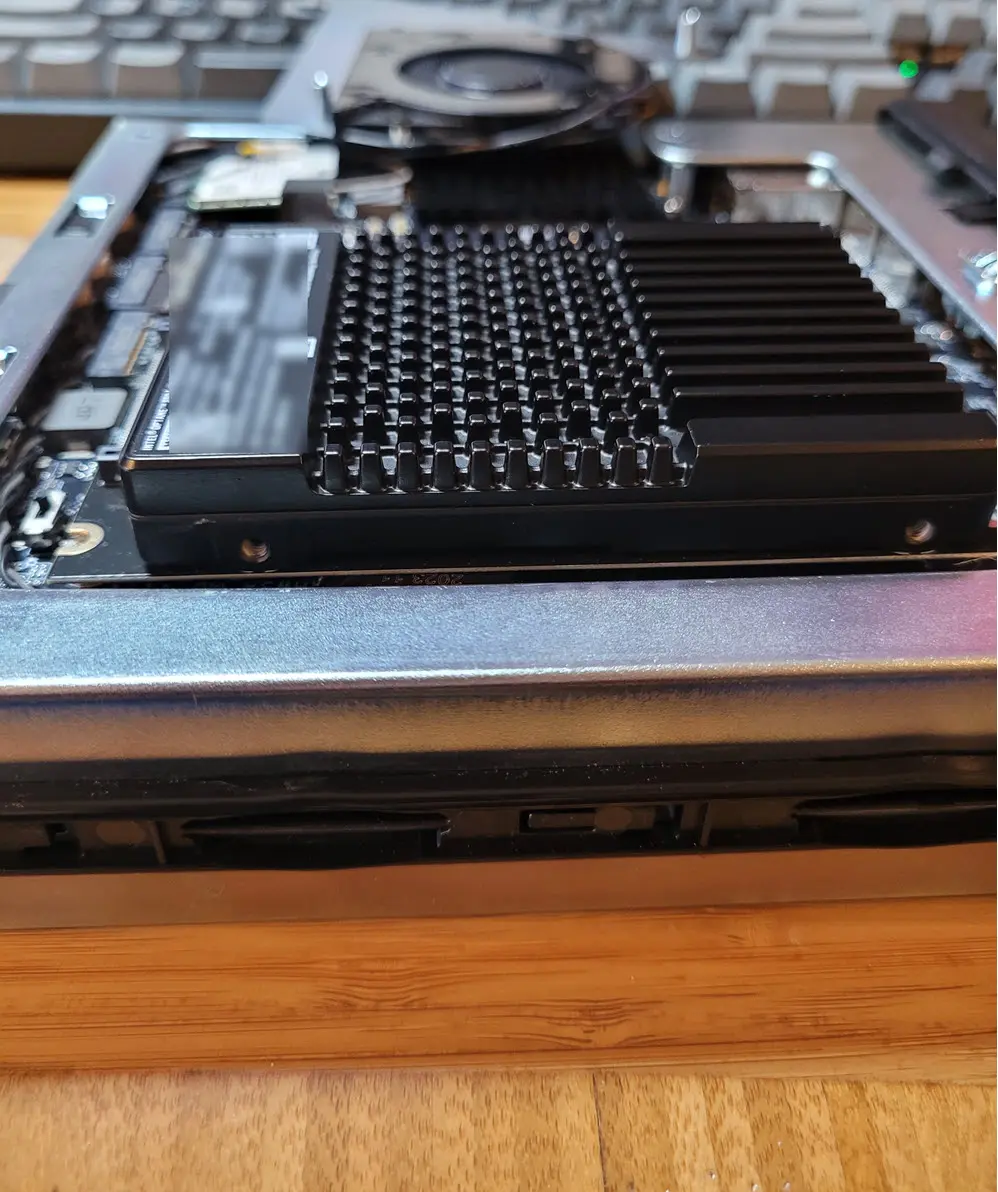

Excellent Cooling for heat

This thing is configured with a Core i9 processor that has the potential to generate some heat, along with the (3) NVMe slots. However, I really like how the fans and cooling are configured in this unit: it has the large fan on the front side where the processor is located and the high-speed cooling fan on the underneath side to actively cool the NVMe SSDs you have installed.

Great storage options

One of the limitations with many of the mini PCs that I have reviewed and had in the home lab so far has been storage. Most of the minis I have had my hands on up to this point have 1 or maybe 2 NVMe slots. Or they may have 1 NVMe slot and a 2.5 inch solid state drive slot.

Storage is another highlight of the MS-01. Equipped with NVMe SSDs, it offers lightning-fast data access speeds. The flexibility to expand storage to its maximum capacity allows users to store large volumes of data without compromising on system performance.

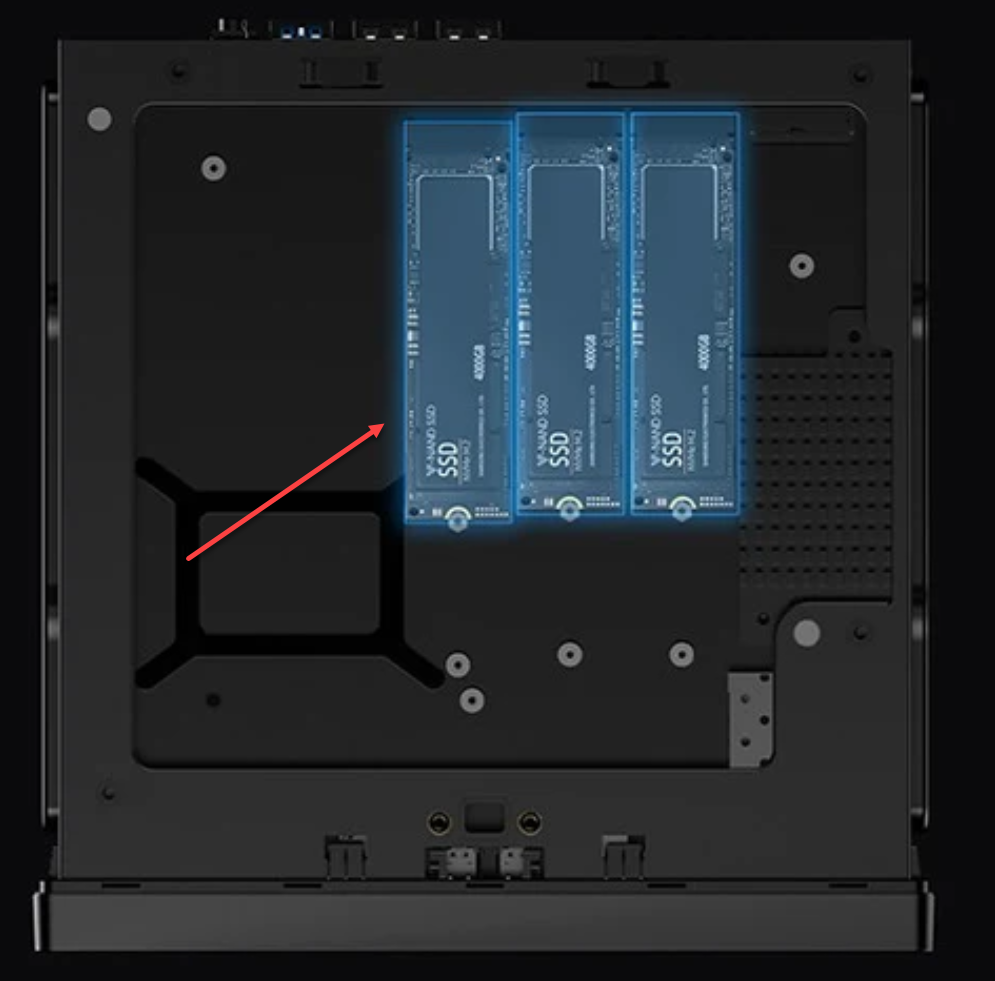

Below is the photo from Minisforum showing the 3 NVMe drives installed in the MS-01.

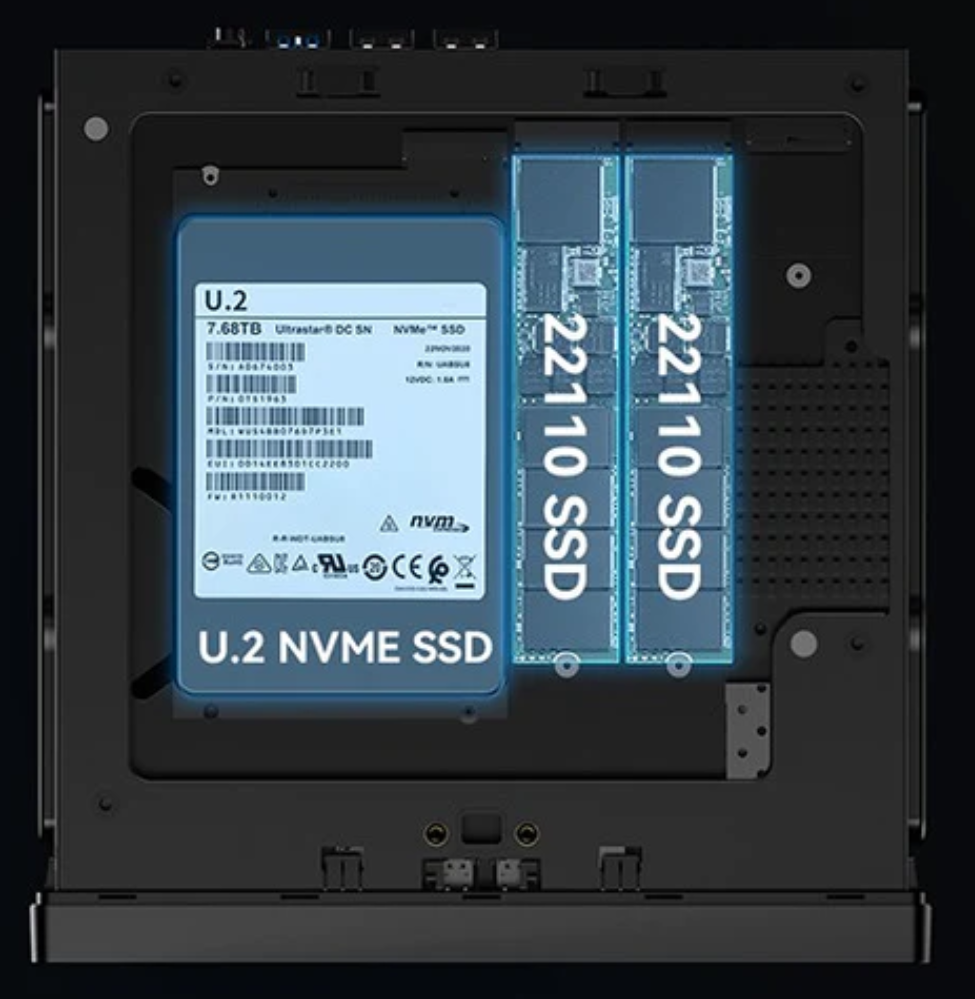

Below is the photo from Minisforum showing the U.2 drive and the (2) NVMe SSDs.

Discrete graphics possibilities

The MS-01, with its PCI-e 4.0 slot, has the potential to support discrete graphics cards, something we don’t see often with mini PCs in general. This will be a great option for those who want this unit for gaming, graphic design, video editing, or other use cases along those lines that need a high-end GPU.

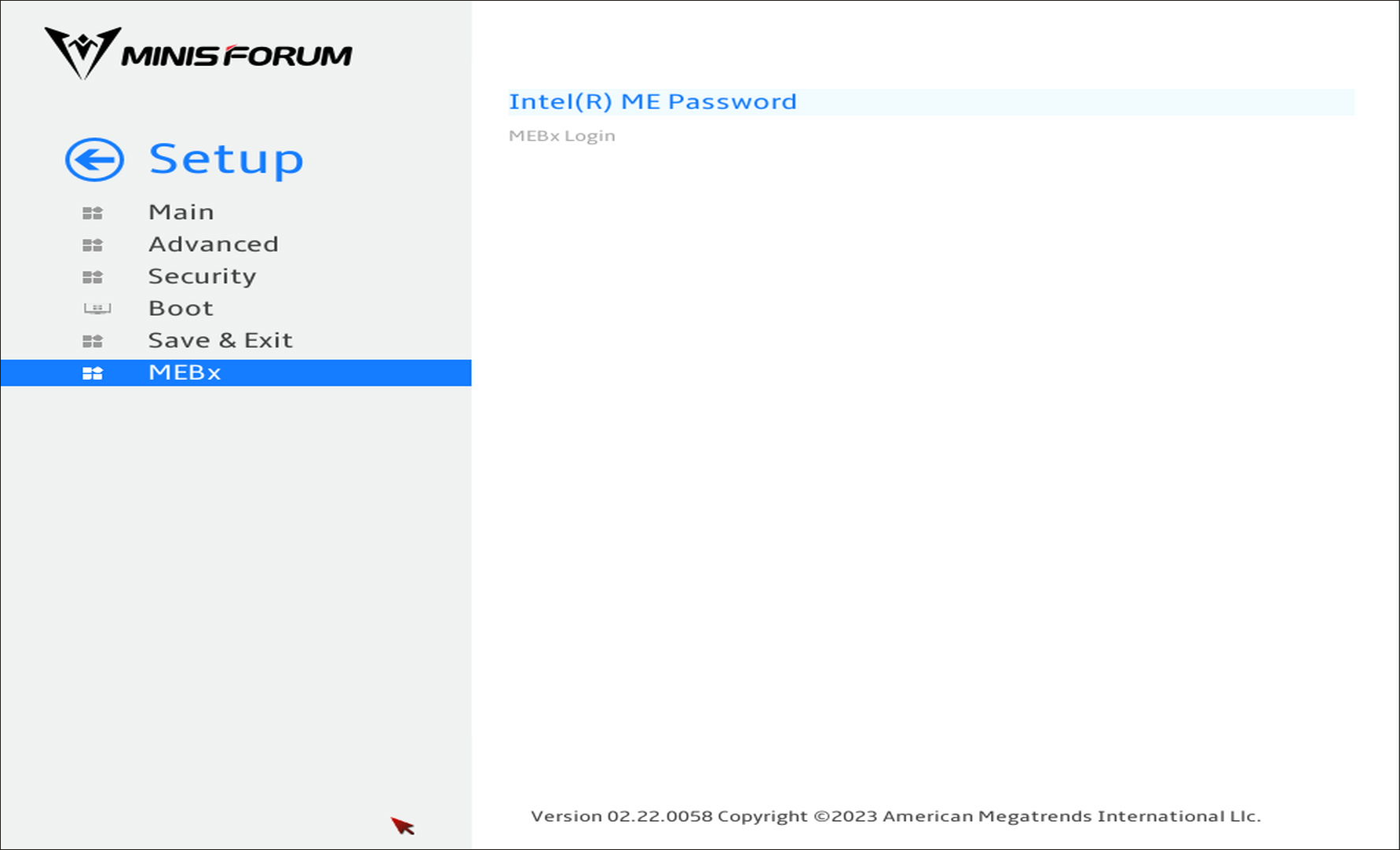

Intel vPro for Advanced Management

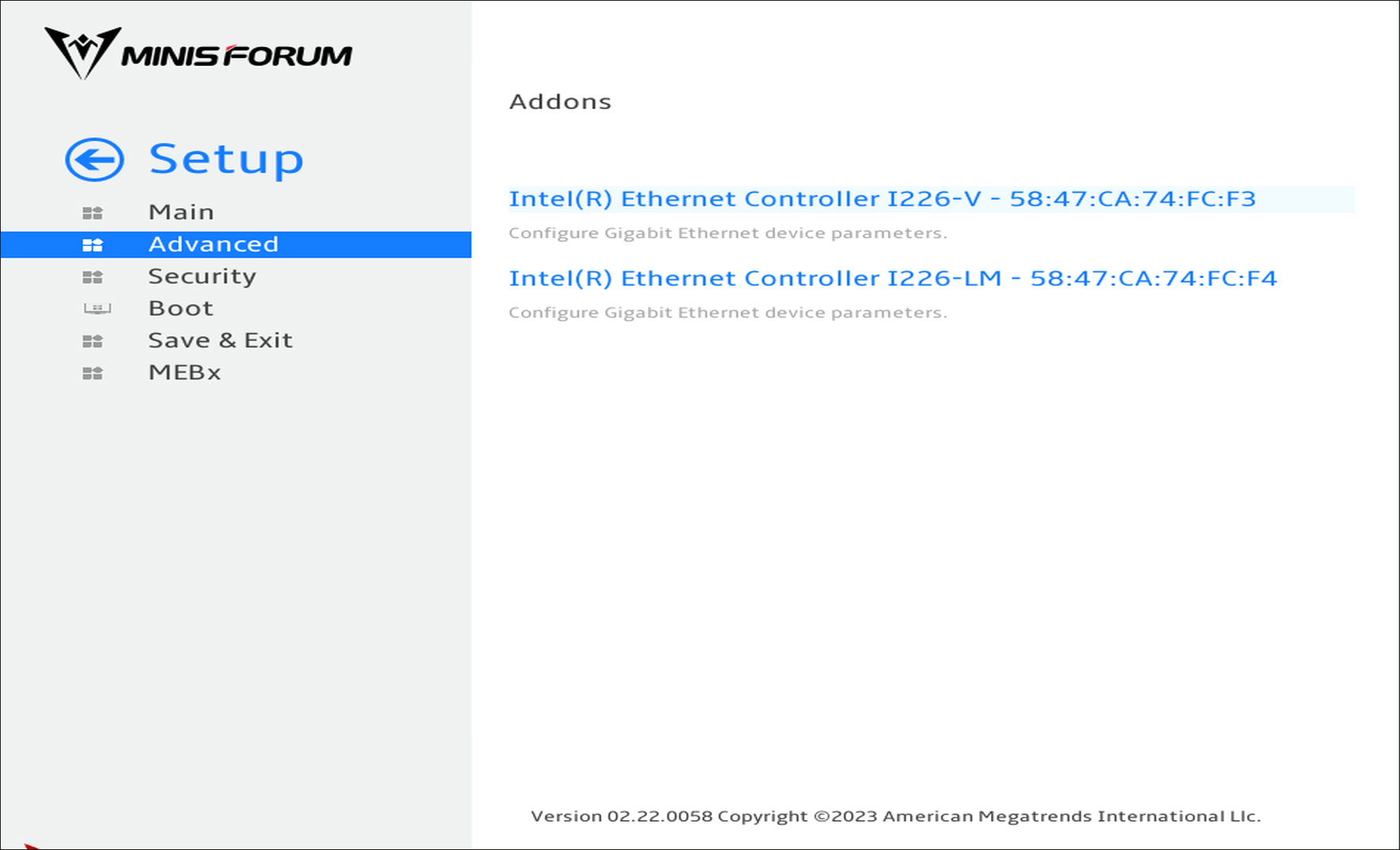

Intel vPro technology in the MS-01 allows for advanced management capabilities, such as out-of-band management. This is great for those who want to use this as a home lab server and have that IPMI-like experience. This feature enables better control over the system and provides better security and remote management possibilities.

Below you can see the i226-V and the i226-LM indicating the ability to manage the MS-01.

Unboxing the Minisforum MS-01

Let’s look at the unboxing of the Minisforum MS-01 mini PC and how it came to me. When I purchased, the only option left was the Core i9-13900H barebones configuration. This is what I opted for. Honestly, in the home lab, I think the Core i9-12900H would serve you just as well.

The MS-01 is in secured plastic inside the box in a foam compartment.

After removing the plastic, this is a view of the back of the MS-01. You can see the SFP cages on the left hand side and the 2.5 GbE ports next to those, with the USB-4 ports, HDMI, and then (2) USB-A ports, with the barrel port connector for the power brick all the way on the right-hand side.

On the front from right to left, we see the power button, the audio jack, USB-A 3.x adapter, then (2) USB-A ports.

For a comparison of the size of the power adapter, here is the MS-01 next to the power brick for the unit.

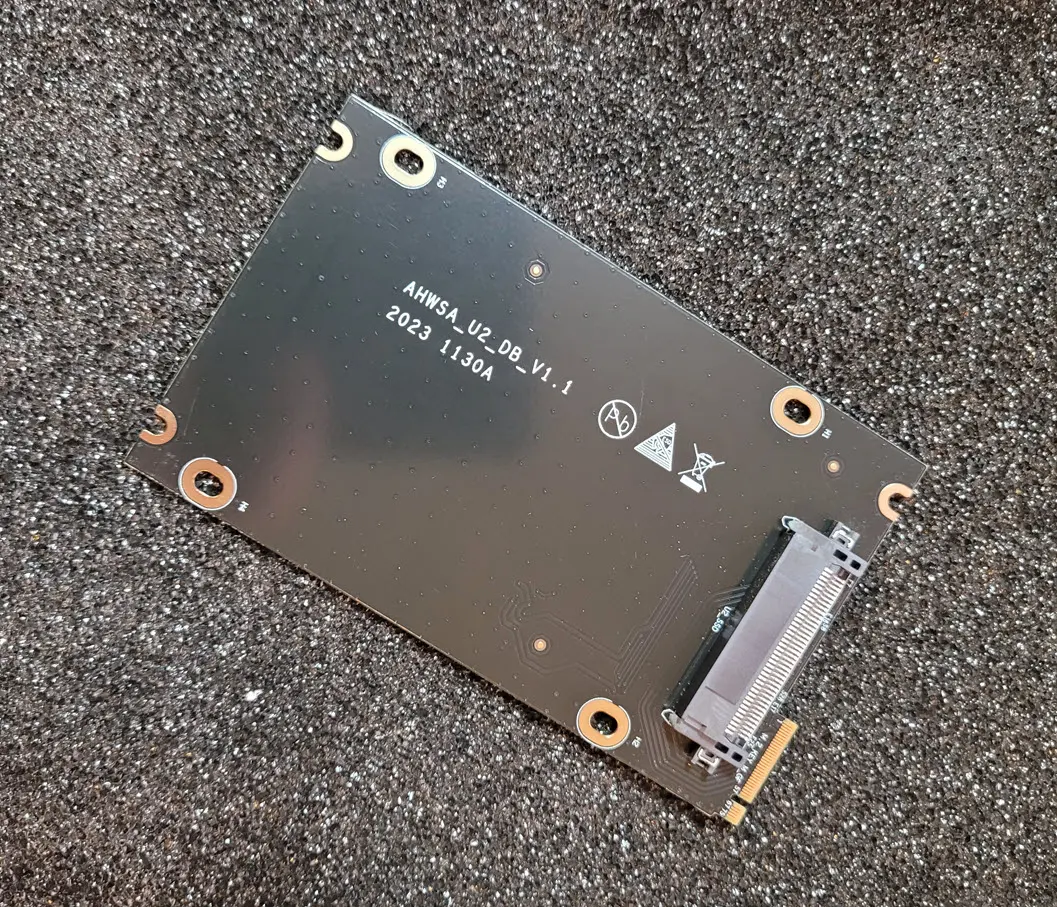

The MS-01 comes with a U.2 adapter plate that plugs into one of the PCI-e M.2 slots on the underneath side of the unit, which we will look at below.

You remove the unit from the outside housing using the tool-less button press, and the inside slides out of the housing. Here you can see the PCI-e 4.0 expansion slot, which is great to see in a mini PC form factor.

Another angle of the top of the unit outside of the housing.

To get to the RAM slots, you have to remove the (3) little screws in the fan assembly and it pops out to reveal the (2) SO-DIMM slots. These will take an unofficial maximum of 96 GB of RAM with (2) 48 GB SO-DIMMs.

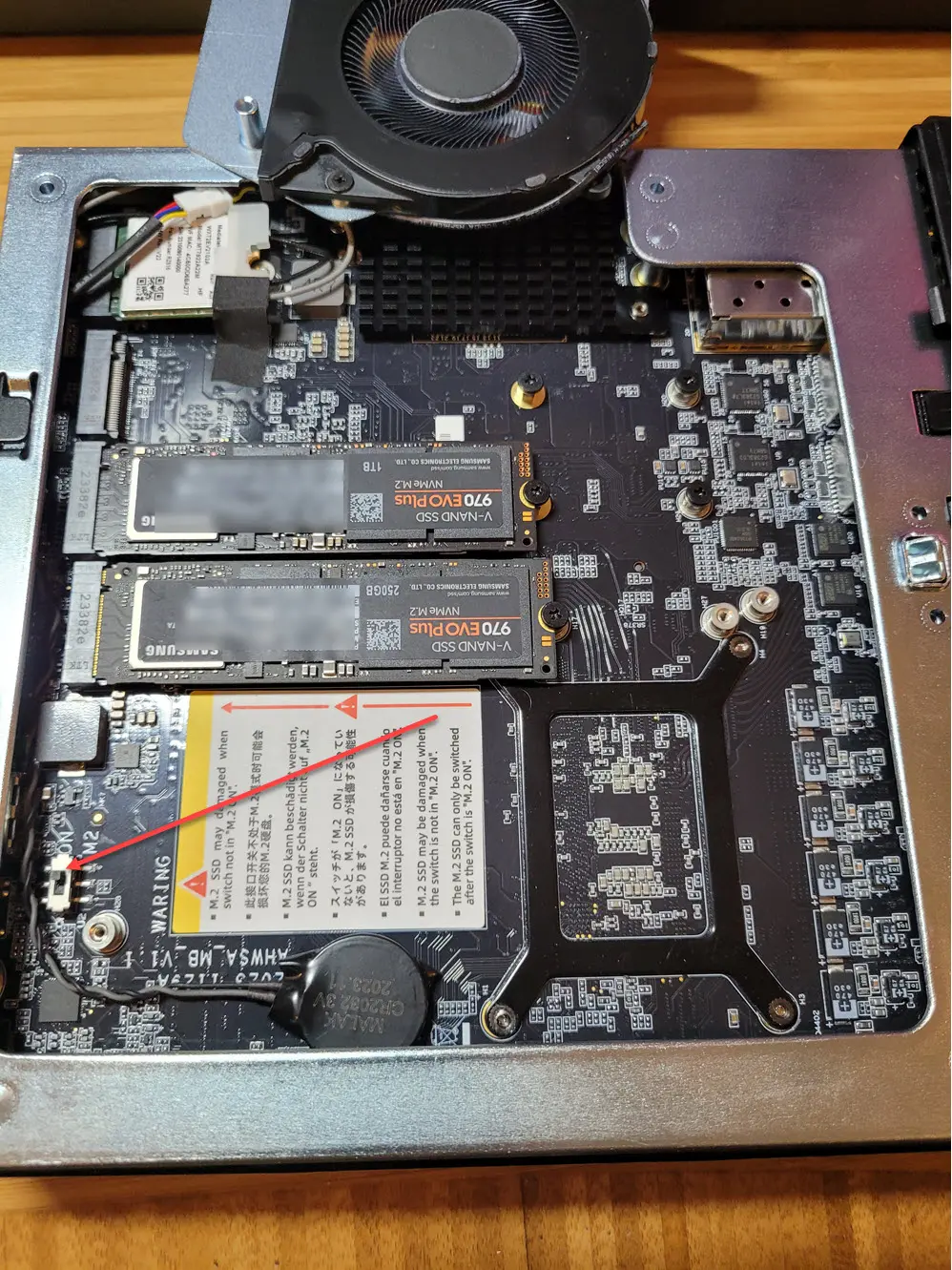

Moving to the underneath portion of the unit, there is a fan assembly that is also held in place by (3) screws at its corners. You remove these and it reveals the (3) M.2 slots.

As you can see, the U.2 adapter plate I have inserted below fits into the first M.2 slot. Then, (2) of the screws from the fan assembly will secure the U.2 plate in place once you have your drive mounted.

One disappointment here is that normal height U.2 drives, like this Intel Optane drive I received from the vExpert program, won’t fit. Actually, they fit in the slot OK, but you won’t be able to get the fan assembly back on or the unit back into the housing.

Another point to note is the switch on the unit where I have the arrow pointing. This switch must be in the M.2 position if you have that slot populated with an M.2 NVMe SSD. If you accidentally have it switched to the U.2 slot, it will damage a.k.a “fry” your M.2 SSD as it puts out more voltage when it is switched to the U.2 position.

The BIOS

I have to say that I wasn’t too crazy about the “wizardized” BIOS with the Minisforum MS-01. I am old school when it comes to BIOS configuration and like the classic “blue screen” BIOS menus, etc. These just seem much easier and more responsive.

Some may like the more wizardized BIOS screens though.

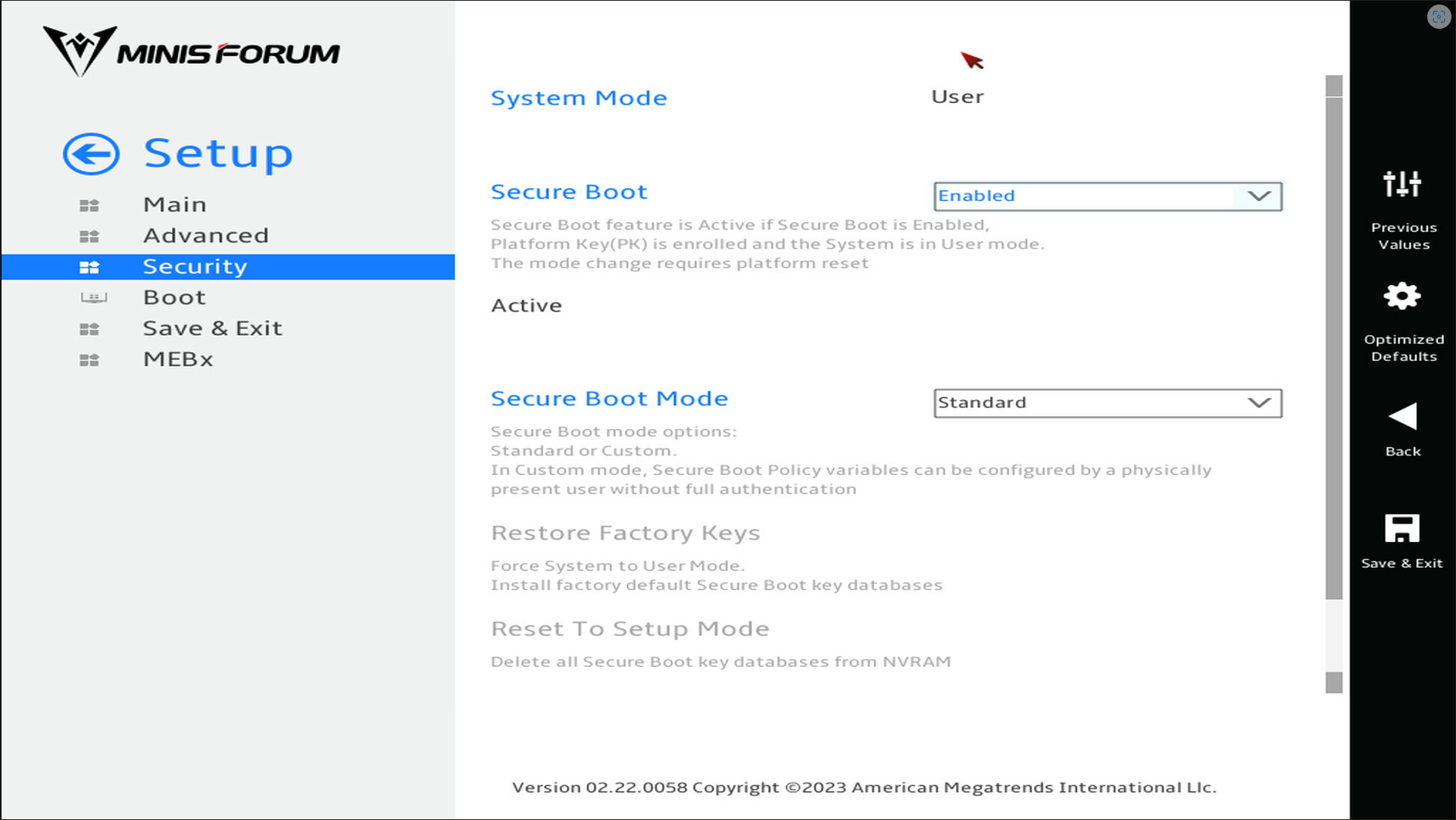

A note on Secure boot and installing from USB

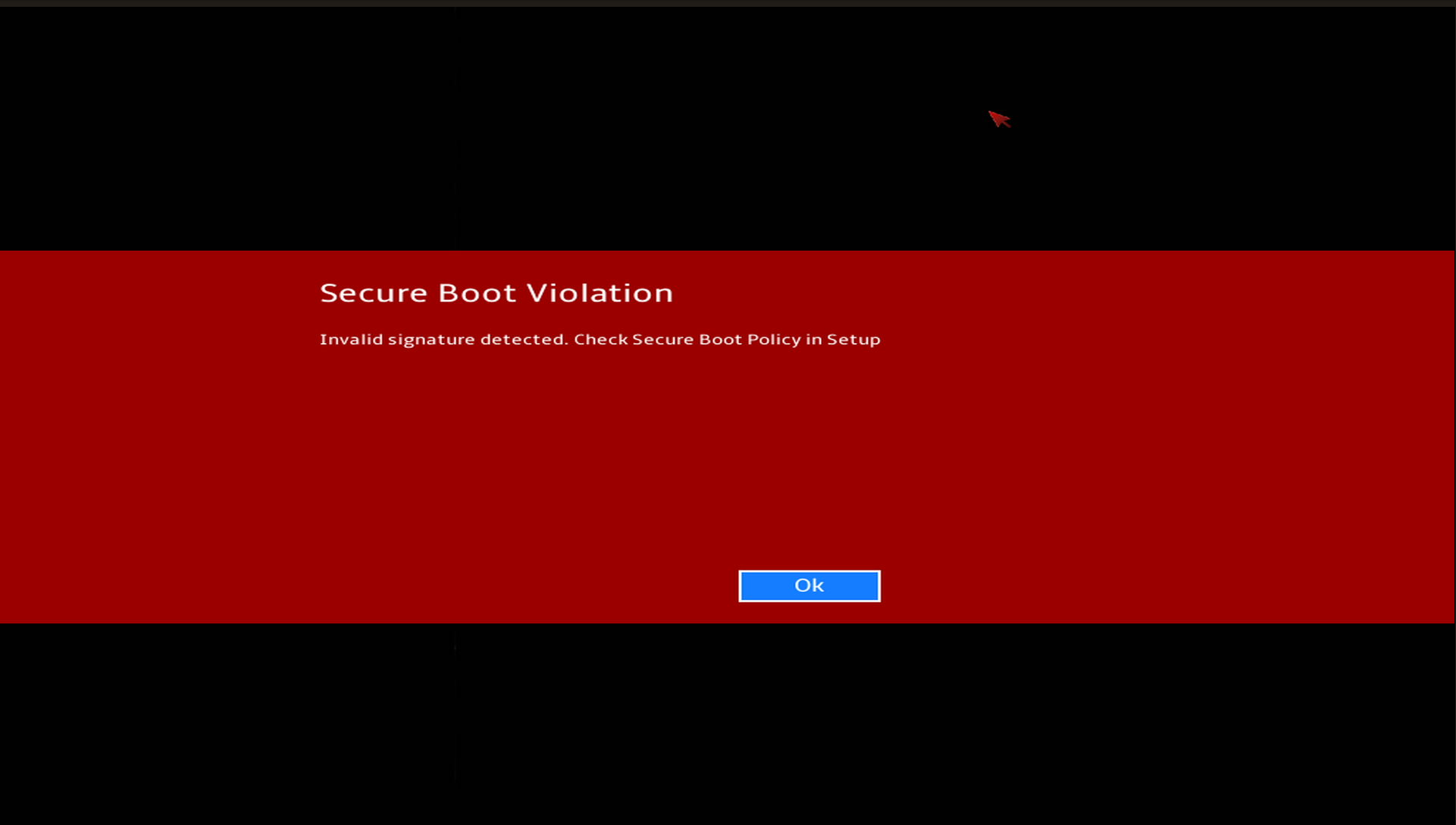

As a note, if you want to install a hypervisor like VMware ESXi or Proxmox, you will likely create a USB drive using Rufus from the ISO file. However, this will not be compatible with secure boot.

When I first unboxed the unit, I had to disable Secure Boot to get to a point where I could install it from USB. If you don’t, you will see this type of screen.

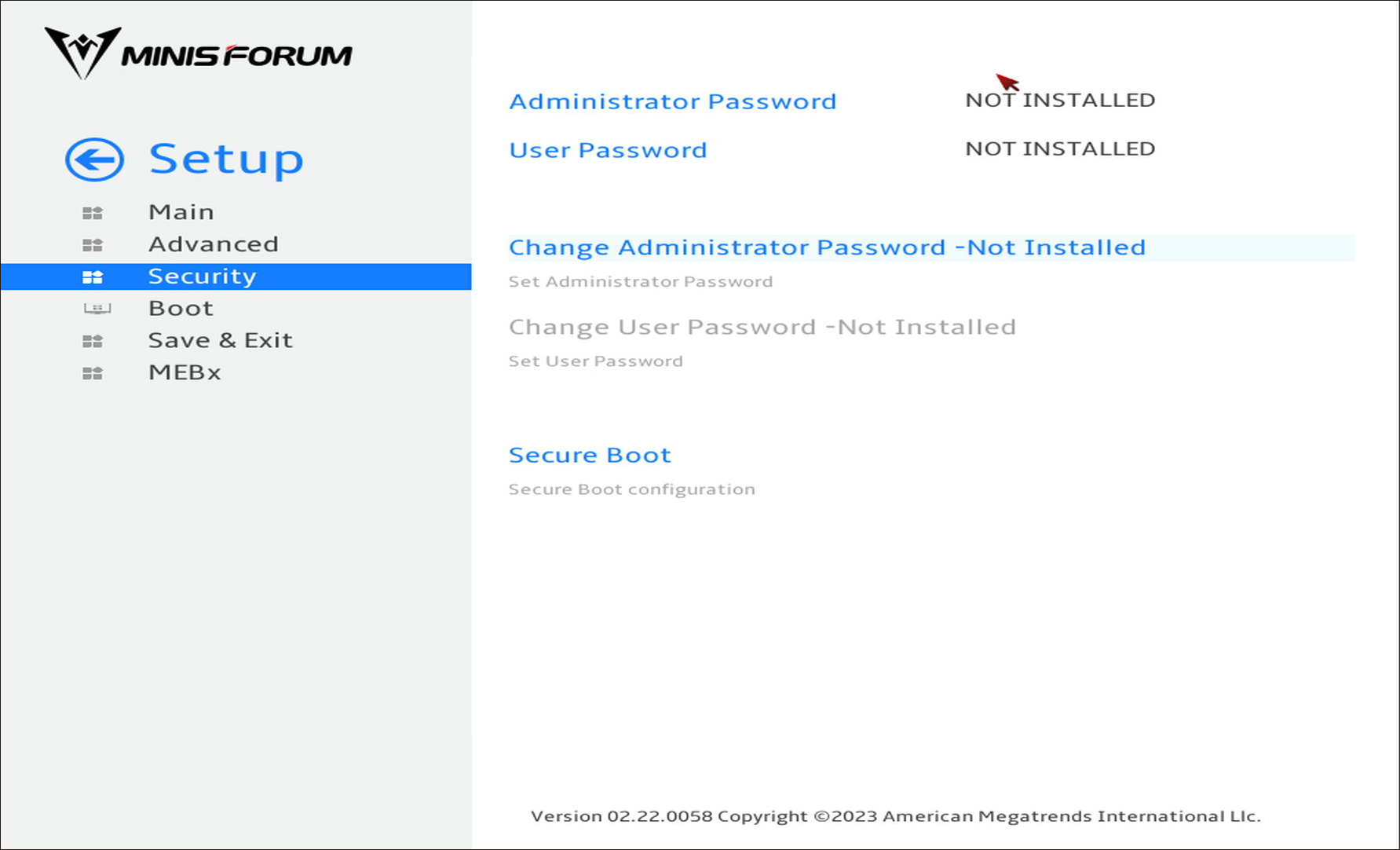

However, I ran into an issue where it wasn’t obvious how to disable Secure Boot.

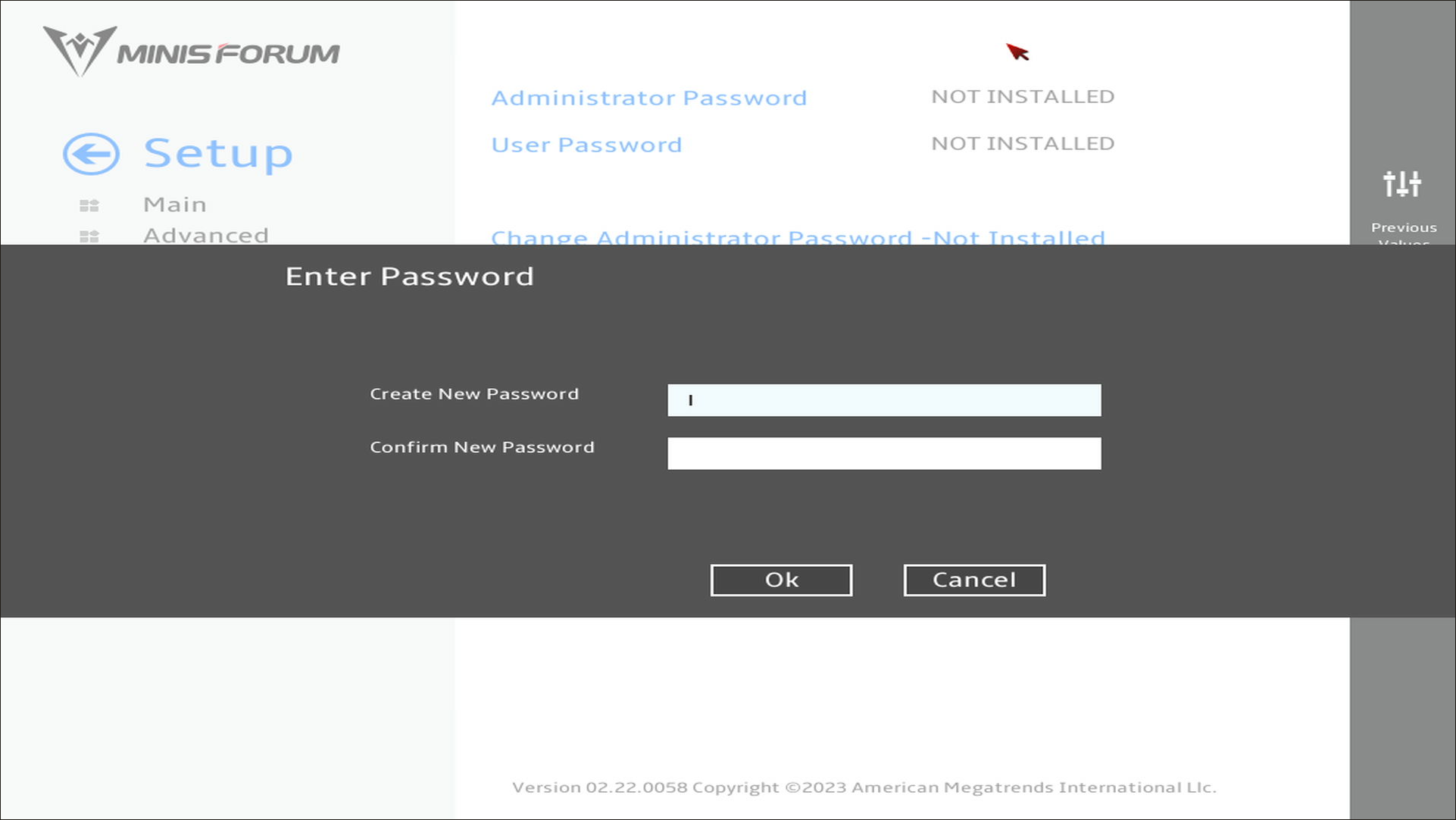

As it turns out, you can’t disable Secure Boot unless you have set both an Administrator password and a User password. I set a password for both of these and was able to disable Secure Boot.

As you can see on the Security screen, the Secure Boot option is not greyed out. It will be greyed out until you set the administrator and user passwords and then save and reboot back into the BIOS. Then you can disable Secure Boot.

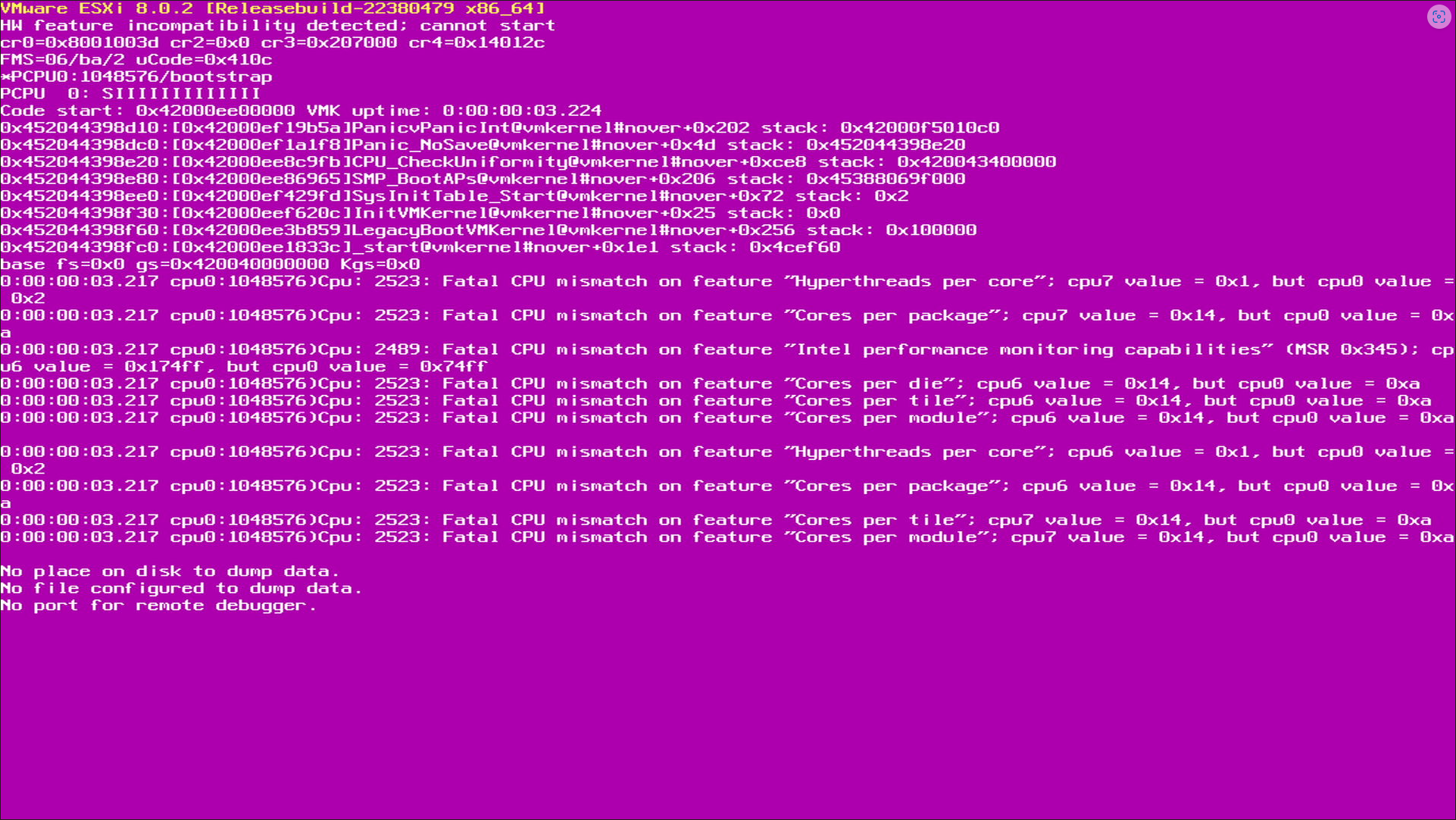

VMware hurdles

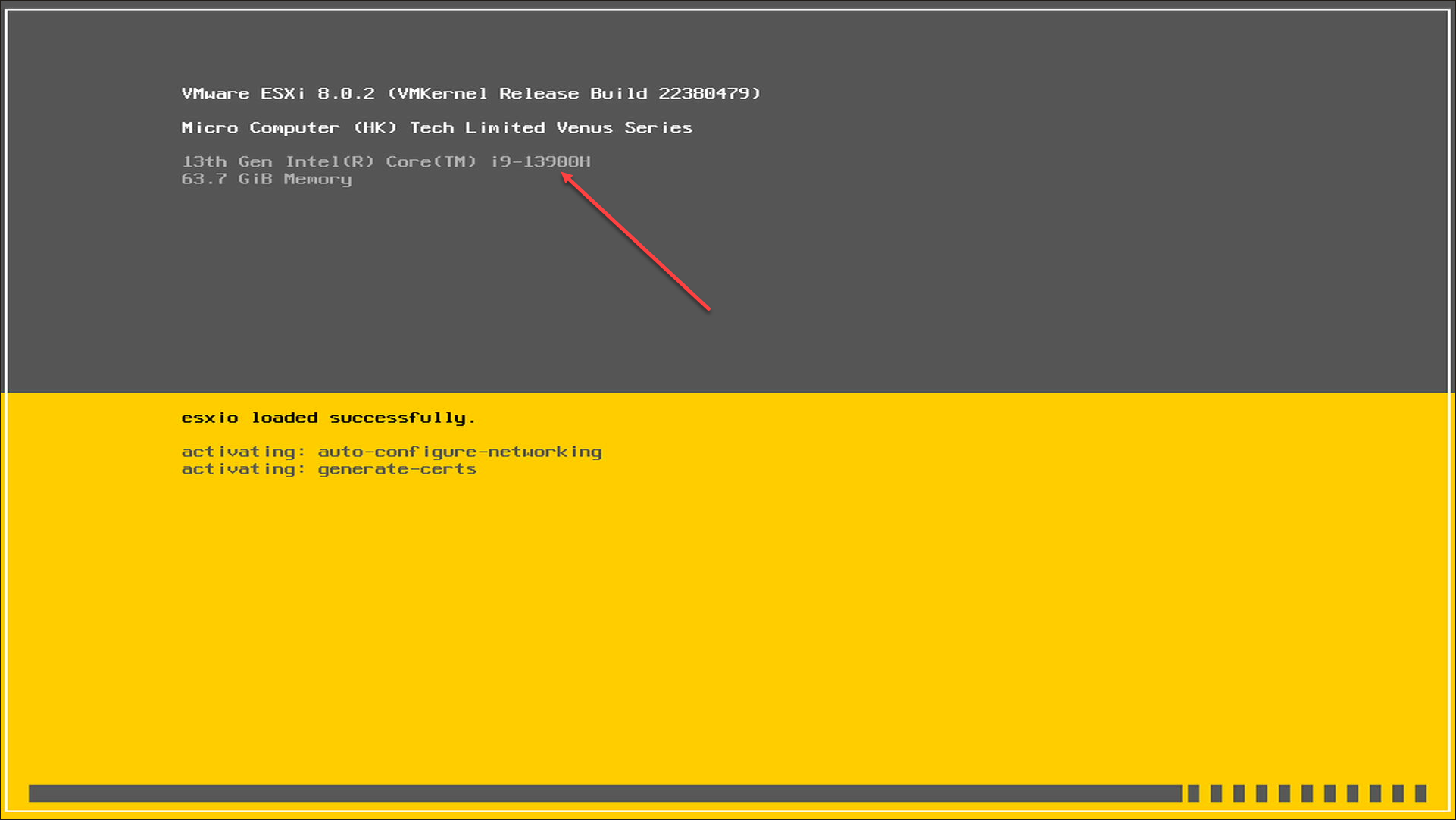

When installing VMware ESXi, you will have issues, as with all mini PCs with hybrid processors. You will receive a purple screen of death about the CPU mismatch.

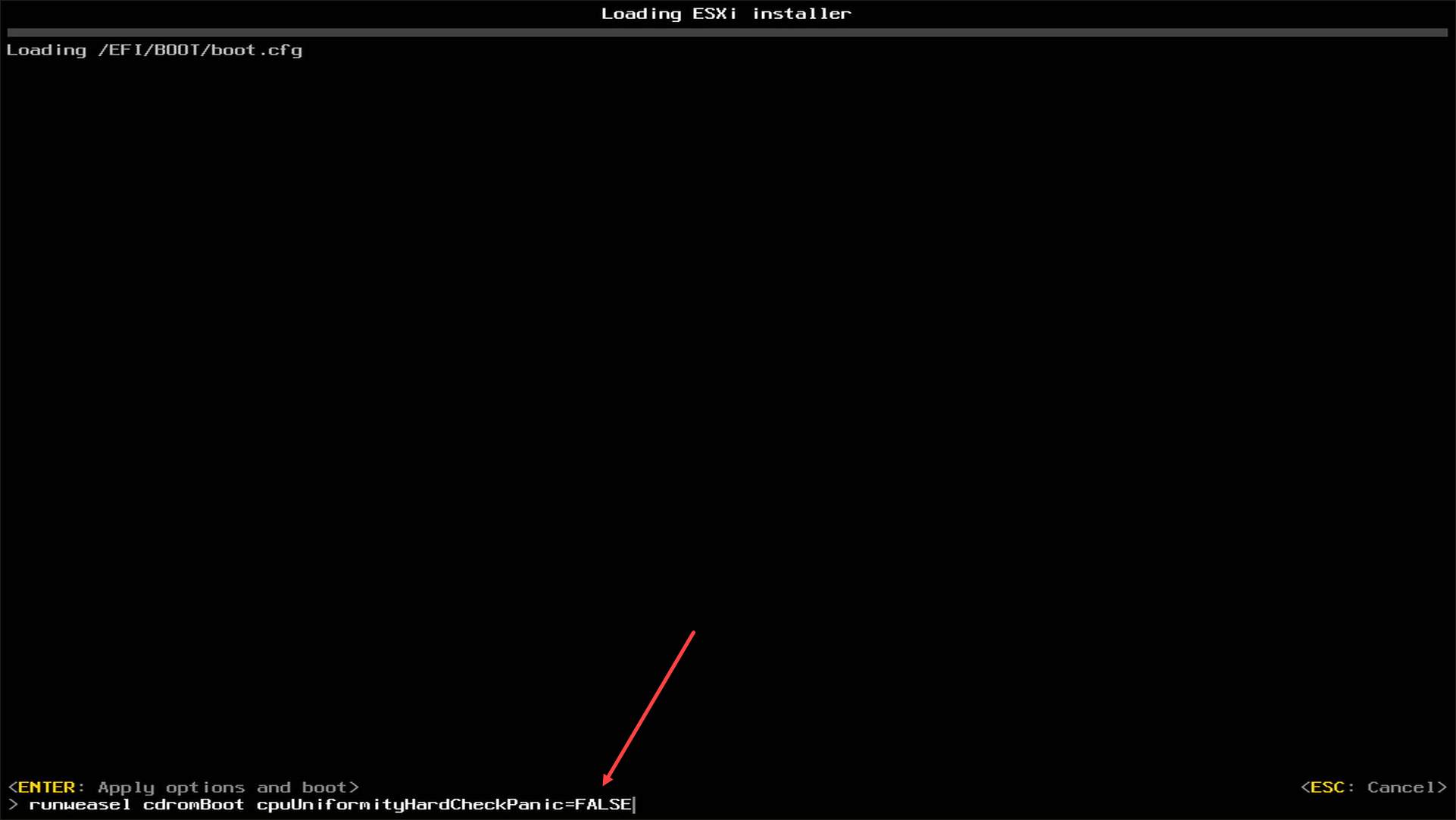

You will need to reboot the MS-01, and if you leave the E cores enabled, you will need to add the following boot option (press Shift+O at the ESXi boot prompt to edit the boot options) as you boot the system for installation and then again on the first boot-up.

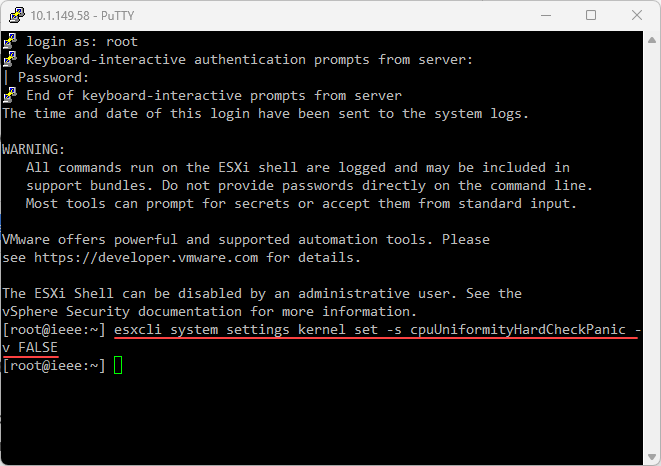

cpuUniformityHardCheckPanic=FALSE

After you are booted into ESXi, enable SSH, remote to your MS-01, and run the following command to make the boot option persistent:

esxcli system settings kernel set -s cpuUniformityHardCheckPanic -v FALSE

Now we can reboot at will, without needing to enter the boot option each time.

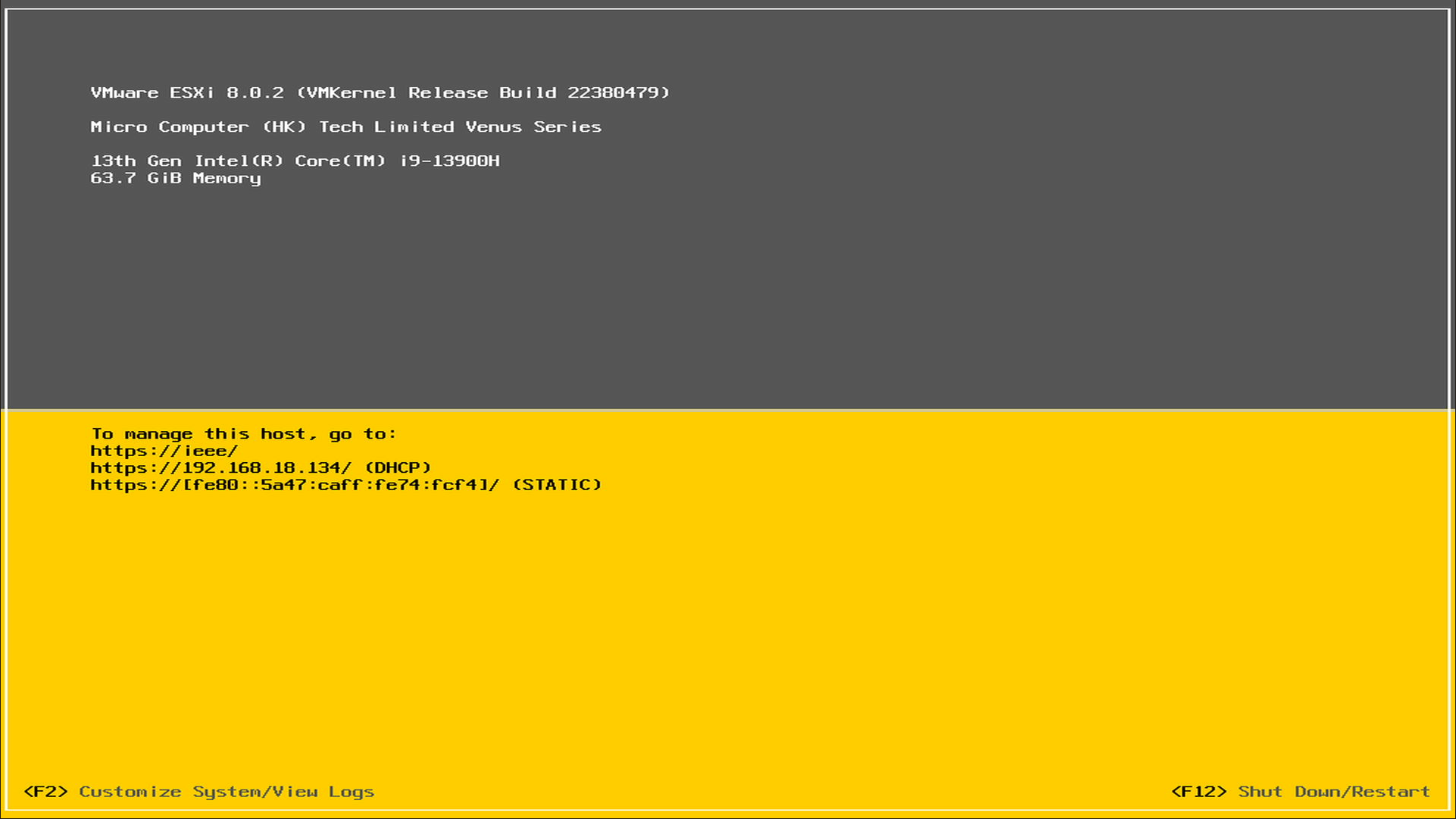

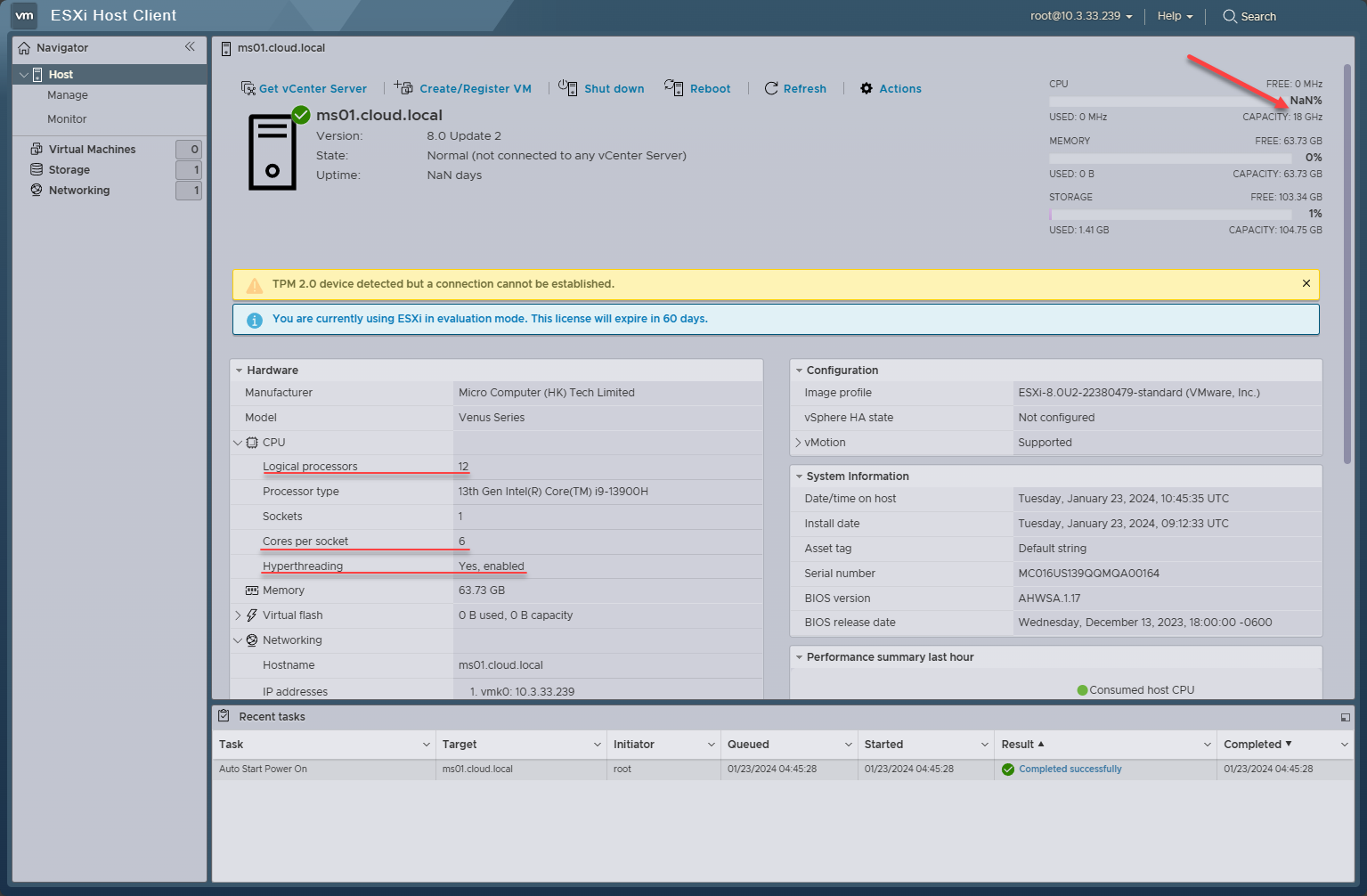

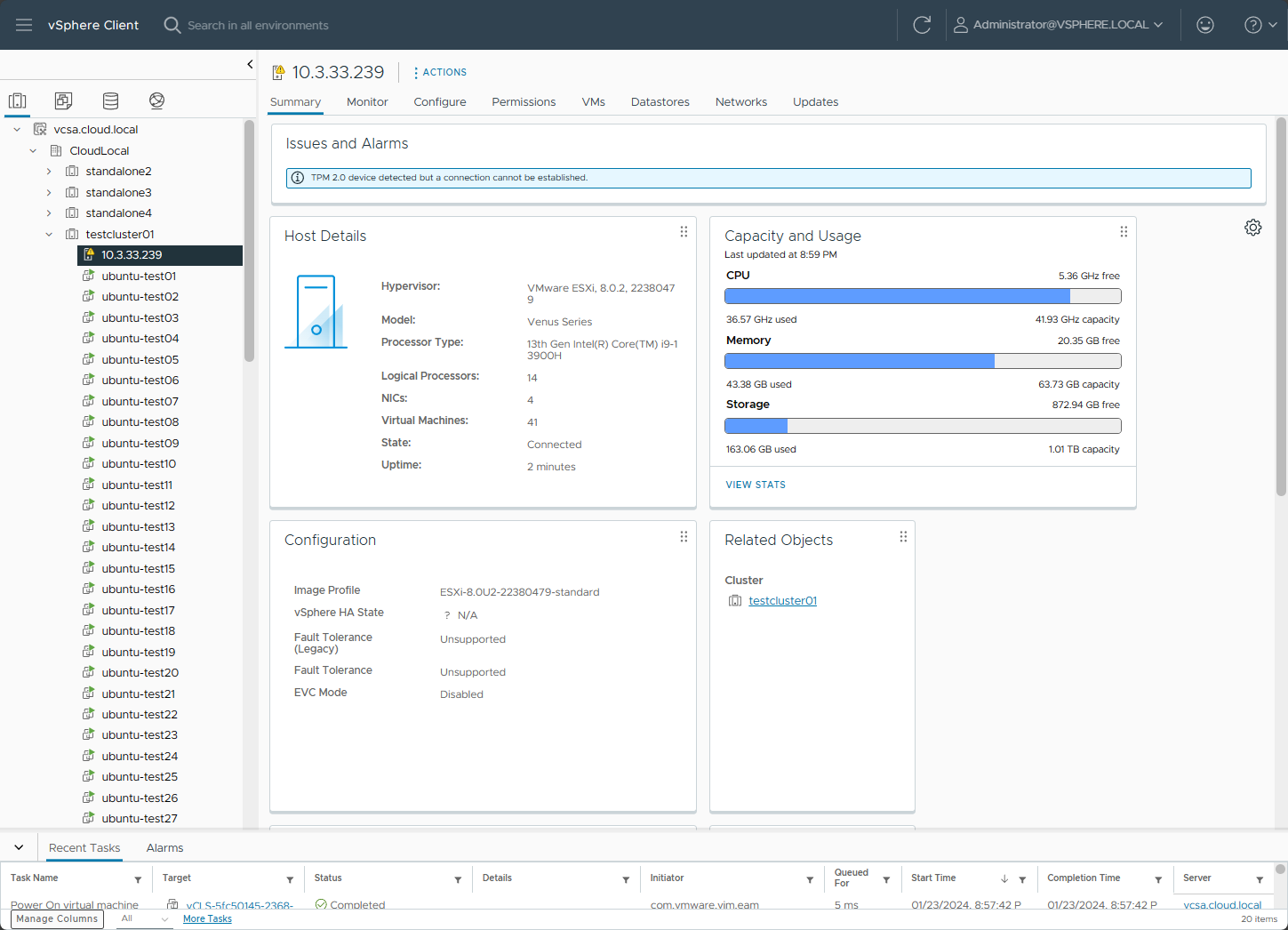

After ESXi is installed and the MS-01 is booted.

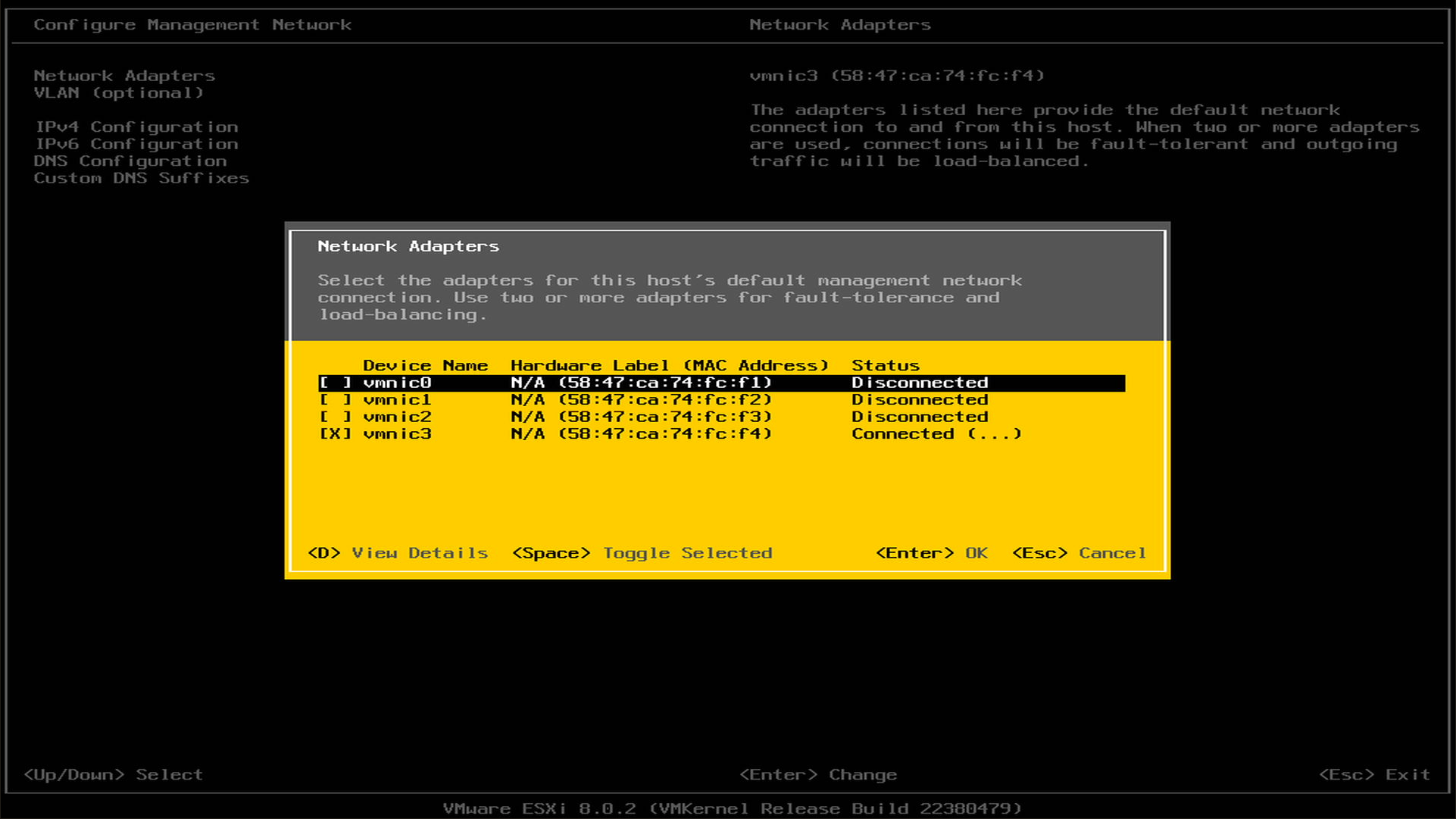

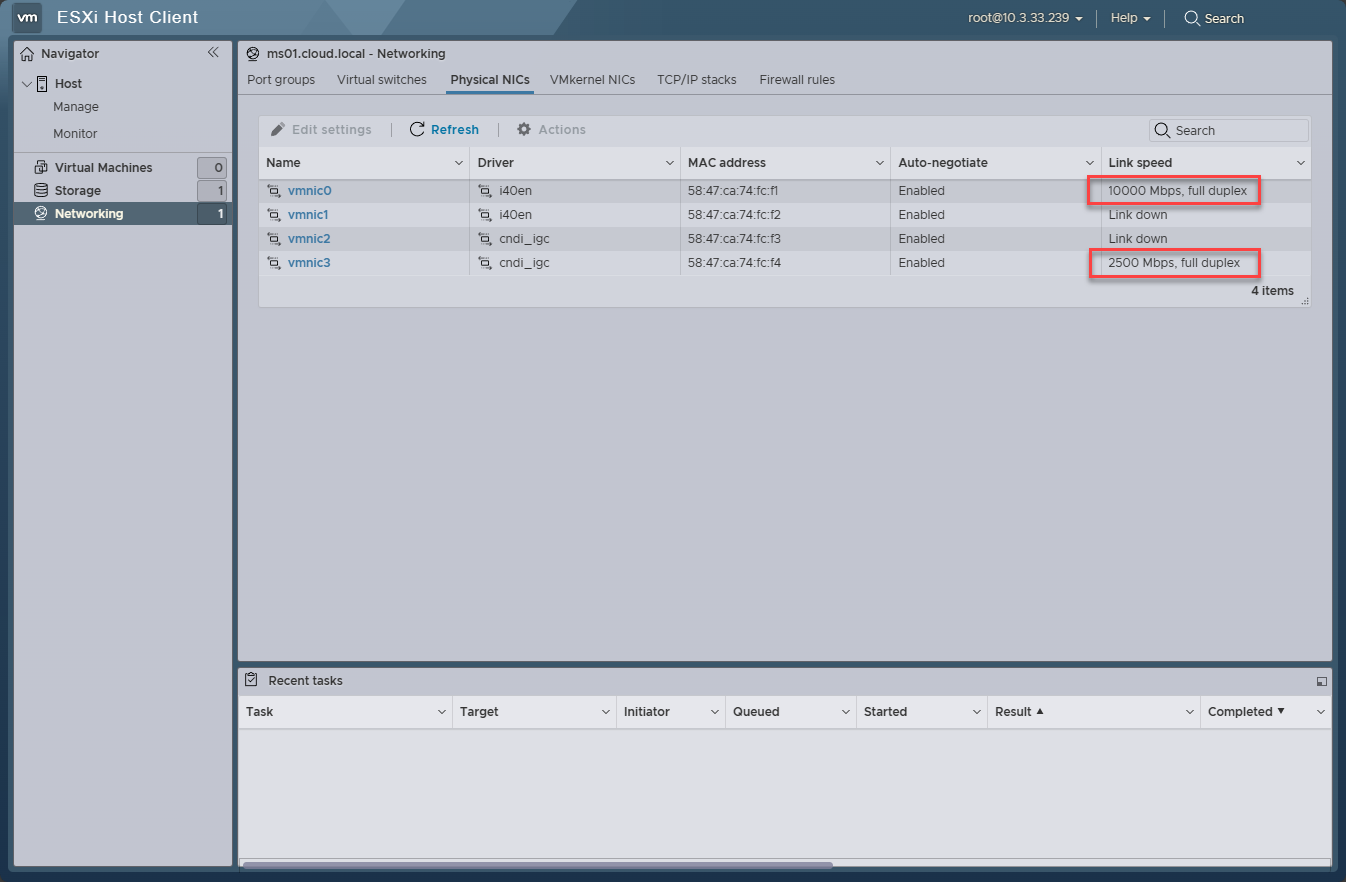

Also, a beautiful sight in ESXi is the fact that all the network adapters are discovered without issue. We see the (4) network adapters here comprised of the (2) 10 gig adapters and (2) 2.5 gig adapters.

Enabling and Disabling Efficient Cores

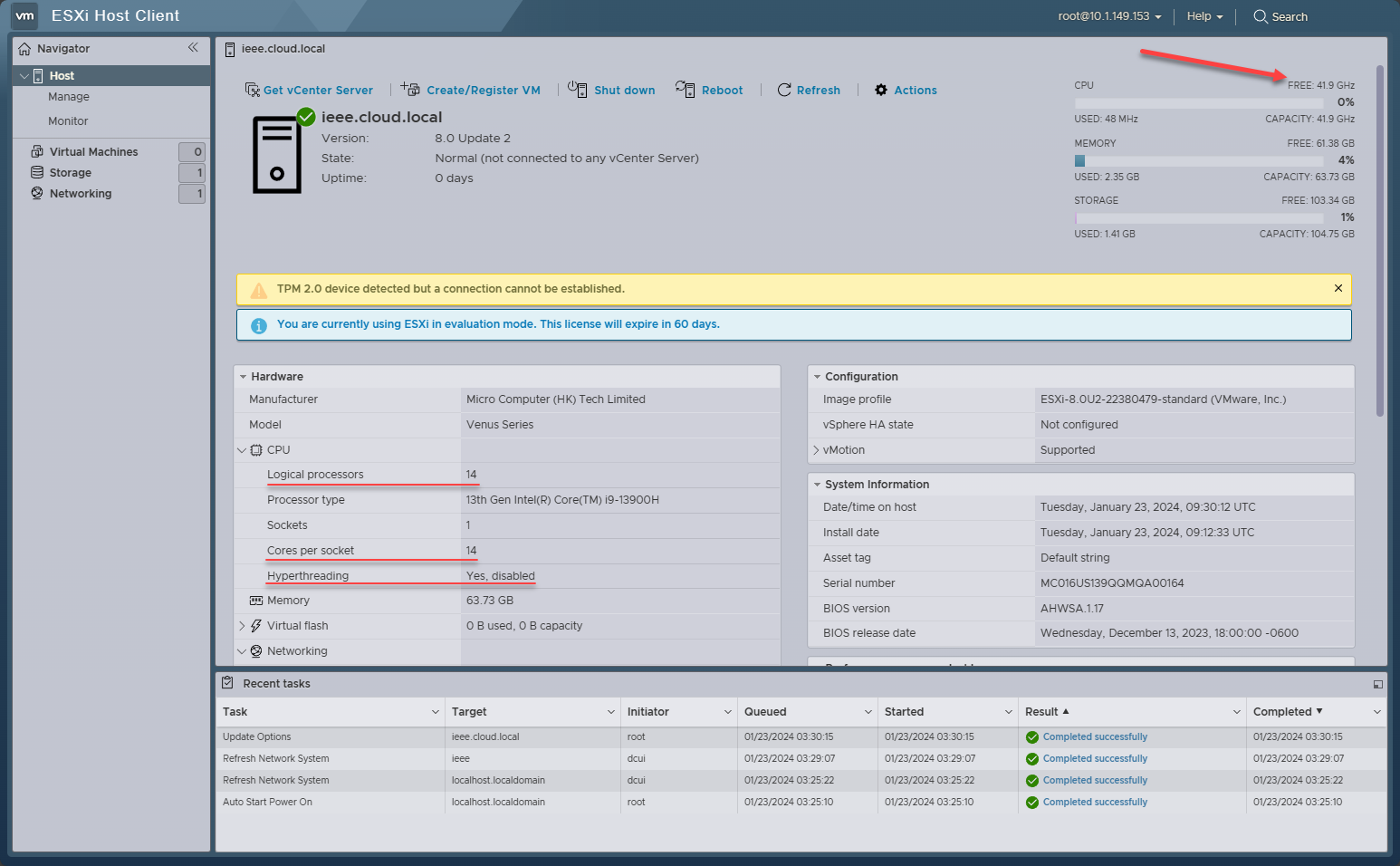

I wanted to see what we see in ESXi when enabling and disabling the Efficient cores. With everything enabled and the ESXi boot option in place to allow it to boot, we can see we have a total of 41.9 GHz available, not bad.

However, you will note a few interesting things. We see the Logical processors equal the number of cores per socket.

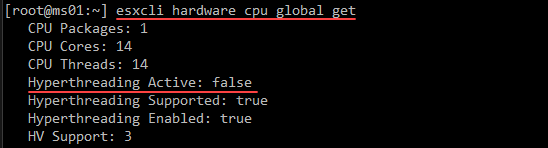

This lines up with what we see if we run the following command:

esxcli hardware cpu global get

Even though we see Hyperthreading Supported: true and Hyperthreading Enabled: true, we also see Hyperthreading Active: false.

The reason for this is that due to the non-uniform architecture of the Core i9-13900H, ESXi doesn’t support hyperthreading and it is disabled.

E Cores disabled

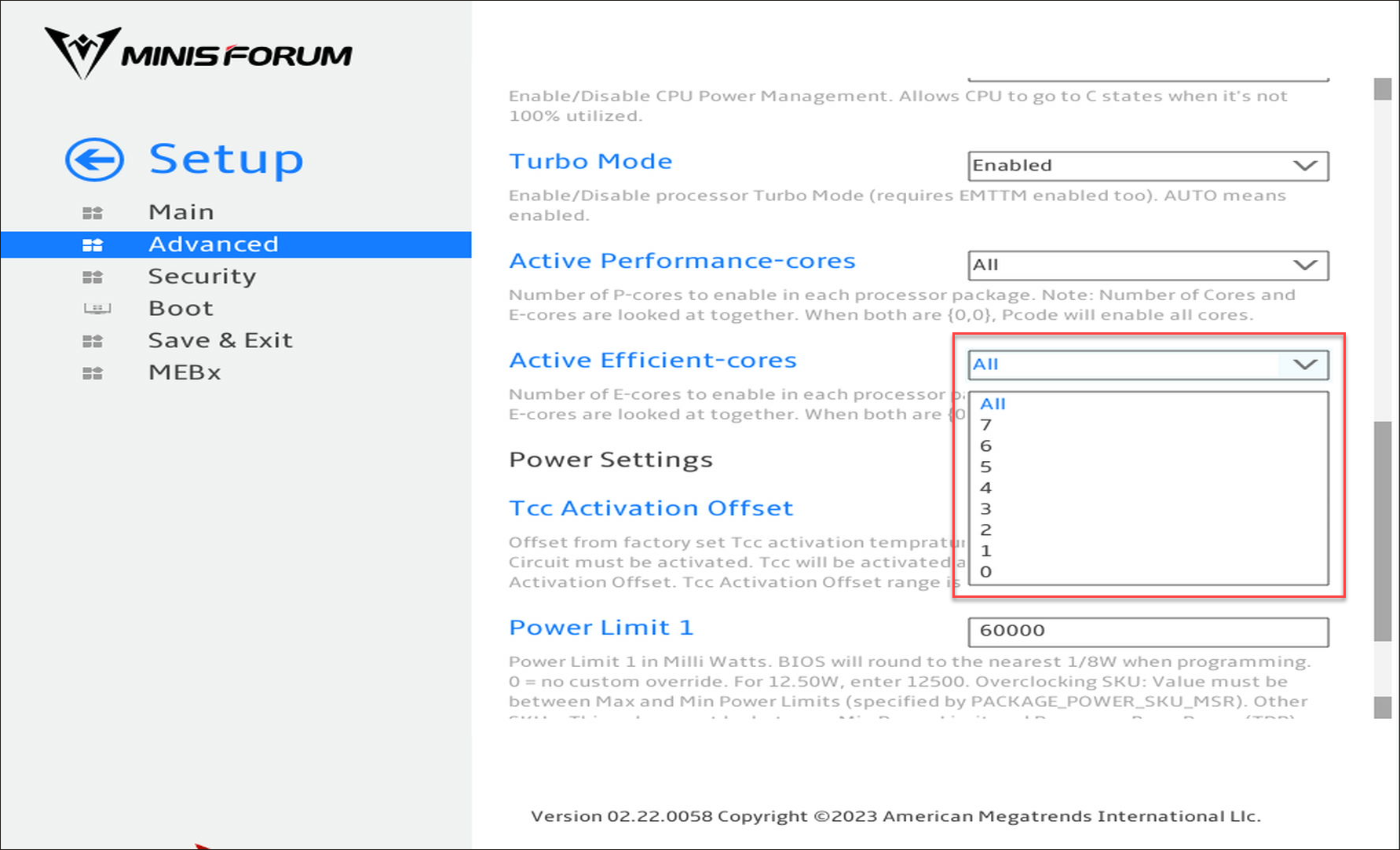

The Minisforum BIOS, under the Advanced menu, allows you to disable a number of the E cores or all of them under the Active Efficient-cores option.

With the E cores disabled, we see something different. The total CPU capacity now displays as 18 GHz. This counts only the 6 Performance cores; hyperthreading is not counted in the total.

However, we see we have 6 Cores per socket and 12 Logical Processors.
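The two CPU totals above line up with each other if you assume ESXi multiplies the number of physical cores by a single nominal per-core frequency. That is only an assumption about how the host summary arrives at its figure, not something confirmed here. The sketch below is illustrative arithmetic using the numbers from this review (41.9 GHz total, 6 P cores, 8 E cores); the function names are made up for the example.

```python
# Figures taken from the review; everything else is illustrative.
TOTAL_GHZ_E_CORES_ON = 41.9  # host total with all cores enabled
P_CORES = 6                  # Performance cores (hyperthreading capable)
E_CORES = 8                  # Efficient cores (one thread each)


def per_core_ghz(total_ghz: float, cores: int) -> float:
    """Nominal per-core frequency, ASSUMING total = cores * one frequency."""
    return total_ghz / cores


def logical_processors(p_cores: int, e_cores: int, ht_active: bool) -> int:
    """Logical CPUs: P cores count twice only when hyperthreading is active."""
    threads_per_p_core = 2 if ht_active else 1
    return p_cores * threads_per_p_core + e_cores


nominal = per_core_ghz(TOTAL_GHZ_E_CORES_ON, P_CORES + E_CORES)
p_only_total = nominal * P_CORES

print(f"Nominal per-core frequency: {nominal:.2f} GHz")
print(f"Expected total, P cores only: {p_only_total:.1f} GHz")
print("Logical CPUs, E cores on, HT inactive:",
      logical_processors(P_CORES, E_CORES, False))
print("Logical CPUs, E cores off, HT active:",
      logical_processors(P_CORES, 0, True))
```

Under that assumption, the per-core figure works out to roughly 3 GHz, which gives about 18 GHz for the 6 P cores alone. The logical processor counts come out to 14 (equal to the core count) with everything enabled and 12 with the E cores disabled, matching what ESXi reported in both cases.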

10 gig and 2.5 gig networking in ESXi

I am glad to say that I had no issues with the networking in VMware ESXi with the Minisforum MS-01. Everything worked flawlessly with the 10 gig and 2.5 gig adapters as you would want. The box linked up to my Ubiquiti 2.5 gig 48 port switch and my Ubiquiti 10 gig switch without any quirks.

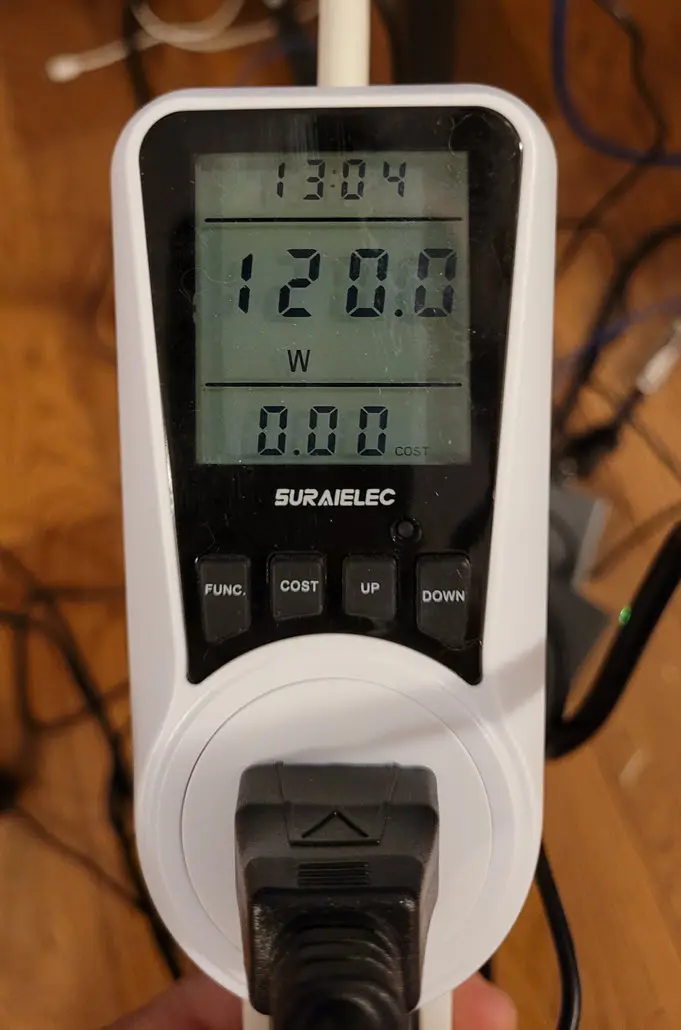

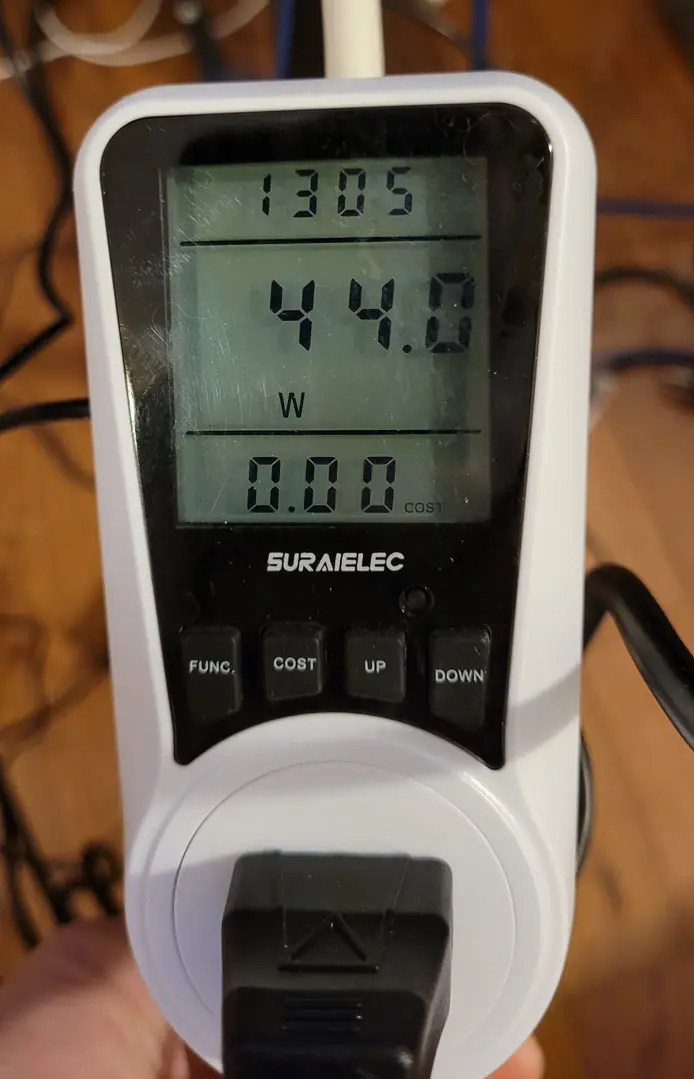

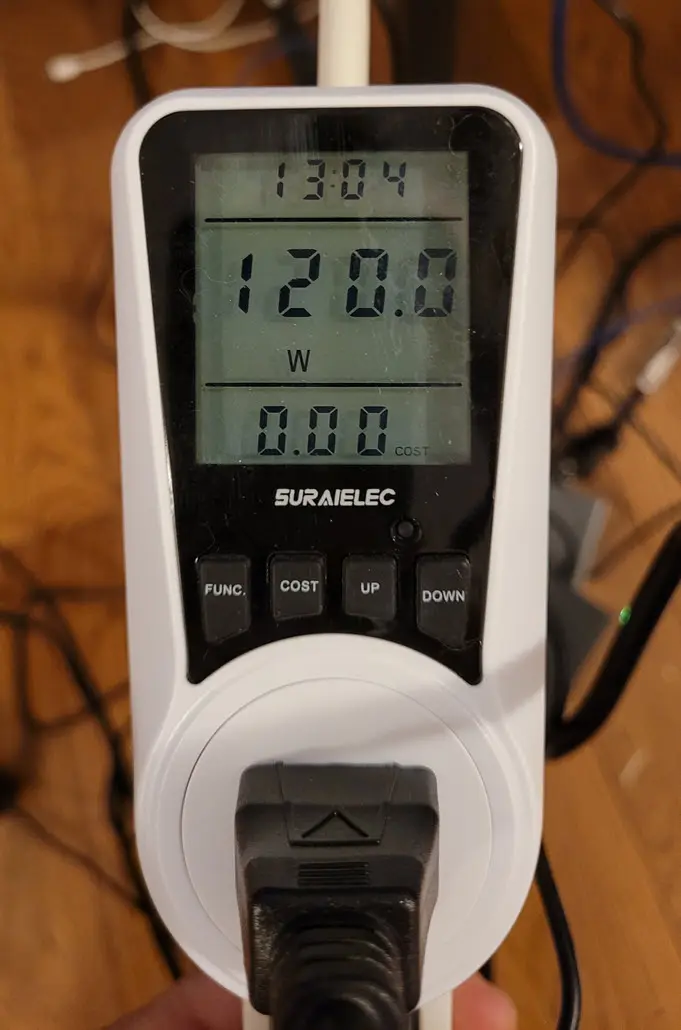

Power consumption

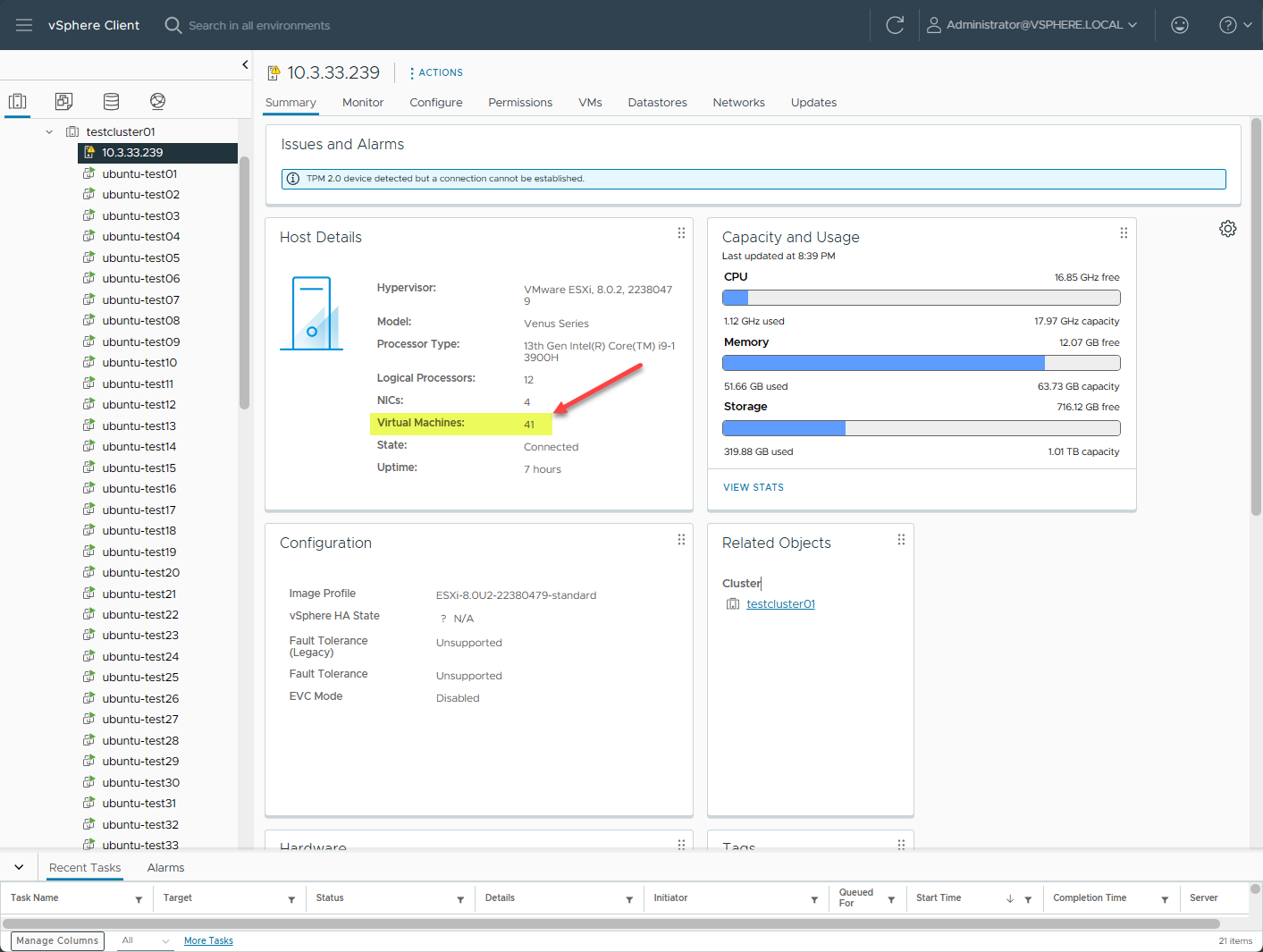

Another interesting test I wanted to perform was power consumption. The first test I did was with the E cores disabled. I created 41 virtual machines, all Ubuntu Server 22.04 LTS. Even with 41 VMs, it barely stressed the CPU, although, in fairness, these weren’t doing a whole lot.

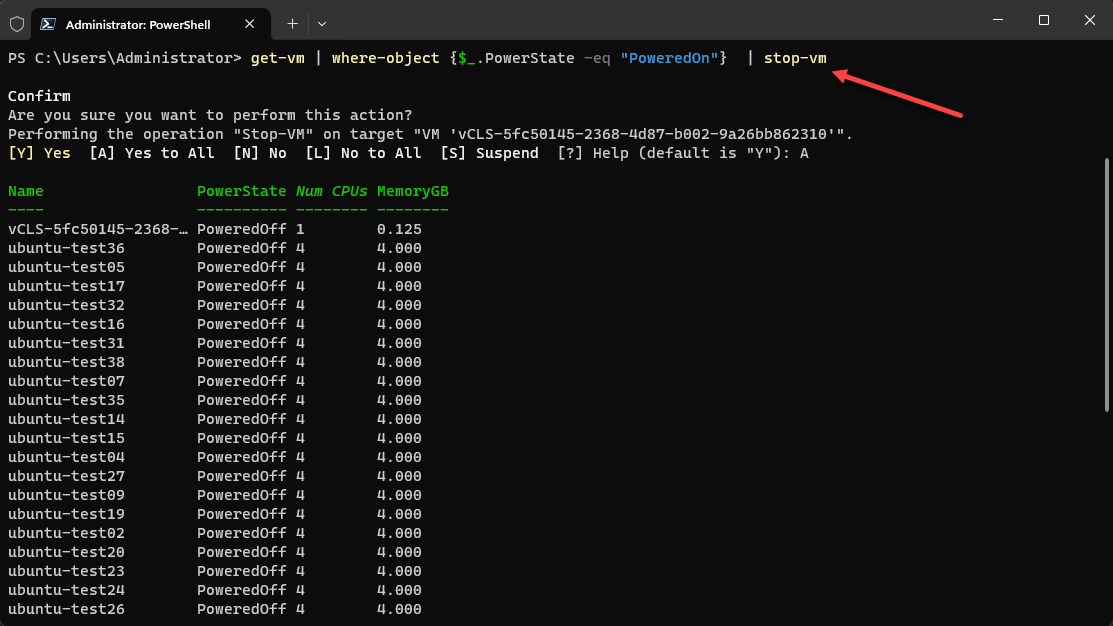

However, using PowerCLI, I powered off all the VMs and then powered them all back on at the same time.

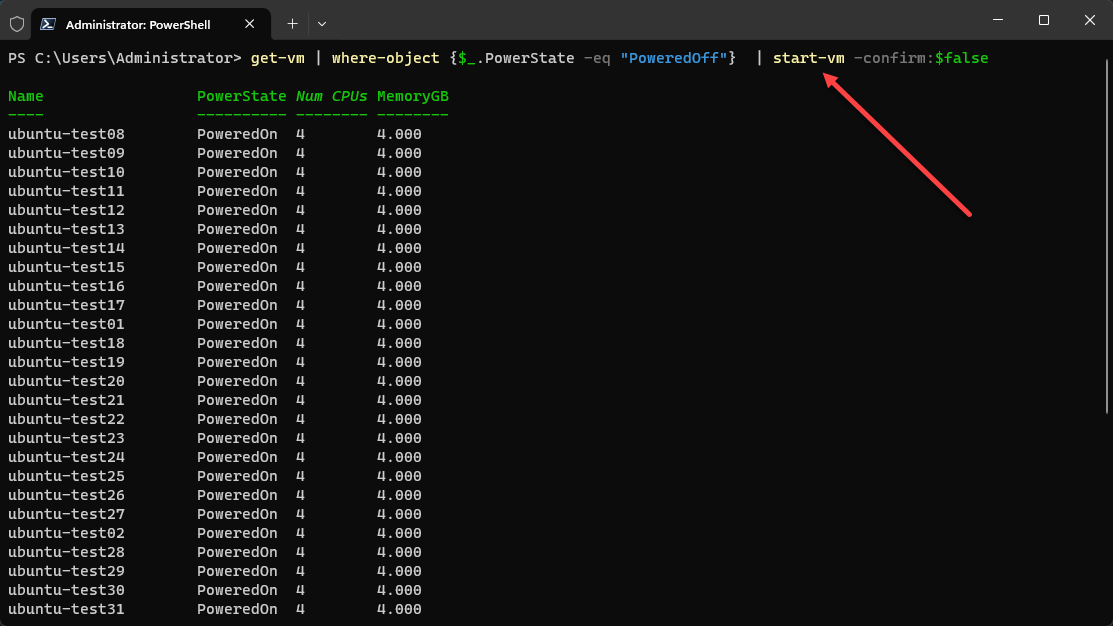

Next, powering them back on.

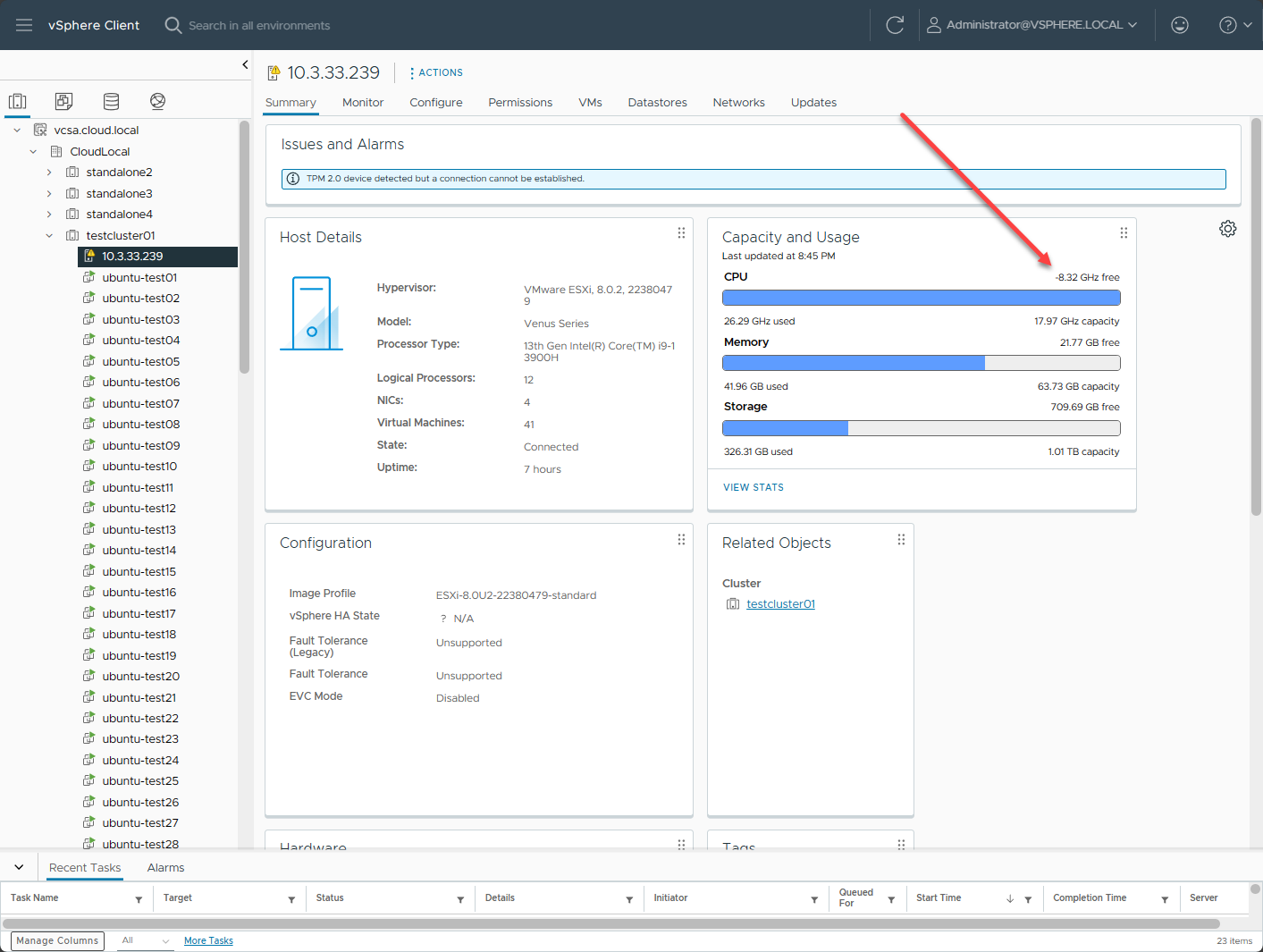

As expected, I was able to saturate the CPUs with this operation. Impressively, I didn’t really hear the MS-01 ramp up in noise during this time. In all fairness though, I have other older servers running that may have just overshadowed it.

Power usage shot up to 120 watts.

However, this only lasted for around 10 seconds. After that, it quickly ramped back down to 44 watts.

After enabling the E-Cores, the MS-01 at max still draws 120 watts. It seemed to get back to normal (40-45 watts) quickly after the VMs settled down.

This was when I caught the 120-watt draw with the power on operation. Below, I didn’t catch it with the E Cores enabled, where it was completely at 100%. But, this may have just been timing.
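To put these readings in perspective, here is a small Python sketch of the energy arithmetic. The 44 watt settled draw, the 120 watt peak, and the roughly 10 second spike come from the tests above. The electricity price is a hypothetical placeholder and the function names are made up for the example, so treat this as a rough estimate rather than a measurement.

```python
# Readings from the review.
SETTLED_WATTS = 44.0   # draw after the VMs settled down
PEAK_WATTS = 120.0     # draw during the mass power-on
PEAK_SECONDS = 10.0    # approximate length of the spike

# HYPOTHETICAL electricity price per kWh; substitute your own rate.
PRICE_PER_KWH = 0.15


def annual_kwh(watts: float, hours_per_day: float = 24.0, days: int = 365) -> float:
    """Energy used in a year at a constant draw, in kWh."""
    return watts * hours_per_day * days / 1000.0


def spike_extra_wh(peak_watts: float, base_watts: float, seconds: float) -> float:
    """Extra energy a short spike adds on top of the base draw, in Wh."""
    return (peak_watts - base_watts) * seconds / 3600.0


yearly = annual_kwh(SETTLED_WATTS)
spike = spike_extra_wh(PEAK_WATTS, SETTLED_WATTS, PEAK_SECONDS)

print(f"Settled draw over a year: {yearly:.1f} kWh")
print(f"Cost at the assumed rate: {yearly * PRICE_PER_KWH:.2f}")
print(f"Extra energy from one spike: {spike:.2f} Wh")
```

Running 24x7 at the settled draw comes to about 385 kWh per year. The short 120 watt spike adds only a fraction of a watt-hour, so for a home lab budget it is the settled draw that matters.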

Video review

Is this the best home server in early 2024?

Out of the units that I have seen that can be purchased at this time, yes, I think this is the best mini PC you can buy if you want to have a home lab server. The cost is right, the features are excellent, and the hardware and expansion capabilities you get with this unit are simply not found in any other model of mini PC that I have seen.

Is this mini PC perfect? No. Here is my laundry list of what could potentially be awesome improvements to the MS-01:

- A uniform Xeon processor option for a true “workstation” class machine (this would be killer). Maybe even a Ryzen counterpart would be interesting as well since these are uniform procs.

- DIMM slots instead of SODIMMs. It seems there is real estate inside and on the board where this could be possible in this form factor. It would allow for a much higher maximum memory capacity. ECC memory capabilities would be excellent, in line with the Xeon processor option above.

- Ability to take the full height U.2 drives like the Optane drives I have

- No easy-to-bump physical switch for switching between U.2 and M.2

- More tool-less design inside the MS-01, such as taking the fans out, etc.

However, all of this considered, I applaud Minisforum for making what I think is the best mini PC for a server option that you can buy and would be my first choice right now. It would also be a great mini workstation running a GeForce or AMD add-in graphics card (GPUs).

Wrapping up

Wrapping up this Minisforum MS-01 mini PC review, I think this is a great little PC, and it will make a fantastic server running enterprise hypervisors. Hopefully, this information will help you in your decision if you think this might be a good option for you. Things I love are the 10 gig network adapters, 2.5 gig network adapters, (3) NVMe slots, DDR5, and PCIe 4.0. The Core i9-13900H is a very powerful CPU. However, it presents challenges, as all hybrid CPUs do, with modern hypervisors like VMware ESXi. Let me know in the comments what you think of this mini PC. Also, check out the forums here, where we have a thread on the MS-01 going as well. Chime in if you want to put in your two cents worth there.


The problem is not the e cores, the problem is VMware not knowing what to do with them.

Yolo,

I agree with you. VMware hasn’t been designed to work with them. I am doubtful at least in the near future that enterprise CPUs will have these types of hybrid core configurations and VMware may never fully support them. Proxmox with the 6.x kernel from what I understand is able to schedule them but you still don’t really have any control over which cores it picks for which VMs. I am hoping this capability will be built in, maybe in a future release of Linux virtualization.

Dear Brandon

Thank you for your great review.

I’m also waiting on the same box, and I bought it for exactly the same reasons as you: basically, to decommission the power-hungry ESXi server that I have at home now.

I have a question, because it’s not clear in your review. If you don’t disable the E or P cores in the machine, what really happens with the VMs? Do they run fine or do they crash? I don’t care which cores ESXi gives to each VM; I’m just trying to understand what is happening.

Thx

Chris

Hey Chris! Thank you for the comment. Glad you like the review on the MS-01. So far it has been a great little machine. Good question on the VMs. Actually nothing bad happens when you enable the E cores. The VMs are stable and run as expected. Same goes for disabling the E cores. As long as you run the VMware kernel parameters and make those persistent you should be fine. Let me know if this helps. Thanks again.

Brandon

I’m guessing your test VMs only had one vCPU each? From what I’ve read, there are scheduling conflicts that can lead to crashes when you have a multi-core guest that jumps between (eg) two P-cores and a P-core and an E-core. William Lam has a blog post on potential workarounds with CPU Affinity.

Squozen,

Thank you for your comment! No crashes that I have seen when not managing the cores manually. However, I need to do more extensive testing.

Brandon

Secure Boot. You just enter the BIOS before you try to boot any OS. Boot up and go directly to the BIOS. You will see it’s editable.

Great review Brandon.

Question on the primary NVMe slot and the specs. They state 2TB max on the website. Can a larger M.2 drive capacity fit with double sided memory chips or will it only support single sided M.2 drives up to 2TB max only?

AF, thank you for the comment. Good question. I would test this to confirm or deny but I don’t have anything larger than a 2 TB drive in the lab 🙂 Will keep you posted though if I get my hands on one.

Brandon

Brandon, howdy!

So, I’ve been thinking, and I would appreciate your input.

For the summer, what if I spent a grand, or a bit more, on an MS-01 with a U.2 of some size, maybe an M.2 as well, and 96GB of DDR5.

I am wondering if I can get myself down from three big NASes and a PowerEdge R740 running all the time to just the little PC. For the summer.

My reasoning is as follows. Electricity has gotten more expensive. The rack puts out a lot of heat. I need to run the AC anyway in the summer, but it does work longer and harder with all the equipment in the room.

I doubt it, but could the machine pay for itself in two or three summers?

This would be an ESXi 8.0U2 host, connected to a BliKVM for management. (I have both PiKVM and BliKVM finally working here, the PiKVM fronting an 8-port HDMI KVM. That’s a whole story.) I plan to disable the processor’s E cores in the BIOS.

I’d be replacing the two Scalable Xeon 2nd gen Silver processors in the R740 with a single 13th gen Core i9. Load is about 30 VMs. Mostly Windows and VMware’s Photon appliance VMs.

I would be losing my 25Gb connections between PowerEdge and NAS, but… I will do dual 10G and “suffer” the performance hit for a few months.

Some stuff would break shutting down two NASes. Veeam and some other stuff, I am sure. This would be a major project.

But then… new hardware. Hmmmm

Your thoughts, sir?

Jeff,

I think this is definitely a worthy goal and something I am planning on tackling myself. I have one MS-01 currently, but would like to add 2 more to have 3 nodes and collapse down my old Supermicro servers that are sucking quite a bit of electricity. I think the MS-01 would house the 30 VMs fine. One thing to note is that the U.2 drive needs to be one of the slim drives. I was disappointed I couldn’t use my Optane drives in there, but it has the 3 NVMe slots as well. Also, I haven’t personally tested, but I believe the PCI-e slot can house some 25 Gb cards. I think Servethehome did some testing with a few 25 Gb cards. Just a thought on that front. There may be model numbers listed there to play around with. I do know there are some weird things with bifurcation that I ran into when plugging in an add-on card, and it cut my memory in half on the MS-01. But, there was a Minisforum employee who posted on the forums here on the issue with bifurcation, and there is an easy fix (albeit a little unorthodox) of placing tape over a few pins. You can read that thread here: https://www.virtualizationhowto.com/community/mini-pcs/does-the-minisforum-ms-01-support-bifurcation-with-the-pci-e-slot.

Even if the machine can’t pay for itself in 2 or 3 summers, I think you will have fun in the process, have less noise, and less heat, along with lower power draw 😉

Brandon

Brandon, thanks for the reply.

I went ahead and ordered the MS-01 a week ago. It hasn’t shipped yet. Argh!

I have acquired, during a period of extreme haziness, both an Nvidia T600 (low profile, thin, active heatsink, 40 watt) video card to pass through to a Plex server AND a Mellanox ConnectX-4 25G card. Oops! Two cards, one slot.

I am going to try the video card and hope that works. If not, I might put the 25G card in while I look for another GPU. I purchased only one 25G transceiver for the Mellanox card, and two 10G transceivers for the MS-01.

The last time I bought one of these Mellanox cards, it was nearly $400. This latest one was $35. Jeez.

Two Samsung 2TB M.2 SSDs for VM storage are waiting here. They’ll go in the two fastest slots in the machine. Another 512GB M.2 will house ESXi from the slowest slot.

96GB RAM is on the way also.

As you said, it’ll be a fun project.

Jeff,

That is great! I will be looking forward to your experience and feedback on the MS-01. Will be great to see how you get along with the Mellanox card and 25G. This is something I would love to test myself. Please do keep us posted!

Brandon

Quick reply: Experience and feedback.

The machine arrived this morning. Impressive fit and finish. Heavier than I thought it would be and not plasticky at all.

The Nvidia T600 GPU that I bought for it doesn’t work. The machine doesn’t POST with the card installed. Even after a 10 minute wait. Yah, the machine’s first boot took four or five minutes to kick off after power on.

ESXi 8.0 U2 is installed. The installation took maybe two minutes? Less.

I have two 10G drops to the network using 10GTek transceivers, which is my preferred brand for hosts and my big Agg switch (though they don’t seem to be MikroTik friendly).

ESXi can see but won’t communicate with the TPM in the MS-01. This is the same thing I’ve seen in the GMKtec NUCbox machines. Something with the protocol used with the chip, which is not the protocol used by ESXi. I am going to disable the TPM so I don’t have a banner in vCenter and a yellow mark on the server’s icon.

That’s it for now. I’ll continue to look for a GPU that fits in the machine.

Question for you, Brandon: I thought the integrated GPU on the Core i9 would be in use by ESXi and I would need something else to pass through to a VM, but ESXi is listing the iGPU as a PCI device that can be passed through. Do you know if this is something that will work? What does ESXi do if I attach a monitor/KVM to the machine to troubleshoot and the iGPU is in use in a VM?

Jeff,

It is great to know your feedback on the Nvidia T600 GPU. That is a bummer that it didn’t work. I think that is one of the downsides of this unit. I am not sure if this is in play…check out the forum thread here: https://www.virtualizationhowto.com/community/mini-pcs/does-the-minisforum-ms-01-support-bifurcation-with-the-pci-e-slot/, where a Minisforum employee mentioned a peculiarity of the pins on the slot. I am not sure this would be a workaround, but might be worth trying out. To be honest, I haven’t had very good success either in ESXi or Proxmox passing through an APU to a virtual machine. Even though it is “sort of” discrete, I haven’t been able to get them to work. But there may be a trick I am not trying there and I need to spend more time with it to be honest.

The TPM issue is one that I have seen as well across every single mini PC I have been sent or purchased myself. They use the protocol format that ESXi doesn’t like. I am not sure why the difference, but there seems to be a different spec used in this consumer grade hardware. I know William Lam has written about this a few times as well.

Keep us updated on your findings Jeff with the MS-01…it is great to have you putting it through the paces in a home lab scenario 🙂

if there was an option with AMD Ryzen Pro it would be a right choice for me. Losing 8 E-Cores in a virtualized environment would be a big loss.

Yes, i am very much looking forward to seeing if Minisforum puts out a Ryzen model. I think that would be fantastic. Also, if you enter the boot parameters in ESXi, it actually allows you to leave on the E cores.

Brandon

Will this drive fit in the U.2 slot?

Product Specifications: Data Center SSD D7 Series, D7-P5520

- Capacity: 15.36 TB

- Form Factor: U.2 15mm

- Interface: PCIe 4.0 x4, NVMe

- Weight: 165g +/- 5g

- Lithography Type: 144L TLC 3D NAND

- Use Conditions: Server/Enterprise

I’ve heard that U.3 SSDs can fit and function in U.2 hosts. The Minisforum MS-01 has a U.2 NVMe PCIe Gen 4 slot, and I’m curious if the WD Ultrastar DC SN650, U.3, 15MM, 15,360GB, 2.5-inch, PCIe Gen 4 would be a suitable option. I noticed that this SSD is cheaper than a U.2 SSD from another brand with similar specifications, aside from the U.2 interface and the read/write speeds.

The manufacturer’s page clearly states that the second configuration example can support a total of 24TB.

The Minisforum website specifically mentions this, as quoted below:

quoting first configuration example:

“It is possible to expand with up to three M.2 NVMe SSDs, with each slot supporting a maximum capacity of 2TB. The leftmost slot is PCIe 4.0 compatible, with a maximum transfer speed of 7000MB/s. It supports both RAID0 for increased capacity and RAID1 for enhanced data safety.”

First slot- 1 x 2TB M.2 22110 NVMe SSD (PCIe Gen 4 x4 slot) = 2TB

Second slot- 1 x 2TB M.2 22110 NVMe SSD (PCIe 3.0 x4 slot) = 2TB

Third slot – 1 x 2TB M.2 22110 NVMe SSD (PCIe 3.0 x2 slot) = 2TB

quoting second configuration example:

“It also supports enterprise-class storage, allowing for expansion up to a total maximum of 24TB with a configuration of one U.2 NVMe SSD and two 22110 M.2 NVMe SSDs. This meets the demands of professionals who require high-capacity storage.”

First slot- 1 x 20TB U.2 NVMe SSD (PCIe Gen 4 x4 slot) = 20TB

Second slot- 1 x 2TB M.2 22110 NVMe SSD (PCIe 3.0 x4 slot) = 2TB

Third slot – 1 x 2TB M.2 22110 NVMe SSD (PCIe 3.0 x2 slot) = 2TB

The manufacturer chose to use M.2 2280 for the first and second configuration examples instead of M.2 22110. However, I believe that M.2 22110 offers better performance and is more suitable for enterprise environments.