Slow Veeam Restores after vCenter Server 7 upgrade

With many customers scrambling to upgrade their now-unsupported vSphere 6.5 and 6.7 environments to vSphere 7, many vCenter Servers are being upgraded to VCSA 7. I want to detail an issue I have seen with Veeam v11, slow Veeam restores after a vCenter Server 7 upgrade, to give visibility to this potential issue and a workaround.

The issue after upgrading to vCenter Server 7

The issue is that after upgrading to vCenter Server 7, customers see some combination of slow restores, replication, and even backups when using Network mode (Network Block Device, or NBD). HotAdd and direct-attached storage do not exhibit the behavior.

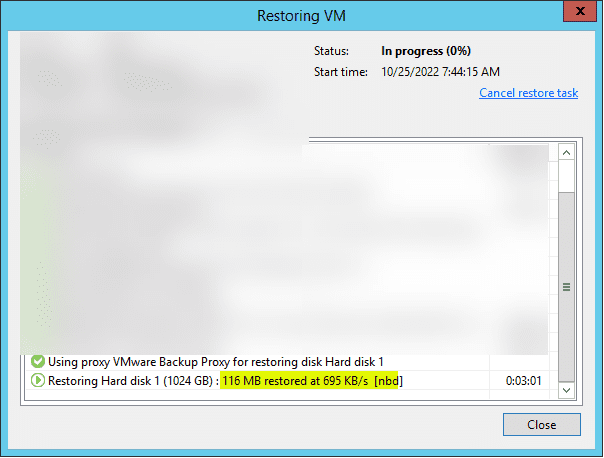

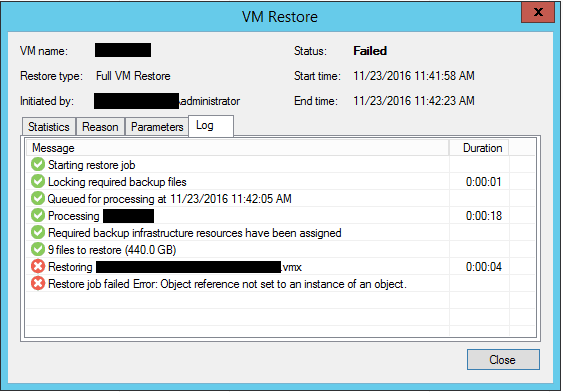

Below is the behavior that I have seen personally. In an environment with no previous issues related to backup, replication, or restore performance over Network mode, a restore job started after the upgrade to vCenter Server 7 would run painfully slowly. Compared to the previous average of 200-300 MBps, the environment saw around 600-800 KBps. Yes, kilobytes per second.
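To put those numbers in perspective, here is a rough back-of-the-envelope calculation. The 100 GB VM size is a hypothetical figure picked for illustration, the throughput values are simply the midpoints of the ranges above, and I am assuming MBps and KBps mean decimal megabytes and kilobytes per second.

```python
# Rough restore-time estimate. The 100 GB VM size is a hypothetical example;
# the throughput figures are midpoints of the ranges quoted above.

def restore_seconds(size_bytes: float, bytes_per_second: float) -> float:
    """Time to move size_bytes at a constant rate, ignoring all overhead."""
    return size_bytes / bytes_per_second

VM_SIZE = 100e9   # 100 GB (hypothetical VM size)
BEFORE = 250e6    # ~250 MB/s, midpoint of 200-300 MBps
AFTER = 700e3     # ~700 KB/s, midpoint of 600-800 KBps

before_s = restore_seconds(VM_SIZE, BEFORE)
after_s = restore_seconds(VM_SIZE, AFTER)
slowdown = BEFORE / AFTER

print(f"before: {before_s / 60:.1f} minutes")
print(f"after:  {after_s / 3600:.1f} hours")
print(f"slowdown: about {slowdown:.0f}x")
```

Under those assumptions, a restore that used to take under seven minutes stretches to well over a day and a half, a slowdown of a few hundred times.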

Below is a screenshot of the behavior:

Relevant forum post and KBs

As it turns out, customers are seeing similar issues. A quick Google of the issue will most likely land you on the following forum post:

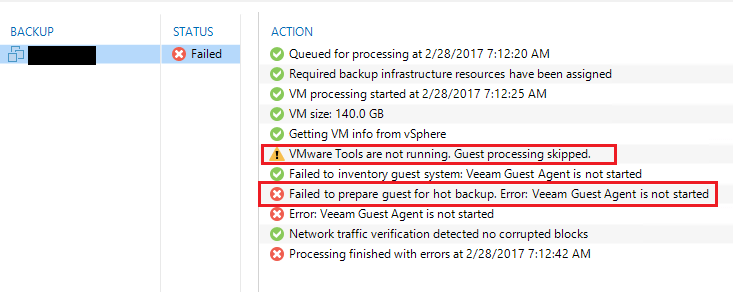

Also, Veeam will guide you to the following topic from VMware, where there is a known issue with the VDDK, and VMware Engineering has identified this with the following:

Connecting the dots

One problem I see with this issue is that, after you open a support ticket with Veeam, the only workaround proposed is to transition to HotAdd in the Veeam environment. However, this has several consequences that most will consider undesirable.

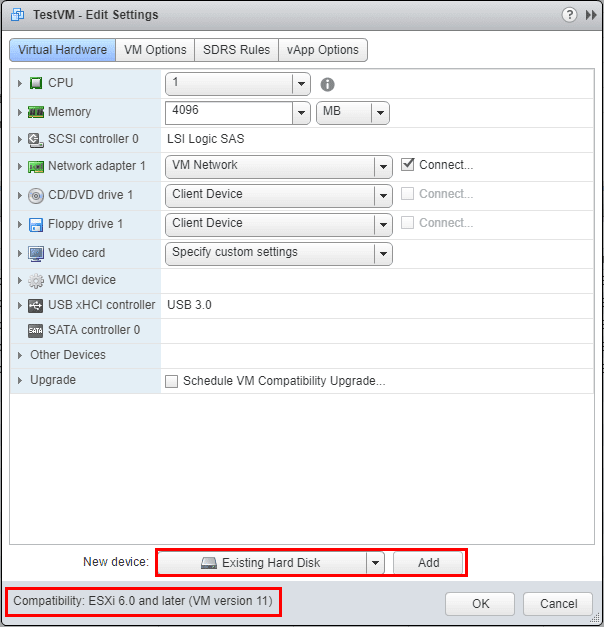

HotAdd requires the proxy VM to meet several requirements. Per the documentation listed here, note the following:

- The role of a backup proxy must be assigned to a VM.

- The backup proxy and processed VMs must reside in the same data center.

- The backup proxy must have access to the disks of the VM that this proxy processes. For example, in a replication job, the source backup proxy must have access to the disks of the source VM, and the target proxy must have access to the disks of the replica. If a backup proxy acts as both source and target proxy, it must have access to the disks of the source VM and replica. In restore operations, the backup proxy must have access to the disks of the restored VMs.

- [For NFS 3.0] If you plan to process VMs that store disks on the NFS datastore, you must configure Veeam Backup & Replication to use the proxy on the same host as VMs. This is required due to an issue described in this VMware KB article. For more information on configuring the proxy, see this Veeam KB article.

Alternatively, you can use ESXi 6.0 or higher and NFS 4.1.

- The backup proxy must have the latest version of VMware Tools installed. Note that the backup server installed on a VM can also perform the role of the backup proxy that uses Virtual appliance transport mode. In this case, ensure the backup server has the latest VMware Tools installed.

- SCSI 0:X controller must be present on a backup proxy. Otherwise, VM data processing in the Virtual appliance transport mode will fail.

The implication of using Virtual appliance (HotAdd) mode in a large environment with many datastores, separate clusters, and even standalone hosts is that you would need to stand up many additional proxy VMs to implement the workaround.
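To illustrate why the proxy count grows, here is a minimal sketch that models only two of the requirements listed above: the proxy and the VM must be in the same data center, and the proxy must be able to reach every datastore holding the VM's disks. The `Proxy` and `VM` classes, the inventory names, and the datastore labels are all hypothetical. Nothing here queries vCenter or Veeam.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Proxy:
    name: str
    datacenter: str
    datastores: frozenset  # datastores the proxy's host can reach

@dataclass(frozen=True)
class VM:
    name: str
    datacenter: str
    datastores: frozenset  # datastores holding the VM's disks

def hotadd_capable(proxy: Proxy, vm: VM) -> bool:
    """True if the proxy shares the VM's data center and can reach all its disks."""
    return proxy.datacenter == vm.datacenter and vm.datastores <= proxy.datastores

def uncovered_vms(proxies, vms):
    """Names of VMs that no existing proxy could process in HotAdd mode."""
    return [vm.name for vm in vms
            if not any(hotadd_capable(p, vm) for p in proxies)]

# Hypothetical inventory: one proxy, three VMs.
proxies = [Proxy("proxy01", "DC1", frozenset({"ds-a", "ds-b"}))]
vms = [
    VM("app01", "DC1", frozenset({"ds-a"})),    # reachable by proxy01
    VM("db01", "DC1", frozenset({"ds-c"})),     # datastore not reachable
    VM("edge01", "DC2", frozenset({"ds-x"})),   # different data center
]

print(uncovered_vms(proxies, vms))  # ['db01', 'edge01']
```

Every VM that shows up in the uncovered list needs another proxy VM placed where it can reach those disks, which is how a simple workaround turns into a sprawl of appliances.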

A better solution – Use Linux proxies

A key detail that is easy to miss in the VMware KB is found under the relevant information. It states:

VMware Engineering has identified the issue only happen for Windows proxy, we have improved the performance for Windows with VDDK 8.0 and VDDK 7.0.3 Patch2.

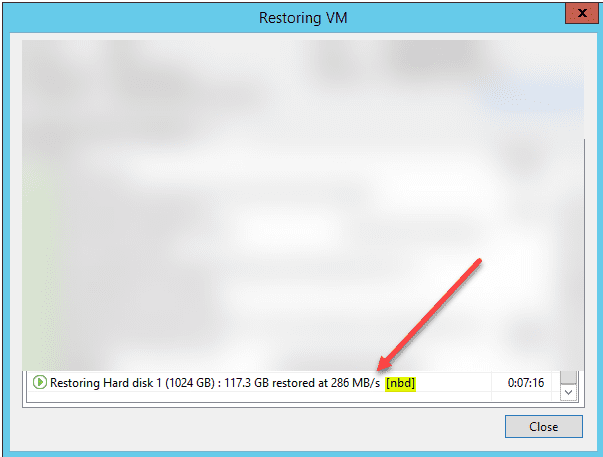

To test this in my environment, I stood up a Veeam proxy running on Ubuntu 20.04 and assigned the VM the VMware Backup proxy role. It works in HotAdd mode, as expected. More importantly, it resolves the issue with Network Block Device (NBD) restores and, I assume, any other NBD performance issues seen after the upgrade to vSphere 7.

Below is a restore of the same virtual machine, this time with the Linux proxy selected.

It is great that Veeam has added the ability to host the proxy role on Linux, as it certainly comes in handy with the slow performance seen in environments post-upgrade to vSphere 7. Unfortunately, as of this writing, it looks like there is no patch or workaround for Windows proxies beyond the VDDK improvements mentioned in the VMware KB.

So, customers running a “Windows-only” Veeam environment that is configured to use Network Block Device (NBD) for jobs will need to rethink their Veeam infrastructure to work around the issue as they transition to vSphere 7.
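The conditions described in this post can be condensed into a small check. This is only a sketch of the reasoning above, not a Veeam or VMware API: the function name and parameters are hypothetical, and the `vddk_has_fix` flag stands in for "the proxy uses a VDDK build that includes the Windows improvements the VMware KB mentions", which you would have to verify yourself.

```python
def likely_affected(proxy_os: str, transport_mode: str,
                    vddk_has_fix: bool = False) -> bool:
    """Rule of thumb from this post, for environments already on vCenter Server 7.

    A proxy is flagged only when it runs Windows, uses NBD (Network mode),
    and does not have a VDDK build with the improvements noted in the KB.
    """
    return (proxy_os.strip().lower() == "windows"
            and transport_mode.strip().lower() == "nbd"
            and not vddk_has_fix)

print(likely_affected("Windows", "NBD"))      # True
print(likely_affected("Linux", "NBD"))        # False
print(likely_affected("Windows", "HotAdd"))   # False
```

Treat the result as a hint about where to look first, not a diagnosis. Slow restores can have plenty of other causes.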

Slow Veeam Restores after vCenter Server 7 upgrade FAQs

When will you see slow restores with Veeam and vSphere 7? Many customers see slow performance when using the Network Block Device (NBD) transport mode. It does not affect customers using direct-attached storage or HotAdd.

Do you have to use HotAdd as a workaround? No. As shown above, HotAdd is a workaround, but it is not the only one. The better solution in environments configured with NBD for backups, restores, and replication is to transition to a Linux-based VMware Backup Proxy. VMware has noted that the slow performance is only seen with Windows proxies.

Is using a Veeam Linux proxy supported? Yes, it is supported, and it is a great way to work around the issue where slow NBD performance is seen.

Wrapping Up

Hopefully, this quick post sheds more light on this issue and helps highlight the relevant details of the VMware KB article. The testing I performed in my environment aligns with VMware’s findings on Windows proxies and VDDK performance.

Should be “fixed” with VDDK 7.0.3 Patch2. I probably had the same issue with a customer using IBM Spectrum Protect for VE. Using this patch even brought back slightly better performance compared to 6.7.