NUMA Affinity VMware Fling

When it comes down to the nitty-gritty performance details of CPU and memory with a modern hypervisor like VMware vSphere, you have no doubt heard a lot about NUMA, or Non-Uniform Memory Access. NUMA matters most when you are working with large, “wide” VMs, typically large database servers that need a lot of memory. For these VMs, it is possible to set what is called NUMA node affinity. Let’s take a look at setting NUMA affinity in VMware and at NUMA Observer, a VMware Fling for NUMA affinity.

Why is NUMA important?

Generally speaking, you do not want a VM to cross a NUMA node boundary if you can avoid it, as this can hurt performance. Each CPU package has memory attached locally to it, and together they form a NUMA node. When you assign more memory to a VM than a single NUMA node physically contains, the VM has to use memory attached to another CPU package, and accessing that remote memory is slower than accessing local memory.
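To make the sizing question concrete, here is a minimal sketch in Python that estimates how many NUMA nodes a VM has to span. The function name and the host figures (24 cores and 384 GB per node) are made up for illustration, and the calculation is deliberately simplified: it ignores hypervisor overhead and whatever other VMs are already using on the node.

```python
import math

def numa_nodes_needed(vm_vcpus, vm_mem_gb, cores_per_node, mem_per_node_gb):
    """Estimate the minimum number of NUMA nodes a VM has to span.

    Simplified: ignores hypervisor overhead and memory used by other VMs.
    """
    nodes_for_cpu = math.ceil(vm_vcpus / cores_per_node)
    nodes_for_mem = math.ceil(vm_mem_gb / mem_per_node_gb)
    return max(nodes_for_cpu, nodes_for_mem, 1)

# Hypothetical host: 2 sockets, each with 24 cores and 384 GB of local memory.
CORES_PER_NODE = 24
MEM_PER_NODE_GB = 384

small_vm = numa_nodes_needed(16, 256, CORES_PER_NODE, MEM_PER_NODE_GB)
wide_vm = numa_nodes_needed(16, 512, CORES_PER_NODE, MEM_PER_NODE_GB)
print(small_vm)  # 1: fits inside a single NUMA node
print(wide_vm)   # 2: memory alone forces the VM across the node boundary
```

In the second case the vCPU count would fit on one socket, but the memory size alone pushes the VM across the boundary.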

A great resource for all things NUMA is Frank Denneman.

Understanding how modern CPUs and memory interact is key to getting the best performance from your virtual machines.

There is a configuration setting in VMware vSphere that lets you specify the NUMA node affinity for a virtual machine. It constrains the set of NUMA nodes on which VMware ESXi can schedule the virtual machine’s virtual CPUs and memory. Why is this needed?

Large VMs, sometimes called “wide” or “monster” VMs, are typically used for database applications such as SAP HANA or SQL Server. These VMs often have large resource footprints and strict performance requirements, particularly around latency.

For these types of VMs, setting core and NUMA node affinities is often recommended to help ensure low latency and, by extension, better performance.

Challenges of NUMA affinity

While there may be good reasons to set NUMA affinity on certain VMs, it is important to understand this can cause challenges when it comes to maintenance and HA events. When you constrain NUMA node affinities, you might interfere with the ability of the ESXi NUMA scheduler to rebalance virtual machines across NUMA nodes for fairness. Specify NUMA node affinity only after you consider the rebalancing issues.

In environments with multiple monster VMs, an HA event may restart VMs on other hosts with spare capacity, and those hosts may already be running virtual machines with affinity to the same cores or sockets. In that case, multiple VMs end up scheduled on the same set of logical cores.

Overlapping affinities can lead to performance degradation as you run into CPU contention and non-local memory allocation.
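The overlap problem is easy to picture as a set intersection. The sketch below is purely conceptual: the VM names, core numbers, and the `find_core_overlaps` helper are hypothetical, and in a real environment the affinity data would have to come from the host or vCenter inventory.

```python
from itertools import combinations

def find_core_overlaps(vm_cores):
    """Map each pair of VMs to the logical cores both are pinned to."""
    overlaps = {}
    # Sort by VM name so pairs are reported in a stable order.
    for (vm_a, cores_a), (vm_b, cores_b) in combinations(sorted(vm_cores.items()), 2):
        shared = set(cores_a) & set(cores_b)
        if shared:
            overlaps[(vm_a, vm_b)] = sorted(shared)
    return overlaps

# Hypothetical state of one host after an HA restart.
affinities = {
    "hana01": {0, 1, 2, 3},
    "sql01": {2, 3, 4, 5},
    "web01": {8, 9},
}

overlaps = find_core_overlaps(affinities)
print(overlaps)  # {('hana01', 'sql01'): [2, 3]}
```

Here the two database VMs would compete for logical cores 2 and 3, while the third VM is unaffected.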

How to configure NUMA Affinity VMware

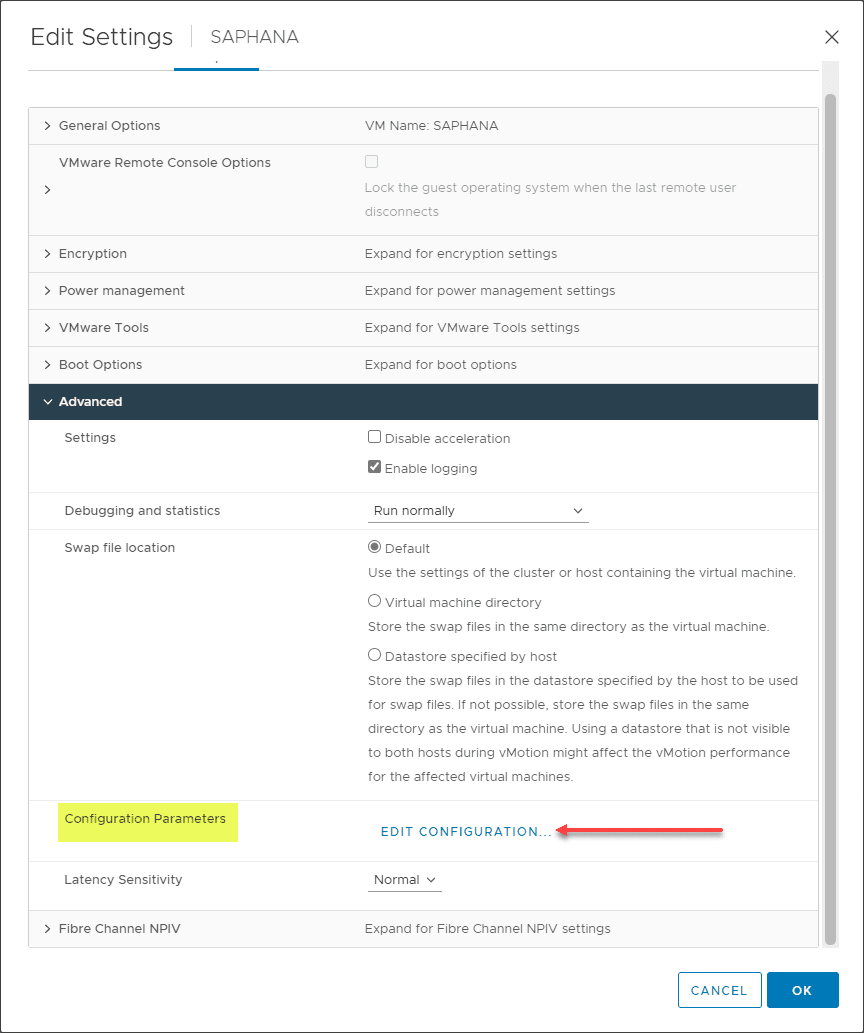

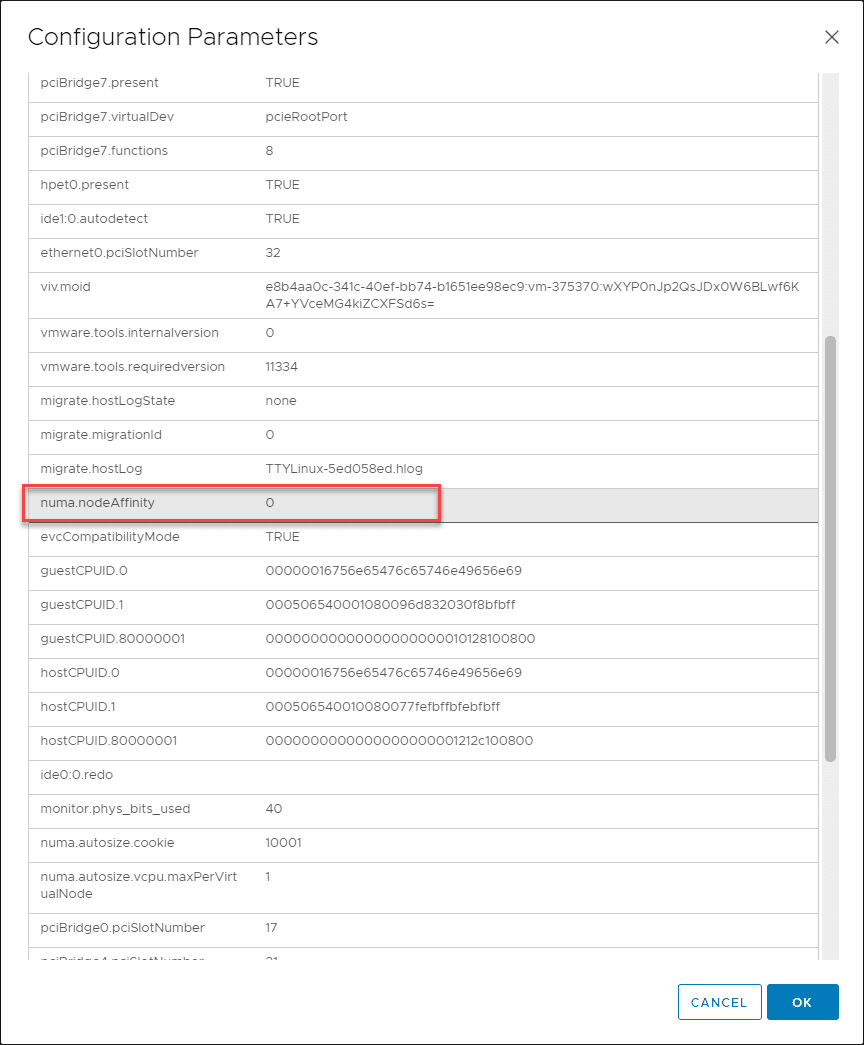

How is NUMA affinity configured? In the vSphere Client, you can assign the NUMA node for the virtual machine or for a specific Virtual NUMA node. These settings are configured in the advanced configuration settings for the virtual machine.

Per the VMware KB article, Associate Virtual Machines with Specified NUMA Nodes, you can use the configurations below:

- To specify NUMA node for the virtual machine, in the Name column, enter numa.nodeAffinity.

- To specify NUMA node for a specific Virtual NUMA node on the virtual machine, in the Name column, enter sched.nodeX.affinity, where X is the Virtual NUMA node number. For example, sched.node0.affinity specifies Virtual NUMA node 0 on the virtual machine.
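As a rough illustration of the two option names above, the following Python sketch builds the name/value pairs you would enter. The `numa_affinity_settings` helper is hypothetical, and the value format (a comma-separated list of NUMA node numbers such as `0,1`) is an assumption that is not stated in this post, so check it against the VMware documentation before using it.

```python
def numa_affinity_settings(vm_nodes=None, vnuma_nodes=None):
    """Build advanced-setting name/value pairs for NUMA node affinity.

    vm_nodes:    NUMA nodes for the whole VM, e.g. [0, 1]
    vnuma_nodes: mapping of Virtual NUMA node number -> NUMA nodes
    Assumption: values are comma-separated node numbers.
    """
    def as_value(nodes):
        return ",".join(str(n) for n in sorted(set(nodes)))

    settings = {}
    if vm_nodes:
        settings["numa.nodeAffinity"] = as_value(vm_nodes)
    for vnode, nodes in sorted((vnuma_nodes or {}).items()):
        settings[f"sched.node{vnode}.affinity"] = as_value(nodes)
    return settings

whole_vm = numa_affinity_settings(vm_nodes=[1, 0])
per_vnode = numa_affinity_settings(vnuma_nodes={0: [0], 1: [1]})
print(whole_vm)   # {'numa.nodeAffinity': '0,1'}
print(per_vnode)  # {'sched.node0.affinity': '0', 'sched.node1.affinity': '1'}
```

Each key goes in the Name column and each value in the Value column of the virtual machine's advanced configuration parameters.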

NUMA Observer VMware Fling

To get visibility into virtual machines that may have conflicting NUMA affinities, especially with an HA or maintenance event in mind, there is a really cool VMware Fling called NUMA Observer, which you can find on the VMware Flings site.

Download NUMA Observer here:

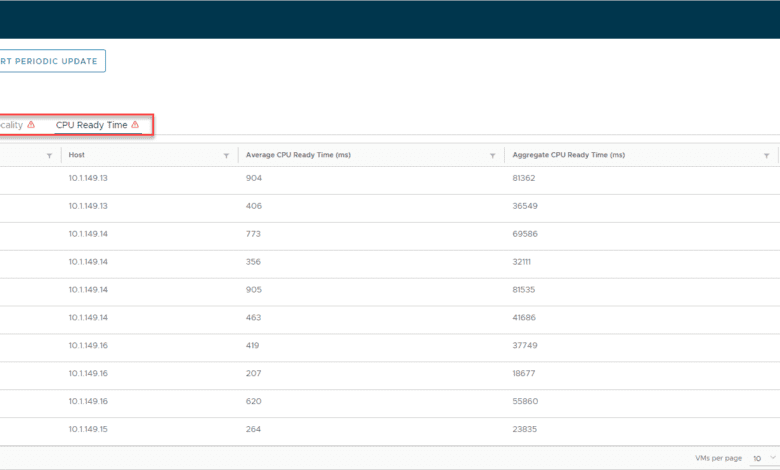

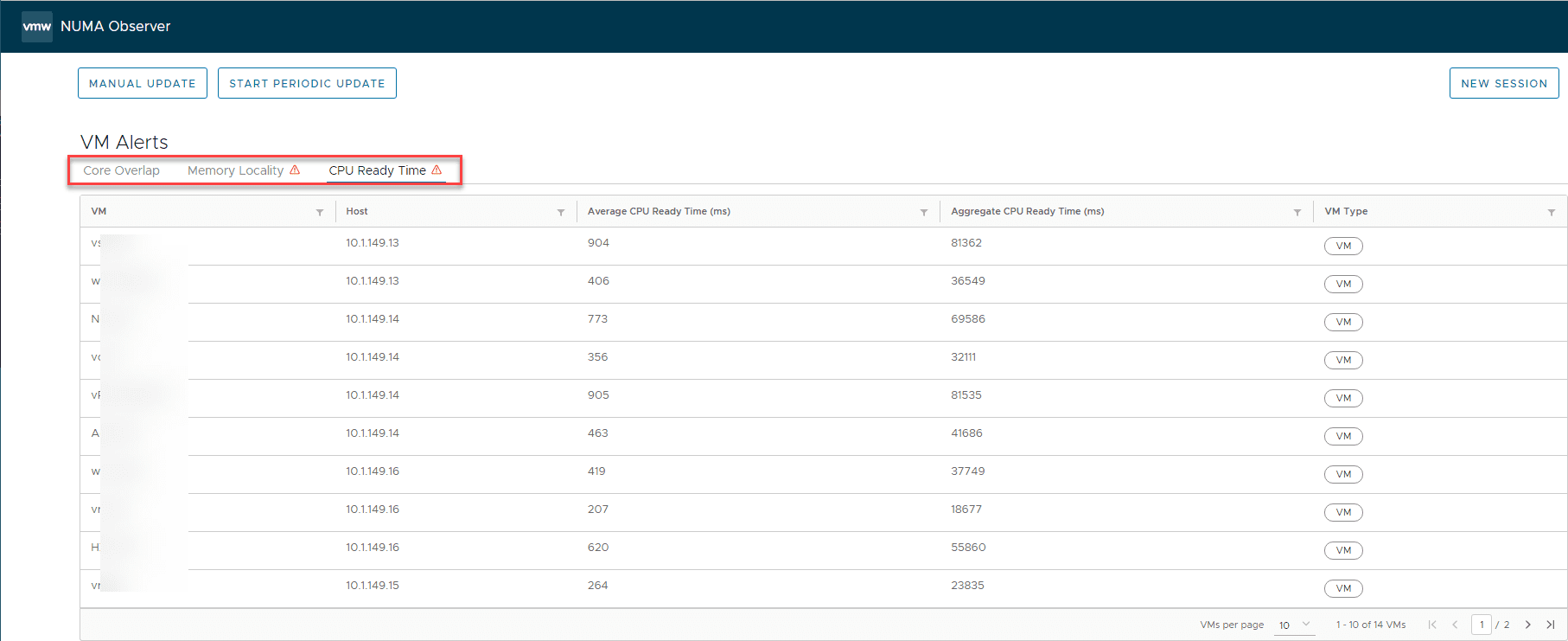

NUMA Observer scans your VM inventory, identifies virtual machines configured with overlapping core and NUMA affinities, and generates alerts for them. It also collects statistics on remote memory usage and CPU starvation of critical VMs and alerts on these conditions as well.

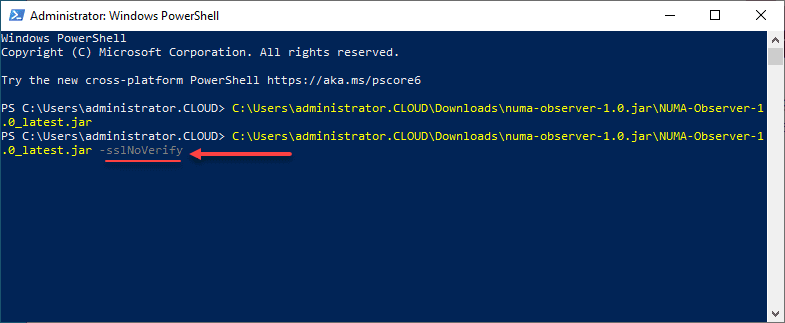

The NUMA Observer Fling is easy to get up and running. There is no OVA to deploy. It simply uses a locally installed Java runtime to create a local web listener that you connect to on port 8443. To install, download the NUMA Observer .zip file containing the .jar file, unzip it, and run the .jar file.

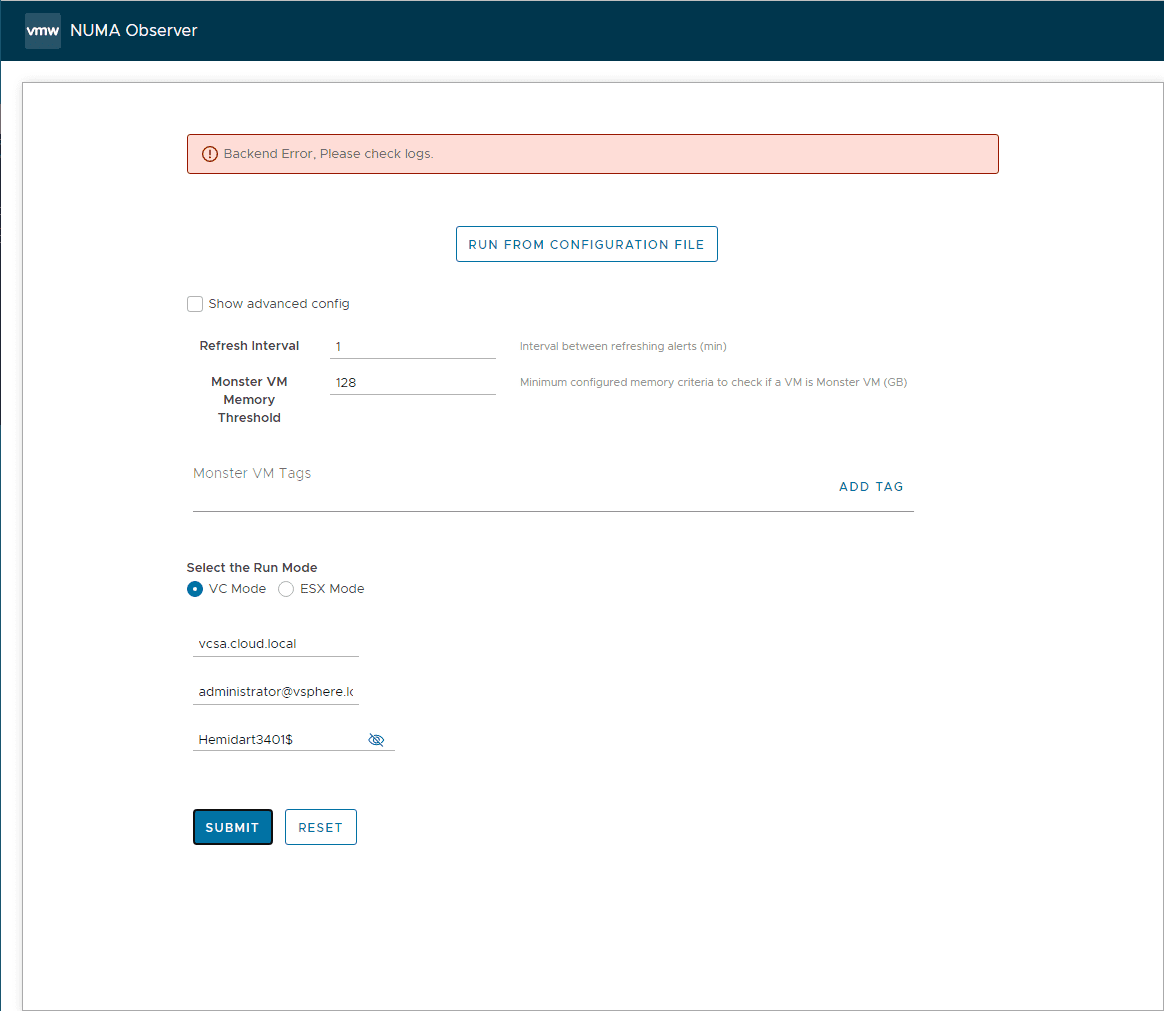

One note worth mentioning, which thankfully was answered in the bug listing for the program: when I first ran the .jar file and attempted to connect to my vCenter Server, I received the error "Backend Error, Please check logs", even though I knew I had typed the password correctly.

This turned out to be caused by the self-signed certificate on the vCenter Server. There is a -noSSLVerify option you need to add when launching the .jar file.
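For reference, a launch command with that option might be assembled like the sketch below. The jar file name is a placeholder rather than the real file name from the download, and passing `-noSSLVerify` after the jar path as a program argument is an assumption, so adjust it to match the Fling's own instructions.

```python
def build_launch_command(jar_path, skip_ssl_verify=False):
    """Build the command line used to start the NUMA Observer jar."""
    command = ["java", "-jar", jar_path]
    if skip_ssl_verify:
        # Assumption: the option is passed as an argument after the jar path.
        command.append("-noSSLVerify")
    return command

# "numa-observer.jar" is a placeholder; use the file name from the unzipped download.
command = build_launch_command("numa-observer.jar", skip_ssl_verify=True)
print(" ".join(command))  # java -jar numa-observer.jar -noSSLVerify
```

The resulting list can be handed to `subprocess.run(command)` or typed directly into a terminal.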

After launching the program, you will see a nice interface with helpful information about your vSphere environment right from the start. Note the columns Core Overlap, Memory Locality, and CPU Ready Time.

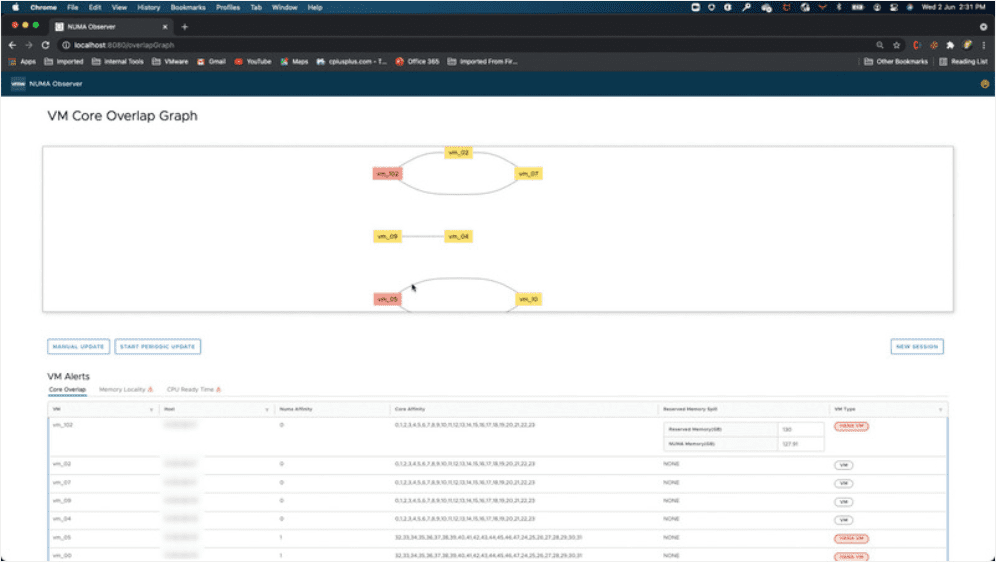

All of my lab hosts are single-processor machines, so I didn’t really have the hardware to fully test the app. However, below is one of the screenshots from the VMware Fling site for NUMA Observer. It is blurry, but it gives an idea of some of the information you will see.

Wrapping Up

NUMA affinity is an important setting that can be helpful in certain situations with specific types of virtual machines. However, it must be used with caution and with an understanding of the unexpected consequences it can have during an HA or maintenance operation. Hopefully, this look at setting NUMA affinity in VMware and at NUMA Observer, a VMware Fling for NUMA affinity, will help shed light on this setting and its potential impact across your vSphere environment.