VMware vSphere with Tanzu Nested Lab Networking Configuration

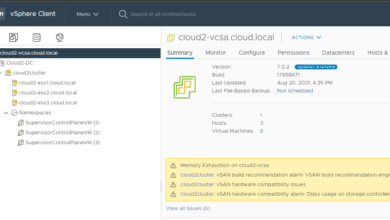

VMware vSphere with Tanzu has been a hot topic in my lab for the past couple of weeks, and getting it up and running has been a fun learning experience. One of the hurdles I hit initially was the networking. It took a lot of trial and error, as well as referencing blog posts by Cormac Hogan showing his configuration, to wrap my head around which values needed to be placed where. Frank Denneman also just published a great post, vSphere with Tanzu vCenter Server Network Configuration Overview, and I highly recommend it to get a feel for Tanzu networking in great detail.

I wanted to add just a bit to these two resources to help anyone who may be struggling to get the networking configured for a nested lab. Nested virtualization can be a bit of a mind-bender once nested networking, VLAN tagging, and so on come into play. Let’s take a look at VMware vSphere with Tanzu nested lab networking configuration to see how you can configure your vSphere with Tanzu lab for proper network communication.

vSphere with Tanzu Network Requirements

If, like me, you are using William Lam’s vSphere with Tanzu automation script to easily deploy the underlying infrastructure for vSphere with Tanzu, you may want to know how to set up the vSphere with Tanzu networks on both your physical ESXi host(s) and your nested ESXi hosts. Before we get into this, let’s see what networks are required.

To configure a vSphere with Tanzu lab, you will need two or three networks for connectivity. The networks you need to have configured are the following:

- Management – This is used for “management” traffic to communicate with the vCenter Server as well as your HAProxy appliance

- Workload – This network carries your workloads, which are your Kubernetes nodes

- Frontend – The VIP range for the K8s clusters

You can also use a single IP network for both the Workload and Frontend networks. If you want the names of your Workload and Frontend networks to line up but still use a single IP address space, you can create two virtual switches that point to the same IP network to make things simpler. This will be clearer with the pictures of my configuration below.

VMware vSphere with Tanzu Nested Lab Networking Configuration

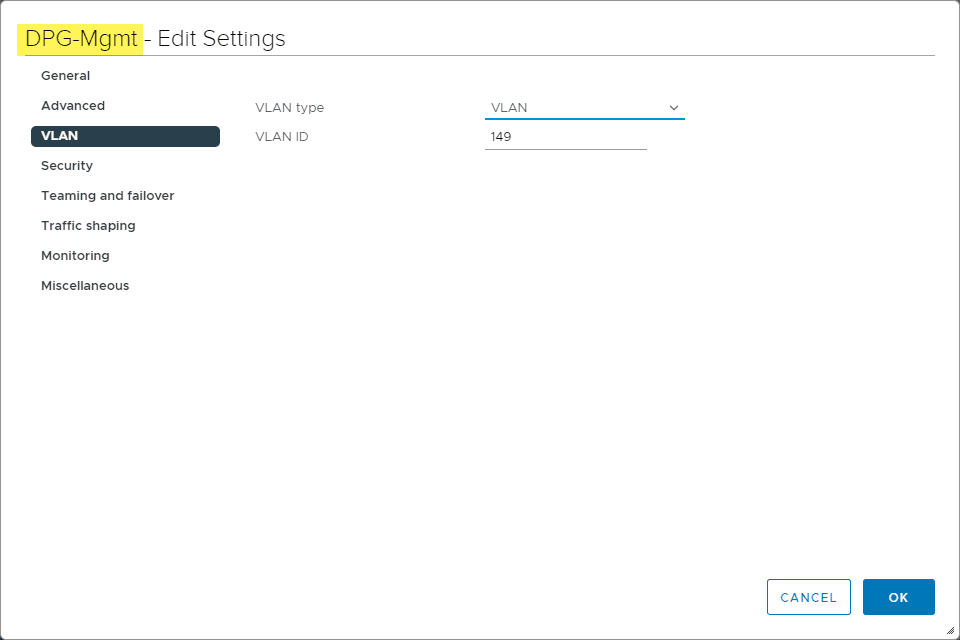

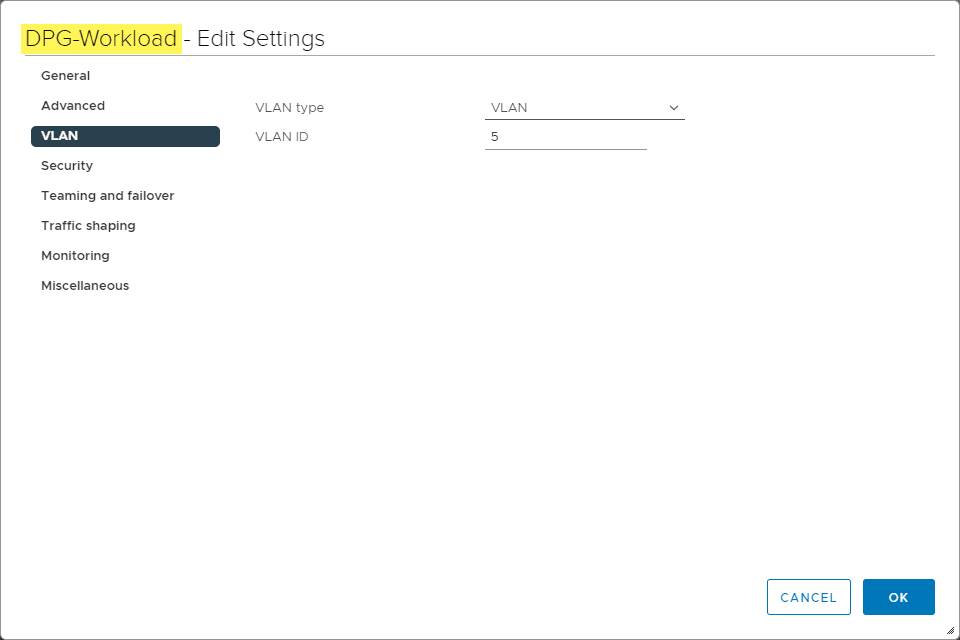

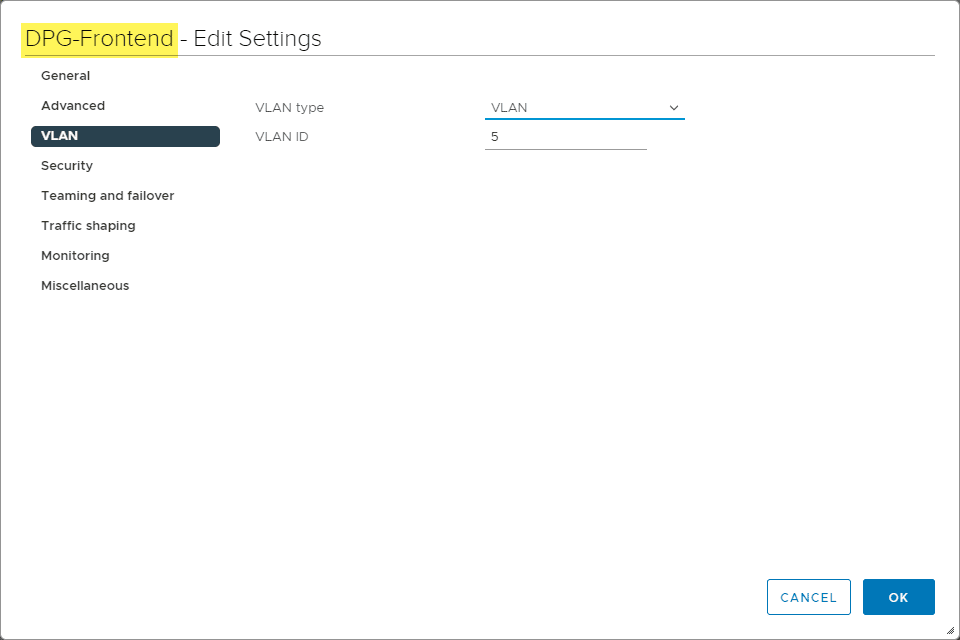

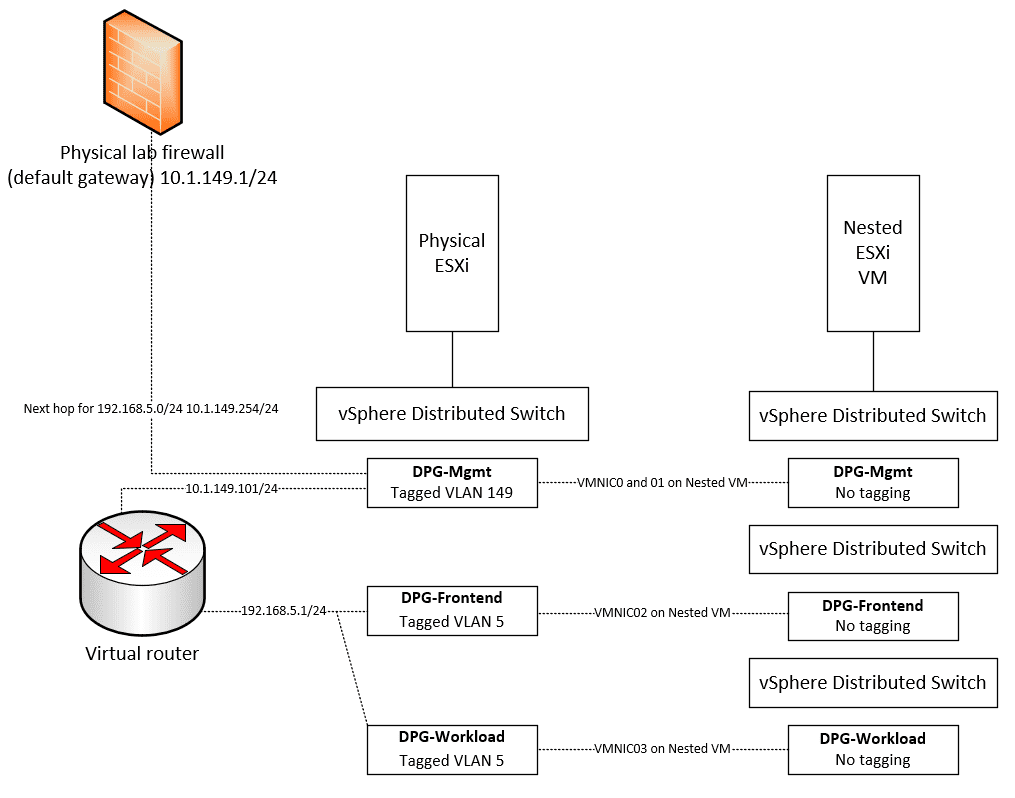

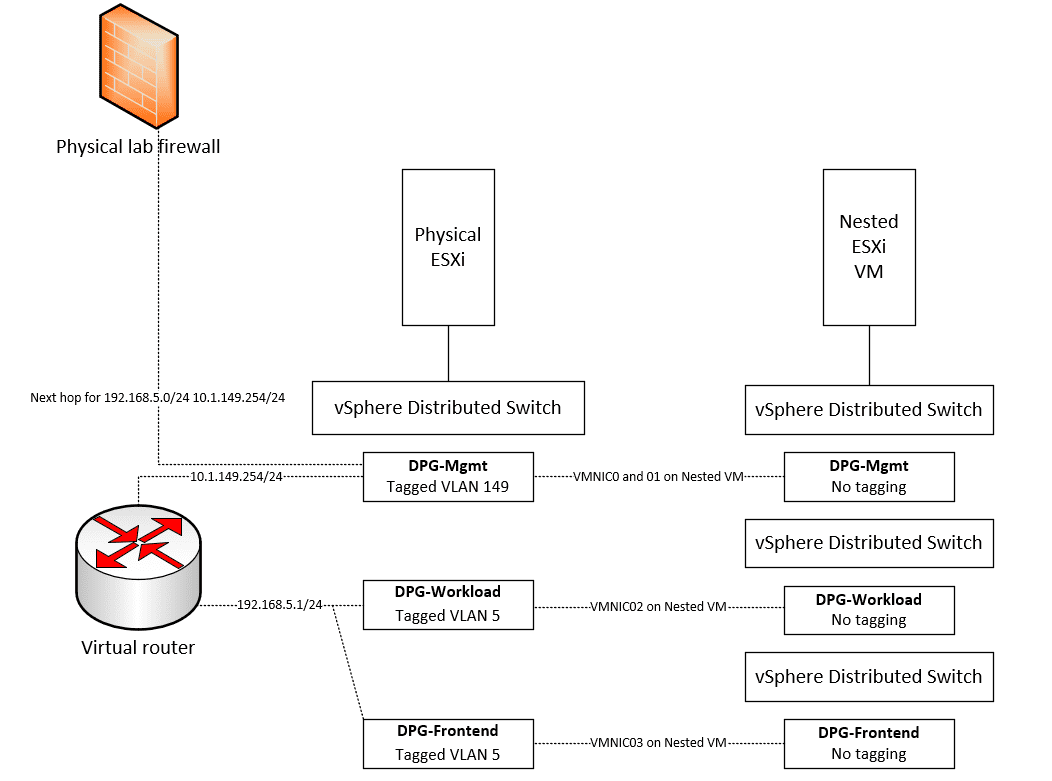

To set up my nested networking for the vSphere with Tanzu lab, I have three vSphere Distributed Switch port groups configured (DPG-Mgmt, DPG-Workload, and DPG-Frontend).

IP subnets for each network are as follows:

- DPG-Mgmt – 10.1.149.0/24

- DPG-Workload – 192.168.5.0/24

- DPG-Frontend – 192.168.5.0/24

As you can see, to make things even simpler, I am just using the same subnet for Workload and Frontend. At this point, the two distributed port groups are simply network labels referring to the same VLAN.
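The address plan above can be sanity-checked with Python's standard `ipaddress` module. This is only a minimal sketch: the three subnets come from the list above, while the VIP block and the node address range are hypothetical values I picked for illustration, and the check assumes that the Frontend VIPs and the Workload node addresses should not overlap when they share one subnet.

```python
import ipaddress

# Subnets taken from the port group list above.
SUBNETS = {
    "DPG-Mgmt": ipaddress.ip_network("10.1.149.0/24"),
    "DPG-Workload": ipaddress.ip_network("192.168.5.0/24"),
    "DPG-Frontend": ipaddress.ip_network("192.168.5.0/24"),
}

# Hypothetical carve-up of the shared subnet (not from the article):
# a CIDR block for load balancer VIPs and a first/last range for Kubernetes nodes.
FRONTEND_VIPS = ipaddress.ip_network("192.168.5.128/26")
WORKLOAD_FIRST = ipaddress.ip_address("192.168.5.20")
WORKLOAD_LAST = ipaddress.ip_address("192.168.5.60")


def ranges_overlap(vips, first, last):
    """Return True if the VIP block shares any address with the node range."""
    return first <= vips.broadcast_address and vips.network_address <= last


def check_plan():
    """Collect human-readable problems found in the address plan."""
    problems = []
    shared = SUBNETS["DPG-Workload"]
    if SUBNETS["DPG-Frontend"] != shared:
        problems.append("Workload and Frontend are expected to share one subnet")
    if SUBNETS["DPG-Mgmt"].overlaps(shared):
        problems.append("Management overlaps the Workload/Frontend subnet")
    if not FRONTEND_VIPS.subnet_of(shared):
        problems.append("VIP block is outside the shared subnet")
    if WORKLOAD_FIRST not in shared or WORKLOAD_LAST not in shared:
        problems.append("node range is outside the shared subnet")
    if ranges_overlap(FRONTEND_VIPS, WORKLOAD_FIRST, WORKLOAD_LAST):
        problems.append("VIP block overlaps the node range")
    return problems


if __name__ == "__main__":
    print(check_plan() or "address plan looks consistent")
```

Swap in your own ranges before relying on the result; the point is simply to catch overlaps before typing values into the Workload Management wizard.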

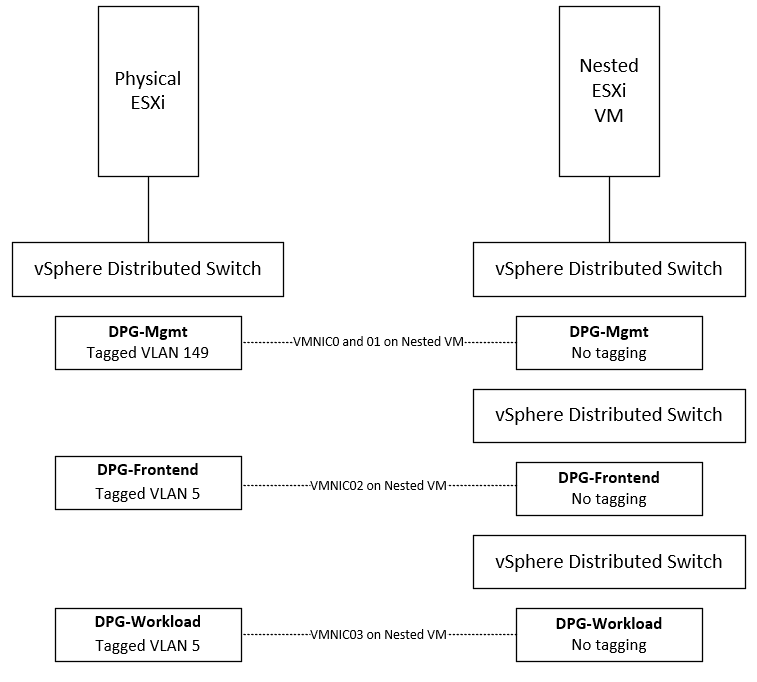

Now, let’s think about how the physical ESXi and nested ESXi hosts are connected. As mentioned, I have three distributed port groups configured on the physical ESXi host side. On the nested ESXi host side (a VM running in the physical vSphere environment), I have customized the deployment of vSphere with Tanzu so that the nested vSphere networking lines up with the physical side.

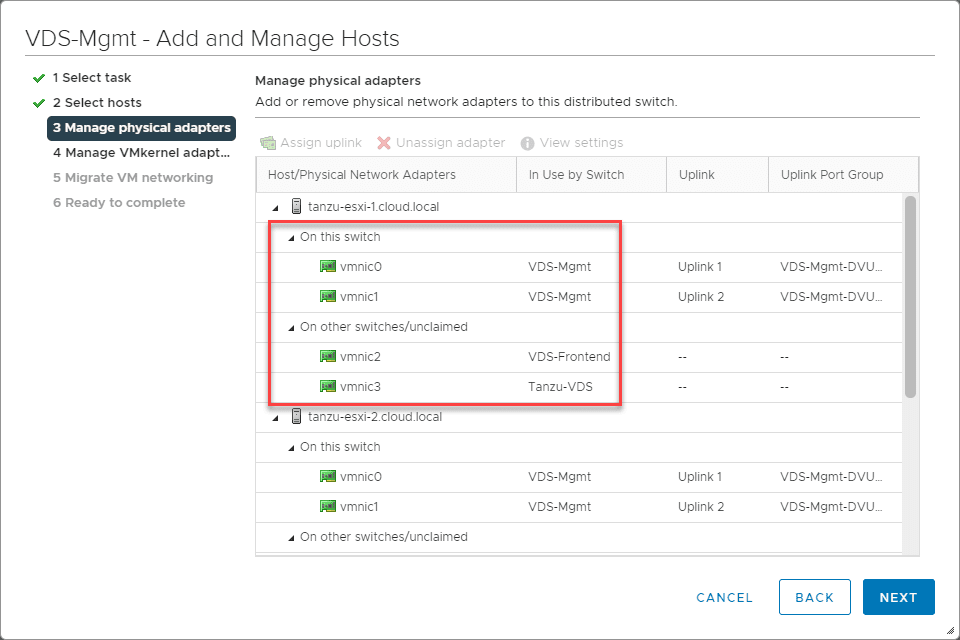

Since the nested ESXi host VMs need to be connected to different physical networks by way of the network adapters assigned to each VM, I needed to create multiple vDS switches that use different “physical” network adapters on the VM. These are plumbed into the vDS DPGs in the physical vSphere environment.

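When you create your nested vDS and DPG environment, remember that you don’t want to tag VLANs in the nested environment. The port groups on the physical side already apply the VLAN tag, so tagging again inside the nested environment results in “double tagging,” which keeps traffic from flowing.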
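To make the nested layout easier to reason about, here is a rough sketch of it as data, with a small validation pass. The port group names come from this post, but the nested switch names (`VDS-Mgmt` and so on) and the `vmnic` assignments are hypothetical placeholders, so adjust them to match your own deployment. The check encodes two rules from the text: nested port groups stay untagged (VLAN 0), and each nested vDS gets its own adapter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NestedPortGroup:
    name: str              # port group inside the nested vCenter
    nested_vds: str        # hypothetical nested vDS name
    uplink: str            # hypothetical adapter on the nested ESXi VM
    outer_port_group: str  # port group on the physical vSphere side
    vlan_id: int = 0       # VLAN set on the NESTED port group (0 = untagged)


# Hypothetical layout: one nested vDS and one adapter per network.
LAYOUT = [
    NestedPortGroup("DPG-Mgmt", "VDS-Mgmt", "vmnic0", "DPG-Mgmt"),
    NestedPortGroup("DPG-Workload", "VDS-Workload", "vmnic1", "DPG-Workload"),
    NestedPortGroup("DPG-Frontend", "VDS-Frontend", "vmnic2", "DPG-Frontend"),
]


def validate(layout):
    """Return a list of problems; an empty list means the layout passes."""
    problems = []
    for pg in layout:
        # The physical-side port group already tags, so nested must not.
        if pg.vlan_id != 0:
            problems.append(
                f"{pg.name}: nested VLAN {pg.vlan_id} would double tag traffic"
            )
    # An adapter can back only one nested switch.
    switches_by_uplink = {}
    for pg in layout:
        switches_by_uplink.setdefault(pg.uplink, set()).add(pg.nested_vds)
    for uplink, switches in switches_by_uplink.items():
        if len(switches) > 1:
            problems.append(f"{uplink}: shared by {sorted(switches)}")
    return problems


if __name__ == "__main__":
    print(validate(LAYOUT) or "nested layout looks consistent")
```

This does not talk to vCenter at all. It is just a way to write the plan down and catch the double-tagging mistake before you build anything.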

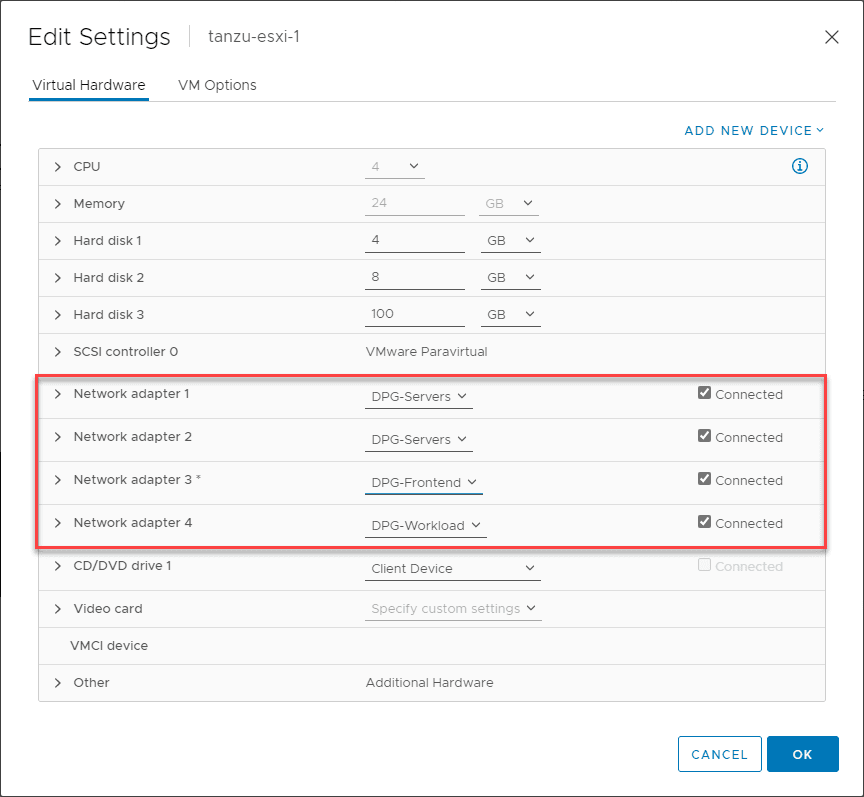

The settings on my nested ESXi virtual machine look like this:

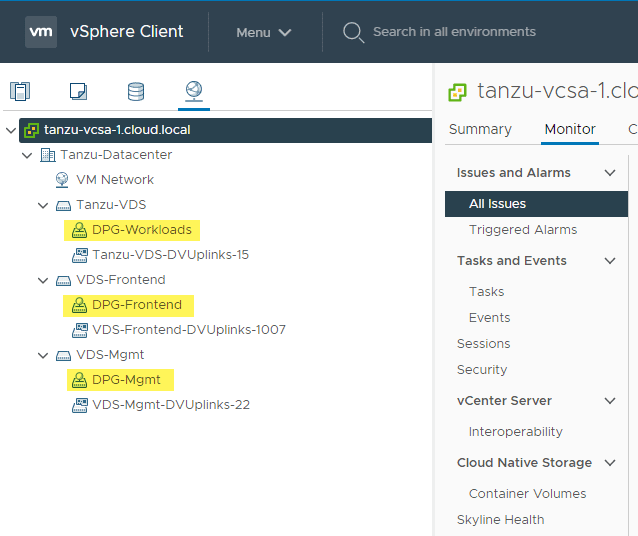

Below is the configuration of the vSphere Distributed Switches and distributed port groups in the nested vSphere environment.

The following physical adapters are assigned to each nested ESXi host.

Layer 2 Trunking vs Routing

One area that can cause complexity and issues is improper trunking of VLANs throughout your network core. In my nested ESXi vSphere with Tanzu deployment, I am not trunking VLAN 5, which, as you will note, is the VLAN used for both the Workload and Frontend networks.

This makes things much easier from a lab perspective. The distributed port group simply carries a VLAN tag, and no tagging for that VLAN is configured on the physical switches in my lab environment.

To allow traffic to flow to the Frontend and Workload environments, I am routing traffic through a virtual router that serves as the next hop for a static route from my core firewall to the Workload subnet.

What virtual router? You can download something free like Untangle 16. You don’t have to add any of the apps. Just use the routing feature of the appliance. However, any virtual router appliance will work. This is just one that is free and easy to use.

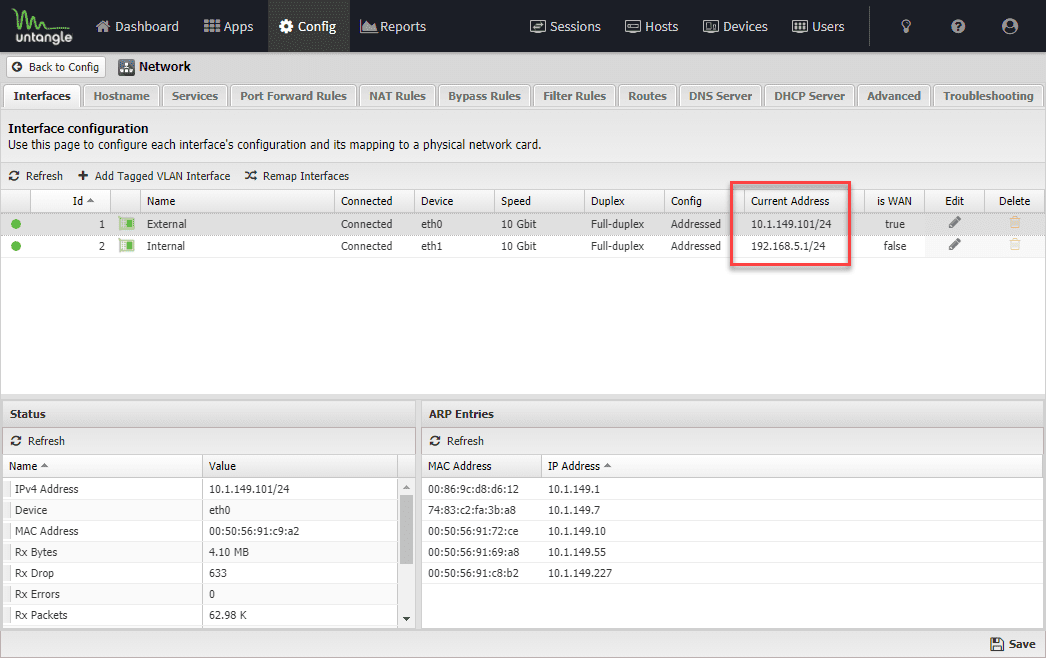

Below is the Untangle virtual appliance with two interfaces configured (one on the Mgmt network and one on the Frontend/Workload network).

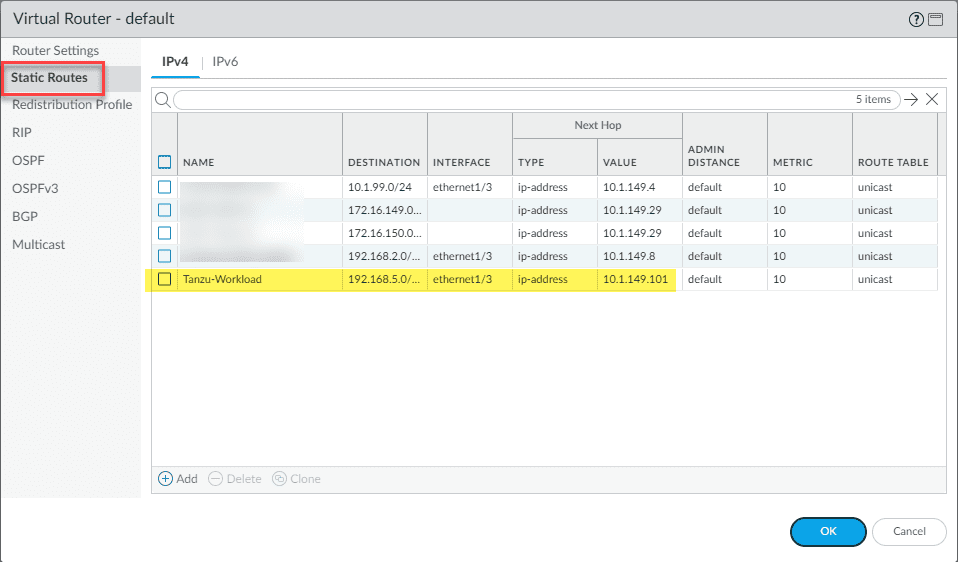

On the physical firewall (PA-220 configured as the default gateway in the lab), I have a static route configured that carries traffic destined for the 192.168.5.0/24 network to the next hop router, which is my virtual router appliance, configured on 10.1.149.101/24.

In doing things this way, you don’t have to trunk your Workload/Frontend VLANs up your network stack, and anything that uses your default gateway can automatically reach the Workload/Frontend networks by way of the static route.
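The routing decision on the firewall can be pictured with a small longest-prefix-match lookup. This is a simplified model, not the PA-220's actual configuration: it assumes the firewall has a directly connected interface on 10.1.149.0/24 plus the one static route described above, and the `next_hop` helper is a name I made up for illustration.

```python
import ipaddress

# Simplified routing table for the firewall.
# A next hop of None marks a directly connected network (an assumption here).
ROUTES = [
    (ipaddress.ip_network("10.1.149.0/24"), None),
    # Static route from the article: Workload/Frontend via the virtual router.
    (ipaddress.ip_network("192.168.5.0/24"), ipaddress.ip_address("10.1.149.101")),
]


def next_hop(destination):
    """Pick the most specific matching route and describe where traffic goes."""
    dest_ip = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in ROUTES if dest_ip in net]
    if not matches:
        return "no matching route in this table"
    # Longest prefix wins when several routes match.
    _, hop = max(matches, key=lambda match: match[0].prefixlen)
    return "connected" if hop is None else str(hop)


if __name__ == "__main__":
    for target in ("192.168.5.50", "10.1.149.20"):
        print(target, "->", next_hop(target))
```

A client on the Mgmt network only needs to know its default gateway. The firewall hands anything bound for 192.168.5.0/24 to the virtual router, which has an interface on that network.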

Recommended resources for vSphere with Tanzu

When I configured my vSphere with Tanzu lab, I referenced many different resources that I want to document in one place. These include the following:

- Deploy HA-Proxy for vSphere with Tanzu

- Enabling vSphere with Tanzu using HA-Proxy

- vSphere with Tanzu vCenter Server Network Configuration Overview

- Automated vSphere with Tanzu Basic Lab Deployment

My posts covering vSphere with Tanzu:

- How to Create a vSphere with Tanzu Namespace

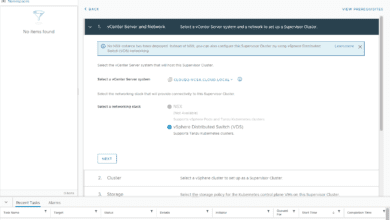

- Configure VMware vSphere with Tanzu Workload Management

- Install HAProxy in VMware vSphere with Tanzu Kubernetes

Wrapping Up

Hopefully this walkthrough of VMware vSphere with Tanzu nested lab networking configuration will help anyone who may be struggling to get the networking configured in a vSphere with Tanzu nested lab. Nested networking can be a bit confusing when trying to wrap your mind around how networking is plumbed through.

You can make things easier in a nested lab by consolidating the Frontend and the Workload networks and also by routing traffic between your nested environment and your core network stack as I have shown above. It works just as well and you don’t have to trunk the VLAN(s) you use for Tanzu all the way through.