vSAN Daemon Liveness Check Failed – vSAN 7

***UPDATE*** The fix is detailed in the VMware KB below.

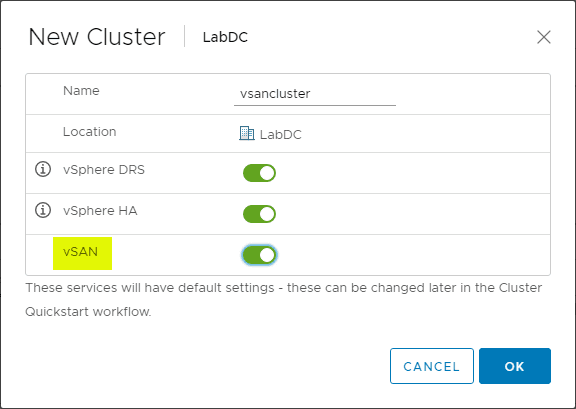

One of the great things about running a home lab is that you get to triage issues just like you would in production. I ran into an issue that was new to me in my home lab running vSAN 7.0 on top of the latest build of vSphere ESXi 7.0b, build 16324942. It involves one of the Skyline health checks for vSAN called vSAN daemon liveness. In this post, we will take a look at a failed vSphere vSAN daemon liveness check caused by a failure starting the EPD process. Let’s dive into the issue and its symptoms.

vSphere vSAN daemon liveness check failed

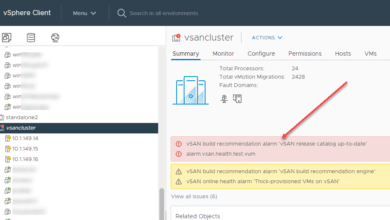

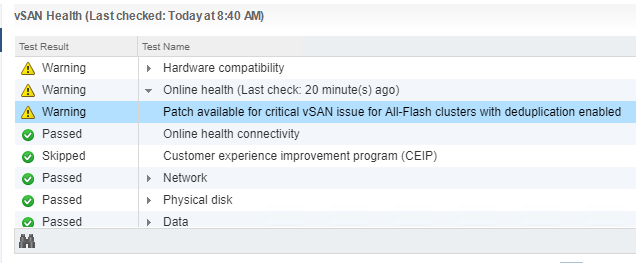

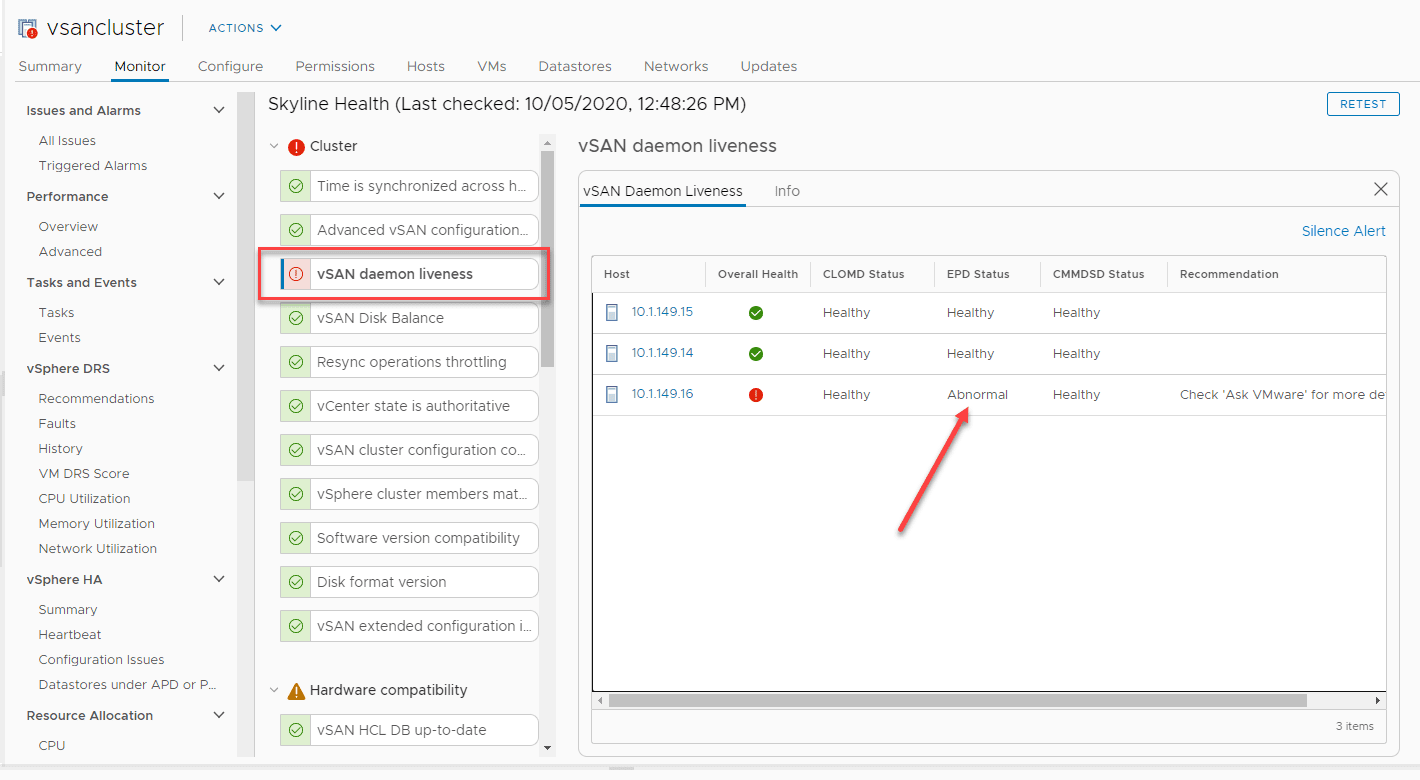

I came into the lab this morning with a “red bang” on the vSAN cluster. After looking at the Skyline health of the cluster itself, I saw the vSAN daemon check was the culprit.

The VMware KB here describes it this way:

“vSAN daemons may still have issues, but this test does a very basic check to make sure that they are still running. If this reports an error, the state of the CLOMD, EPD, and CMMDSD service(s) is not working as expected and needs to be checked on the relevant ESXi host. A good way to further probe into CLOMD health is to perform a virtual machine creation test (Proactive tests), as this involves object creation that will exercise and test CLOMD thoroughly. For more information about this issue, refer to the following article: vSAN CLOMD daemon may fail when trying to repair objects with 0 byte components (2149968)“

Interestingly, in my case the issue was limited to the EPD component of the vSAN daemon check.

The other two checks were normal. The check covers the following daemons:

- CLOMD

- EPD

- CMMDSD

From the KB:

| Daemon | Data node of stretched cluster | Witness node of stretched cluster | Data node of metadata cluster | Metadata node of metadata cluster |
| --- | --- | --- | --- | --- |
| CLOMD | Yes | No | Yes | Yes |
| EPD | Yes | No | Yes | No |
| CMMDSD | Yes | Yes | Yes | Yes |
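If you want to script against the table, here is a minimal sh sketch that encodes it as a lookup. The role names (`stretched-data`, `stretched-witness`, `metadata-data`, `metadata-metadata`) and the `expected_daemons` function are hypothetical labels made up for this example, and it assumes “Yes” means the liveness check covers that daemon on that node type.

```sh
#!/bin/sh
# Sketch only: encodes the KB table above as a lookup.
# ASSUMPTION: "Yes" in the table means the daemon is checked on that role.
# Role names are hypothetical labels, not VMware terminology.
expected_daemons() {
  case "$1" in
    stretched-data)    echo "CLOMD EPD CMMDSD" ;;
    stretched-witness) echo "CMMDSD" ;;
    metadata-data)     echo "CLOMD EPD CMMDSD" ;;
    metadata-metadata) echo "CLOMD CMMDSD" ;;
    *) echo "unknown role: $1" >&2; return 1 ;;
  esac
}
```

For example, `expected_daemons stretched-witness` prints only `CMMDSD`, consistent with the table showing EPD as not checked on a witness node.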

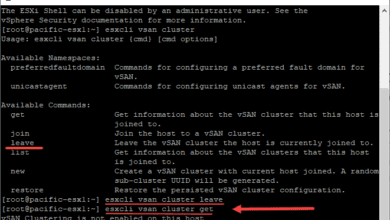

Troubleshooting

According to the KB, you can try a few things for troubleshooting purposes, including the following. First, check the status of the daemons on the ESXi host:

`/etc/init.d/cmmdsd status && /etc/init.d/epd status && /etc/init.d/clomd status`

If a daemon is not running, try running the restart command on the ESXi host:

`/etc/init.d/cmmdsd restart && /etc/init.d/epd restart && /etc/init.d/clomd restart`
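The KB commands chain everything with `&&`, so if one status check fails, the checks after it never run. Here is a minimal sh sketch that checks each daemon independently and restarts only the ones that appear stopped. It assumes `/etc/init.d/<daemon> status` exits non-zero when the daemon is not running; `INIT_DIR` and `check_and_restart` are hypothetical names introduced here, not part of ESXi.

```sh
#!/bin/sh
# Sketch only. ASSUMPTION: "<init script> status" exits non-zero when the
# daemon is stopped. INIT_DIR is a hypothetical override (useful for
# testing); on an ESXi host leave it at /etc/init.d.
INIT_DIR="${INIT_DIR:-/etc/init.d}"

check_and_restart() {
  failed=""
  for d in cmmdsd epd clomd; do
    if "$INIT_DIR/$d" status >/dev/null 2>&1; then
      echo "$d: running"
    else
      echo "$d: not running, restarting"
      # Let the restart output through so errors such as the EPD
      # ramdisk message stay visible.
      if ! "$INIT_DIR/$d" restart; then
        failed="$failed $d"
      fi
    fi
  done
  if [ -n "$failed" ]; then
    echo "restart failed for:$failed" >&2
    return 1
  fi
  return 0
}
```

Run `check_and_restart` from an SSH session on the affected host. A non-zero return means at least one restart failed, and that daemon’s own error output is printed rather than hidden.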

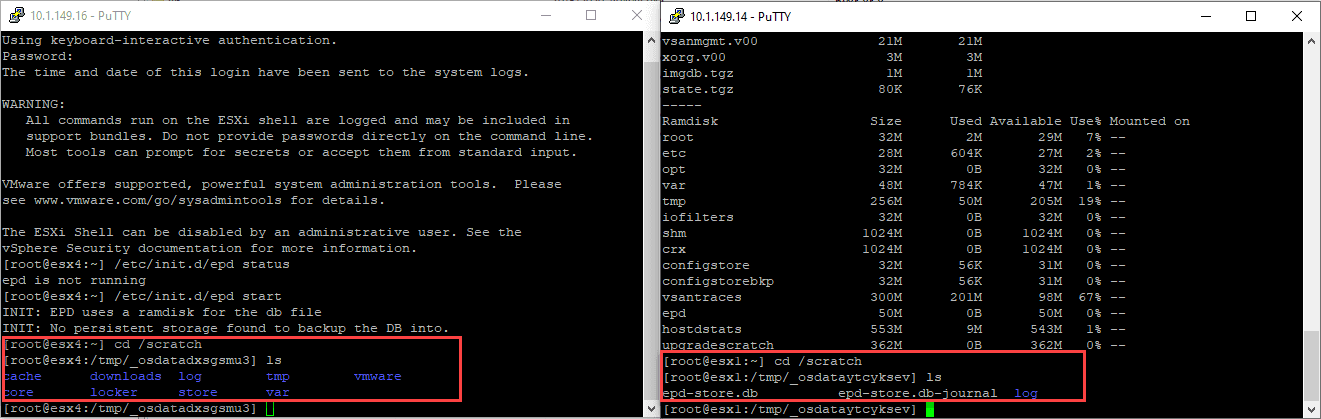

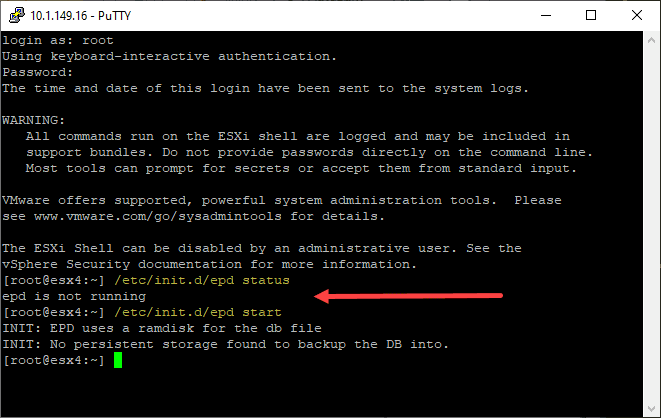

For me, only the EPD service had an issue. As you can see, when I ran the relevant commands for just that service, manually attempting to start it failed with the error:

- EPD uses a ramdisk for the db file

On the left is the server that is “unhealthy” according to the vSAN daemon liveness check, and on the right is a server that is “healthy”. As you can see, the server on the right has two files:

- epd-store.db

- epd-store.db-journal
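To make the same comparison from the shell on each host, here is a minimal sh sketch. The post does not name the directory these files live in, so the directory is passed as an argument; `check_epd_store` is a hypothetical helper, and it only checks that both files exist as regular files, mirroring what the healthy host showed.

```sh
#!/bin/sh
# Sketch only: checks a directory (passed as $1, since the path is not
# given in the post) for the two files seen on the healthy host.
# check_epd_store is a hypothetical helper name.
check_epd_store() {
  dir="$1"
  rc=0
  for f in epd-store.db epd-store.db-journal; do
    if [ -f "$dir/$f" ]; then
      echo "$f: present"
    else
      # Covers a missing file as well as a directory or other
      # non-regular file at that path.
      echo "$f: missing or not a regular file"
      rc=1
    fi
  done
  return "$rc"
}
```

Running it against the same directory on the healthy and unhealthy hosts gives a quick side-by-side, similar to the screenshot.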

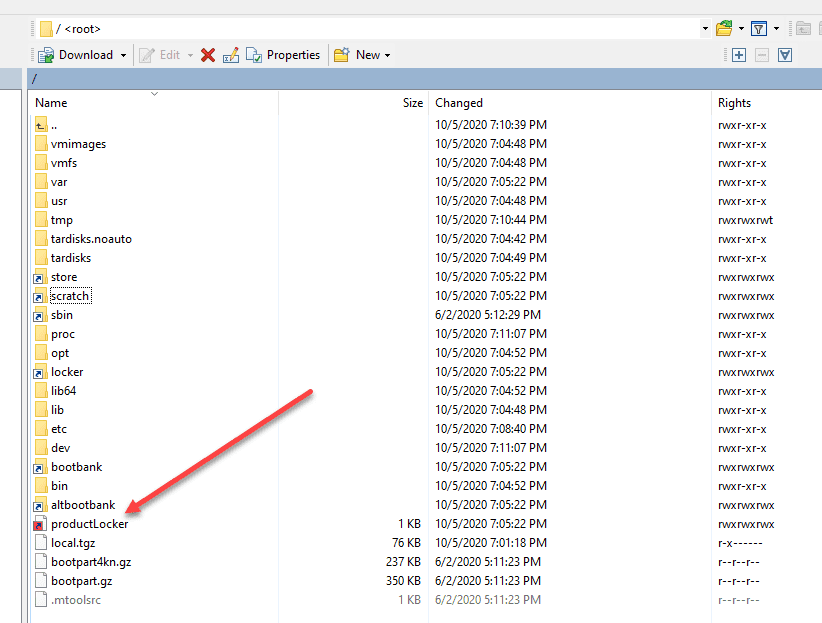

As you can see, the server on the left, which is failing the vSAN daemon liveness check, contains a directory structure. Also, looking further at this host, I saw what appear to be other issues on the drive; for example, the productLocker link was dead because the underlying directory was not available.
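A quick way to confirm a dead link like the productLocker one is to test that the path is a symlink but its target does not resolve. Here is a minimal sh sketch; `is_dead_link` is a hypothetical helper, and defaulting to `/productLocker` assumes the link sits at the root of the filesystem on your host.

```sh
#!/bin/sh
# Sketch only: succeeds if $1 is a symlink whose target does not exist.
# The /productLocker default is an assumption about the link location.
is_dead_link() {
  p="${1:-/productLocker}"
  # -L: the path itself is a symlink; ! -e: following it finds nothing.
  [ -L "$p" ] && [ ! -e "$p" ]
}
```

For example, `is_dead_link /productLocker && readlink /productLocker` prints the missing target only when the link is dead.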

So, given these findings, is it corruption? My ESXi 7.0 hosts boot from USB devices.

One odd thing: in Googling the error, I turned up a couple of other posts related to vSAN 7.0 with ESXi 7.0b hosts. Take a look at those posts here:

- Cannot Start EPD | VMware Communities

- Cannot Start EDP – IronCastle

As of today, I have not resolved the issue. However, I am definitely seeing a weird layout on my USB boot disk. With others seeing the exact same error, it makes me wonder whether this is a bug in 7.0b.

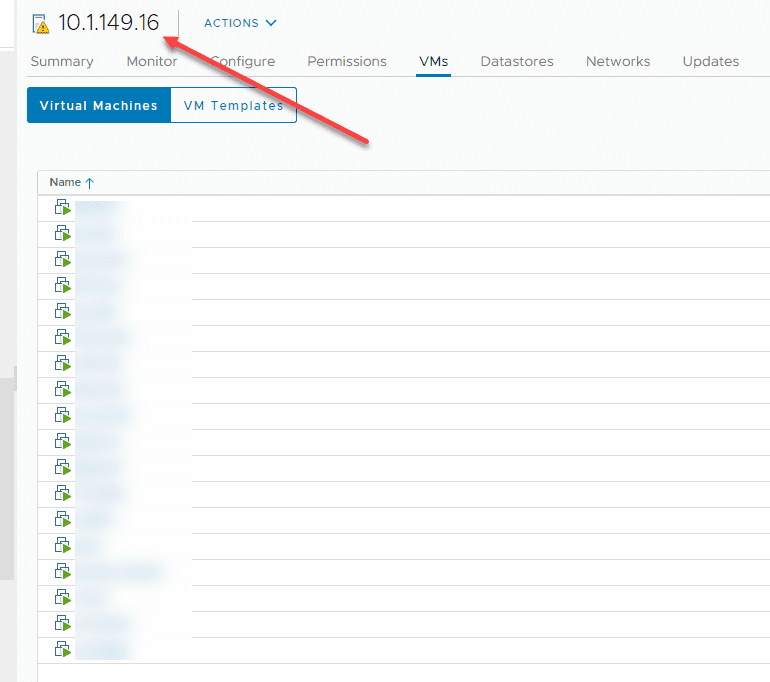

Another interesting note about this error: it does not prevent the vSAN host from running VMs. Each time I brought this host back up during the multiple reboots of troubleshooting, DRS was able to successfully place virtual machines on it, as you can see in the screenshot. For now, I am going to leave the host in service so I can keep tinkering with the issue and look for a possible workaround.

Please leave a comment if you have run into this issue with vSAN 7.0 and the vSAN daemon liveness check error.

VMware KB detailing the fix

A new VMware KB article, “Bootbank loads in /tmp/ after reboot of ESXi 7.0 Update 1 host (2149444)”, has been posted that addresses the issue. It appears to be a timing issue during ESXi boot.

Cause

The storage-path-claim service claims the storage device ESXi is installed on during startup. This causes the bootbank/altbootbank image to become unavailable and the ESXi host reverts to ramdisk.
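To check a host for the symptom in the KB title, look at where the bootbank link points. Here is a minimal sh sketch, assuming `/bootbank` is a symlink on the host and that a target under `/tmp` is the ramdisk fallback the KB describes; `bootbank_on_ramdisk` is a hypothetical helper.

```sh
#!/bin/sh
# Sketch only. ASSUMPTIONS: /bootbank (or /altbootbank) is a symlink, and
# a target under /tmp means the host fell back to ramdisk.
# Returns 0 = on ramdisk, 1 = not on ramdisk, 2 = not a symlink.
bootbank_on_ramdisk() {
  link="${1:-/bootbank}"
  # readlink exits non-zero if $link is not a symlink.
  target=$(readlink "$link") || return 2
  case "$target" in
    /tmp|/tmp/*) echo "$link -> $target (ramdisk)"; return 0 ;;
    *)           echo "$link -> $target"; return 1 ;;
  esac
}
```

Run `bootbank_on_ramdisk` and then `bootbank_on_ramdisk /altbootbank` after a reboot; a zero return for either points at the condition described in the KB.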

Wrapping Up

In my case, the vSAN Daemon Liveness Check Failed error with vSAN 7 was caused by the EPD service failing to start with the “EPD uses a ramdisk for the db file” error message. I am still trying to narrow down whether this is corruption on the boot disk or a bug in the 7.0b release, since I found two other reports of it: one in the VMware Communities forum and one in a blog post. Let me know if you see this as well.