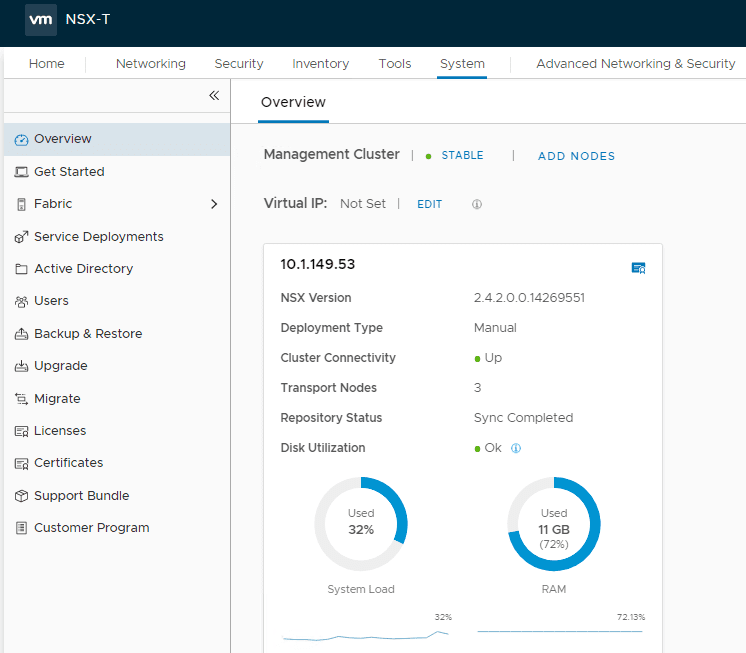

NSX-T is VMware’s go-forward virtual networking solution. It is hypervisor-agnostic and not tied specifically to VMware vSphere. If you are planning to move to NSX-T as your network “hypervisor”, there are certain tweaks and hardware features you will want to look at to get the most performance out of your NSX-T infrastructure. In this post, we take an overview of VMware NSX-T performance tips and tuning to see what to focus on to squeeze as much performance out of the solution as possible.

NIC Considerations

Even though NSX-T is a “software-driven” solution, there are NIC hardware considerations to keep in mind to get the best possible performance. Which NIC features are supported?

- Checksum offload (CSO)

- TCP segmentation offload (TSO)

- UDP Checksum offload (CSO)

- Encapsulated Packets (Overlay):

  - Geneve TCP/UDP Checksum offload (CSO)

  - Geneve TCP segmentation offload (TSO)

  - Geneve Rx filter

  - Geneve OAM packet filter

- Ability to handle high-memory DMA (64-bit DMA addresses)

- Ability to handle multiple Scatter Gather elements per Tx frame

- Jumbo frames (JF)

- Receive Side Scaling (RSS)

NSX-T Performance Tweaks that Matter

There is a great VMworld 2019 US session that goes into this topic in detail and is the inspiration behind this post. You can find the session here: https://videos.vmworld.com/global/2019?q=CNET1243BU

Looking at the key areas of the underlying VMware vSphere infrastructure that affect NSX-T performance, this post gives an overview of the following topics for tuning:

- MTU

- RSS

- Geneve RX/TX Filters

- TCP Segmentation Offload (TSO) for Overlay Traffic (Geneve Offload for Sending)

- Large Receive Offload (LRO) for Overlay Traffic (Geneve Offload for Receiving)

Tweaking MTU Size

MTU size has long been a quick tweak for squeezing more throughput out of the network. In an NSX-T network, the default 1500-byte MTU is a limiting factor for the throughput of VMs running in the environment.

To get the most throughput benefit through the NSX-T environment, increase the MTU on both the ESXi hosts and the VMs.

Recommendations for MTU sizes:

- 9000 for ESXi

- 8800 on the VM (the 200-byte difference leaves headroom for the Geneve encapsulation overhead)

- Increasing the MTU on the ESXi hosts alone yields far less benefit.

A larger MTU throughout the NSX-T environment helps ensure the greatest throughput. This is especially beneficial on 25-40 Gbps networks.
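To see why the VM MTU sits slightly below the host MTU, and why raising only the host MTU helps little, here is a minimal Python sketch of the per-packet arithmetic. It assumes an IPv4 underlay with no VLAN tag, a Geneve header with no options unless stated, and inner IPv4/TCP headers without options; the function names are hypothetical. "MTU" here means the IP-layer MTU, so the outer Ethernet header is not counted, while the inner Ethernet header travels inside the encapsulated payload.

```python
import math

# Bytes Geneve adds around the VM's IP packet (assumes IPv4 underlay, no VLAN, no options):
INNER_ETH = 14      # inner Ethernet header, carried inside the tunnel
OUTER_IPV4 = 20     # outer IPv4 header
OUTER_UDP = 8       # outer UDP header
GENEVE_BASE = 8     # fixed Geneve base header
IPV4_TCP_HEADERS = 40  # inner IPv4 + TCP headers, no options


def outer_packet_size(vm_mtu: int, geneve_options: int = 0) -> int:
    """Size of the outer IP packet, which must fit within the host (underlay) MTU."""
    return vm_mtu + INNER_ETH + OUTER_IPV4 + OUTER_UDP + GENEVE_BASE + geneve_options


def fits(vm_mtu: int, host_mtu: int, geneve_options: int = 0) -> bool:
    """True if a full-size VM packet can be encapsulated without fragmentation."""
    return outer_packet_size(vm_mtu, geneve_options) <= host_mtu


def packets_needed(data_bytes: int, vm_mtu: int) -> int:
    """Full-size TCP packets needed to move data_bytes (depends only on the VM MTU)."""
    mss = vm_mtu - IPV4_TCP_HEADERS
    return math.ceil(data_bytes / mss)


def payload_efficiency(vm_mtu: int, geneve_options: int = 0) -> float:
    """Fraction of each outer IP packet that is TCP payload."""
    return (vm_mtu - IPV4_TCP_HEADERS) / outer_packet_size(vm_mtu, geneve_options)


if __name__ == "__main__":
    for vm_mtu in (1500, 8800):
        print(vm_mtu, outer_packet_size(vm_mtu), fits(vm_mtu, 9000),
              packets_needed(1_000_000, vm_mtu), round(payload_efficiency(vm_mtu), 3))
```

Under these assumptions, an 8800-byte VM MTU produces an 8850-byte outer packet, leaving 150 bytes under a 9000-byte host MTU for Geneve options, and moving 1 MB takes 115 full-size packets instead of 685 at a 1500-byte VM MTU. Because `packets_needed` depends only on the VM MTU, raising the host MTU alone leaves the packet count, and therefore the per-packet work, unchanged.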

Receive Side Scaling (RSS)

Receive Side Scaling is another feature that can yield significant performance benefits in your NSX-T environment. What is Receive Side Scaling (RSS)? RSS allows network packets from a NIC to be processed in parallel on multiple CPUs by creating a different thread for each hardware queue. ESXi supports two variations of RSS: NetQueue and Device. In NetQueue mode, which is the default, ESXi controls how traffic is distributed to multiple hardware queues. In Device RSS mode, the hardware is in complete control of how traffic is distributed to multiple hardware queues.
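The hash-and-queue idea behind RSS can be sketched as follows. This is the generic Toeplitz-hash scheme described in Microsoft's RSS specification and supported by many NICs, roughly what happens when the hardware decides the distribution; it is not ESXi's or any particular driver's code. The key, the 128-entry indirection table, the queue count, and all function names are illustrative assumptions.

```python
import ipaddress
import struct

# Fixed 40-byte example key (assumption); real drivers program their own key.
RSS_KEY = bytes.fromhex(
    "6d5a56da255b0ec24167253d43a38fb0d0ca2bcb"
    "ae7b30b477cb2da38030f20c6a42b73bbeac01fa"
)


def toeplitz_hash(key: bytes, data: bytes) -> int:
    """32-bit Toeplitz hash: for every set input bit, XOR in the 32-bit key window at that bit."""
    key_int = int.from_bytes(key, "big")
    key_bits = len(key) * 8
    if len(data) * 8 + 32 > key_bits:
        raise ValueError("key too short for this input")
    result = 0
    for i in range(len(data) * 8):
        if data[i // 8] & (0x80 >> (i % 8)):
            # Key bits i .. i+31, counted from the most significant bit
            result ^= (key_int >> (key_bits - 32 - i)) & 0xFFFFFFFF
    return result


def flow_tuple(src_ip: str, dst_ip: str, src_port: int, dst_port: int) -> bytes:
    """IPv4 TCP/UDP hash input: source address, destination address, source port, destination port."""
    return (ipaddress.IPv4Address(src_ip).packed
            + ipaddress.IPv4Address(dst_ip).packed
            + struct.pack("!HH", src_port, dst_port))


def build_indirection_table(num_queues: int, size: int = 128) -> list[int]:
    """Spread table entries round-robin across the hardware queues (size must be a power of 2)."""
    return [i % num_queues for i in range(size)]


def rss_queue(flow: bytes, table: list[int], key: bytes = RSS_KEY) -> int:
    """Low-order hash bits index the indirection table, which names the receive queue."""
    return table[toeplitz_hash(key, flow) & (len(table) - 1)]


if __name__ == "__main__":
    table = build_indirection_table(num_queues=4)
    for port in range(40000, 40008):
        print(port, rss_queue(flow_tuple("10.0.0.1", "10.0.0.2", port, 443), table))
```

Because the hash depends only on the flow tuple, every packet of a flow lands on the same queue (preserving in-order processing), while different flows spread across queues and therefore across CPU cores.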

If you aren’t sure whether your network card has RSS enabled, use the command:

- vsish get /net/pNics/vmnic0/rxqueues/info

RSS is especially important for VM Edge performance, so choose NICs with RSS support for the hosts running your VM Edges. Among the newer cards on the market, Mellanox has been noted as having a better RSS implementation.

Intel’s newer 710 cards have RX/TX filters, which are actually more powerful than RSS in their own right. However, for the VM Edge, it is still important to use RSS.

Geneve RX/TX Filters

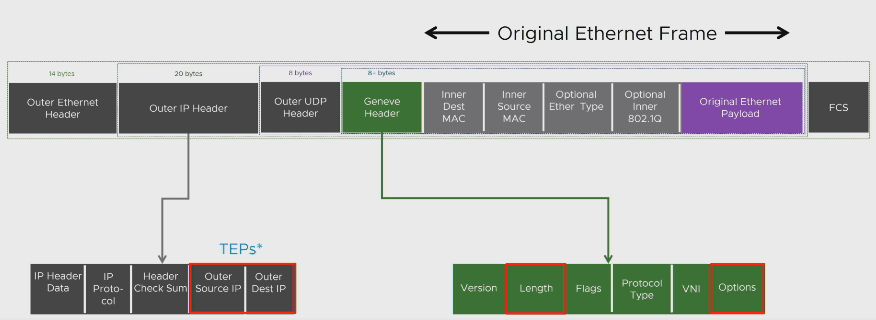

As you may know, NSX-T uses Geneve encapsulation instead of VXLAN. This is mainly because Geneve has a more flexible header that can carry various kinds of information. So with NSX-T, the relevant performance tweaks target Geneve encapsulation rather than the VXLAN used by NSX-V.
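To make the "more flexible header" point concrete, here is a minimal Python sketch that packs and parses the Geneve header as laid out in RFC 8926: a fixed 8-byte base header followed by up to 252 bytes of variable-length options. The O bit marks control (OAM) packets, which is presumably what the "Geneve OAM packet filter" NIC feature keys on. Options are passed in as pre-encoded bytes; the specific options NSX-T carries are not modeled, and the function names are hypothetical.

```python
import struct

GENEVE_VERSION = 0
PROTO_ETHERNET = 0x6558  # Transparent Ethernet Bridging: the payload is an inner Ethernet frame


def build_geneve_header(vni: int, options: bytes = b"", oam: bool = False,
                        critical: bool = False, protocol: int = PROTO_ETHERNET) -> bytes:
    """Pack a Geneve header: 8-byte base header plus pre-encoded variable-length options."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    if len(options) % 4 or len(options) > 63 * 4:
        raise ValueError("options must be a multiple of 4 bytes, at most 252 bytes")
    ver_optlen = (GENEVE_VERSION << 6) | (len(options) // 4)  # option length in 4-byte words
    flags = (int(oam) << 7) | (int(critical) << 6)            # O (OAM) and C (critical) bits
    # VNI occupies the top 24 bits of the last word; the low 8 bits are reserved
    return struct.pack("!BBHI", ver_optlen, flags, protocol, vni << 8) + options


def parse_geneve_header(data: bytes) -> dict:
    """Unpack the base header and slice out the options that follow it."""
    ver_optlen, flags, protocol, vni_rsvd = struct.unpack("!BBHI", data[:8])
    opt_len = (ver_optlen & 0x3F) * 4
    return {
        "version": ver_optlen >> 6,
        "oam": bool(flags & 0x80),
        "critical": bool(flags & 0x40),
        "protocol": protocol,
        "vni": vni_rsvd >> 8,
        "options": data[8:8 + opt_len],
        "header_len": 8 + opt_len,
    }
```

The option-length field is what lets a receiver (or a NIC) find where the inner frame starts, since the header is no longer a fixed size as it is with VXLAN.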

One of the Geneve performance enhancements is RX/TX filtering. Geneve RX/TX filters give you even more control over flows than RSS does: the filters look at which flows are coming into the ESXi host and steer them to specific queues.

As mentioned, while the Intel 710 cards lack some RSS features, they do include RX/TX filters. Make sure you are using the right driver for your card. You can check whether your card supports Geneve RX/TX filters with the command:

- vsish /> cat /net/pNics/vmnic5/rxqueues/info
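The flow steering described in this section can be pictured with a simplified, hypothetical model: the NIC keeps a table that matches fields of the inner (encapsulated) packet, here the inner destination MAC and the VNI, and places matching packets on a specific receive queue so that each VM's traffic can be serviced by its own core. Because all overlay traffic between two hosts shares the same outer tunnel endpoint (TEP) addresses, matching on inner headers lets the NIC distinguish flows by destination VM rather than by tunnel endpoint. Which fields a real NIC matches on depends on the card and driver; the class and method names are illustrative only.

```python
from dataclasses import dataclass, field


@dataclass
class GeneveRxFilterTable:
    """Hypothetical model of inner-header receive filters on a NIC.

    Each filter maps (inner destination MAC, VNI) to a receive queue;
    packets that match no filter fall back to the default queue.
    """
    default_queue: int = 0
    filters: dict[tuple[str, int], int] = field(default_factory=dict)

    def add_filter(self, inner_dst_mac: str, vni: int, queue: int) -> None:
        self.filters[(inner_dst_mac.lower(), vni)] = queue

    def remove_filter(self, inner_dst_mac: str, vni: int) -> None:
        self.filters.pop((inner_dst_mac.lower(), vni), None)

    def classify(self, inner_dst_mac: str, vni: int) -> int:
        """Return the receive queue for a packet's inner destination MAC and VNI."""
        return self.filters.get((inner_dst_mac.lower(), vni), self.default_queue)


if __name__ == "__main__":
    table = GeneveRxFilterTable()
    table.add_filter("00:50:56:aa:bb:01", vni=5001, queue=1)  # hypothetical VM A
    table.add_filter("00:50:56:aa:bb:02", vni=5001, queue=2)  # hypothetical VM B
    print(table.classify("00:50:56:aa:bb:02", 5001))
```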

TCP Segmentation Offload (TSO) for Overlay Traffic (Geneve Offload for Sending)

Within the hypervisor, the encapsulated payload can be extremely large, 64,000+ bytes. However, once this payload reaches the host's physical NIC, it must be broken down into much smaller frames based on the MTU size.

Without offload capabilities, the CPU handles this segmentation. With a TSO-capable NIC, the work is offloaded from the CPU to the NIC, which greatly increases performance. To check whether your NIC supports Geneve offload:

- vsish -e get /net/pNics/vmnic2/properties | grep "GENEVE"
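The segmentation work that TSO moves from the CPU to the NIC can be sketched as the loop below: a large TCP payload is sliced into MSS-sized pieces, each with its own sequence number, and each piece then needs its own inner TCP/IP headers, Geneve encapsulation, and checksums. It only computes sizes and does not build real packets; it assumes IPv4, no header options, and Geneve without options, and the names are hypothetical.

```python
IPV4_TCP_HEADERS = 40   # inner IPv4 (20) + TCP (20) headers, no options
GENEVE_OVERHEAD = 50    # inner Ethernet (14) + outer IPv4 (20) + UDP (8) + Geneve base (8)


def tso_segment(payload_len: int, vm_mtu: int, start_seq: int = 0) -> list[tuple[int, int]]:
    """Slice one large TCP payload into (sequence number, size) segments of at most one MSS."""
    mss = vm_mtu - IPV4_TCP_HEADERS
    segments = []
    offset = 0
    while offset < payload_len:
        size = min(mss, payload_len - offset)
        segments.append(((start_seq + offset) % 2**32, size))  # TCP sequence numbers wrap at 2^32
        offset += size
    return segments


def per_segment_header_bytes(payload_len: int, vm_mtu: int) -> int:
    """Total header bytes generated: each segment gets inner TCP/IP headers plus Geneve encapsulation."""
    return len(tso_segment(payload_len, vm_mtu)) * (IPV4_TCP_HEADERS + GENEVE_OVERHEAD)


if __name__ == "__main__":
    for mtu in (1500, 8800):
        print(mtu, len(tso_segment(65_000, mtu)), per_segment_header_bytes(65_000, mtu))
```

For a 65,000-byte payload, a 1500-byte VM MTU produces 45 segments while an 8800-byte VM MTU produces 8; each segment needs its own headers and checksums, and that per-segment work is what a TSO-capable NIC takes off the CPU.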

Large Receive Offload (LRO) for Overlay Traffic (Geneve Offload for Receiving)

Large Receive Offload is the reverse of TCP Segmentation Offload. With LRO, the receiving host reassembles the segmented packets into a larger payload.

Some NICs on the market can perform LRO on the NIC itself; otherwise, packet aggregation can be done by the hypervisor. To view a receive queue's filter, use the ESXi command:

- vsish /> cat /net/pNics/vmnic5/rxqueues/queues/1/filters/0/filter
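Receive-side aggregation is essentially the inverse loop: consecutive in-order segments of the same TCP flow are merged back into one larger payload before being handed up the stack. The sketch below is a deliberately simplified, generic version, not ESXi's software LRO or any NIC's implementation; it only merges back-to-back segments, ignores TCP flags and sequence-number wraparound, and the names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    flow: tuple    # e.g. (src_ip, dst_ip, src_port, dst_port) of the inner packet
    seq: int       # TCP sequence number of the first payload byte
    payload: bytes


def lro_coalesce(segments: list[Segment], max_size: int = 65535) -> list[Segment]:
    """Merge back-to-back, in-order segments of the same flow, up to max_size bytes."""
    merged: list[Segment] = []
    for seg in segments:
        last = merged[-1] if merged else None
        if (last is not None
                and last.flow == seg.flow
                and last.seq + len(last.payload) == seg.seq
                and len(last.payload) + len(seg.payload) <= max_size):
            last.payload += seg.payload  # extend the aggregate (bytes are immutable, so a new object)
        else:
            # Start a new aggregate; copy so the caller's segments are not modified
            merged.append(Segment(seg.flow, seg.seq, seg.payload))
    return merged
```

Real implementations keep per-flow aggregation contexts, so interleaved flows can still be coalesced; this sketch simply starts a new aggregate whenever the flow changes or a gap appears.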

VMware NSX-T Performance Tips and Tuning

To help map which features matter for which NSX-T virtual components, here are the features that matter for each type of component and why:

- Compute Transport Nodes (N-VDS Standard) – Geneve Offload saves CPU cycles. Geneve-RxFilters increase throughput by using more cores and software-based LRO. If Geneve-RxFilters are not available, use RSS, which also increases throughput by using more CPU cores.

- Compute Transport Nodes (Enhanced Data Path) – Use N-VDS Enhanced Data Path for DPDK-like capabilities.

- ESXi nodes with VM Edges – Use RSS to leverage multiple cores.
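The per-component guidance above can also be read as a small decision table. The sketch below encodes it in Python purely as a checklist aid; the enum, the function, and the NIC feature strings ("geneve-offload", "geneve-rxfilters", "rss") are hypothetical labels, not NSX-T or ESXi identifiers.

```python
from enum import Enum


class NodeType(Enum):
    COMPUTE_STANDARD = "Compute transport node (N-VDS Standard)"
    COMPUTE_ENHANCED = "Compute transport node (Enhanced Data Path)"
    VM_EDGE_HOST = "ESXi node with VM Edges"


def recommend(node: NodeType, nic_features: set[str]) -> list[str]:
    """Map a node type and its NIC capabilities (hypothetical labels) to the features to use."""
    if node is NodeType.COMPUTE_ENHANCED:
        return ["N-VDS Enhanced Data Path"]    # DPDK-like data path
    if node is NodeType.VM_EDGE_HOST:
        return ["RSS"]                         # spread Edge traffic across cores
    recs = []
    if "geneve-offload" in nic_features:
        recs.append("Geneve offload")          # saves CPU cycles
    if "geneve-rxfilters" in nic_features:
        recs.append("Geneve Rx filters")       # more cores plus software LRO
    elif "rss" in nic_features:
        recs.append("RSS")                     # fallback when Rx filters are missing
    return recs
```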

Wrapping Up

Even in software-defined networking, performance is important, especially for service providers. Ironically, VMware NSX-T performance tuning means giving attention to the physical hardware in your hypervisor hosts, as well as making sure certain hardware-assisted features are turned on and in use to offload work from the CPU.
