Configure Network I/O Control NIOC with vSAN vSphere Distributed Switch

Recently, in the home lab, I wanted to move to converged networking with a vSphere Distributed Switch on my “production” vSAN cluster, which runs my various home lab resources (nested labs, etc.). Prior to this, I was running two vSphere Standard Switches on each host, split between VLAN-backed virtual machine port groups and “vSphere services” port groups such as vMotion and vSAN. However, this left my virtual machine port groups stuck on 1 Gbps connections. I am not saturating those by any stretch under normal operations, but they were a bottleneck for incremental and full backups of the environment. Additionally, I wanted to free up a couple of network cards on the same hosts to create some one-off port groups for other testing. So I migrated all the port groups to a vSphere Distributed Switch with Network I/O Control (NIOC). Let’s see how to configure Network I/O Control on a vSphere Distributed Switch carrying vSAN traffic and properly implement converged networking for the various network requirements of the cluster.

What is Network I/O Control or NIOC?

Network I/O Control lets multiple services share the same physical network connections while guaranteeing bandwidth to each of them, so they perform and are prioritized adequately. VMware recommends 10 Gbps network connections for vSAN traffic. However, in normal operations there is generally bandwidth left over for other services, and with Network I/O Control you can guarantee bandwidth to the services you deem important, such as vSAN.

Network I/O Control Version 3 introduced the mechanism to reserve bandwidth for system traffic based on the capacity of the physical adapters on the host. This allows fine-grained resource control at the VM network adapter level in much the same way that you can allocate CPU and memory resources.
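To make the reservation idea concrete, here is a minimal sketch of the arithmetic. It assumes the limit described in VMware's documentation, where no more than 75 percent of a physical adapter's bandwidth can be reserved in total. The function name and the example reservation values are hypothetical and are not settings I use in the lab.

```python
# Assumption: per VMware's documentation, at most 75% of a physical
# adapter's bandwidth can be reserved across all system traffic types.
MAX_RESERVABLE_FRACTION = 0.75


def check_reservations(reservations_gbps, adapter_gbps):
    """Return (fits, headroom_gbps) for a set of per-traffic reservations.

    reservations_gbps: dict of traffic type -> reserved bandwidth in Gbps
    adapter_gbps: capacity of the physical adapter in Gbps
    """
    total = sum(reservations_gbps.values())
    limit = adapter_gbps * MAX_RESERVABLE_FRACTION
    return total <= limit, limit - total


# Hypothetical example values on a 10 Gbps adapter.
example = {"vSAN": 3.0, "vMotion": 1.0}
fits, headroom = check_reservations(example, 10.0)
print(fits, headroom)
```

With these example numbers, 4 Gbps is reserved against a 7.5 Gbps ceiling, so the reservations fit with 3.5 Gbps to spare.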

NIOC is only available on the vSphere Distributed Switch, not on the vSphere Standard Switch, which is certainly a reason to use VDS instead of VSS. Also, a vSAN license includes the vSphere Distributed Switch, which is normally an Enterprise Plus feature. So, if you are running vSAN, why not use it?

For me this made sense, since I wanted to take advantage of VDS in the home lab on my physical hosts and not just in my nested lab, as well as free up network ports (1 Gbps ports, in my case) to do other testing.

Configure Network I/O Control NIOC with vSAN vSphere Distributed Switch

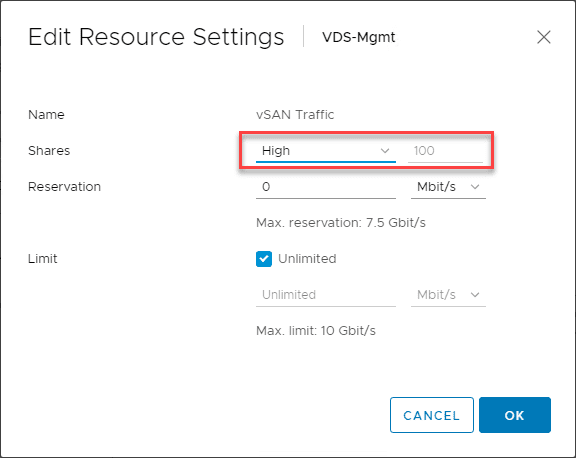

The great thing about NIOC with the vSphere Distributed Switch is that it is enabled by default. This means that all you have to do is configure it, which comes down to setting the Shares value appropriately for each type of system traffic.

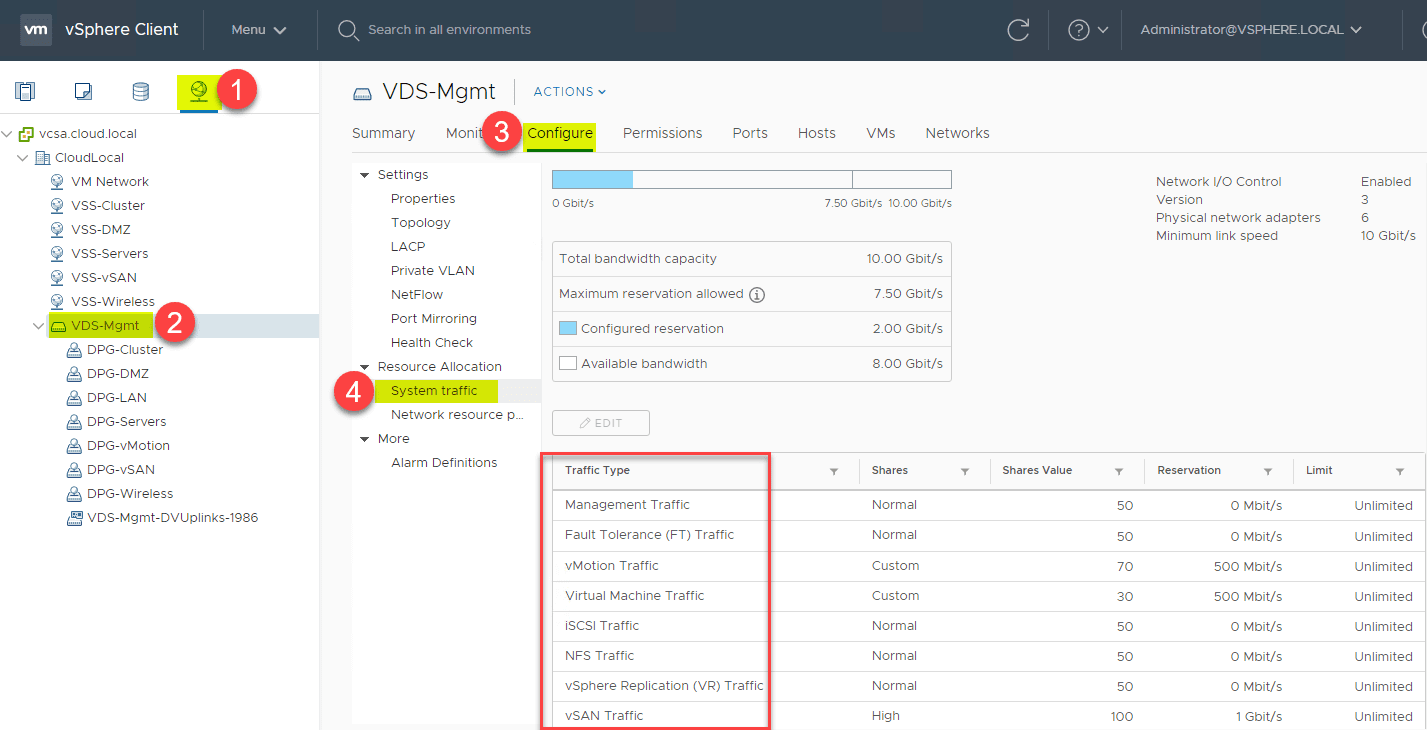

The words of wisdom I used in the home lab come straight from the vSAN 6.7 U1 Deep Dive written by Duncan Epping and Cormac Hogan (which I highly recommend). In chapter three, there is a really great breakdown of the shares that need to be configured in scenarios with redundant 10 GbE switches, both with and without link aggregation capability. The recommended shares are as follows:

| Traffic Type | Shares | Limit |
| --- | --- | --- |
| Management Network | 20 | N/A |
| vMotion VMkernel Interface | 50 | N/A |
| VM Port Group | 30 | N/A |
| vSAN VMkernel Interface | 100 | N/A |
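Shares are relative weights, so they only matter when traffic types are competing for the same link. As a rough sketch of what the values above work out to, the snippet below splits a link proportionally among whichever traffic types are active. The function name and the 10 Gbps single-uplink figure are assumptions for illustration; real behavior also depends on reservations, limits, and how many uplinks back the VDS.

```python
def bandwidth_under_contention(shares, link_gbps, active=None):
    """Split link_gbps among the active traffic types in proportion to shares.

    shares: dict of traffic type -> shares value
    link_gbps: capacity of the contended uplink in Gbps
    active: traffic types currently sending (defaults to all of them)
    """
    active = list(shares) if active is None else list(active)
    total = sum(shares[name] for name in active)
    return {name: link_gbps * shares[name] / total for name in active}


# Shares from the table above.
shares = {
    "Management": 20,
    "vMotion": 50,
    "Virtual Machine": 30,
    "vSAN": 100,
}

# Everything saturating a single 10 Gbps uplink at once.
all_active = bandwidth_under_contention(shares, 10.0)
for name, gbps in all_active.items():
    print(f"{name}: {gbps:.2f} Gbps")

# Only vSAN and VM traffic competing, for example during a backup window.
backup_window = bandwidth_under_contention(
    shares, 10.0, active=["vSAN", "Virtual Machine"]
)
for name, gbps in backup_window.items():
    print(f"{name}: {gbps:.2f} Gbps")
```

With all four traffic types contending, vSAN ends up with half of the link (100 of 200 total shares). When only vSAN and VM traffic are active, the split is 100 to 30, so VM traffic still gets well over the 1 Gbps it was limited to before.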

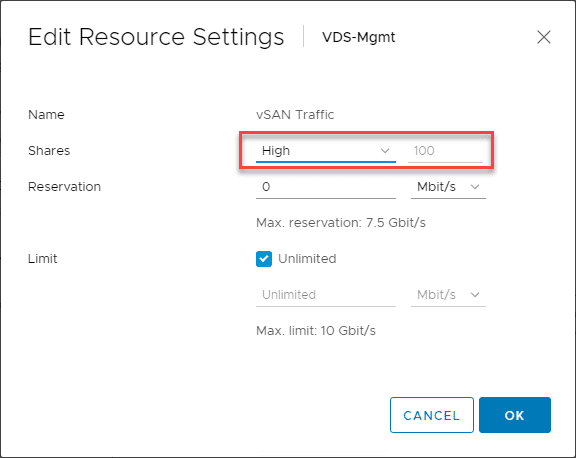

After navigating to the Network I/O Control settings page, you can edit the resource settings by selecting the system traffic type you want to change, hitting the Edit button, and setting the shares to the value you want.

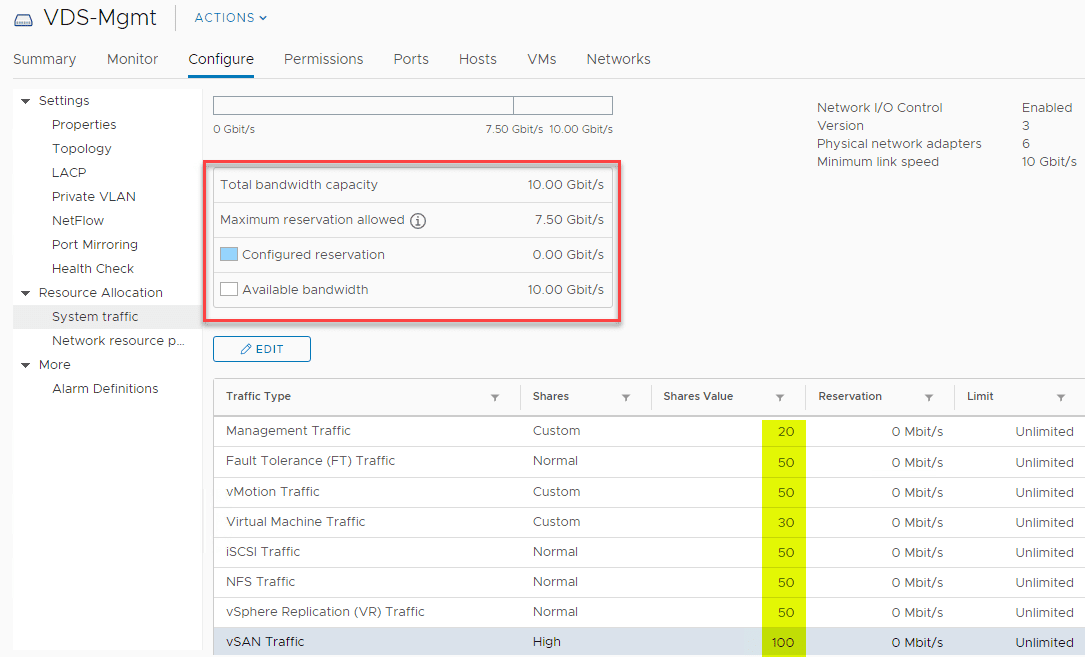

Below are the settings in Network I/O Control after updating the shares on the vSphere Distributed Switch to the recommended values for a converged networking configuration, where both VM traffic and other system traffic are sharing the links backing the VDS.

Wrapping Up

So far, after migrating my production home lab hosts over to the VDS with NIOC, with all the network services converged on the single VDS, I have not noticed any issues and everything has been running very smoothly. This has allowed me to accomplish my objective of freeing up some network adapters and reprovisioning them for other testing I want to do. More on this later, but I can now provision these as additional vSwitches to plumb other kinds of traffic that I need adapters for. Be sure to check out Network I/O Control, as it lets you prioritize and guarantee bandwidth for the many types of network traffic sharing the same VDS backed by your physical adapters.