A couple of days ago in a production environment, I ran into an issue upgrading an existing VCSA 6.5 appliance to a VCSA 6.7 appliance. Stage 1 of the upgrade process with the appliance deployment was going fine, however, with Stage 2 where data is actually migrated over, the upgrade process failed with a Postgres error. As it turns out, the solution was fairly simple after performing a bit of investigative work. Let’s take a look at VMware vSphere VCSA 6.5 to VCSA 6.7 Postgres upgrade error fix and see the solution to this Postgres error on Stage 2 of the upgrade process that I ran into in this recent upgrade of a production environment.

VCSA 6.7 Upgrade Error Description

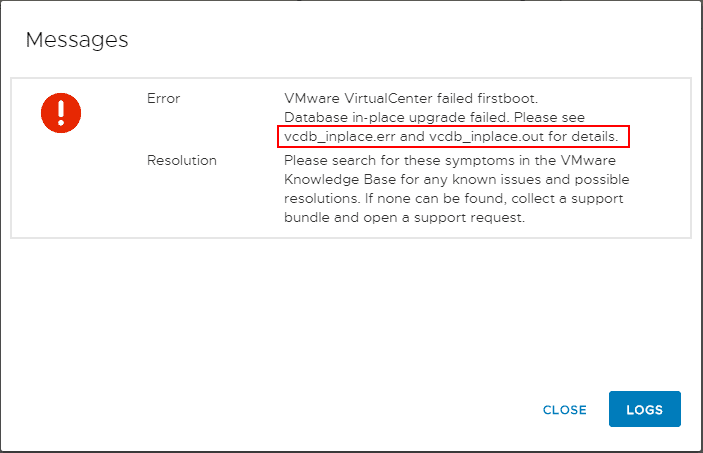

Just a bit of background on the environment that I was upgrading. The production vCenter VCSA 6.5 appliance was servicing around 20 VMware ESXi hosts in various configurations. There were around 500 VMs in the environment total. So it was a fairly moderate to larger environment for an SMB. The first phase of the upgrade (Stage 1) was going fine. The new VCSA 6.7 appliance was getting deployed just fine and the initial part of Stage 2 was going okay as well. The upgrade actually progressed fairly far along each time I tried the upgrade when it was hitting the error message shown below. The exact error that I captured in Stage 2 of the upgrade process:

As directed by the above error message, I downloaded the logs bundle and looked at the vcdb_inplace.err file and recorded the following error.

Error while executing ./Upgrade-v2016-to-v2017/postgresql/upgrade_PostgreSQL.sql:3531, reason: Statement failure(rc=-1).

ALTER TABLE VPX_TEXT_ARRAY

ALTER COLUMN MO_ID TYPE BIGINT;

1 [53100](1) ERROR: could not extend file “base/16395/1772075.4”: wrote only 4096 of 8192 bytes at block 622726;

Error while executing the query

I had snapshots of both the source VCSA appliance and the new VCSA 6.7 appliance at Stage 2, so I had a quick way to keep rolling back and trying things. After the first failure, I had a hunch this could be disk space related, either on the source or the target VCSA.

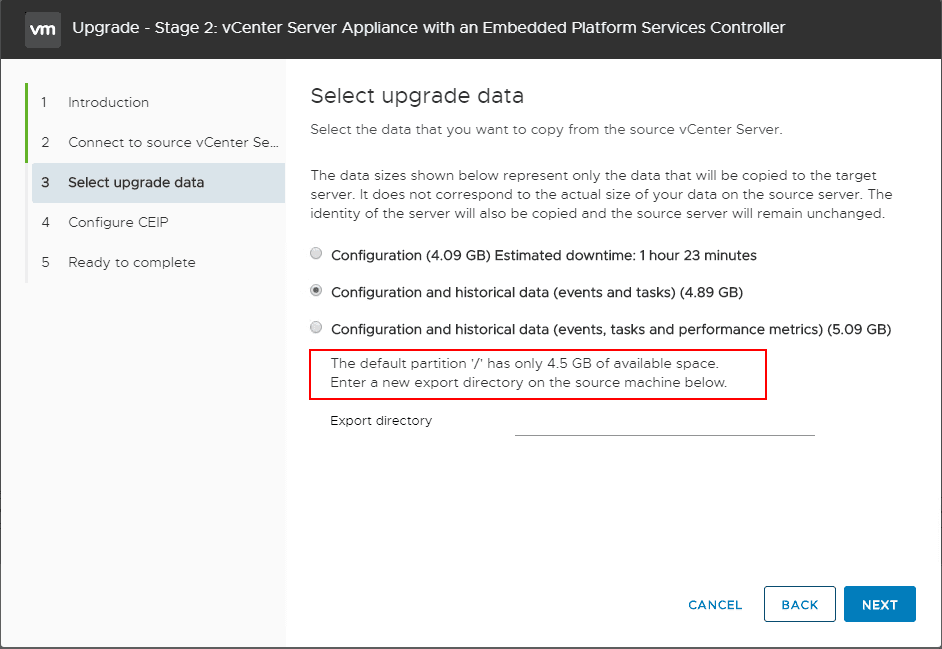

To go along with this, when choosing either the 2nd or the 3rd option in the Select upgrade data, I would see the message below stating there wasn’t enough disk space on the ‘/’ partition, Enter a new export directory on the source machine below. I could enter /tmp or some other directory here and the upgrade would proceed along on either the 2nd or 3rd option. So I knew disk space was at least an issue on the source appliance, outside of the workaround directory for exporting.

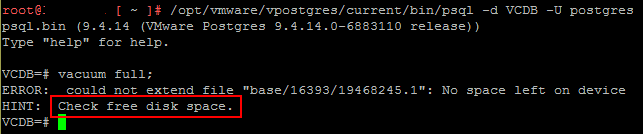

I had the thought that I would perform a vacuum of the DB on the source VCSA appliance before running Stage 2 of the process to see if that would make a difference, however, again, it looks like disk space is causing issues here even trying to perform the maintenance on the VCDB Postgres DB.

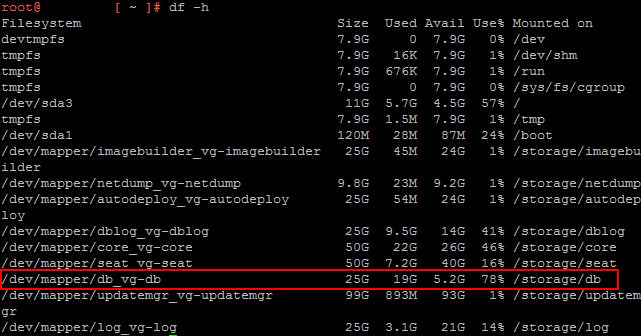

Just a high-level look at the available disk space of the source appliance here and it looks like the /storage/db is fairly full but not completely. Also, the “/” directory is 57% full.

So, I had a pretty good feeling that the sheer amount of data and the partition sizes were blowing up the upgrade process.

VMware vSphere VCSA 6.5 to VCSA 6.7 Postgres Upgrade Error Fix

What about determining the size of the VCDB tables and see if we can truncate them? Following the article here: https://kb.vmware.com/s/article/2147285 let’s list out the top 20 tables in our VCDB by size. The query found in the KB article is below:

SELECT nspname || ‘.’ || relname AS “relation”, pg_size_pretty(pg_total_relation_size(C.oid)) AS “total_size” FROM pg_class C LEFT JOIN pg_namespace N ON (N.oid = C.relnamespace) WHERE nspname NOT IN (‘pg_catalog’, ‘information_schema’) AND C.relkind <> ‘i’ AND nspname !~ ‘^pg_toast’ ORDER BY pg_total_relation_size(C.oid) DESC LIMIT 20;

This will return the largest tables, ordered by size. This is extremely helpful to see where your DB space is being used. For me, this was the vc.vpx_text_array and vc.vpx_task. The vc.vpx_int_array is one that we don’t want to truncate.

Using a couple of super simple truncate commands, we can truncate large tables before the migration. Before truncating, follow this VMware KB to backup the Postgres DB first: https://kb.vmware.com/s/article/2091961

truncate table vc.vpx_text_array;

truncate table vc.vpx_task;

After truncating the tables with the truncate command, we run the same “top 20” query to see space after the truncate operations.

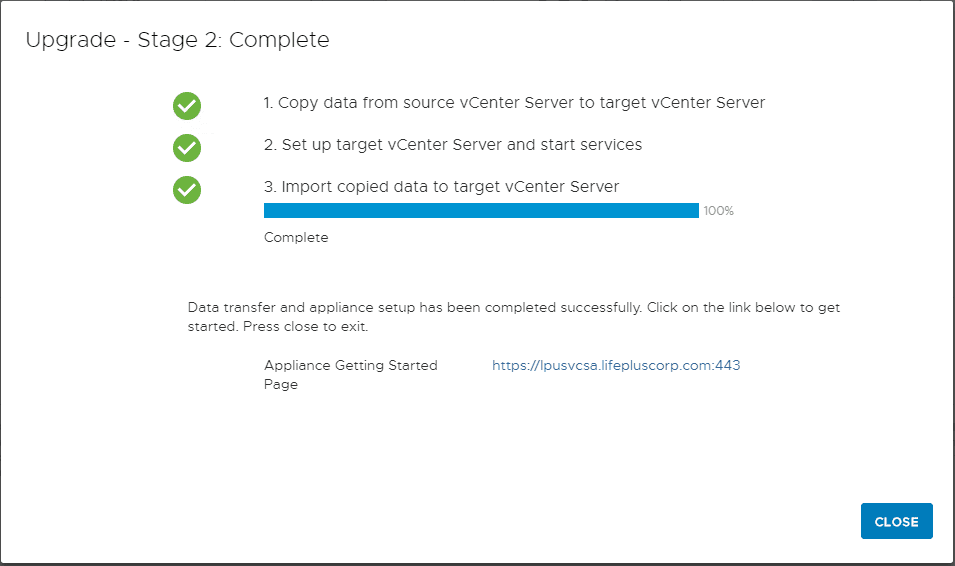

Now, we have a much smaller amount of data to work with for the upgrade process. This time, running through Stage 2 went through successfully!

Wrapping Up

Hopefully, this little walk through on a VMware vSphere VCSA 6.5 to VCSA 6.7 Postgres Upgrade Error Fix I had seen recently may help others who may run into issues trying to get their VCSA 6.5 appliance upgraded to vSphere 6.7 with a new VCSA 6.7 appliance. All in all, VMware has really made the process extremely easy when looking at the upgrade, however, from my experience, the most common cause of many of the upgrade failures will be Postgres errors and data related with Stage 2. So gear up and do your due diligence and test your upgrade in a lab environment first before running in production. Make strategic use of snapshots during the process After Stage 1 but before Stage 2, and so forth. The features of vSphere 6.7 are killer and are well worth the effort and preparation it takes to get there.

Google is updating how articles are shown. Don’t miss our leading home lab and tech content, written by humans, by setting Virtualization Howto as a preferred source.