Create High Performance VMware VMs Checklist

Virtualization technology lets us make the most of the underlying physical hardware in a way that was never possible without a hypervisor. Along with virtualizing hardware, however, it has introduced some interesting challenges when building virtual machines for high-performance applications. Luckily, today’s hypervisors, such as VMware vSphere ESXi 6.5, already include so much default tuning that they generally run great out of the box. Still, some performance considerations need to be made when creating a new virtual machine, especially in the virtual hardware settings chosen. Let’s take a look at a checklist for creating high-performance VMware VMs and the considerations needed to achieve the best virtual machine performance in a vSphere infrastructure.

As mentioned, VMware already builds a lot of efficiency and performance into the ESXi hypervisor. Let’s walk through a checklist of considerations for creating high-performance VMware VMs from a virtual hardware perspective. We will look at the following areas:

- High Performance VM vCPU allocation

- Memory configuration and memory reservations

- NUMA considerations

- Using PVSCSI controllers and multiple controllers

- Use VMXNET3 paravirtualized NIC

Resources

Below, we consider certain key areas that need to be addressed when configuring a virtual machine. We are not taking a deep-dive approach here, but rather looking at focus areas that often lead to performance gotchas when configuring virtual machines and their virtual hardware. As most already know, the vSphere 6.5 Host Resources Deep Dive by Frank Denneman and Niels Hagoort is arguably the most valuable resource for a deep dive into virtual machine settings and their impact on performance. It is available for free from Rubrik; only registration is needed to receive the book!

High Performance VM vCPU allocation and configuration

The vCPU configuration of a VMware virtual machine is a much-discussed area when thinking about performance. Generally speaking, a good starting point when sizing the initial vCPU configuration of a high-performance VMware VM is to make sure the total number of vCPUs assigned to all the VMs on a host does not exceed the total number of physical cores (not logical cores) available on that ESXi host.

This is a good rule of thumb to start with, as it lets you measure the actual performance of the environment without having to account for any CPU overcommitment. Higher-tier, performance-sensitive VMs benefit from less overcommitment, whereas lower-tier, less performance-sensitive VMs are generally placed on hosts where greater overcommitment is used. As with any troubleshooting or baselining, the fewer variables we have to work with, the better.
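
To make the starting rule concrete, here is a minimal Python sketch that sums the vCPUs assigned to the VMs on one host and compares the total with the host’s physical core count. The `VM` class, the function names and the host size are hypothetical; in practice the inputs would come from your inventory, which this sketch does not query.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VM:
    name: str
    vcpus: int


def vcpu_to_core_ratio(vms: List[VM], physical_cores: int) -> float:
    """Total vCPUs assigned on a host divided by its physical cores.

    Pass physical cores only; logical processors from hyper-threading
    are deliberately not counted.
    """
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(vm.vcpus for vm in vms) / physical_cores


def within_starting_rule(vms: List[VM], physical_cores: int) -> bool:
    """True when total vCPUs do not exceed physical cores (no CPU overcommit)."""
    return vcpu_to_core_ratio(vms, physical_cores) <= 1.0


if __name__ == "__main__":
    # Hypothetical host: 2 sockets x 12 cores = 24 physical cores.
    host_cores = 24
    vms = [VM("sql01", 8), VM("app01", 4), VM("web01", 4)]
    print(f"vCPU:pCore ratio = {vcpu_to_core_ratio(vms, host_cores):.2f}")  # 0.67
    print("within starting rule:", within_starting_rule(vms, host_cores))  # True
```

A ratio above 1.0 means the host’s CPUs are overcommitted, which is better suited to lower-tier VMs than to the high-performance ones discussed here.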

Hyper-threading also factors into creating high-performance VMware VMs. VMware documentation says that hyper-threading “generally improves the overall host throughput anywhere from 10 to 30 percent”. However, as stated in the Host Resources Deep Dive book, “One important thing to understand is the accounting of CPU time. The CPU scheduler accounts for used CPU time measured in full core seconds and not in logical processor seconds.” In other words, the logical processors that hyper-threading exposes should not be counted as additional full cores when sizing vCPUs.

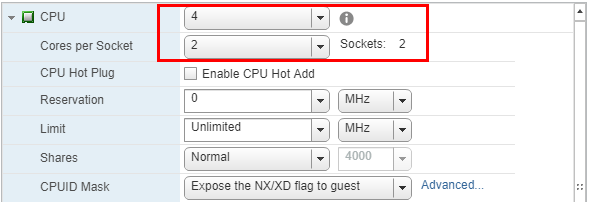

Many have wondered about the “cores per socket” versus socket count setting when sizing VMware virtual machines. This setting exists largely to help with software licensing limitations. It comes into play especially with applications such as certain Microsoft SQL Server editions, which may limit the number of sockets or total cores.

Cores per socket also has implications for the vNUMA layout of the virtual machine. For wide SQL Server VMs, where the number of allocated vCPUs is greater than the number of cores in a physical NUMA node, ESXi divides the CPU and memory of the VM into two or more virtual NUMA (vNUMA) nodes and places each of these on a different physical NUMA node.

Make sure you fully understand your sizing requirements, the particular needs of the application the virtual machine will serve in your vSphere environment, and how NUMA architecture affects “wide VM” sizing.
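
The following minimal sketch picks a sockets x cores-per-socket layout under a simplified model: use the fewest virtual sockets such that each socket fits inside one physical NUMA node and the vCPUs split evenly, optionally capped by a licensing limit on sockets. The function name and the `max_sockets` parameter are hypothetical, and ESXi applies additional rules of its own when building the vNUMA topology, so treat the output as a starting point to verify rather than a prediction of what ESXi will do.

```python
import math
from typing import Optional, Tuple


def suggest_socket_layout(
    vcpus: int,
    cores_per_numa_node: int,
    max_sockets: Optional[int] = None,
) -> Tuple[int, int]:
    """Return (sockets, cores_per_socket) for a VM (simplified model).

    Each virtual socket is kept within one physical NUMA node, and the
    vCPUs are split evenly across sockets.
    """
    if vcpus <= 0 or cores_per_numa_node <= 0:
        raise ValueError("vcpus and cores_per_numa_node must be positive")
    # Fewest sockets so that no socket exceeds a physical NUMA node.
    min_sockets = math.ceil(vcpus / cores_per_numa_node)
    # Smallest socket count at or above that minimum which divides vcpus evenly.
    sockets = next(n for n in range(min_sockets, vcpus + 1) if vcpus % n == 0)
    # Hypothetical licensing cap, e.g. an edition limited to N sockets.
    if max_sockets is not None and sockets > max_sockets:
        raise ValueError(
            f"{vcpus} vCPUs need {sockets} sockets to stay NUMA-aligned, "
            f"but licensing allows only {max_sockets}"
        )
    return sockets, vcpus // sockets


if __name__ == "__main__":
    # Hypothetical host with 12 physical cores per NUMA node.
    print(suggest_socket_layout(8, 12))                   # (1, 8): fits one node
    print(suggest_socket_layout(16, 12))                  # (2, 8): wide VM
    print(suggest_socket_layout(16, 12, max_sockets=4))   # (2, 8)
```

When the licensing cap and NUMA alignment conflict, the sketch raises an error rather than silently choosing one, since that trade-off is exactly the sizing decision discussed above.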

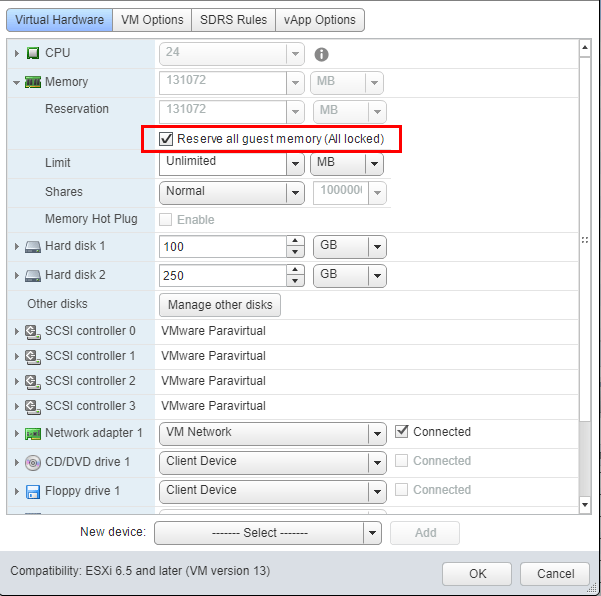

High Performance VMware VM memory configuration and memory reservation

As with vCPU resources, when configuring memory for a high-performance VMware VM, avoid overcommitting memory resources on the physical ESXi host. NUMA also comes into play with memory for “wide VMs” configured with more memory than a single physical NUMA node contains. Wide VMs split their memory across the physical NUMA nodes of the ESXi host, just as they do their vCPU resources.

Setting a full memory reservation (a reservation equal to the VM’s configured memory) eliminates the possibility of memory ballooning or swapping, since it guarantees the VM is backed only by physical memory.
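
As a rough illustration, this sketch computes a host’s memory overcommit ratio (total configured VM memory divided by host physical memory) and flags which VMs are fully reserved. The class and function names and the sizes are hypothetical, and the model ignores memory consumed by ESXi itself and per-VM overhead, so real headroom is somewhat lower than shown.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VMMemory:
    name: str
    configured_gb: float
    reservation_gb: float = 0.0

    @property
    def fully_reserved(self) -> bool:
        # Only a reservation covering all configured memory rules out
        # ballooning and hypervisor swapping for this VM.
        return self.reservation_gb >= self.configured_gb


def memory_overcommit_ratio(vms: List[VMMemory], host_memory_gb: float) -> float:
    """Total configured VM memory divided by host physical memory.

    Ignores memory used by ESXi itself and per-VM overhead.
    """
    if host_memory_gb <= 0:
        raise ValueError("host_memory_gb must be positive")
    return sum(vm.configured_gb for vm in vms) / host_memory_gb


def reservations_fit(vms: List[VMMemory], host_memory_gb: float) -> bool:
    """Reserved memory must be backed by physical memory, so it has to fit."""
    return sum(vm.reservation_gb for vm in vms) <= host_memory_gb


if __name__ == "__main__":
    host_gb = 256  # hypothetical host
    vms = [VMMemory("sql01", 128, 128), VMMemory("app01", 64), VMMemory("web01", 32)]
    print(memory_overcommit_ratio(vms, host_gb))          # 0.875 (no overcommit)
    print(reservations_fit(vms, host_gb))                 # True
    print([vm.name for vm in vms if vm.fully_reserved])   # ['sql01']
```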

NUMA Considerations

NUMA (“non-uniform memory access”), mentioned above, should always be taken into consideration when building high-performance VMware VMs. In a NUMA system, each CPU is assigned its own local memory, which it can access with the best performance and lowest latency. Accessing remote memory that belongs to another CPU increases latency and can hurt performance.

As documented in great detail in the vSphere 6.5 Host Resources Deep Dive, failing to design a high-performance VMware VM, especially a “wide VM”, with NUMA in mind can greatly impact the performance of that VM. Because wide VMs are distributed across NUMA nodes, keeping the NUMA architecture in mind is essential when configuring them for high performance.
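
A minimal sketch of that NUMA check, assuming a simplified host model with identical NUMA nodes: a VM that needs more cores or more memory than one node provides has to span a second node, so some of its memory accesses will be remote. The `NumaHost` class and the function names are hypothetical, and memory used by ESXi on each node is ignored.

```python
import math
from dataclasses import dataclass


@dataclass
class NumaHost:
    nodes: int
    cores_per_node: int
    memory_gb_per_node: float


def nodes_needed(host: NumaHost, vcpus: int, memory_gb: float) -> int:
    """Physical NUMA nodes whose cores or memory a VM would need (simplified)."""
    by_cpu = math.ceil(vcpus / host.cores_per_node)
    by_memory = math.ceil(memory_gb / host.memory_gb_per_node)
    needed = max(by_cpu, by_memory)
    if needed > host.nodes:
        raise ValueError("VM is larger than the host")
    return needed


def is_wide_vm(host: NumaHost, vcpus: int, memory_gb: float) -> bool:
    """More than one node means the VM spans physical NUMA nodes."""
    return nodes_needed(host, vcpus, memory_gb) > 1


if __name__ == "__main__":
    # Hypothetical 2-node host: 12 cores and 128 GB per node.
    host = NumaHost(nodes=2, cores_per_node=12, memory_gb_per_node=128)
    print(nodes_needed(host, 8, 96))   # 1: CPU and memory stay local
    print(nodes_needed(host, 8, 192))  # 2: memory exceeds one node
    print(nodes_needed(host, 16, 64))  # 2: vCPUs exceed one node
```

Note that a VM can become wide on memory alone, which is why memory sizing deserves the same NUMA attention as vCPU sizing.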

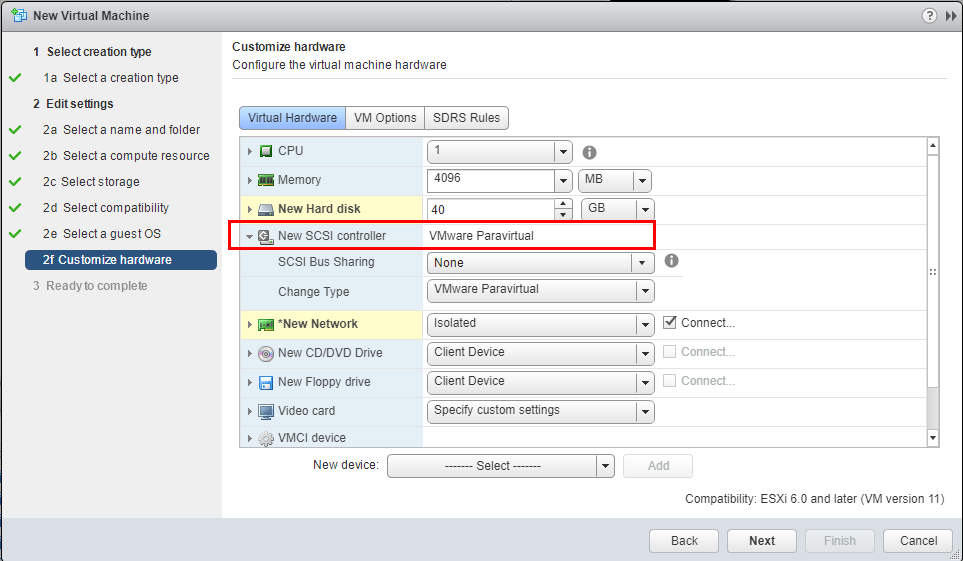

Use PVSCSI storage controllers in high performance VMware VMs

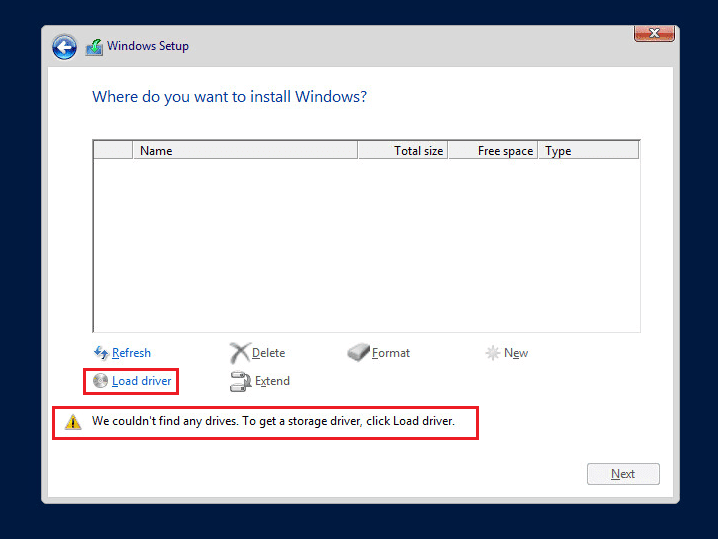

For many guest operating systems, VMware virtual machine hardware defaults to the “LSI Logic SAS” controller. This is an emulated controller for which most current operating systems include built-in drivers, so it is an easy-to-use storage controller for most applications. However, for creating the most performant VMs running in a vSphere environment, the Paravirtual SCSI (PVSCSI) controller has clear performance advantages.

PVSCSI is much more efficient than the LSI Logic SAS controller because it does not emulate physical hardware and therefore requires far fewer CPU cycles. When designing VMs with the highest performance and I/O requirements in mind, using the PVSCSI controller is the best-practice approach.

Additionally, using multiple storage controllers in the VM configuration multiplies the available queue depth, since each controller brings its own adapter queue. Assigning high-I/O volumes to separate controllers, up to one controller per volume, in high-performance virtual machines can yield very good results.
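
To illustrate the queue depth arithmetic, this sketch spreads volumes round-robin across up to four virtual SCSI controllers (the per-VM limit in vSphere) and multiplies the number of controllers in use by a per-adapter queue depth. The function names are hypothetical, and the queue depth in the example is an illustrative value only; check VMware’s documentation for the actual PVSCSI defaults and maximums for your version.

```python
from typing import Dict, List

MAX_SCSI_CONTROLLERS = 4  # vSphere allows up to four virtual SCSI controllers per VM


def assign_volumes(volumes: List[str], controllers: int = MAX_SCSI_CONTROLLERS) -> Dict[str, int]:
    """Map each volume to a controller number, round-robin.

    With no more volumes than controllers, every volume gets its own controller.
    """
    if not 1 <= controllers <= MAX_SCSI_CONTROLLERS:
        raise ValueError(f"controllers must be between 1 and {MAX_SCSI_CONTROLLERS}")
    return {volume: i % controllers for i, volume in enumerate(volumes)}


def aggregate_adapter_queue_depth(assignment: Dict[str, int], per_adapter_depth: int) -> int:
    """Each controller in use brings its own adapter queue."""
    return len(set(assignment.values())) * per_adapter_depth


if __name__ == "__main__":
    ADAPTER_DEPTH = 256  # illustrative value only, not an authoritative default
    layout = assign_volumes(["os", "data", "log", "tempdb"])
    print(layout)  # {'os': 0, 'data': 1, 'log': 2, 'tempdb': 3}
    print(aggregate_adapter_queue_depth(layout, ADAPTER_DEPTH))  # 1024
    single = assign_volumes(["os", "data", "log", "tempdb"], controllers=1)
    print(aggregate_adapter_queue_depth(single, ADAPTER_DEPTH))  # 256
```

The aggregate figure is only an upper bound at the adapter level; per-device queue depth and the underlying storage also cap outstanding I/O.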

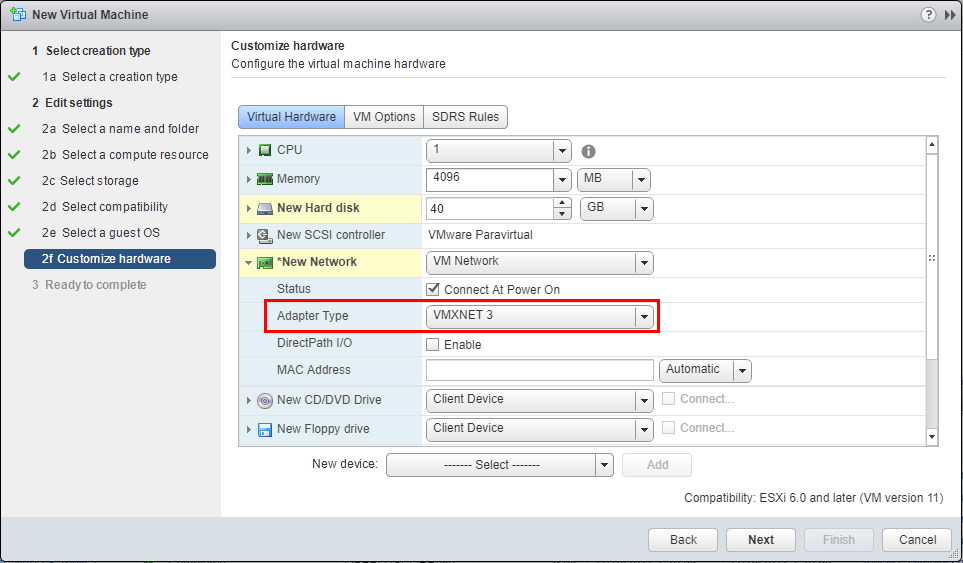

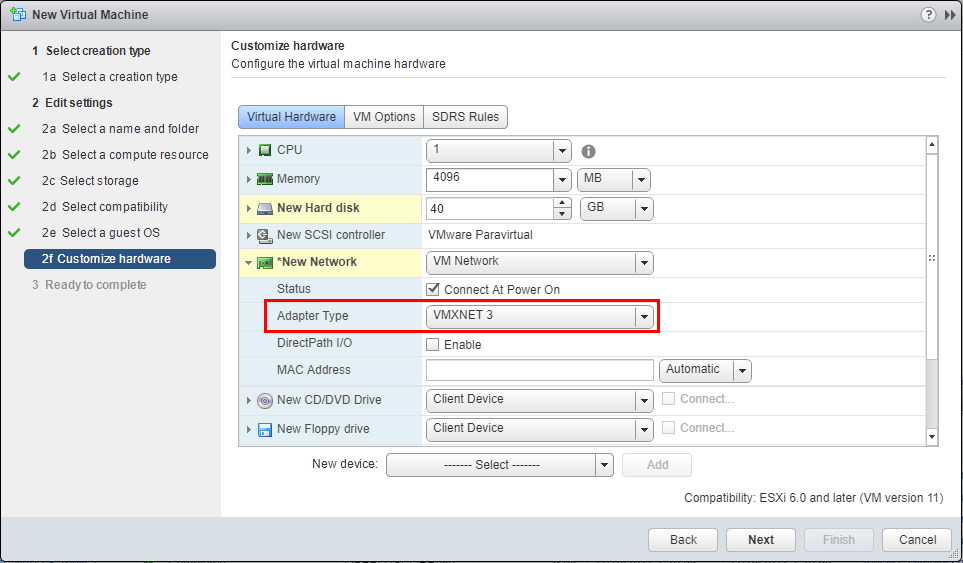

Use VMXNET3 paravirtualized NIC

Finally, using the VMXNET3 paravirtualized NIC yields many of the same performance benefits mentioned above by eliminating hardware emulation. The VMXNET3 adapter is a lightweight adapter built for virtualization; it does not emulate physical hardware such as the E1000 for connectivity. It also supports the fastest link speeds (for example, 10 Gbps), whereas emulated adapters are more limited.
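
One way to check the storage controller and NIC items on this checklist is to scan a VM’s .vmx file. This sketch assumes the `scsiN.virtualDev` and `ethernetN.virtualDev` keys and values such as `lsisas1068`, `pvscsi`, `e1000` and `vmxnet3` as they commonly appear in .vmx files; verify these against your own files. The function names are hypothetical, and the sketch only reads text and does not change the VM.

```python
import re
from typing import Dict, List

# Matches .vmx lines such as: scsi0.virtualDev = "pvscsi"
VMX_LINE = re.compile(r'^\s*([^=#\s]+)\s*=\s*"(.*)"\s*$')

# Assumed paravirtual device type strings; verify against your own .vmx files.
PREFERRED = {"scsi": "pvscsi", "ethernet": "vmxnet3"}


def parse_vmx(text: str) -> Dict[str, str]:
    """Parse key = "value" lines into a dict with lowercase keys."""
    settings = {}
    for line in text.splitlines():
        match = VMX_LINE.match(line)
        if match:
            settings[match.group(1).lower()] = match.group(2)
    return settings


def audit_virtual_devices(text: str) -> List[str]:
    """Warn about SCSI controllers and NICs that are not paravirtual."""
    warnings = []
    for key, value in sorted(parse_vmx(text).items()):
        device, _, attribute = key.partition(".")
        if attribute != "virtualdev":
            continue  # e.g. scsi0.present, scsi0:0.fileName
        kind = device.rstrip("0123456789")  # "scsi0" -> "scsi"
        preferred = PREFERRED.get(kind)
        if preferred and value.lower() != preferred:
            warnings.append(f"{device}: {value} (consider {preferred})")
    return warnings


if __name__ == "__main__":
    sample = """
scsi0.present = "TRUE"
scsi0.virtualDev = "lsisas1068"
scsi1.virtualDev = "pvscsi"
ethernet0.virtualDev = "e1000"
"""
    for warning in audit_virtual_devices(sample):
        print(warning)
```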

Thoughts

Using the latest VMware virtual hardware goes a long way in creating high-performance VMware VMs and helps ensure we have the most efficient and powerful setup to service resource-hungry applications. Understanding NUMA and how it affects VMs with a “wide” configuration that spans physical CPUs in the ESXi host is also an important consideration. Aligning the virtual CPU configuration closely with the physical CPU layout of the host is a good rule of thumb. Make sure you check out the Host Resources Deep Dive book from Frank Denneman and Niels Hagoort when researching and creating high-performance VMware VMs.