Lost access to volume due to connectivity issues

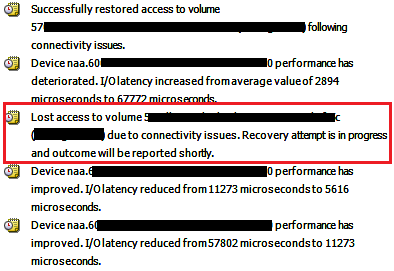

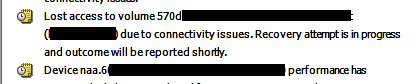

Recently, I ran into an issue with a host where performance would tank from the client side. VM consoles and RDP sessions would disconnect, and the host would become unresponsive to any commands issued from the vCenter console or the Web UI. When I looked into the logs, I found many “lost access to volume due to connectivity issues” messages. I want to detail a few of the findings on this particular host and where things stand now.

Lost access to volume due to connectivity issues

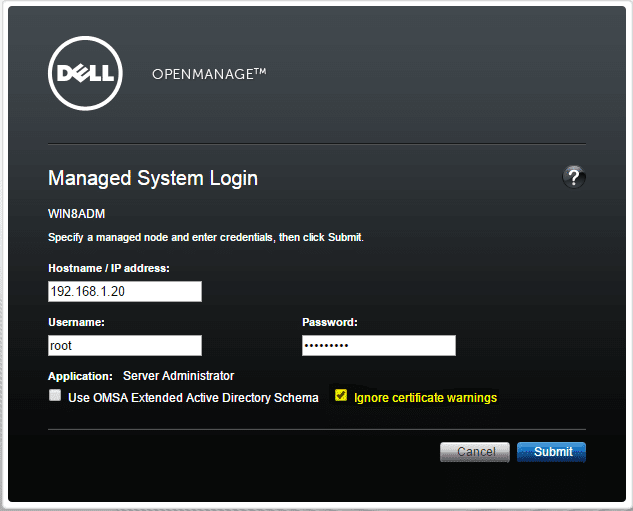

A few environmental notes about this particular host:

- It was running as a development environment

- It had tons of snapshots, as it was being abused for rinse-and-repeat operations

- It is a whitebox Supermicro server, so it had some pieced-together hardware for RAID, etc.

- It is running ESXi 6.0 U2 without any other patches

Things Tried

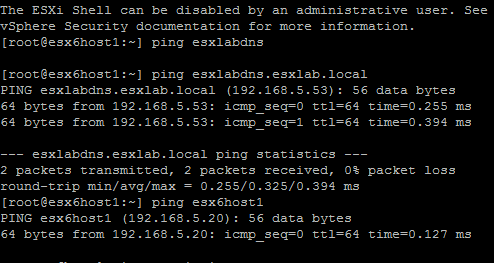

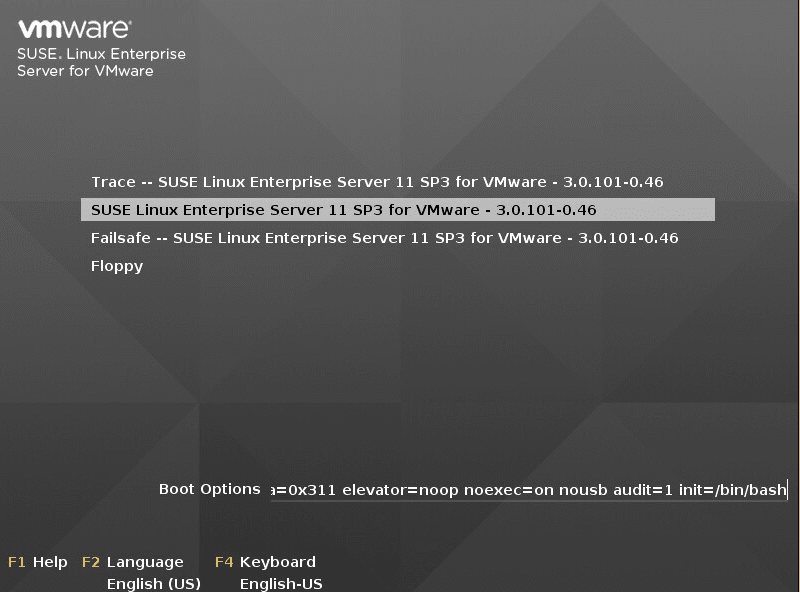

After some serious issues, the host finally nose-dived and became totally unresponsive, so I had to power slam the box. At this point, I drained the flea power and brought the host back up. Upgrading and updating had to wait this time, since there was no maintenance window; the only goal was getting things back up and running as quickly as possible.

The first thing I did was perform snapshot maintenance on the box. Between the reboot and the deletion of numerous snapshots, several of them large, the host started performing much better, and over the next couple of days I saw only one or two isolated “lost access to volume due to connectivity issues” messages in the events log.

However, this is still not normal. The host uses heartbeats to make sure the datastore is still present and healthy. Every 3 seconds, the heartbeat process writes to the datastore, and the host expects each write to finish within 8 seconds. If a write times out, another heartbeat operation is initiated. If the heartbeat operations don’t complete within a 16-second window, the datastore is marked offline and you will see the “lost access to volume due to connectivity issues” message in the event log.
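To make the timing above a bit more concrete, here is a minimal Python sketch that replays a list of heartbeat write latencies against the 3, 8, and 16 second values described above. It is a simplified model built only from those three numbers, not VMware’s actual implementation. The function name `time_of_lost_access` is hypothetical, and the assumption that a new heartbeat is issued the moment the previous one times out is mine.

```python
from typing import Optional, Sequence

# Timing values from the description above; the model around them is a simplification.
HEARTBEAT_INTERVAL_S = 3.0   # a heartbeat write is issued every 3 seconds
IO_TIMEOUT_S = 8.0           # each heartbeat write must finish within 8 seconds
LOST_ACCESS_WINDOW_S = 16.0  # no successful heartbeat for 16 seconds -> volume lost


def time_of_lost_access(latencies: Sequence[Optional[float]]) -> Optional[float]:
    """Replay heartbeat write latencies (seconds; None = the write never completes).

    Returns the time at which the volume would be marked lost, or None if the
    replayed sequence never leaves a 16-second gap between successful heartbeats.
    Hypothetical helper for illustration only.
    """
    last_success = 0.0  # time of the last completed heartbeat write
    issued_at = 0.0     # time the current heartbeat write is issued
    for latency in latencies:
        if latency is not None and latency <= IO_TIMEOUT_S:
            done = issued_at + latency
            if done - last_success > LOST_ACCESS_WINDOW_S:
                # The write finished, but only after the 16-second window expired.
                return last_success + LOST_ACCESS_WINDOW_S
            last_success = done
            # Next regular heartbeat: 3 seconds after this one was issued,
            # or as soon as this write finished if it took longer than that.
            issued_at = max(issued_at + HEARTBEAT_INTERVAL_S, done)
        else:
            # The write timed out after 8 seconds; another heartbeat is issued.
            issued_at += IO_TIMEOUT_S
            if issued_at - last_success >= LOST_ACCESS_WINDOW_S:
                return last_success + LOST_ACCESS_WINDOW_S
    return None


print(time_of_lost_access([0.01] * 10))       # healthy storage: prints None
print(time_of_lost_access([None, 0.5, 0.5]))  # one timeout, then recovery: prints None
print(time_of_lost_access([None, None]))      # two timeouts in a row: prints 16.0
```

The last case is the interesting one for this host: a single slow write is tolerated, but two heartbeat writes in a row that hang past the 8-second timeout are enough to hit the 16-second window.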

So, long story short, something is causing the datastore write operations to fail periodically. This could be a hardware issue or something else.

Other possible issues

There is a good VMware KB article describing issues with the VAAI ATS heartbeat, which is the newer heartbeat mechanism used with VMFS5 and is enabled by default.

If you see the following message in the vmkernel.log this KB may apply to you:

- ATS Miscompare detected beween test and set HB images at offset XXX on vol YYY

For this particular host, I didn’t find this message in the vmkernel.log, so I haven’t changed the ATS heartbeat setting yet.
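Checking for that message can be scripted. Below is a minimal Python sketch, assuming you have copied the host’s vmkernel.log somewhere Python can read it. The regular expression is built only from the message text quoted above (XXX and YYY stand in for the real offset and volume), so it may need adjusting to match the exact line format on your build. The function name `find_ats_miscompares` is hypothetical.

```python
import re
from typing import Iterable, List, Tuple

# Built from the message quoted above, e.g.
# "ATS Miscompare detected beween test and set HB images at offset XXX on vol YYY".
# ".*?" skips the middle of the message, so the exact spelling there does not matter.
ATS_MISCOMPARE = re.compile(r"ATS Miscompare detected.*?at offset (\S+) on vol (\S+)")


def find_ats_miscompares(lines: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (offset, volume) pairs for each ATS miscompare line found."""
    hits = []
    for line in lines:
        match = ATS_MISCOMPARE.search(line)
        if match:
            # Drop any quotes or trailing period wrapped around the volume name.
            hits.append((match.group(1), match.group(2).strip("'\".")))
    return hits


# Sample lines using the placeholder text from the message above.
sample = [
    "an unrelated log line",
    "ATS Miscompare detected beween test and set HB images at offset XXX on vol YYY",
]
print(find_ats_miscompares(sample))  # prints [('XXX', 'YYY')]
```

To scan a real log, pass an open file handle instead of the sample list, for example `find_ats_miscompares(open("vmkernel.log", errors="replace"))`; an empty list means no matching lines were found.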

Thoughts

So far, this is an open-ended issue that I am still dealing with. I will post any new findings on this “lost access to volume due to connectivity issues” problem here. Stay tuned!

Did you get anywhere with this issue? I’m seeing the same thing in a production DaaS environment, so you can imagine what the user experience is like. We’re using Compellents with iSCSI, and the vendors are at a loss.

Andy,

Sad to say, I am still dealing with this issue. I am working with the vendor on my end to come up with other possible causes. I have been replacing hardware along the way to rule things out and have made it through the RAID controller and some cabling; the backplane is next. I will definitely keep this post updated if I stumble onto anything.

We are running into a similar, very random issue. All of a sudden, the VMs on any datastore seen by the host experience very high disk write latency, which kills their performance. The host may then disconnect from vCenter. A few days ago, it happened a second time on a different host. This time (host running ESXi 6 build 4192238), it happened very early in the morning and eventually fixed itself within an hour. The first time (host running ESXi build 3634789), we restarted the management agents and things went back to normal. In this second case, though, we waited a few days and then attempted to restart the management agents; that hung, so we were forced to restart the host. We’ve reopened the case with VMware and hope to get an update today. I’ll respond back if they find anything relevant.

The affected storage is Fibre Channel-attached, and when it happens, the vmkernel.log file is flooded with “hdr status = FCPIO_OUT_OF_RESOURCE” errors. We’re also running in a Cisco UCS blade environment.

Travis,

I would be very interested to hear your findings as you work through the case. I have now seen the messages on another whitebox host as well. The odd thing in my case is that all of these hosts use local storage rather than shared storage. I will keep this post updated as well if I stumble onto anything relevant.

Thanks again, Travis!