One of the coolest parts of having a virtualization environment is that you can set up as many labs as you want, or at least as many as your hardware resources allow. In a current project, I am setting up a lab environment to mirror a production network that has multiple sites, and I was asked whether we could introduce latency that reflects what we actually see between sites. The objective: simulate latency in a virtualization test lab.

If you would like to know how to set up a test lab that mirrors your production network, all with the same IP addresses, check out the post “Create isolated test environment same ips and subnet with VMware”.

In that post, I used an Untangle box set up in a VM to route traffic between a “go between” VLAN and the same IP address scheme running on VMs “inside” the Untangle internal network. That is all fine and dandy, Brandon, you might say, but how do we simulate network latency in that type of environment?

Simulate latency virtualization test lab

An awesome little gem for simulating network latency is built into most Linux distributions and, by extension, into our Untangle VM distro as well. NetEM provides network emulation functionality for testing the properties of WAN connections. It can simulate delay, loss, duplication, and reordering, to name a few. It is configured with the tc command, which shows or manipulates traffic control settings. Using tc with a filter and the netem queueing discipline, we can select traffic by source or destination and introduce latency or loss into just those packet transmissions.
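As a rough illustration of the impairments listed above, the sketch below prints a few netem invocations rather than running them. The helper name `netem_cmd`, the interface `eth1`, and the percentages are placeholder assumptions for illustration, not values taken from this lab.

```shell
#!/bin/sh
# Hypothetical helper: prints a tc/netem command instead of running it,
# so each line can be reviewed before it is pasted at a root prompt.
netem_cmd() {
    dev="$1"; shift
    echo "tc qdisc add dev $dev root netem $*"
}

netem_cmd eth1 delay 100ms 10ms             # 100 ms delay with 10 ms of jitter
netem_cmd eth1 loss 1%                      # drop roughly 1% of packets
netem_cmd eth1 duplicate 1%                 # duplicate roughly 1% of packets
netem_cmd eth1 delay 10ms reorder 25% 50%   # reordering needs a delay to act on
```

Keep in mind that an interface holds only one root qdisc at a time, and a netem qdisc attached at the root affects all traffic leaving that interface, not just traffic between two sites.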

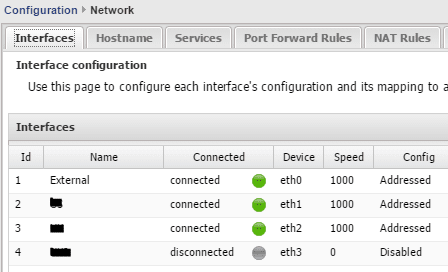

On the Untangle VM in the environment, I have 3 NICs that are being used in a particular lab situation, shown below:

As above, there is one connection used for external traffic (traffic connected to the lab VLAN). I then have two connections that sit on the same IP subnets as the two connected sites.

On eth1, the main site is connected via the IP range/subnet 172.16.60.0/23, and the second site is connected on eth2 via the IP range/subnet 192.168.80.0/24.

Using TC and NetEM on the

tc qdisc add dev eth1 root handle 1: htb

tc class add dev eth1 parent 1: classid 1:1 htb rate 1000mbit

tc filter add dev eth1 parent 1: protocol ip prio 1 u32 flowid 1:1 match ip src 192.168.80.0/24

tc qdisc add dev eth1 parent 1:1 handle 10: netem delay 100ms 10ms

Using the above lines, we build the tree. In general, a tc hierarchy is built in this order, although here the filter is added before the netem rule:

- Create the root tree

- Create the default root class

- Create children classes

- Apply rules on each class/pipe

- Apply filters
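The four commands can also be wrapped in a small script so that the interface, source subnet, and delay are easy to change. This is only a sketch: the variable names, the `run` and `build_tree` helpers, and the `DRY_RUN` switch are my own additions for illustration, and the defaults simply mirror the values used in this lab.

```shell
#!/bin/sh
# Sketch: the same tc tree as above, parameterized.
# Variable names, helper names, and DRY_RUN are illustrative additions.
DEV="${DEV:-eth1}"               # interface the rules are attached to
SRC="${SRC:-192.168.80.0/24}"    # source subnet to delay
DELAY="${DELAY:-100ms}"
JITTER="${JITTER:-10ms}"
DRY_RUN="${DRY_RUN:-1}"          # 1 = only print; set to 0 and run as root to apply

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

build_tree() {
    # Root HTB qdisc, then a single class to hang the netem rule on
    run tc qdisc add dev "$DEV" root handle 1: htb
    run tc class add dev "$DEV" parent 1: classid 1:1 htb rate 1000mbit
    # Send packets from the source subnet into class 1:1
    run tc filter add dev "$DEV" parent 1: protocol ip prio 1 u32 flowid 1:1 match ip src "$SRC"
    # Delay everything that lands in class 1:1
    run tc qdisc add dev "$DEV" parent 1:1 handle 10: netem delay "$DELAY" "$JITTER"
}

build_tree
```

With the defaults, the script only prints the four commands, which makes it easy to compare them against the lines above before applying anything.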

Above, we match on a source address in the subnet behind eth2, but the rule is applied on eth1. Don’t let this confuse you. I first tried to classify the traffic as it left outbound, but was never able to get it classed correctly. So you have to think about it from a reverse angle: traffic from 192.168.80.0/24 that is headed to the main site leaves the Untangle box through eth1, and that is where the delay is applied.

After entering the commands above at a root prompt on the Untangle box, ping times went from <1 ms to 100 ms, plus or minus 10 ms.
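To check the result without eyeballing each reply, the average round-trip time can be pulled from ping's summary line. This is a minimal sketch that assumes the iputils summary format (`rtt min/avg/max/mdev = ...`); the function name `avg_rtt` is hypothetical, and other ping implementations print a different summary line.

```shell
#!/bin/sh
# Hypothetical helper: read ping output on stdin and print the average RTT in ms.
# Assumes the iputils summary line "rtt min/avg/max/mdev = a/b/c/d ms".
avg_rtt() {
    # Splitting on "/" puts the average value in the fifth field
    awk -F'/' '/min\/avg\/max/ { print $5 }'
}

# Example use, with <host> standing in for an address in the remote subnet:
#   ping -c 10 <host> | avg_rtt
```

Running it once before and once after adding the tc rules gives a quick before-and-after comparison.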

If you want to get rid of your tc rules, simply enter the command below, replacing the interface with the one your rules are applied to. Deleting the root qdisc also removes the classes, filters, and netem rule attached to it:

tc qdisc del dev eth1 root

Other useful commands

The command below lists the current rules for an interface:

tc -s qdisc ls dev eth0

Show statistics (ls and show are interchangeable, and the -s flag adds the counters):

tc -s qdisc show dev eth2

Final Thoughts

If you are looking for a great way to simulate latency between sites in your test lab environment, take a look at netem and the tc command in Linux. As you can see above, with the Untangle box and the few commands we entered, we were able to introduce effective latency “in the box,” so to speak, in communications between subnets.
